Peter Eastman

On the design space between molecular mechanics and machine learning force fields

Sep 03, 2024

Abstract:A force field as accurate as quantum mechanics (QM) and as fast as molecular mechanics (MM), with which one can simulate a biomolecular system efficiently enough and meaningfully enough to get quantitative insights, is among the most ardent dreams of biophysicists -- a dream, nevertheless, not to be fulfilled any time soon. Machine learning force fields (MLFFs) represent a meaningful endeavor towards this direction, where differentiable neural functions are parametrized to fit ab initio energies, and furthermore forces through automatic differentiation. We argue that, as of now, the utility of the MLFF models is no longer bottlenecked by accuracy but primarily by their speed (as well as stability and generalizability), as many recent variants, on limited chemical spaces, have long surpassed the chemical accuracy of $1$ kcal/mol -- the empirical threshold beyond which realistic chemical predictions are possible -- though they remain orders of magnitude slower than MM. Hoping to kindle explorations and designs of faster, albeit perhaps slightly less accurate MLFFs, in this review, we focus our attention on the design space (the speed-accuracy tradeoff) between MM and ML force fields. After a brief review of the building blocks of force fields of either kind, we discuss the desired properties and challenges now faced by the force field development community, survey the efforts to make MM force fields more accurate and ML force fields faster, and envision what the next generation of MLFF might look like.

Nutmeg and SPICE: Models and Data for Biomolecular Machine Learning

Jun 18, 2024

Abstract:We describe version 2 of the SPICE dataset, a collection of quantum chemistry calculations for training machine learning potentials. It expands on the original dataset by adding much more sampling of chemical space and more data on non-covalent interactions. We train a set of potential energy functions called Nutmeg on it. They use a novel mechanism to improve performance on charged and polar molecules, injecting precomputed partial charges into the model to provide a reference for the large scale charge distribution. Evaluation of the new models shows they do an excellent job of reproducing energy differences between conformations, even on highly charged molecules or ones that are significantly larger than the molecules in the training set. They also produce stable molecular dynamics trajectories, and are fast enough to be useful for routine simulation of small molecules.

TorchMD-Net 2.0: Fast Neural Network Potentials for Molecular Simulations

Feb 27, 2024

Abstract:Achieving a balance between computational speed, prediction accuracy, and universal applicability in molecular simulations has been a persistent challenge. This paper presents substantial advancements in the TorchMD-Net software, a pivotal step forward in the shift from conventional force fields to neural network-based potentials. The evolution of TorchMD-Net into a more comprehensive and versatile framework is highlighted, incorporating cutting-edge architectures such as TensorNet. This transformation is achieved through a modular design approach, encouraging customized applications within the scientific community. The most notable enhancement is a significant improvement in computational efficiency, achieving a very remarkable acceleration in the computation of energy and forces for TensorNet models, with performance gains ranging from 2-fold to 10-fold over previous iterations. Other enhancements include highly optimized neighbor search algorithms that support periodic boundary conditions and the smooth integration with existing molecular dynamics frameworks. Additionally, the updated version introduces the capability to integrate physical priors, further enriching its application spectrum and utility in research. The software is available at https://github.com/torchmd/torchmd-net.

OpenMM 8: Molecular Dynamics Simulation with Machine Learning Potentials

Oct 04, 2023

Abstract:Machine learning plays an important and growing role in molecular simulation. The newest version of the OpenMM molecular dynamics toolkit introduces new features to support the use of machine learning potentials. Arbitrary PyTorch models can be added to a simulation and used to compute forces and energy. A higher-level interface allows users to easily model their molecules of interest with general purpose, pretrained potential functions. A collection of optimized CUDA kernels and custom PyTorch operations greatly improves the speed of simulations. We demonstrate these features on simulations of cyclin-dependent kinase 8 (CDK8) and the green fluorescent protein (GFP) chromophore in water. Taken together, these features make it practical to use machine learning to improve the accuracy of simulations at only a modest increase in cost.

SPICE, A Dataset of Drug-like Molecules and Peptides for Training Machine Learning Potentials

Sep 21, 2022

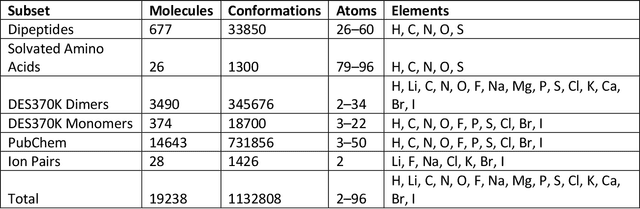

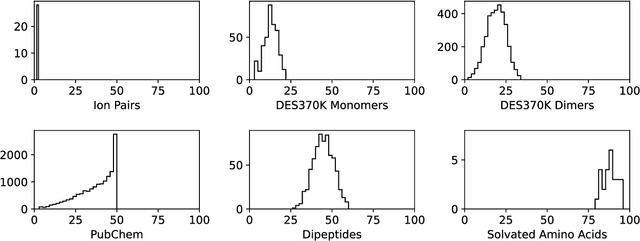

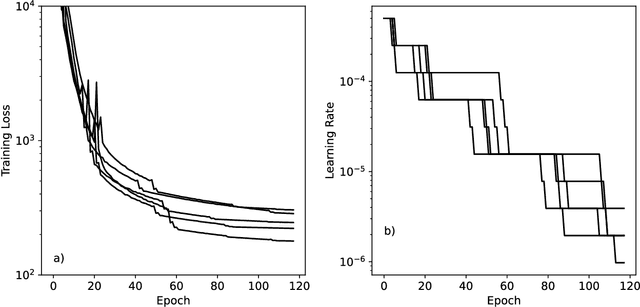

Abstract:Machine learning potentials are an important tool for molecular simulation, but their development is held back by a shortage of high quality datasets to train them on. We describe the SPICE dataset, a new quantum chemistry dataset for training potentials relevant to simulating drug-like small molecules interacting with proteins. It contains over 1.1 million conformations for a diverse set of small molecules, dimers, dipeptides, and solvated amino acids. It includes 15 elements, charged and uncharged molecules, and a wide range of covalent and non-covalent interactions. It provides both forces and energies calculated at the $\omega$B97M-D3(BJ)/def2-TZVPPD level of theory, along with other useful quantities such as multipole moments and bond orders. We train a set of machine learning potentials on it and demonstrate that they can achieve chemical accuracy across a broad region of chemical space. It can serve as a valuable resource for the creation of transferable, ready to use potential functions for use in molecular simulations.

NNP/MM: Fast molecular dynamics simulations with machine learning potentials and molecular mechanics

Jan 20, 2022

Abstract:Parametric and non-parametric machine learning potentials have emerged recently as a way to improve the accuracy of bio-molecular simulations. Here, we present NNP/MM, a hybrid method integrating neural network potentials (NNPs) and molecular mechanics (MM). It allows part of a molecular system to be simulated with NNP, while the rest is simulated with MM for efficiency. The method is currently available in ACEMD using OpenMM plugins to optimize the performance of NNPs. The achieved performance is slower than, but comparable to, state-of-the-art GPU-accelerated MM simulations. We validated NNP/MM by performing MD simulations of four protein-ligand complexes, where NNP is used for the intra-molecular interactions of a ligand and MM for the remaining interactions. This shows that NNP can already replace MM for small molecules in protein-ligand simulations. The combined sampling of each complex is 1 microsecond, making these the longest NNP/MM simulations ever reported. Finally, we have made the setup of the NNP/MM simulations simple and user-friendly.

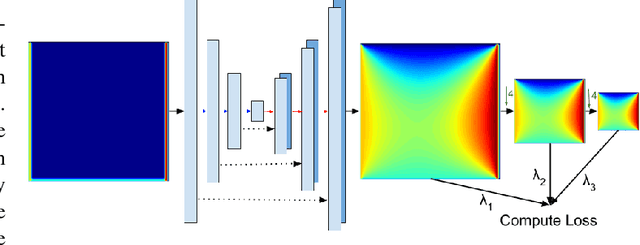

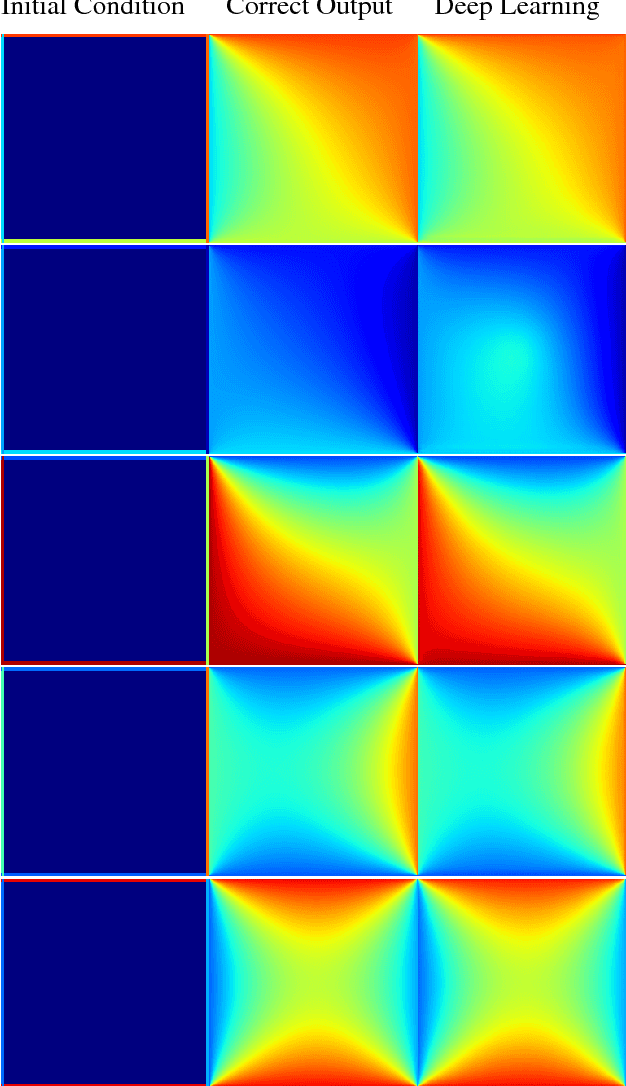

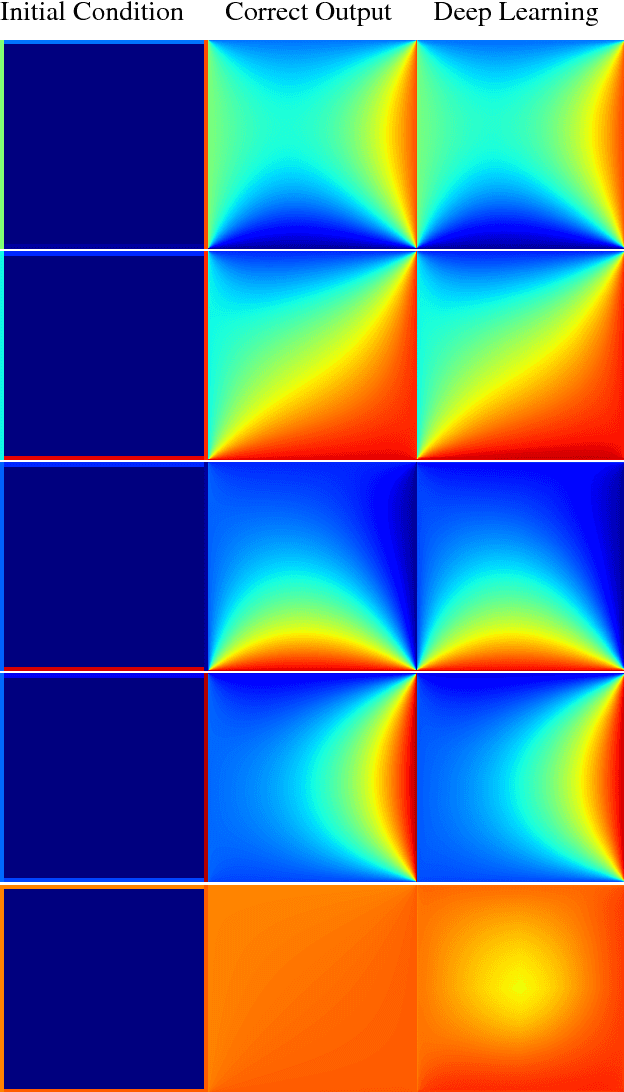

Weakly-Supervised Deep Learning of Heat Transport via Physics Informed Loss

Aug 21, 2018

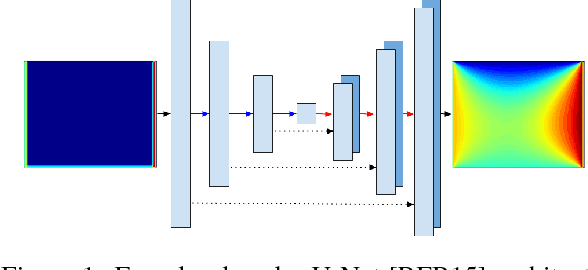

Abstract:In typical machine learning tasks and applications, it is necessary to obtain or create large labeled datasets in order to achieve high performance. Unfortunately, large labeled datasets are not always available and can be expensive to source, creating a bottleneck towards more widely applicable machine learning. The paradigm of weak supervision offers an alternative that allows for integration of domain-specific knowledge by enforcing constraints that a correct solution to the learning problem will obey over the output space. In this work, we explore the application of this paradigm to 2-D physical systems governed by non-linear differential equations. We demonstrate that knowledge of the partial differential equations governing a system can be encoded into the loss function of a neural network via an appropriately chosen convolutional kernel. We demonstrate this by showing that the steady-state solution to the 2-D heat equation can be learned directly from initial conditions by a convolutional neural network, in the absence of labeled training data. We also extend recent work in the progressive growing of fully convolutional networks to achieve high accuracy (< 1.5% error) at multiple scales of the heat-flow problem, including at the very large scale (1024x1024). Finally, we demonstrate that this method can be used to speed up exact calculation of the solution to the differential equations via finite difference.
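
The idea of encoding a differential equation into a loss through a convolutional kernel can be illustrated with a small NumPy sketch. This is not the paper's implementation: it assumes the standard 5-point finite-difference Laplacian as the kernel, uses a plain grid instead of a neural network output, and all function names (`laplacian_residual`, `physics_loss`, `jacobi_step`, `make_initial_grid`) are hypothetical. The loss is the mean squared residual of Laplace's equation on the interior of the grid, so it needs no labeled solution.

```python
import numpy as np

# 5-point finite-difference Laplacian stencil (an assumed choice of kernel).
LAPLACIAN = np.array([[0.0, 1.0, 0.0],
                      [1.0, -4.0, 1.0],
                      [0.0, 1.0, 0.0]])


def laplacian_residual(u):
    """Apply the stencil at every interior grid point ("valid" convolution)."""
    h, w = u.shape
    out = np.zeros((h - 2, w - 2))
    for di in range(3):
        for dj in range(3):
            out += LAPLACIAN[di, dj] * u[di:di + h - 2, dj:dj + w - 2]
    return out


def physics_loss(u):
    """Mean squared residual of Laplace's equation; requires no labels."""
    return float(np.mean(laplacian_residual(u) ** 2))


def jacobi_step(u):
    """One finite-difference relaxation sweep; boundary values stay fixed."""
    new = u.copy()
    new[1:-1, 1:-1] = 0.25 * (u[:-2, 1:-1] + u[2:, 1:-1]
                              + u[1:-1, :-2] + u[1:-1, 2:])
    return new


def make_initial_grid(n=16, hot=1.0):
    """Square plate with one hot edge and a cold interior (illustrative)."""
    u = np.zeros((n, n))
    u[0, :] = hot
    return u


if __name__ == "__main__":
    u = make_initial_grid()
    print("loss before relaxation:", physics_loss(u))
    for _ in range(500):
        u = jacobi_step(u)
    print("loss after relaxation: ", physics_loss(u))
```

In a weakly supervised setting, a network would map the boundary conditions to a predicted field and be trained to minimize a loss of this kind; here the Jacobi sweeps only stand in for that optimization to show that the loss approaches zero as the field approaches the steady state.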
