John E. Herr

SPICE, A Dataset of Drug-like Molecules and Peptides for Training Machine Learning Potentials

Sep 21, 2022

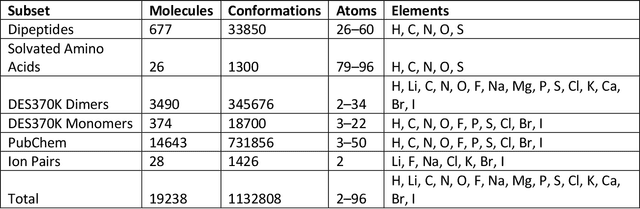

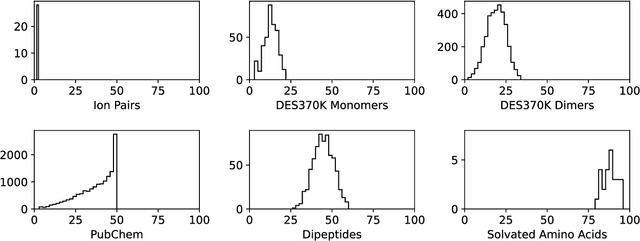

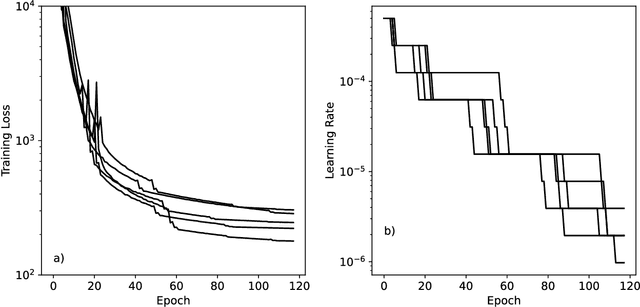

Abstract: Machine learning potentials are an important tool for molecular simulation, but their development is held back by a shortage of high-quality datasets to train them on. We describe the SPICE dataset, a new quantum chemistry dataset for training potentials relevant to simulating drug-like small molecules interacting with proteins. It contains over 1.1 million conformations for a diverse set of small molecules, dimers, dipeptides, and solvated amino acids. It includes 15 elements, charged and uncharged molecules, and a wide range of covalent and non-covalent interactions. It provides both forces and energies calculated at the ωB97M-D3(BJ)/def2-TZVPPD level of theory, along with other useful quantities such as multipole moments and bond orders. We train a set of machine learning potentials on it and demonstrate that they can achieve chemical accuracy across a broad region of chemical space. The dataset can serve as a valuable resource for the creation of transferable, ready-to-use potential functions for molecular simulations.

Compressing physical properties of atomic species for improving predictive chemistry

Oct 31, 2018

Abstract: The answers to many unsolved problems lie in the intractable chemical space of molecules and materials. Machine learning techniques are rapidly growing in popularity as a way to compress and explore chemical space efficiently. One of the most important aspects of machine learning techniques is representation through the feature vector, which should contain the descriptors most necessary for accurate predictions, not least of which is the atomic species in the molecule or material. In this work we introduce a compressed representation of physical properties for atomic species, which we call the elemental modes. The elemental modes provide an excellent representation by capturing many of the nuances of the periodic table and the similarity of atomic species. We apply the elemental modes to several different machine learning tasks and show that they bring improvements beyond simply achieving higher-accuracy predictions.

Metadynamics for Training Neural Network Model Chemistries: a Competitive Assessment

Dec 19, 2017

Abstract: Neural network (NN) model chemistries (MCs) promise to facilitate the accurate exploration of chemical space and simulation of large reactive systems. One important path to improving these models is to add layers of physical detail, especially long-range forces. At short range, however, these models are data driven and data limited. Little is systematically known about how data should be sampled, and "test data" chosen randomly from some sampling techniques can provide poor information about generality. If the sampling method is narrow, "test error" can appear encouragingly tiny while the model fails catastrophically elsewhere. In this manuscript we competitively evaluate two common sampling methods, molecular dynamics (MD) and normal-mode sampling (NMS), and one uncommon alternative, metadynamics (MetaMD), for preparing training geometries. We show that MD is an inefficient sampling method in the sense that additional samples do not improve generality. We also show that MetaMD is easily implemented in any NNMC software package, with a cost that scales linearly with the number of atoms in a sample molecule. MetaMD is a black-box way to ensure samples always reach out to new regions of chemical space while remaining relevant to chemistry near $k_BT$. It is one cheap tool to address the issue of generalization.
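The idea behind metadynamics-style sampling can be illustrated with a minimal, generic sketch. This is not the paper's MetaMD implementation: it assumes a single one-dimensional coordinate `x`, and the function names and the `height` and `width` parameters are hypothetical choices for illustration. Gaussian bumps are deposited at previously visited positions, and the resulting bias force pushes the system away from regions it has already sampled.

```python
import math

def bias_energy(x, centers, height=0.5, width=0.3):
    """Bias potential: a sum of Gaussian bumps centered on visited positions.

    `height` and `width` are illustrative values, not taken from the paper.
    """
    return sum(
        height * math.exp(-((x - c) ** 2) / (2.0 * width ** 2))
        for c in centers
    )

def bias_force(x, centers, height=0.5, width=0.3):
    """Force from the bias, i.e. minus the derivative of bias_energy.

    The sign is such that the force points away from each bump center.
    """
    return sum(
        height * (x - c) / width ** 2
        * math.exp(-((x - c) ** 2) / (2.0 * width ** 2))
        for c in centers
    )

# Usage: one bump at the origin repels a walker sitting slightly to its right.
visited = [0.0]
print(bias_energy(0.1, visited), bias_force(0.1, visited))
```

In a sampling loop, the bias force would be added to the physical force at every step and the current position appended to `visited` at a fixed interval, so that the walker is steadily driven toward geometries it has not yet seen.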

The Many-Body Expansion Combined with Neural Networks

Sep 22, 2016

Abstract: Fragmentation methods such as the many-body expansion (MBE) are a common strategy to model large systems by partitioning energies into a hierarchy of decreasingly significant contributions. The number of fragments required for chemical accuracy is still prohibitively expensive for ab-initio MBE to compete with force field approximations for applications beyond single-point energies. Alongside the MBE, empirical models of ab-initio potential energy surfaces have improved, especially non-linear models based on neural networks (NNs), which can reproduce ab-initio potential energy surfaces rapidly and accurately. Although they are fast, NNs suffer from their own curse of dimensionality; they must be trained on a representative sample of chemical space. In this paper we examine the synergy of the MBE and NNs, and explore their complementarity. The MBE offers a systematic way to treat systems of arbitrary size and intelligently sample chemical space. NNs reduce, by a factor in excess of $10^6$, the computational overhead of the MBE and reproduce the accuracy of ab-initio calculations without specialized force fields. We show they are remarkably general, providing comparable accuracy with drastically different chemical embeddings. To assess this, we test a new chemical embedding which can be inverted to predict molecules with desired properties.
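For reference, the standard form of the many-body expansion writes the total energy of a system of fragments as a sum of one-body energies plus successively smaller corrections. The notation below ($E_i$, $E_{ij}$, $\Delta E_{ij}$) is the conventional textbook one and is assumed here rather than taken from the paper:

$$
E = \sum_{i} E_i + \sum_{i<j} \Delta E_{ij} + \sum_{i<j<k} \Delta E_{ijk} + \cdots
$$

$$
\Delta E_{ij} = E_{ij} - E_i - E_j, \qquad
\Delta E_{ijk} = E_{ijk} - \Delta E_{ij} - \Delta E_{ik} - \Delta E_{jk} - E_i - E_j - E_k
$$

Here $E_{ij}$ and $E_{ijk}$ are the energies of the isolated dimer and trimer. Truncating the series at low order is what makes the approach tractable, and each retained term is a small-fragment energy of the kind a neural network could plausibly be trained to predict.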
