Peizhen Yang

Perturbing a Neural Network to Infer Effective Connectivity: Evidence from Synthetic EEG Data

Jul 19, 2023

Abstract: Identifying causal relationships among distinct brain areas, known as effective connectivity, holds key insights into the brain's information processing and cognitive functions. Electroencephalogram (EEG) signals exhibit intricate dynamics and inter-areal interactions within the brain. However, methods for characterizing nonlinear causal interactions among multiple brain regions remain relatively underdeveloped. In this study, we proposed a data-driven framework to infer effective connectivity by perturbing trained neural networks. Specifically, we trained neural networks (i.e., CNN, vanilla RNN, GRU, LSTM, and Transformer) to predict future EEG signals from historical data, and perturbed the networks' input to obtain the effective connectivity (EC) between the perturbed EEG channel and the remaining channels. The EC reflects the causal impact of perturbing one node on the others. Performance was tested on synthetic EEG generated by a biologically plausible Jansen-Rit model. CNN and Transformer obtained the best performance on both 3-channel and 90-channel synthetic EEG data, outperforming the classical Granger causality method. Our work demonstrated the potential of perturbing an artificial neural network, trained to predict future system dynamics, to uncover the underlying causal structure.
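
The perturbation idea in the abstract above can be illustrated with a minimal sketch. It is not the authors' implementation: the trained network is replaced by a hypothetical fixed linear predictor (`toy_predict` with a made-up `WEIGHTS` matrix), and the names `perturbation_ec` and `delta` are assumptions introduced here. The sketch nudges one input channel at a time and records how much each predicted channel changes.

```python
from typing import Callable, List

Vector = List[float]


def perturbation_ec(
    predict: Callable[[Vector], Vector], x: Vector, delta: float = 1e-3
) -> List[List[float]]:
    """Return ec where ec[i][j] is the change in predicted channel i
    per unit perturbation applied to input channel j."""
    baseline = predict(x)
    ec = [[0.0] * len(x) for _ in range(len(baseline))]
    for j in range(len(x)):
        perturbed = list(x)
        perturbed[j] += delta  # perturb a single input channel
        response = predict(perturbed)
        for i in range(len(baseline)):
            ec[i][j] = (response[i] - baseline[i]) / delta
    return ec


# Hypothetical stand-in for a trained predictor (an assumption, not from
# the paper): channel 0 drives channel 1, and channel 1 drives channel 2.
WEIGHTS = [
    [0.5, 0.0, 0.0],
    [0.8, 0.5, 0.0],
    [0.0, 0.6, 0.5],
]


def toy_predict(x: Vector) -> Vector:
    return [sum(w * v for w, v in zip(row, x)) for row in WEIGHTS]


ec = perturbation_ec(toy_predict, [0.1, -0.2, 0.3])
```

For this linear stand-in, the recovered matrix matches `WEIGHTS` up to floating-point error, so the nonzero off-diagonal entries mark the directed influences. With a nonlinear network the response would depend on the input state and on `delta`, so one would presumably average over many inputs.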

Exploiting Neighborhood Structural Features for Change Detection

Feb 10, 2023

Abstract: In this letter, a novel method for change detection is proposed using neighborhood structure correlation. Because structure features are insensitive to the intensity differences between bi-temporal images, we perform the correlation analysis on structure features rather than intensity information. First, we extract the structure feature maps by using multi-oriented gradient information. Then, the structure feature maps are used to obtain the Neighborhood Structural Correlation Image (NSCI), which represents the context structure information. In addition, we introduce a measure named matching error, which can be used to improve neighborhood information. Subsequently, a change detection model based on the random forest is constructed. The NSCI feature and matching error are used as the model inputs for training and prediction. Finally, decision-tree voting is used to produce the change detection result. To evaluate the performance of the proposed method, it was compared with three state-of-the-art change detection methods. The experimental results on two datasets demonstrated the effectiveness and robustness of the proposed method.

FedGS: Federated Graph-based Sampling with Arbitrary Client Availability

Dec 07, 2022

Abstract: While federated learning has shown strong results in optimizing a machine learning model without direct access to the original data, its performance may be hindered by intermittent client availability, which slows down convergence and biases the final learned model. Achieving both stable and bias-free training under arbitrary client availability poses significant challenges. To address these challenges, we propose a framework named Federated Graph-based Sampling (FedGS), which simultaneously stabilizes the global model update and mitigates the long-term bias under arbitrary client availability. First, we model the data correlations of clients with a Data-Distribution-Dependency Graph (3DG) that helps keep the sampled clients' data apart from each other, which is theoretically shown to improve the approximation to the optimal model update. Second, constrained by the far distance in data distribution of the sampled clients, we further minimize the variance of the number of times that the clients are sampled, to mitigate long-term bias. To validate the effectiveness of FedGS, we conduct experiments on three datasets under a comprehensive set of seven client availability modes. Our experimental results confirm FedGS's advantage in both enabling a fair client-sampling scheme and improving the model performance under arbitrary client availability. Our code is available at https://github.com/WwZzz/FedGS.
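
The two goals named in the abstract above (sampled clients far apart in data distribution, and balanced sampling counts) can be illustrated with a rough sketch. This is not the FedGS algorithm: the greedy score, the `alpha` trade-off weight, the function name `sample_clients`, and the toy `distance` matrix are all assumptions made for illustration, and the actual method is defined in the paper and the linked repository.

```python
from typing import List, Sequence, Set


def sample_clients(
    available: Set[int],
    counts: List[int],
    distance: Sequence[Sequence[float]],
    k: int,
    alpha: float = 1.0,
) -> List[int]:
    """Greedily pick up to k available clients, preferring clients that are
    far from those already picked and that have been sampled less often."""
    selected: List[int] = []
    candidates = set(available)
    while candidates and len(selected) < k:

        def score(c: int) -> float:
            # Distance to the closest already selected client (0 if none yet).
            spread = min((distance[c][s] for s in selected), default=0.0)
            return spread - alpha * counts[c]

        # sorted() makes tie-breaking deterministic (lowest index wins).
        best = max(sorted(candidates), key=score)
        selected.append(best)
        candidates.remove(best)
    for c in selected:
        counts[c] += 1  # track how often each client has been sampled
    return selected


# Hypothetical pairwise data-distribution distances between 4 clients.
distance = [
    [0.0, 0.1, 0.9, 0.5],
    [0.1, 0.0, 0.8, 0.5],
    [0.9, 0.8, 0.0, 0.5],
    [0.5, 0.5, 0.5, 0.0],
]
counts = [0, 0, 0, 0]
round_1 = sample_clients({0, 1, 2}, counts, distance, k=2)
round_2 = sample_clients({0, 1, 2}, counts, distance, k=2)
```

In this toy run, client 3 is never available and is therefore never sampled. In the second round the count penalty steers the first pick toward client 1, which was skipped in the first round.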

ConTIG: Continuous Representation Learning on Temporal Interaction Graphs

Sep 27, 2021

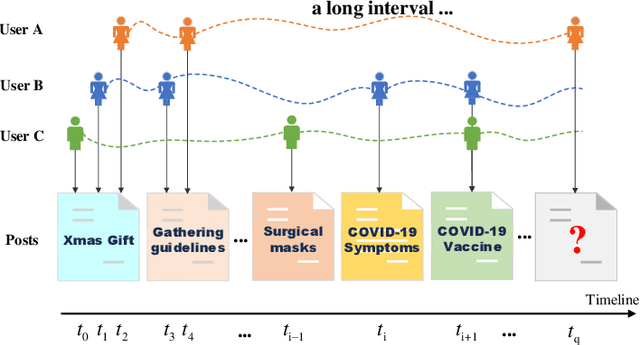

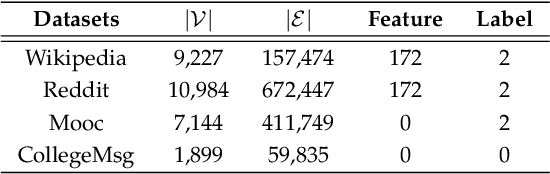

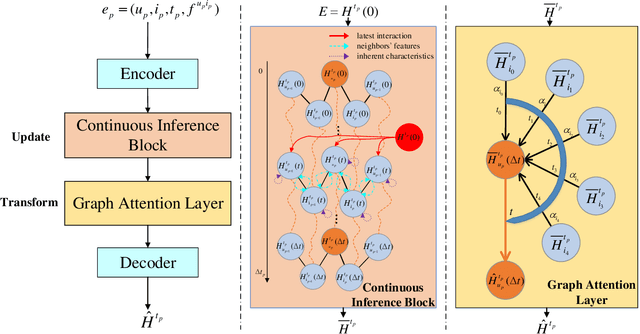

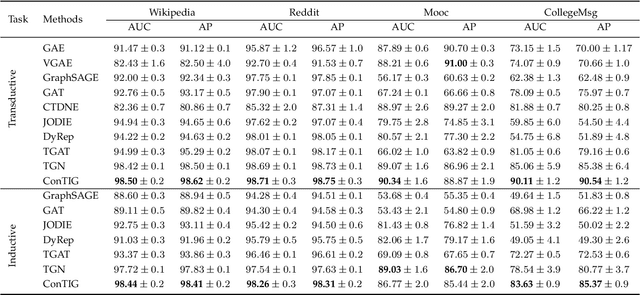

Abstract: Representation learning on temporal interaction graphs (TIG) aims to model complex networks with the dynamic evolution of interactions that arise in a broad spectrum of problems. Existing dynamic embedding methods on TIG update node embeddings discretely, only when an interaction occurs. They fail to capture the continuous dynamic evolution of the embedding trajectories of nodes. In this paper, we propose a two-module framework named ConTIG, a continuous representation method that captures the continuous dynamic evolution of node embedding trajectories. With two essential modules, our model exploits three factors in dynamic networks: the latest interaction, neighbor features, and inherent characteristics. In the first module, the update module, we employ a continuous inference block to learn the nodes' state trajectories from time-adjacent interaction patterns between node pairs using ordinary differential equations. In the second module, the transform module, we introduce a self-attention mechanism to predict future node embeddings by aggregating historical temporal interaction information. Experimental results demonstrate the superiority of ConTIG on temporal link prediction, temporal node recommendation, and dynamic node classification tasks compared with a range of state-of-the-art baselines, especially for predicting long-interval interactions.
