Bai Zhu

Exploiting Neighborhood Structural Features for Change Detection

Feb 10, 2023

Abstract: In this letter, a novel method for change detection is proposed using neighborhood structure correlation. Because structure features are insensitive to the intensity differences between bi-temporal images, we perform the correlation analysis on structure features rather than intensity information. First, we extract the structure feature maps using multi-oriented gradient information. Then, the structure feature maps are used to obtain the Neighborhood Structural Correlation Image (NSCI), which represents the contextual structure information. In addition, we introduce a measure named matching error, which can be used to improve neighborhood information. Subsequently, a change detection model based on the random forest is constructed. The NSCI feature and matching error are used as the model inputs for training and prediction. Finally, decision tree voting is used to produce the change detection result. To evaluate the performance of the proposed method, it was compared with three state-of-the-art change detection methods. The experimental results on two datasets demonstrated the effectiveness and robustness of the proposed method.
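The idea of correlating structure features rather than intensities can be illustrated with a minimal sketch. It is not the authors' NSCI: a plain gradient magnitude stands in for the multi-oriented gradient features, the window radius is an arbitrary assumption, and the matching error and random forest stages are omitted. The function names `structure_map` and `neighborhood_correlation` are hypothetical.

```python
import numpy as np

def structure_map(img):
    """Gradient magnitude, used here as a simple stand-in for a structure feature map."""
    gy, gx = np.gradient(img.astype(float))
    return np.hypot(gx, gy)

def neighborhood_correlation(f1, f2, radius=2):
    """Pearson correlation of two feature maps inside a sliding square window."""
    h, w = f1.shape
    out = np.zeros((h, w))
    for r in range(radius, h - radius):
        for c in range(radius, w - radius):
            a = f1[r - radius:r + radius + 1, c - radius:c + radius + 1].ravel()
            b = f2[r - radius:r + radius + 1, c - radius:c + radius + 1].ravel()
            a = a - a.mean()
            b = b - b.mean()
            denom = np.sqrt((a * a).sum() * (b * b).sum())
            out[r, c] = (a * b).sum() / denom if denom > 1e-12 else 0.0
    return out

rng = np.random.default_rng(0)
before = rng.random((32, 32))
after = 3.0 * before + 10.0                          # same scene, different radiometry
after[8:16, 8:16] = 3.0 * rng.random((8, 8)) + 10.0  # simulated change

corr = neighborhood_correlation(structure_map(before), structure_map(after))
unchanged_mean = corr[22:28, 22:28].mean()  # window far from the simulated change
changed_mean = corr[10:14, 10:14].mean()    # windows fully inside the changed block
```

In this toy setup the linear intensity change leaves the correlation in unchanged areas essentially at 1, while the changed block scores lower, which is the property a classifier could then exploit.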

Advances and Challenges in Multimodal Remote Sensing Image Registration

Feb 07, 2023

Abstract: Over the past few decades, with the rapid development of global aerospace and aerial remote sensing technology, the types of sensors have evolved from traditional monomodal sensors (e.g., optical sensors) to a new generation of multimodal sensors [e.g., multispectral, hyperspectral, light detection and ranging (LiDAR) and synthetic aperture radar (SAR) sensors]. These advanced devices can dynamically provide abundant multimodal remote sensing images with different spatial, temporal, and spectral resolutions according to different application requirements. It is therefore of great scientific significance to study multimodal remote sensing image registration, which is a crucial step for integrating the complementary information among multimodal data and for making comprehensive observations and analysis of the Earth's surface. In this work, we present our own contributions to the field of multimodal image registration, summarize the advantages and limitations of existing multimodal image registration methods, and then discuss the remaining challenges and the outlook for the future development of the field.

R2FD2: Fast and Robust Matching of Multimodal Remote Sensing Image via Repeatable Feature Detector and Rotation-invariant Feature Descriptor

Dec 06, 2022

Abstract: Automatically identifying feature correspondences between multimodal images remains highly challenging because of the significant differences in both radiation and geometry. To address these problems, we propose a novel feature matching method (named R2FD2) that is robust to radiation and rotation differences. R2FD2 rests on two key contributions: a repeatable feature detector and a rotation-invariant feature descriptor. In the first stage, a repeatable feature detector called the Multi-channel Auto-correlation of the Log-Gabor (MALG) is presented for feature detection, which combines the multi-channel auto-correlation strategy with the Log-Gabor wavelets to detect interest points (IPs) with high repeatability and uniform distribution. In the second stage, a rotation-invariant feature descriptor is constructed, named the Rotation-invariant Maximum index map of the Log-Gabor (RMLG), which consists of two components: fast assignment of dominant orientation and construction of feature representation. In the process of fast assignment of dominant orientation, a Rotation-invariant Maximum Index Map (RMIM) is built to address rotation deformations. Then, the proposed RMLG incorporates the rotation-invariant RMIM with the spatial configuration of DAISY to depict a more discriminative feature representation, which improves RMLG's resistance to radiation and rotation variances. Experimental results show that the proposed R2FD2 outperforms five state-of-the-art feature matching methods and has clear advantages in adaptability and universality. Moreover, R2FD2 achieves a matching accuracy within two pixels and is considerably more efficient than other state-of-the-art methods.
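A maximum index map over Log-Gabor responses can be sketched as follows. This is only an illustration of the general idea, not the RMIM or RMLG of the paper: it uses a single scale, omits the dominant orientation assignment and the DAISY layout, and the filter parameters (`f0`, `sigma_onf`, six orientations) and function names are assumptions.

```python
import numpy as np

def log_gabor_bank(shape, n_orient=6, f0=0.1, sigma_onf=0.55):
    """Frequency-domain Log-Gabor filters, one per orientation (single scale)."""
    rows, cols = shape
    fy = np.fft.fftfreq(rows)[:, None]
    fx = np.fft.fftfreq(cols)[None, :]
    radius = np.hypot(fx, fy)
    radius[0, 0] = 1.0                       # avoid log(0); DC is zeroed below
    theta = np.arctan2(fy, fx) + 0.0 * radius  # broadcast to the full grid
    radial = np.exp(-(np.log(radius / f0) ** 2) / (2 * np.log(sigma_onf) ** 2))
    radial[0, 0] = 0.0                       # zero DC gain removes intensity offsets
    sigma_theta = np.pi / n_orient
    bank = []
    for k in range(n_orient):
        angle = k * np.pi / n_orient
        # wrapped angular distance between each frequency and the filter axis
        d = np.arctan2(np.sin(theta - angle), np.cos(theta - angle))
        bank.append(radial * np.exp(-(d ** 2) / (2 * sigma_theta ** 2)))
    return bank

def maximum_index_map(img, n_orient=6):
    """Per-pixel index of the orientation with the largest response amplitude."""
    spectrum = np.fft.fft2(img.astype(float))
    amps = [np.abs(np.fft.ifft2(spectrum * g))
            for g in log_gabor_bank(img.shape, n_orient)]
    return np.argmax(np.stack(amps), axis=0)

rng = np.random.default_rng(1)
img = rng.random((32, 32))
mim_a = maximum_index_map(img)
mim_b = maximum_index_map(-2.0 * img + 5.0)  # inverted contrast plus an offset
agreement = (mim_a == mim_b).mean()
```

Because the filters have zero DC gain and only the amplitude is compared, the index map in this sketch is unaffected by a linear intensity change, including contrast inversion, which hints at why such maps are attractive for images with radiometric differences.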

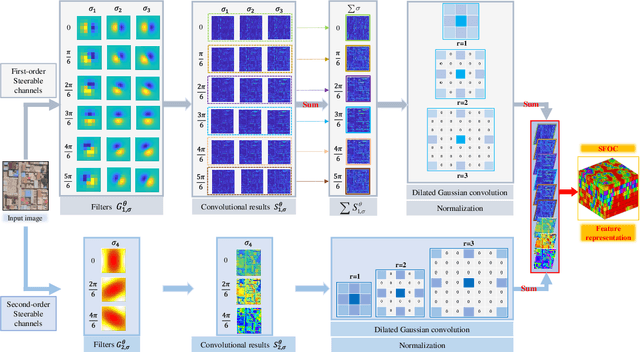

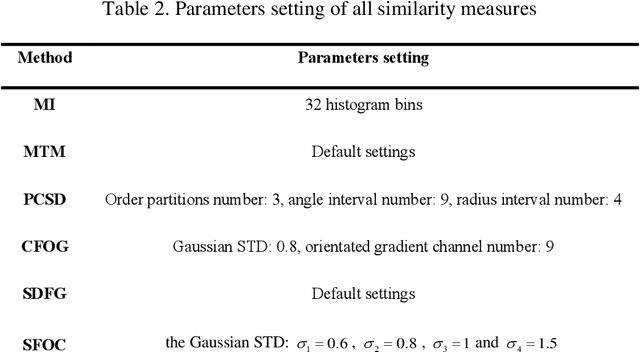

A Robust Multimodal Remote Sensing Image Registration Method and System Using Steerable Filters with First- and Second-order Gradients

Feb 27, 2022

Abstract: Co-registration of multimodal remote sensing images is still an ongoing challenge because of nonlinear radiometric differences (NRD) and significant geometric distortions (e.g., scale and rotation changes) between these images. In this paper, a robust matching method based on steerable filters is proposed, consisting of two critical steps. First, to address severe NRD, a novel structural descriptor named the Steerable Filters of first- and second-Order Channels (SFOC) is constructed, which combines first- and second-order gradient information by using steerable filters with a multi-scale strategy to depict more discriminative structure features of images. Then, a fast similarity measure called Fast Normalized Cross-Correlation (Fast-NCCSFOC) is established, which employs the Fast Fourier Transform technique and the integral image to improve the matching efficiency. Furthermore, to achieve reliable registration performance, a coarse-to-fine multimodal registration system is designed, consisting of two pivotal modules. The local coarse registration is first conducted through detection of interest points (IPs) and local geometric correction, which effectively utilizes the prior georeferencing information of RS images to address global geometric distortions. In the fine registration stage, the proposed SFOC is used to resist significant NRD and to detect control points between multimodal images by a template matching scheme. The performance of the proposed matching method has been evaluated with many different kinds of multimodal RS images. The results show its superior matching performance compared with state-of-the-art methods. Moreover, the designed registration system also outperforms popular commercial software in both registration accuracy and computational efficiency. Our system is available at https://github.com/yeyuanxin110.
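The combination of the Fast Fourier Transform and the integral image mentioned above can be sketched for ordinary normalized cross-correlation. This is a generic single-channel illustration on raw intensities, not the authors' Fast-NCC on SFOC descriptors; the function names `window_sums` and `fast_ncc` are hypothetical.

```python
import numpy as np

def window_sums(a, th, tw):
    """Sum of every th x tw window of `a`, read off an integral image."""
    ii = np.zeros((a.shape[0] + 1, a.shape[1] + 1))
    ii[1:, 1:] = a.cumsum(axis=0).cumsum(axis=1)
    return ii[th:, tw:] - ii[:-th, tw:] - ii[th:, :-tw] + ii[:-th, :-tw]

def fast_ncc(image, template):
    """NCC score for every valid top-left placement of `template` in `image`."""
    image = image.astype(float)
    template = template.astype(float)
    th, tw = template.shape
    H, W = image.shape
    t0 = template - template.mean()
    # Numerator: cross-correlation with the zero-mean template, via the FFT.
    F = np.fft.rfft2(image)
    T = np.fft.rfft2(t0, s=image.shape)
    corr = np.fft.irfft2(F * np.conj(T), s=image.shape)[:H - th + 1, :W - tw + 1]
    # Denominator: local image energy from integral images.
    n = th * tw
    s1 = window_sums(image, th, tw)
    s2 = window_sums(image ** 2, th, tw)
    var = np.maximum(s2 - s1 ** 2 / n, 0.0)
    denom = np.sqrt(var * (t0 ** 2).sum())
    out = np.zeros_like(corr)
    np.divide(corr, denom, out=out, where=denom > 1e-12)
    return out

rng = np.random.default_rng(2)
image = rng.random((40, 40))
template = 2.0 * image[12:20, 25:33] + 7.0   # same patch under a linear intensity change
ncc = fast_ncc(image, template)
peak = tuple(int(v) for v in np.unravel_index(ncc.argmax(), ncc.shape))
score = float(ncc.max())
```

Here `peak` is the top-left corner of the best match. The FFT replaces the per-offset sliding product, and the integral image supplies each window's sum and sum of squares in constant time.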

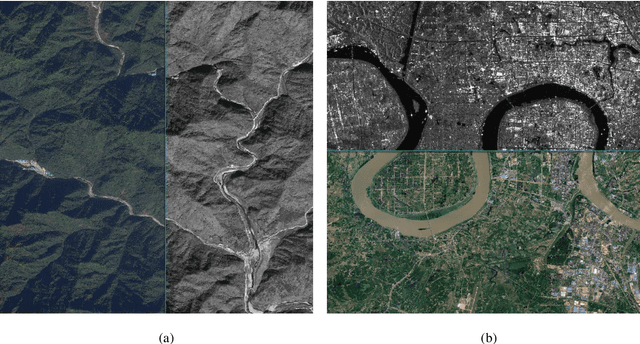

Misregistration Measurement and Improvement for Sentinel-1 SAR and Sentinel-2 Optical images

May 22, 2020

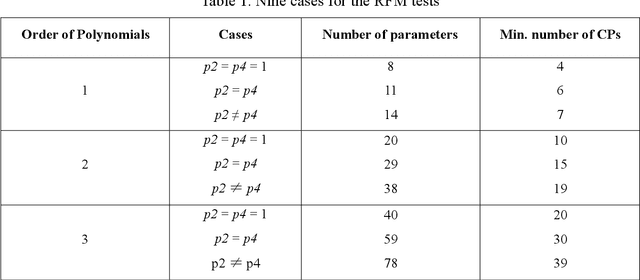

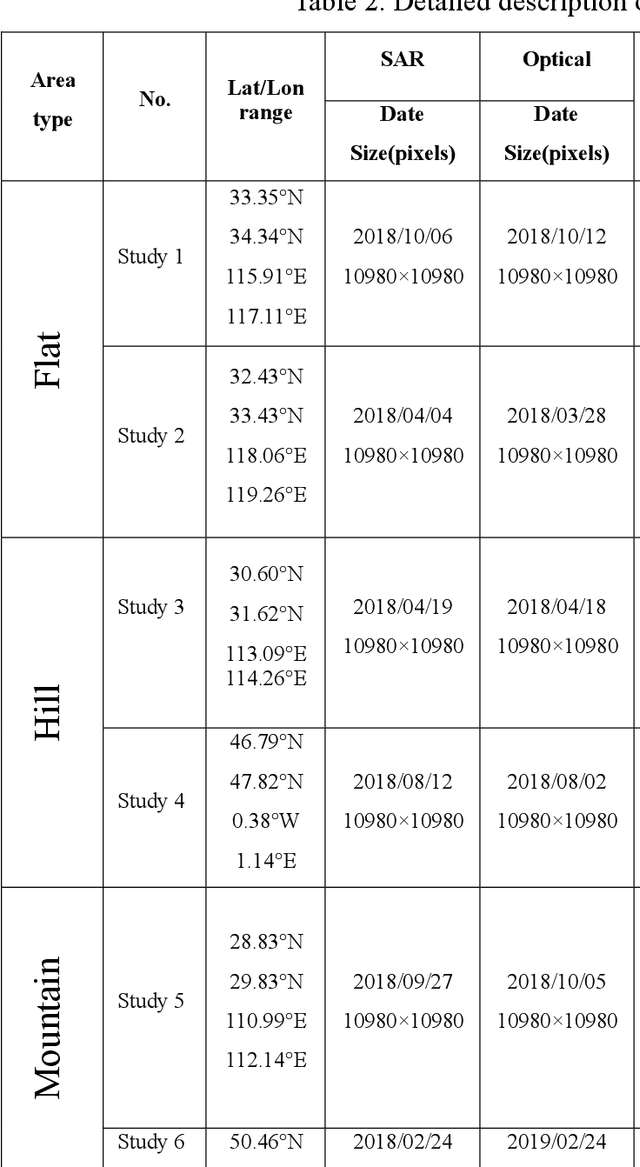

Abstract: Co-registering the Sentinel-1 SAR and Sentinel-2 optical data of the European Space Agency (ESA) is of great importance for many remote sensing applications. The Sentinel-1 and 2 product specifications from ESA show that the Sentinel-1 SAR L1 and the Sentinel-2 optical L1C images have a co-registration accuracy of within 2 pixels. However, we find that the actual misregistration errors between such images are much larger than that. This paper measures the misregistration errors by applying a block-based multimodal image matching strategy to six pairs of Sentinel-1 SAR and Sentinel-2 optical images, which are located in China and Europe and cover three different terrain types: flat, hilly, and mountainous areas. Our experimental results show that the misregistration errors of the flat areas are 20-30 pixels and those of the hilly areas are 20-40 pixels, while in the mountainous areas the errors increase to 50-60 pixels. To eliminate the misregistration, we use some representative geometric transformation models, such as polynomial models, projective models, and rational function models, for the co-registration of the two types of images, and compare and analyze their registration accuracy under different numbers of control points and different terrains. The results of our analysis show that the 3rd-order polynomial achieves the most satisfactory registration results. Its registration accuracy is less than 1.0 pixels (at 10 m pixel size) in the flat areas, about 1.5 pixels in the hilly areas, and between 1.8 and 2.3 pixels in the mountainous areas. In summary, this paper discloses and measures the misregistration between the Sentinel-1 SAR L1 and Sentinel-2 optical L1C images for the first time. Moreover, we also determine a relatively optimal geometric transformation model for the co-registration of the two types of images.
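Fitting a 3rd-order polynomial transformation to control points is a linear least-squares problem, which the sketch below illustrates. It uses synthetic, noise-free control points in normalized coordinates; the data, the function names, and the noise-free setup are assumptions for illustration and do not reproduce the paper's experiments.

```python
import numpy as np

def cubic_terms(x, y):
    """Design matrix with the 10 monomials x^i * y^j, i + j <= 3."""
    return np.stack([x**i * y**j for i in range(4) for j in range(4 - i)], axis=1)

def fit_cubic(src, dst):
    """Least-squares 3rd-order polynomial mapping src (N, 2) to dst (N, 2)."""
    A = cubic_terms(src[:, 0], src[:, 1])
    coeffs, *_ = np.linalg.lstsq(A, dst, rcond=None)
    return coeffs                      # shape (10, 2): one column per output axis

def apply_cubic(coeffs, pts):
    return cubic_terms(pts[:, 0], pts[:, 1]) @ coeffs

rng = np.random.default_rng(3)
true_coeffs = rng.normal(scale=0.1, size=(10, 2))    # synthetic ground-truth warp
control = rng.uniform(-1.0, 1.0, size=(40, 2))       # normalized control points
coeffs = fit_cubic(control, apply_cubic(true_coeffs, control))

# Accuracy on independent check points, as a root-mean-square error.
check = rng.uniform(-1.0, 1.0, size=(20, 2))
residual = apply_cubic(coeffs, check) - apply_cubic(true_coeffs, check)
rmse = float(np.sqrt((residual ** 2).sum(axis=1).mean()))
```

Each output coordinate has 10 coefficients, so at least 10 well-distributed control points are needed; with real, noisy control points the check-point RMSE would be the figure to compare across transformation models.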

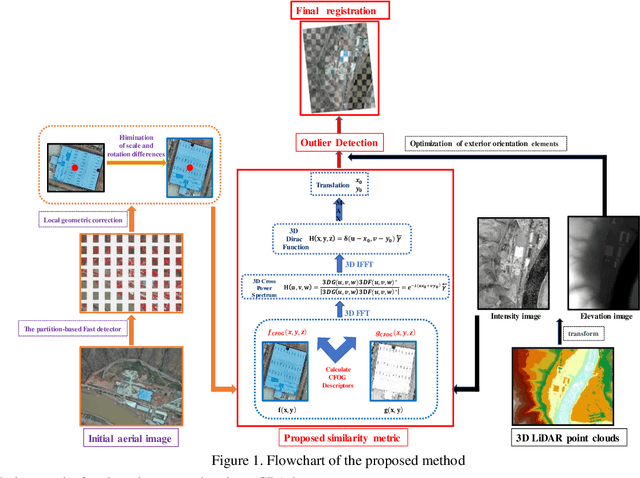

Fast and Robust Registration of Aerial Images and LiDAR data Based on Structural Features and 3D Phase Correlation

Apr 21, 2020

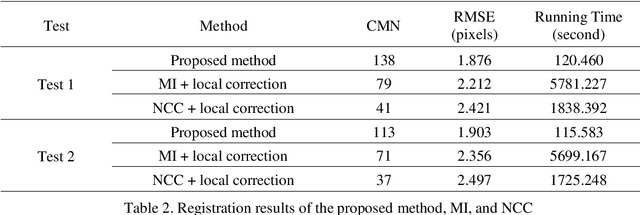

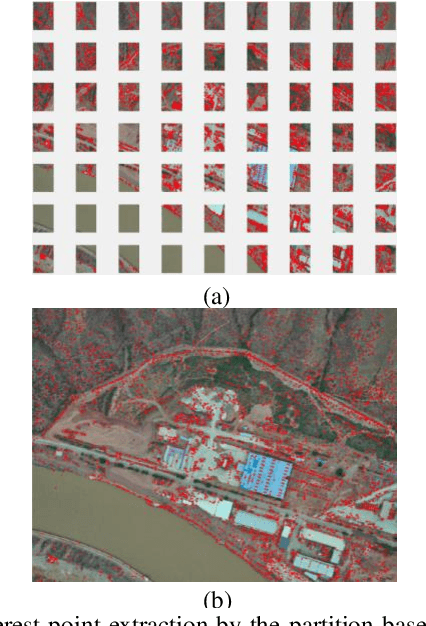

Abstract: Co-registration of aerial imagery and Light Detection and Ranging (LiDAR) data is quite challenging because the different imaging mechanisms cause significant geometric and radiometric distortions between such data. To tackle the problem, this paper proposes an automatic registration method based on structural features and three-dimensional (3D) phase correlation. In the proposed method, the LiDAR point cloud data is first transformed into an intensity map, which is used as the reference image. Then, we employ the FAST operator to extract uniformly distributed interest points in the aerial image by a partition strategy and perform a local geometric correction by using the collinearity equation to eliminate scale and rotation differences between images. Subsequently, a robust structural feature descriptor is built based on dense gradient features, and the 3D phase correlation is used to detect control points (CPs) between aerial images and LiDAR data in the frequency domain, where the image matching is accelerated by the 3D Fast Fourier Transform (FFT). Finally, the obtained CPs are employed to correct the exterior orientation elements, which are used to achieve co-registration of aerial images and LiDAR data. Experiments with two datasets of aerial images and LiDAR data show that the proposed method is much faster and more robust than state-of-the-art methods.
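Phase correlation over a stack of dense features can be sketched with a 3D FFT as below. This is a toy illustration under strong assumptions: the features are simple circular gradient magnitudes rather than the paper's descriptor, the offset is a pure circular translation, and the function names are hypothetical.

```python
import numpy as np

def dense_features(img):
    """Stack of per-pixel feature channels (circular gradient magnitudes)."""
    gx = np.abs(np.roll(img, -1, axis=1) - img)
    gy = np.abs(np.roll(img, -1, axis=0) - img)
    return np.stack([gx, gy], axis=-1)           # shape (H, W, 2)

def phase_correlation_3d(ref, sen):
    """Estimate the shift that maps feature volume `ref` onto `sen`."""
    R = np.fft.fftn(ref)
    S = np.fft.fftn(sen)
    cross = S * np.conj(R)
    cross /= np.maximum(np.abs(cross), 1e-12)    # keep only the phase
    surface = np.fft.ifftn(cross).real
    peak = np.unravel_index(np.argmax(surface), surface.shape)
    # Convert wrapped peak indices to signed shifts.
    return tuple(int(p) if p <= n // 2 else int(p) - n
                 for p, n in zip(peak, surface.shape))

rng = np.random.default_rng(4)
reference = rng.random((32, 32))
sensed = np.roll(reference, shift=(5, -3), axis=(0, 1))   # known translation

shift = phase_correlation_3d(dense_features(reference), dense_features(sensed))
```

The result is `(row shift, column shift, channel shift)`; the channel component is zero here because both volumes stack their channels in the same order. The whole search over offsets costs three FFTs, which is the source of the speed-up over spatial-domain matching.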
