Qizhi Xu

Tokenize Image Patches: Global Context Fusion for Effective Haze Removal in Large Images

Apr 13, 2025

Abstract:Global contextual information and local detail features are essential for haze removal tasks. Deep learning models perform well on small, low-resolution images, but they encounter difficulties with large, high-resolution ones due to GPU memory limitations. As a compromise, they often resort to image slicing or downsampling. The former diminishes global information, while the latter discards high-frequency details. To address these challenges, we propose DehazeXL, a haze removal method that effectively balances global context and local feature extraction, enabling end-to-end modeling of large images on mainstream GPU hardware. Additionally, to evaluate the efficiency of global context utilization in haze removal performance, we design a visual attribution method tailored to the characteristics of haze removal tasks. Finally, recognizing the lack of benchmark datasets for haze removal in large images, we have developed an ultra-high-resolution haze removal dataset (8KDehaze) to support model training and testing. It includes 10000 pairs of clear and hazy remote sensing images, each sized at 8192 $\times$ 8192 pixels. Extensive experiments demonstrate that DehazeXL can infer images up to 10240 $\times$ 10240 pixels with only 21 GB of memory, achieving state-of-the-art results among all evaluated methods. The source code and experimental dataset are available at https://github.com/CastleChen339/DehazeXL.
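To make the image-slicing compromise mentioned in the abstract concrete, here is a minimal sketch of cutting a large image into a grid of patches. It is not the DehazeXL implementation: the function name `patch_grid` and the 1024-pixel patch size are assumptions chosen only for illustration.

```python
def patch_grid(height, width, patch):
    """Return (top, left, bottom, right) boxes that tile an image row by row.

    Hypothetical helper for illustration; edge patches are clipped to the
    image bounds rather than padded.
    """
    boxes = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            bottom = min(top + patch, height)
            right = min(left + patch, width)
            boxes.append((top, left, bottom, right))
    return boxes


# An 8192 x 8192 image cut into 1024 x 1024 patches (assumed patch size).
boxes = patch_grid(8192, 8192, 1024)
print(len(boxes))
```

Each patch processed in isolation sees none of its neighbours, which is the loss of global information the abstract attributes to slicing.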

A Robust Multimodal Remote Sensing Image Registration Method and System Using Steerable Filters with First- and Second-order Gradients

Feb 27, 2022

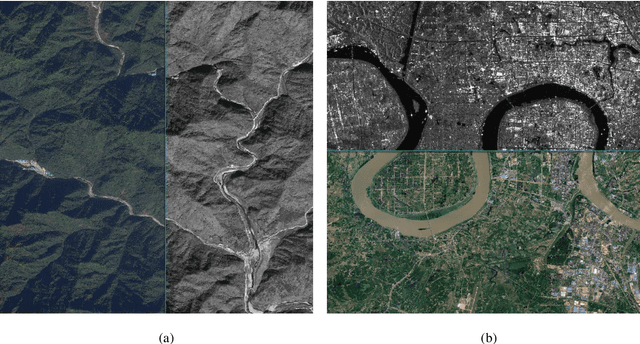

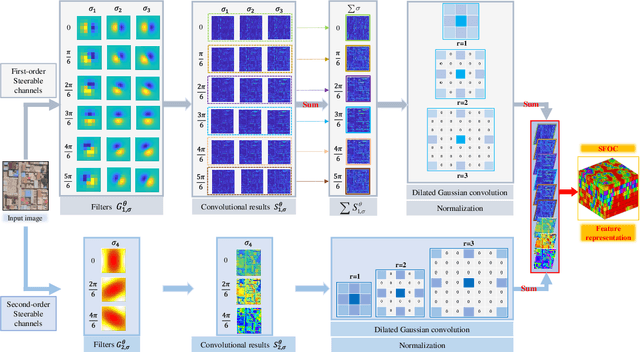

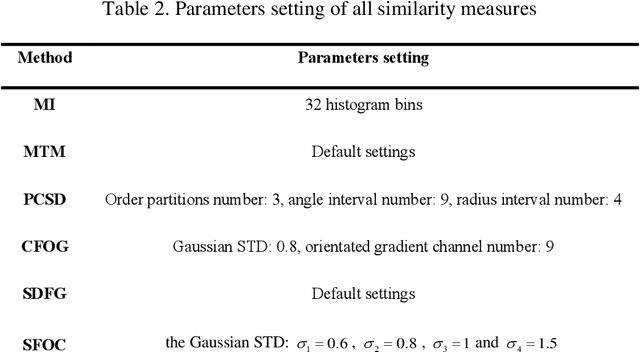

Abstract:Co-registration of multimodal remote sensing images is still an ongoing challenge because of nonlinear radiometric differences (NRD) and significant geometric distortions (e.g., scale and rotation changes) between these images. In this paper, a robust matching method based on steerable filters is proposed, consisting of two critical steps. First, to address severe NRD, a novel structural descriptor named the Steerable Filters of first- and second-Order Channels (SFOC) is constructed, which combines first- and second-order gradient information by using steerable filters with a multi-scale strategy to depict more discriminative structural features of images. Then, a fast similarity measure called Fast Normalized Cross-Correlation (Fast-NCC$_{SFOC}$) is established, which employs the Fast Fourier Transform technique and the integral image to improve the matching efficiency. Furthermore, to achieve reliable registration performance, a coarse-to-fine multimodal registration system is designed, consisting of two pivotal modules. The local coarse registration is conducted first, involving both detection of interest points (IPs) and local geometric correction, which effectively utilizes the prior georeferencing information of remote sensing (RS) images to address global geometric distortions. In the fine registration stage, the proposed SFOC is used to resist significant NRD and to detect control points between multimodal images by a template matching scheme. The performance of the proposed matching method has been evaluated with many different kinds of multimodal RS images. The results show its superior matching performance compared with the state-of-the-art methods. Moreover, the designed registration system also outperforms popular commercial software in both registration accuracy and computational efficiency. Our system is available at https://github.com/yeyuanxin110.
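The abstract mentions the integral image as one ingredient of the fast similarity measure. As a rough illustration of why it helps, the sketch below builds a summed-area table so that the sum over any rectangular window costs four lookups. This is a generic textbook construction, not the authors' code; the names `integral_image` and `window_sum` are hypothetical.

```python
def integral_image(img):
    """Build a summed-area table with one extra row and column of zeros."""
    h, w = len(img), len(img[0])
    sat = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            # Sum of everything above plus the running sum of this row.
            sat[y + 1][x + 1] = sat[y][x + 1] + row_sum
    return sat


def window_sum(sat, top, left, height, width):
    """Sum of the window img[top:top+height][left:left+width] in O(1)."""
    bottom, right = top + height, left + width
    return sat[bottom][right] - sat[top][right] - sat[bottom][left] + sat[top][left]


img = [[1, 2, 3],
       [4, 5, 6],
       [7, 8, 9]]
sat = integral_image(img)
print(window_sum(sat, 1, 1, 2, 2))  # 5 + 6 + 8 + 9 = 28
```

In normalized cross-correlation, local sums of this kind (over the image and its square) are needed for the normalization term at every template position, so constant-time window sums avoid recomputing them per shift.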

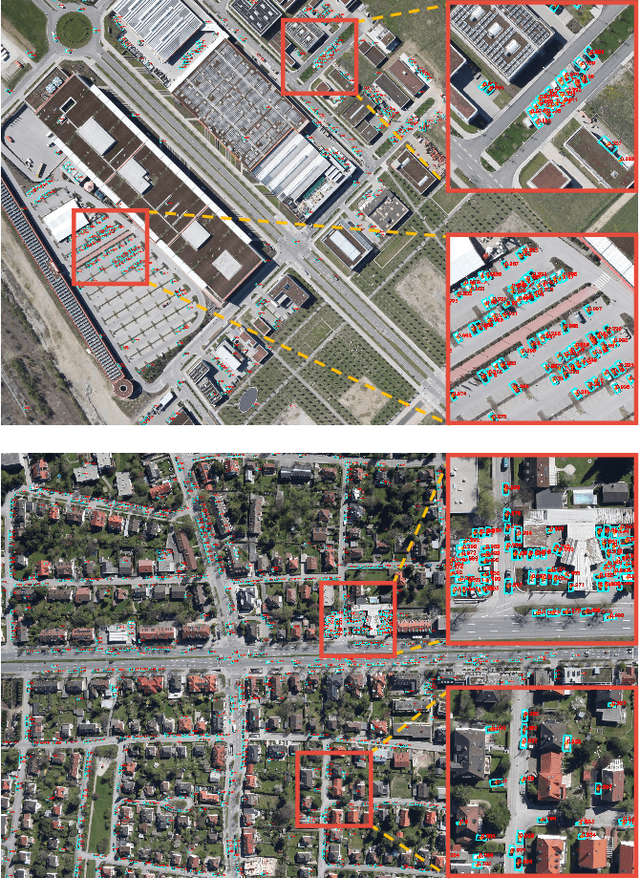

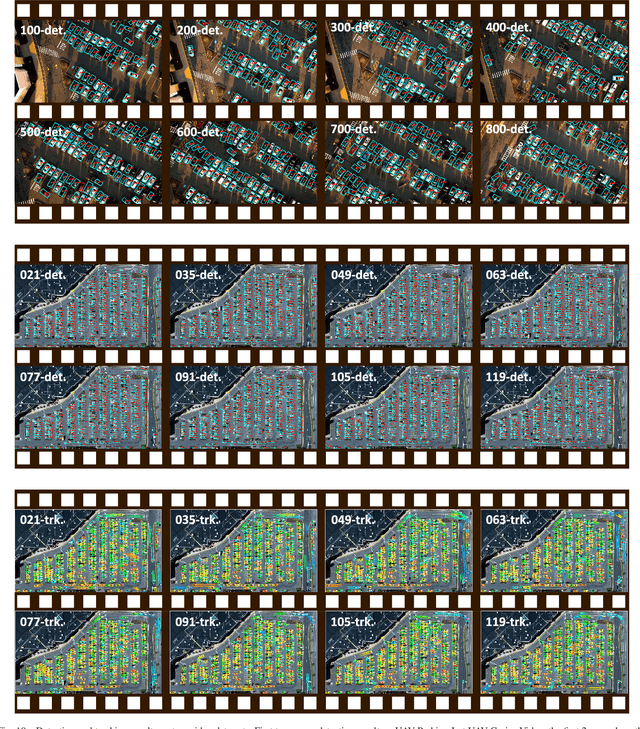

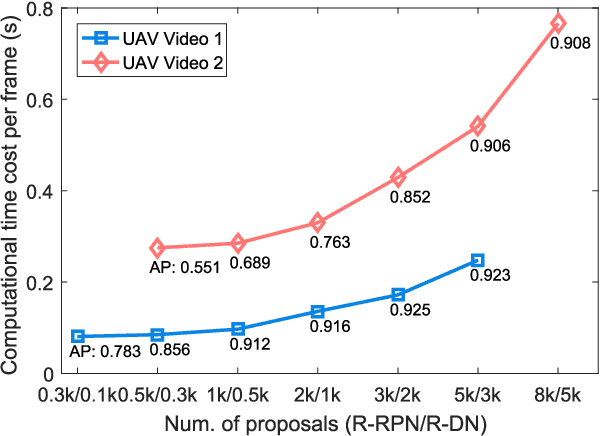

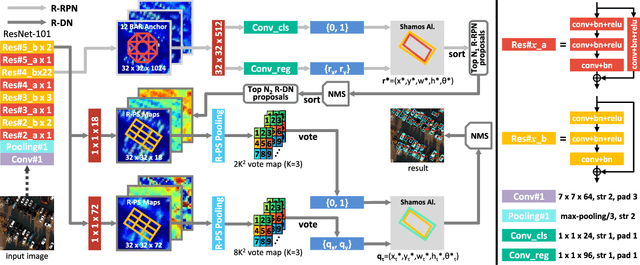

R$^3$-Net: A Deep Network for Multi-oriented Vehicle Detection in Aerial Images and Videos

Aug 16, 2018

Abstract:Vehicle detection is a significant and challenging task in aerial remote sensing applications. Most existing methods detect vehicles with regular rectangle boxes and fail to offer the orientation of vehicles. However, the orientation information is crucial for several practical applications, such as the trajectory and motion estimation of vehicles. In this paper, we propose a novel deep network, called rotatable region-based residual network (R$^3$-Net), to detect multi-oriented vehicles in aerial images and videos. More specifically, R$^3$-Net is utilized to generate rotatable rectangular target boxes in a half coordinate system. First, we use a rotatable region proposal network (R-RPN) to generate rotatable regions of interest (R-RoIs) from feature maps produced by a deep convolutional neural network. Here, a proposed batch averaging rotatable anchor (BAR anchor) strategy is applied to initialize the shape of vehicle candidates. Next, we propose a rotatable detection network (R-DN) for the final classification and regression of the R-RoIs. In R-DN, a novel rotatable position-sensitive pooling (R-PS pooling) is designed to keep the position and orientation information simultaneously while downsampling the feature maps of R-RoIs. In our model, R-RPN and R-DN can be trained jointly. We test our network on two open vehicle detection image datasets, namely the DLR 3K Munich Dataset and the VEDAI Dataset, demonstrating the high precision and robustness of our method. In addition, further experiments on aerial videos show the good generalization capability of the proposed method and its potential for vehicle tracking in aerial videos. The demo video is available at https://youtu.be/xCYD-tYudN0.
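For readers unfamiliar with rotatable boxes, the following sketch shows one common way to describe an oriented rectangle and recover its corners. The (centre, width, height, angle) parametrization and the name `rotated_box_corners` are assumptions for illustration; the abstract does not specify the exact encoding used by R$^3$-Net or its half coordinate system.

```python
import math


def rotated_box_corners(cx, cy, w, h, theta):
    """Return the four corners of a box centred at (cx, cy), rotated by theta radians.

    Hypothetical parametrization: w is measured along the box's own x-axis,
    h along its y-axis, and theta rotates that frame about the centre.
    """
    c, s = math.cos(theta), math.sin(theta)
    half_offsets = ((-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2))
    corners = []
    for dx, dy in half_offsets:
        # Standard 2D rotation of the offset, then translation to the centre.
        corners.append((cx + dx * c - dy * s, cy + dx * s + dy * c))
    return corners


# A 2 x 1 box at the origin with no rotation reduces to an axis-aligned box.
print(rotated_box_corners(0.0, 0.0, 2.0, 1.0, 0.0))
```

The extra angle parameter is what lets a detector report vehicle orientation, which an axis-aligned box cannot express.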
