Novi Quadrianto

University of Cambridge

Who Pays for Fairness? Rethinking Recourse under Social Burden

Sep 04, 2025

Abstract:Machine learning based predictions are increasingly used in sensitive decision-making applications that directly affect our lives. This has led to extensive research into ensuring the fairness of classifiers. Beyond just fair classification, emerging legislation now mandates that when a classifier delivers a negative decision, it must also offer actionable steps an individual can take to reverse that outcome. This concept is known as algorithmic recourse. Nevertheless, many researchers have expressed concerns about the fairness guarantees within the recourse process itself. In this work, we provide a holistic theoretical characterization of unfairness in algorithmic recourse, formally linking fairness guarantees in recourse and classification, and highlighting limitations of the standard equal cost paradigm. We then introduce a novel fairness framework based on social burden, along with a practical algorithm (MISOB), broadly applicable under real-world conditions. Empirical results on real-world datasets show that MISOB reduces the social burden across all groups without compromising overall classifier accuracy.

The Decoupled Risk Landscape in Performative Prediction

Jun 10, 2025

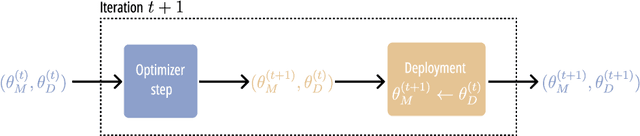

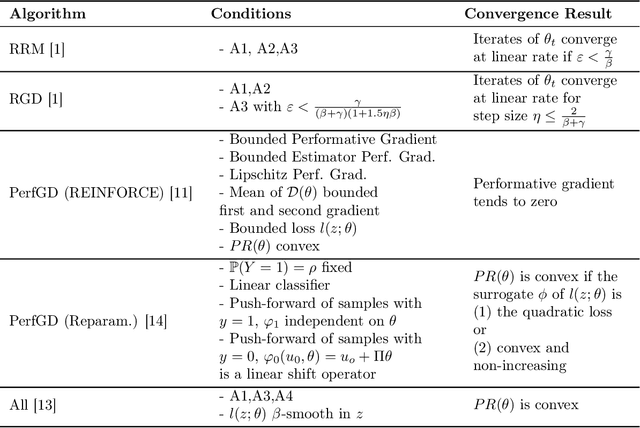

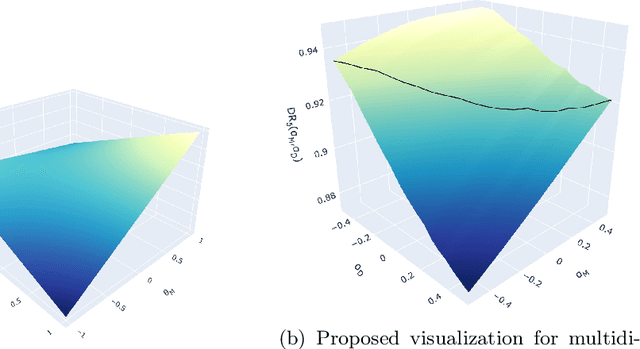

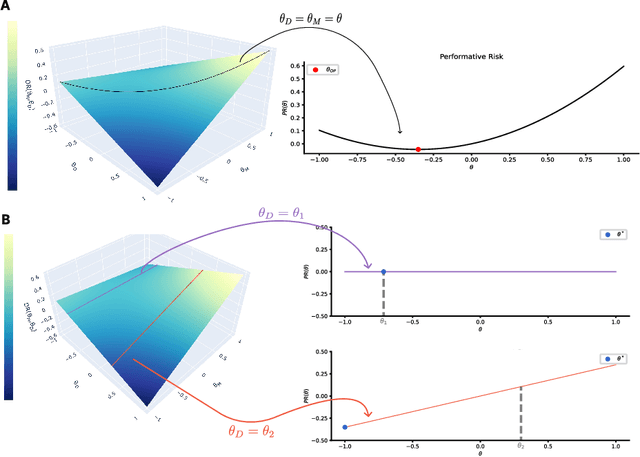

Abstract:Performative Prediction addresses scenarios where deploying a model induces a distribution shift in the input data, such as individuals modifying their features and reapplying for a bank loan after rejection. The literature has largely taken a theoretical perspective, giving mathematical guarantees of convergence (to either the stable or the optimal point). We believe that visualization of the loss landscape can complement these theoretical advances with practical insights. Therefore, (1) we introduce a simple decoupled risk visualization method inspired by the two-step process underlying performative prediction. Our approach visualizes the risk landscape with respect to two parameter vectors: model parameters and data parameters. We use this method to propose new properties of the interest points, and to examine how existing algorithms traverse the risk landscape and perform under more realistic conditions, including strategic classification with non-linear models. (2) Building on this decoupled risk visualization, we introduce a novel setting, extended Performative Prediction, which captures scenarios where the distribution reacts to a model different from the decision-making one, reflecting the reality that agents often lack full access to the deployed model.
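
To make the idea of a risk landscape over two parameter vectors concrete, here is a minimal sketch on a one-dimensional toy problem. Everything in it is an illustrative assumption rather than the paper's setup: the location-shift distribution, the loss, the constants `MU`, `EPS`, `LAM`, and the function names. The decoupled risk is the expected loss of a model parameter on the data induced by a (possibly different) data parameter; the usual performative risk is the diagonal of that grid.

```python
import numpy as np

# Toy setting (illustrative assumptions, not taken from the paper):
#   data:  z ~ N(MU + EPS * theta_data, 1)
#   loss:  l(z; theta_model) = -z * theta_model + (LAM / 2) * theta_model**2
MU, EPS, LAM = 1.0, 0.25, 1.0


def decoupled_risk(theta_data, theta_model):
    """Expected loss of theta_model on the distribution induced by theta_data."""
    mean_z = MU + EPS * theta_data
    return -mean_z * theta_model + 0.5 * LAM * theta_model ** 2


def repeated_risk_minimization(theta0=0.0, steps=100):
    """Repeatedly retrain on the data induced by the previously deployed model."""
    theta = theta0
    for _ in range(steps):
        # Closed-form minimizer of decoupled_risk(theta, .) over the model parameter.
        theta = (MU + EPS * theta) / LAM
    return theta


grid = np.linspace(-1.0, 4.0, 501)
# landscape[i, j]: risk with data parameter grid[i] and model parameter grid[j].
landscape = decoupled_risk(grid[:, None], grid[None, :])

# The performative risk lives on the diagonal (data and model parameters coincide).
performative_risk = np.diag(landscape)
theta_opt = grid[np.argmin(performative_risk)]   # performatively optimal point
theta_stable = repeated_risk_minimization()      # performatively stable point
```

In this toy problem the stable point (`MU / (LAM - EPS)`) and the optimal point (`MU / (LAM - 2 * EPS)`) differ, which is the kind of gap a plot of `landscape` (for example with a contour plot) makes visible.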

Diversity-Driven Learning: Tackling Spurious Correlations and Data Heterogeneity in Federated Models

Apr 15, 2025

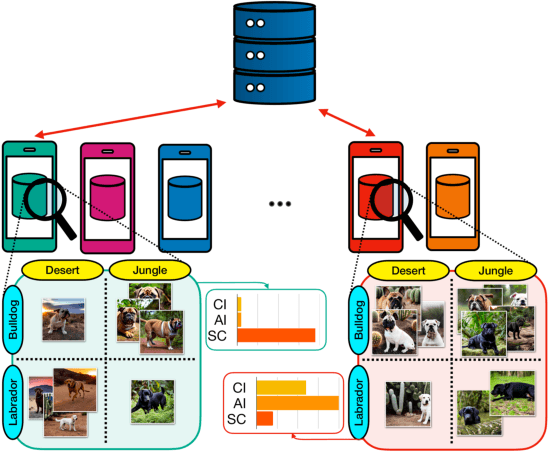

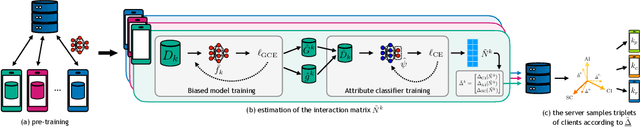

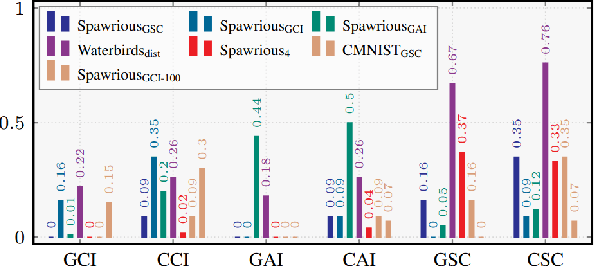

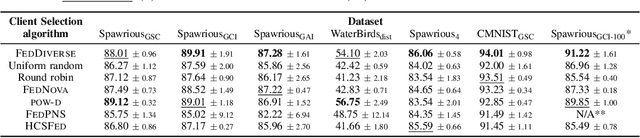

Abstract:Federated Learning (FL) enables decentralized training of machine learning models on distributed data while preserving privacy. However, in real-world FL settings, client data is often non-identically distributed and imbalanced, resulting in statistical data heterogeneity which impacts the generalization capabilities of the server's model across clients, slows convergence and reduces performance. In this paper, we address this challenge by first proposing a characterization of statistical data heterogeneity by means of 6 metrics of global and client attribute imbalance, class imbalance, and spurious correlations. Next, we create and share 7 computer vision datasets for binary and multiclass image classification tasks in Federated Learning that cover a broad range of statistical data heterogeneity and hence simulate real-world situations. Finally, we propose FedDiverse, a novel client selection algorithm in FL which is designed to manage and leverage data heterogeneity across clients by promoting collaboration between clients with complementary data distributions. Experiments on the seven proposed FL datasets demonstrate FedDiverse's effectiveness in enhancing the performance and robustness of a variety of FL methods while having low communication and computational overhead.

Efficient Online Inference of Vision Transformers by Training-Free Tokenization

Nov 23, 2024

Abstract:The cost of deploying vision transformers increasingly represents a barrier to wider industrial adoption. Existing compression methods require additional end-to-end fine-tuning or incur a significant runtime penalty, making them ill-suited for online inference. We introduce the $\textbf{Visual Word Tokenizer}$ (VWT), a training-free method for reducing energy costs while retaining performance and runtime. The VWT groups patches (visual subwords) that are frequently used into visual words while infrequent ones remain intact. To do so, intra-image or inter-image statistics are leveraged to identify similar visual concepts for compression. Experimentally, we demonstrate a reduction in wattage of up to 19% with only a 20% increase in runtime at most. Comparative approaches of 8-bit quantization and token merging achieve a lower or similar energy efficiency but exact a higher toll on runtime (up to $2\times$ or more). Our results indicate that VWTs are well-suited for efficient online inference with a marginal compromise on performance.

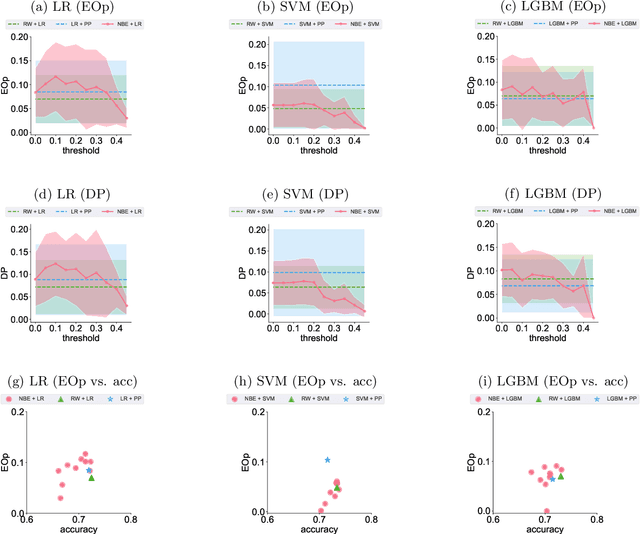

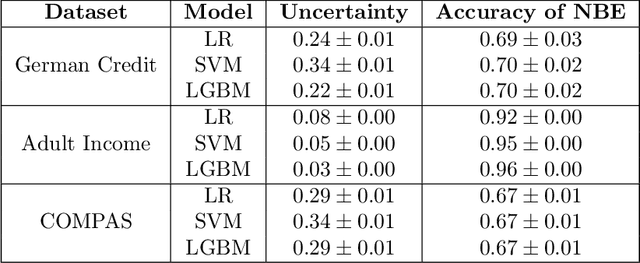

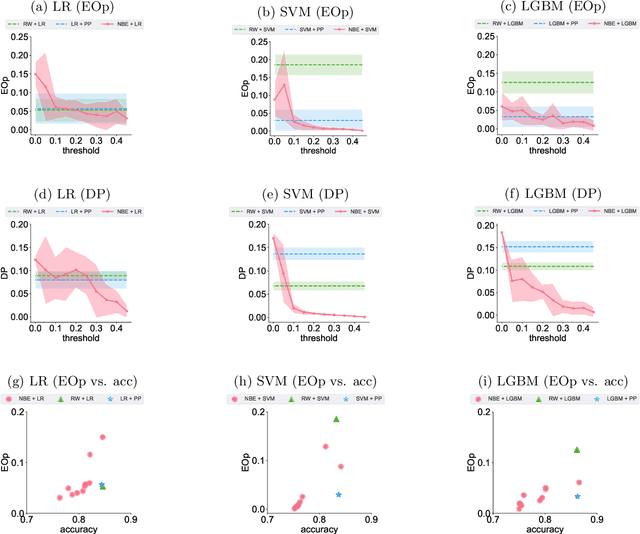

Dancing in the Shadows: Harnessing Ambiguity for Fairer Classifiers

Jun 27, 2024

Abstract:This paper introduces a novel approach to bolster algorithmic fairness in scenarios where sensitive information is only partially known. In particular, we propose to leverage instances whose identity with regard to the sensitive attribute is uncertain to train a conventional machine learning classifier. The enhanced fairness observed in the final predictions of this classifier highlights the promising potential of prioritizing ambiguity (i.e., non-normativity) as a means to improve fairness guarantees in real-world classification tasks.

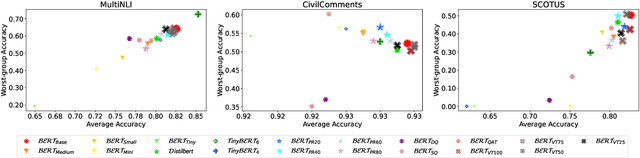

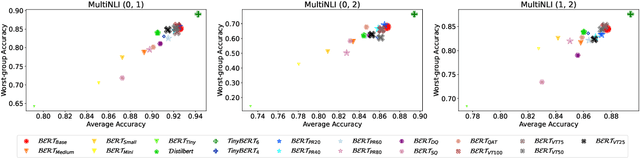

Are Compressed Language Models Less Subgroup Robust?

Mar 26, 2024

Abstract:To reduce the inference cost of large language models, model compression is increasingly used to create smaller scalable models. However, little is known about their robustness to minority subgroups defined by the labels and attributes of a dataset. In this paper, we investigate the effects of 18 different compression methods and settings on the subgroup robustness of BERT language models. We show that worst-group performance does not depend on model size alone, but also on the compression method used. Additionally, we find that model compression does not always worsen the performance on minority subgroups. Altogether, our analysis serves to further research into the subgroup robustness of model compression.

* The 2023 Conference on Empirical Methods in Natural Language Processing (EMNLP 2023)
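
As a rough illustration of the worst-group performance mentioned in the abstract above, the sketch below computes per-subgroup accuracy, with subgroups defined by each (label, attribute) pair, and reports the lowest one. The function name and the tiny example data are hypothetical and not taken from the paper.

```python
from collections import defaultdict


def worst_group_accuracy(y_true, y_pred, attributes):
    """Return the worst (label, attribute) subgroup and all per-group accuracies."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for label, pred, attr in zip(y_true, y_pred, attributes):
        group = (label, attr)  # subgroup = label crossed with attribute
        total[group] += 1
        correct[group] += int(label == pred)
    per_group = {g: correct[g] / total[g] for g in total}
    worst = min(per_group, key=per_group.get)
    return worst, per_group


# Hypothetical predictions for eight examples with a binary label and attribute.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 1]
attrs = ["a", "a", "a", "b", "a", "a", "b", "b"]

worst, per_group = worst_group_accuracy(y_true, y_pred, attrs)
average = sum(int(t == p) for t, p in zip(y_true, y_pred)) / len(y_true)
```

Here the average accuracy is 0.75 while the minority subgroup `(1, "b")` has accuracy 0.0, which is why average accuracy alone can hide subgroup failures.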

Addressing Membership Inference Attack in Federated Learning with Model Compression

Nov 29, 2023

Abstract:Federated Learning (FL) has been proposed as a privacy-preserving solution for machine learning. However, recent works have shown that Federated Learning can leak private client data through membership attacks. In this paper, we show that the effectiveness of these attacks on the clients negatively correlates with the size of the client datasets and model complexity. Based on this finding, we propose model-agnostic Federated Learning as a privacy-enhancing solution because it enables the use of models of varying complexity in the clients. To this end, we present $\texttt{MaPP-FL}$, a novel privacy-aware FL approach that leverages model compression on the clients while keeping a full model on the server. We compare the performance of $\texttt{MaPP-FL}$ against state-of-the-art model-agnostic FL methods on the CIFAR-10, CIFAR-100, and FEMNIST vision datasets. Our experiments show the effectiveness of $\texttt{MaPP-FL}$ in preserving the clients' and the server's privacy while achieving competitive classification accuracies.

Uncertainty in Fairness Assessment: Maintaining Stable Conclusions Despite Fluctuations

Feb 02, 2023

Abstract:Several recent works encourage the use of a Bayesian framework when assessing performance and fairness metrics of a classification algorithm in a supervised setting. We propose the Uncertainty Matters (UM) framework that generalizes a Beta-Binomial approach to derive the posterior distribution of any combination of criteria, allowing stable performance assessment in a bias-aware setting. We suggest modeling the confusion matrix of each demographic group using a Multinomial distribution updated through a Bayesian procedure. We extend UM to be applicable under the popular K-fold cross-validation procedure. Experiments highlight the benefits of UM over classical evaluation frameworks regarding informativeness and stability.
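
One way to picture the Bayesian treatment of per-group confusion matrices is the sketch below. It assumes a Dirichlet prior, the standard conjugate choice for a Multinomial likelihood; the abstract does not state which prior the UM framework uses, and the function names, counts, and the choice of a demographic-parity gap as the derived metric are all hypothetical.

```python
import numpy as np


def posterior_confusion_samples(counts, prior=1.0, n_samples=10_000, seed=0):
    """Sample confusion-matrix cell probabilities from a Dirichlet posterior.

    counts is (TP, FP, FN, TN) for one demographic group; a symmetric
    Dirichlet(prior) prior is an assumption made for this sketch.
    """
    rng = np.random.default_rng(seed)
    alpha = np.asarray(counts, dtype=float) + prior
    return rng.dirichlet(alpha, size=n_samples)  # shape (n_samples, 4)


def positive_rate(samples):
    """P(predicted positive) = P(TP) + P(FP) for each posterior sample."""
    return samples[:, 0] + samples[:, 1]


# Hypothetical confusion-matrix counts for two demographic groups.
group_a = posterior_confusion_samples((40, 10, 5, 45), seed=0)
group_b = posterior_confusion_samples((20, 5, 15, 60), seed=1)

# Posterior distribution of a derived fairness metric (demographic-parity gap).
dp_gap = positive_rate(group_a) - positive_rate(group_b)
lower, upper = np.percentile(dp_gap, [2.5, 97.5])  # 95% credible interval
```

Because any metric that is a function of the confusion-matrix cells can be computed per sample, the same posterior draws yield a full distribution (and credible interval) for combinations of performance and fairness criteria rather than a single point estimate.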

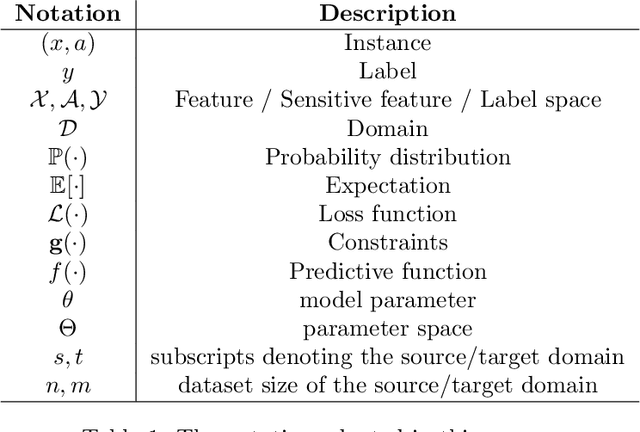

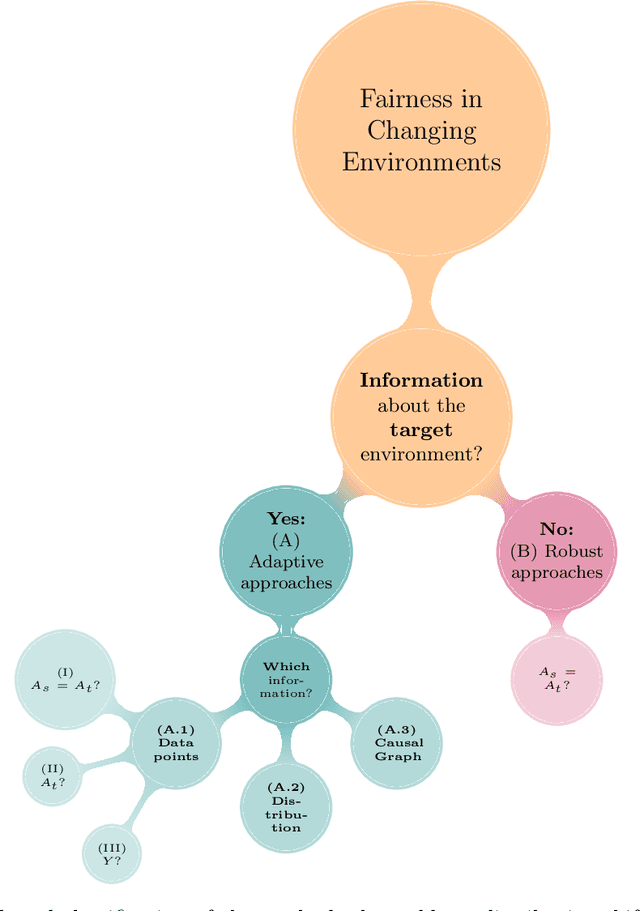

A Survey on Preserving Fairness Guarantees in Changing Environments

Nov 14, 2022

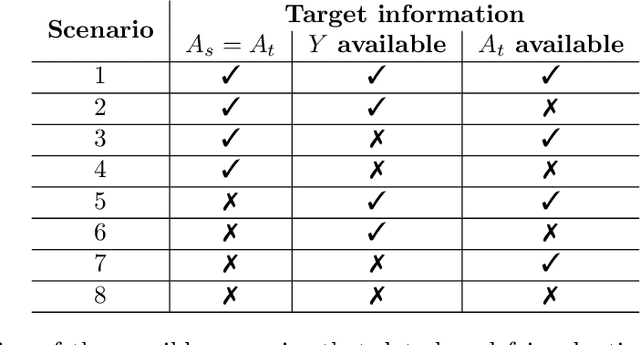

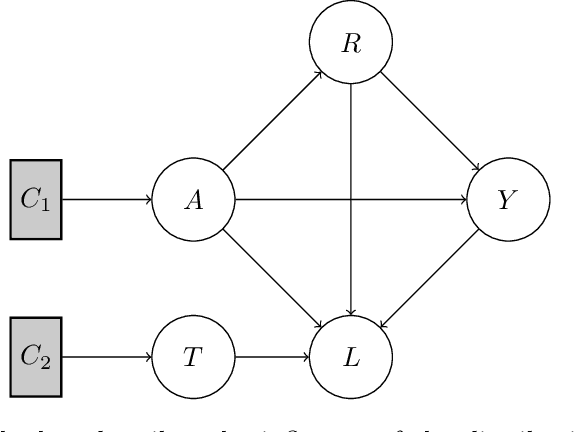

Abstract:Human lives are increasingly being affected by the outcomes of automated decision-making systems, and it is essential for the latter to be not only accurate but also fair. The literature on algorithmic fairness has grown considerably over the last decade, with most approaches evaluated under the strong assumption that the train and test samples are independently and identically drawn from the same underlying distribution. However, in practice, dissimilarity between the training and deployment environments exists, which compromises the performance of the decision-making algorithm as well as its fairness guarantees on the deployment data. A rapidly growing line of research studies how to preserve fairness guarantees when the data generating processes differ between the source (train) and target (test) domains. With this survey, we aim to provide a wide and unifying overview of the topic. To this end, we propose a taxonomy of the existing approaches for fair classification under distribution shift, highlight benchmarking alternatives, point out the relation with other similar research fields and, finally, identify future avenues of research.

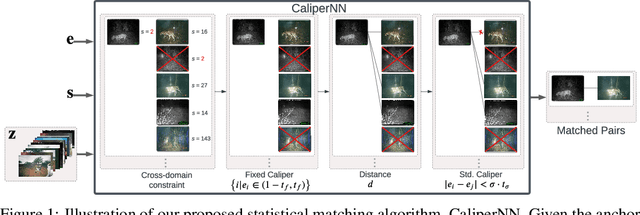

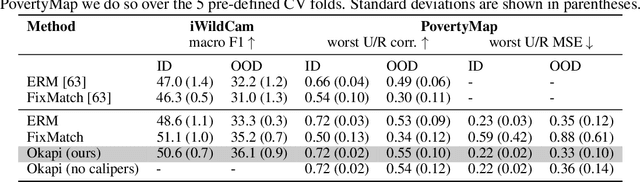

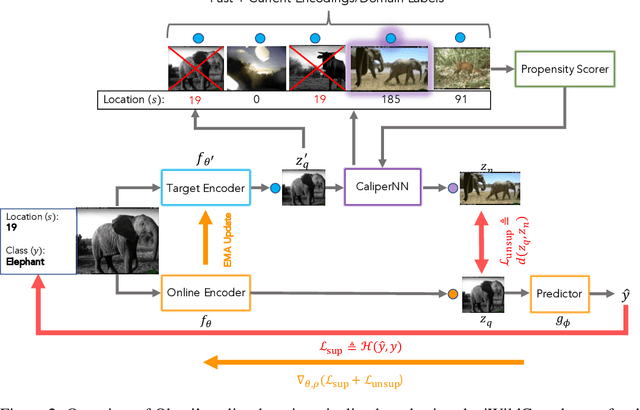

Okapi: Generalising Better by Making Statistical Matches Match

Nov 07, 2022

Abstract:We propose Okapi, a simple, efficient, and general method for robust semi-supervised learning based on online statistical matching. Our method uses a nearest-neighbours-based matching procedure to generate cross-domain views for a consistency loss, while eliminating statistical outliers. In order to perform the online matching in a runtime- and memory-efficient way, we draw upon the self-supervised literature and combine a memory bank with a slow-moving momentum encoder. The consistency loss is applied within the feature space, rather than on the predictive distribution, making the method agnostic to both the modality and the task in question. We experiment on the WILDS 2.0 datasets (Sagawa et al.), which significantly expand the range of modalities, applications, and shifts available for studying and benchmarking real-world unsupervised adaptation. Contrary to Sagawa et al., we show that it is in fact possible to leverage additional unlabelled data to improve upon empirical risk minimisation (ERM) results with the right method. Our method outperforms the baseline methods in terms of out-of-distribution (OOD) generalisation on the iWildCam (a multi-class classification task) and PovertyMap (a regression task) image datasets as well as the CivilComments (a binary classification task) text dataset. Furthermore, from a qualitative perspective, we show that the matches obtained from the learned encoder are strongly semantically related. Code for our paper is publicly available at https://github.com/wearepal/okapi/.
