Jose A. Lozano

The Decoupled Risk Landscape in Performative Prediction

Jun 10, 2025

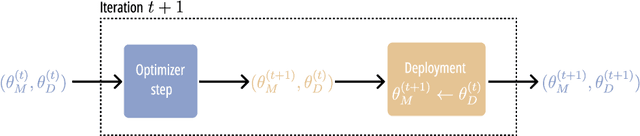

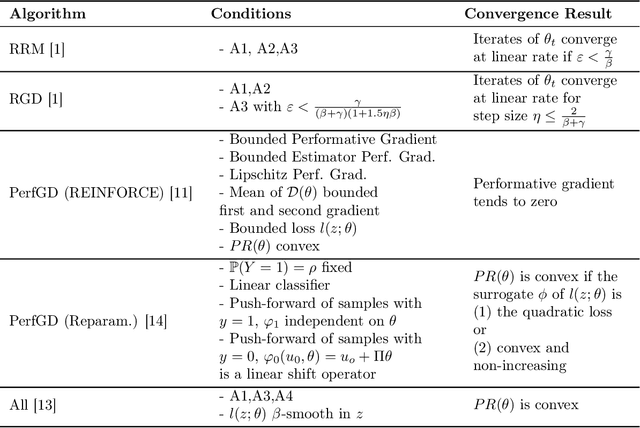

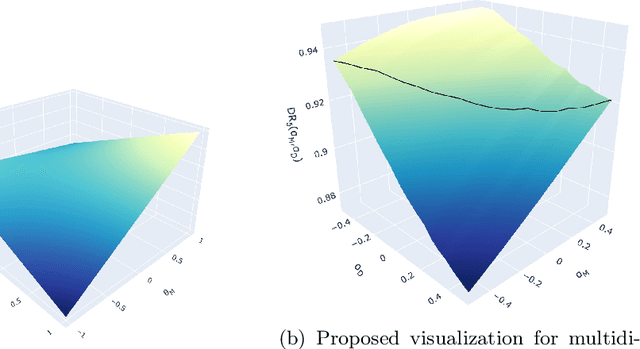

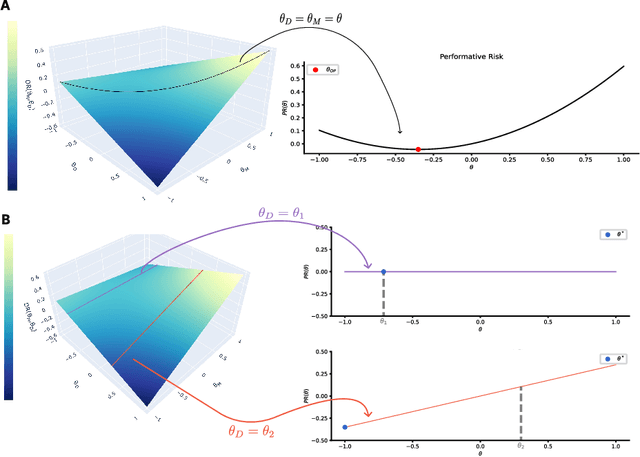

Abstract: Performative Prediction addresses scenarios where deploying a model induces a distribution shift in the input data, such as individuals modifying their features and reapplying for a bank loan after rejection. The literature has largely taken a theoretical perspective, giving mathematical guarantees for convergence (either to the stable or to the optimal point). We believe that visualization of the loss landscape can complement these theoretical advances with practical insights. Therefore, (1) we introduce a simple decoupled risk visualization method inspired by the two-step nature of performative prediction. Our approach visualizes the risk landscape with respect to two parameter vectors: model parameters and data parameters. We use this method to propose new properties of the points of interest, to examine how existing algorithms traverse the risk landscape, and to assess how they perform under more realistic conditions, including strategic classification with non-linear models. (2) Building on this decoupled risk visualization, we introduce a novel setting, extended Performative Prediction, which captures scenarios where the distribution reacts to a model different from the decision-making one, reflecting the reality that agents often lack full access to the deployed model.
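To make the idea of a risk surface over two parameter vectors concrete, here is a minimal sketch on a toy problem. It is not the authors' method or code: the Gaussian location-shift distribution, the squared loss, and every name below (`decoupled_risk`, `risk_grid`, `repeated_risk_minimization`, `mu`, `eps`, `sigma`) are assumptions chosen for illustration. The data are assumed to follow N(mu + eps * theta_data, sigma^2), so the expected squared loss of a constant prediction `theta_model` has a closed form, and the usual performative risk is the diagonal `theta_model == theta_data` of the grid.

```python
def decoupled_risk(theta_model, theta_data, mu=1.0, eps=0.5, sigma=1.0):
    """Expected squared loss of predicting theta_model when the data react
    to theta_data, i.e. z ~ N(mu + eps * theta_data, sigma^2) (toy assumption)."""
    shifted_mean = mu + eps * theta_data
    return (shifted_mean - theta_model) ** 2 + sigma ** 2


def risk_grid(values, **kwargs):
    """Risk surface: rows index model parameters, columns index data parameters."""
    return [[decoupled_risk(tm, td, **kwargs) for td in values] for tm in values]


def repeated_risk_minimization(theta0, steps=50, mu=1.0, eps=0.5):
    """Repeatedly refit the model on the distribution induced by the last model.
    For this toy problem the minimizer over theta_model is the shifted mean."""
    theta = theta0
    for _ in range(steps):
        theta = mu + eps * theta
    return theta


values = [i * 0.5 for i in range(9)]  # 0.0, 0.5, ..., 4.0
grid = risk_grid(values)
# The diagonal of the grid is the performative risk of deploying each value.
performative_risk = [grid[i][i] for i in range(len(values))]
stable_point = repeated_risk_minimization(theta0=0.0)
```

In this toy setting the iteration contracts towards mu / (1 - eps) whenever |eps| < 1, and plotting `grid` as a heatmap with the iterates overlaid gives a rough picture of how an algorithm moves across such a surface.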

A Review on Self-Supervised Learning for Time Series Anomaly Detection: Recent Advances and Open Challenges

Jan 25, 2025

Abstract: Time series anomaly detection presents various challenges due to the sequential and dynamic nature of time-dependent data. Traditional unsupervised methods frequently encounter difficulties in generalization, often overfitting to known normal patterns observed during training and struggling to adapt to unseen normality. In response to this limitation, self-supervised techniques for time series have garnered attention as a potential way to overcome this obstacle and enhance the performance of anomaly detectors. This paper presents a comprehensive review of recent methods that use self-supervised learning for time series anomaly detection. A taxonomy is proposed to categorize these methods based on their primary characteristics, facilitating a clear understanding of their diversity within this field. The information contained in this survey, along with additional details that will be periodically updated, is available in the following GitHub repository: https://github.com/Aitorzan3/Awesome-Self-Supervised-Time-Series-Anomaly-Detection.

Supervised Learning with Evolving Tasks and Performance Guarantees

Jan 09, 2025

Abstract: Multiple supervised learning scenarios are composed of a sequence of classification tasks. For instance, multi-task learning and continual learning aim to learn a sequence of tasks that is either fixed or grows over time. Existing techniques for learning tasks in a sequence are tailored to specific scenarios and lack adaptability to others. In addition, most existing techniques consider situations in which the order of the tasks in the sequence is not relevant. However, it is common for tasks in a sequence to be evolving, in the sense that consecutive tasks often have a higher similarity. This paper presents a learning methodology that is applicable to multiple supervised learning scenarios and adapts to evolving tasks. Unlike existing techniques, we provide computable tight performance guarantees and analytically characterize the increase in the effective sample size. Experiments on benchmark datasets show the performance improvement of the proposed methodology in multiple scenarios and the reliability of the presented performance guarantees.

Craftium: An Extensible Framework for Creating Reinforcement Learning Environments

Jul 04, 2024

Abstract: Most Reinforcement Learning (RL) environments are created by adapting existing physics simulators or video games. However, they usually lack the flexibility required for analyzing specific characteristics of RL methods often relevant to research. This paper presents Craftium, a novel framework for exploring and creating rich 3D visual RL environments that builds upon the Minetest game engine and the popular Gymnasium API. Minetest is built to be extended and can be used to easily create voxel-based 3D environments (often similar to Minecraft), while Gymnasium offers a simple and common interface for RL research. Craftium provides a platform that allows practitioners to create fully customized environments to suit their specific research requirements, ranging from simple visual tasks to infinite and procedurally generated worlds. We also provide five ready-to-use environments for benchmarking and as examples of how to develop new ones. The code and documentation are available at https://github.com/mikelma/craftium/.

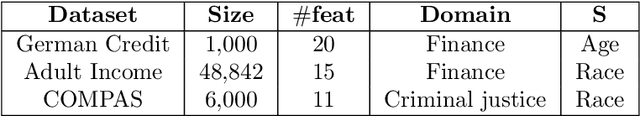

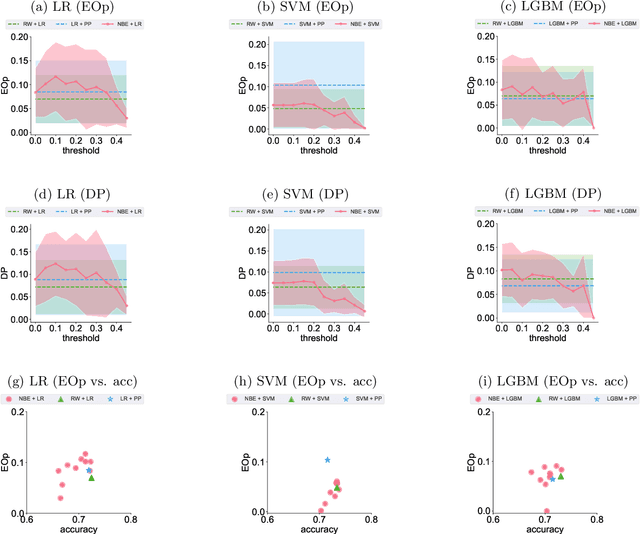

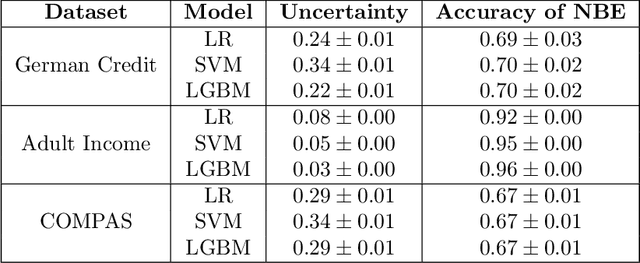

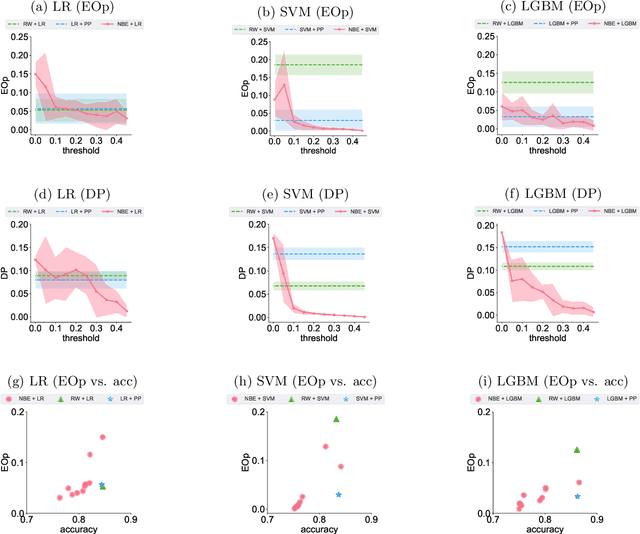

Dancing in the Shadows: Harnessing Ambiguity for Fairer Classifiers

Jun 27, 2024

Abstract: This paper introduces a novel approach to bolster algorithmic fairness in scenarios where sensitive information is only partially known. In particular, we propose to leverage instances whose identity is uncertain with regard to the sensitive attribute to train a conventional machine learning classifier. The enhanced fairness observed in the final predictions of this classifier highlights the promising potential of prioritizing ambiguity (i.e., non-normativity) as a means to improve fairness guarantees in real-world classification tasks.

Uncertainty-Aware Explanations Through Probabilistic Self-Explainable Neural Networks

Mar 20, 2024

Abstract: The lack of transparency of Deep Neural Networks continues to be a limitation that severely undermines their reliability and usage in high-stakes applications. Promising approaches to overcome such limitations are Prototype-Based Self-Explainable Neural Networks (PSENNs), whose predictions rely on the similarity between the input at hand and a set of prototypical representations of the output classes, therefore offering a deep, yet transparent-by-design, architecture. So far, such models have been designed by considering pointwise estimates for the prototypes, which remain fixed after the learning phase of the model. In this paper, we introduce a probabilistic reformulation of PSENNs, called Prob-PSENN, which replaces point estimates for the prototypes with probability distributions over their values. This not only provides a more flexible framework for end-to-end learning of prototypes, but can also capture the explanatory uncertainty of the model, which is a missing feature in previous approaches. In addition, since the prototypes determine both the explanation and the prediction, Prob-PSENNs allow us to detect when the model is making uninformed or uncertain predictions, and to obtain valid explanations for them. Our experiments demonstrate that Prob-PSENNs provide more meaningful and robust explanations than their non-probabilistic counterparts, thus enhancing the explainability and reliability of the models.

Time-dependent Probabilistic Generative Models for Disease Progression

Nov 15, 2023

Abstract: Electronic health records contain valuable information for monitoring patients' health trajectories over time. Disease progression models have been developed to understand the underlying patterns and dynamics of diseases using these data as sequences. However, analyzing temporal data from EHRs is challenging due to the variability and irregularities present in medical records. We propose a Markovian generative model of treatments developed to (i) model the irregular time intervals between medical events; (ii) classify treatments into subtypes based on the patient sequence of medical events and the time intervals between them; and (iii) segment treatments into subsequences of disease progression patterns. We assume that sequences have an associated structure of latent variables: a latent class representing the different subtypes of treatments; and a set of latent stages indicating the phase of progression of the treatments. We use the Expectation-Maximization algorithm to learn the model, which is efficiently solved with a dynamic programming-based method. Various parametric models have been employed to model the time intervals between medical events during the learning process, including the geometric, exponential, and Weibull distributions. The results demonstrate the effectiveness of our model in recovering the underlying model from data and accurately modeling the irregular time intervals between medical actions.
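The three interval distributions named in this abstract can be compared on a set of observed gaps through their log-likelihoods. The sketch below is only an illustration of those textbook densities, not the paper's model (which also includes latent classes and stages learned with EM). The function names and the example `intervals` are hypothetical, and the geometric distribution is assumed to be supported on 1, 2, 3, ...

```python
import math


def exponential_loglik(intervals, rate):
    """Log-likelihood under density rate * exp(-rate * t), t > 0."""
    return sum(math.log(rate) - rate * t for t in intervals)


def weibull_loglik(intervals, shape, scale):
    """Log-likelihood under density (k/s) * (t/s)^(k-1) * exp(-(t/s)^k), t > 0."""
    return sum(
        math.log(shape / scale)
        + (shape - 1.0) * math.log(t / scale)
        - (t / scale) ** shape
        for t in intervals
    )


def geometric_loglik(intervals, p):
    """Log-likelihood under P(T = t) = (1 - p)^(t - 1) * p for t = 1, 2, ..."""
    return sum((t - 1) * math.log(1.0 - p) + math.log(p) for t in intervals)


def exponential_mle(intervals):
    """Closed-form maximum-likelihood rate: one over the mean interval."""
    return len(intervals) / sum(intervals)


# Hypothetical gaps (in days) between consecutive medical events.
intervals = [2.0, 5.0, 1.0, 8.0]
rate_hat = exponential_mle(intervals)
ll_exponential = exponential_loglik(intervals, rate_hat)
# A Weibull with shape 1 and scale 1 / rate reduces to the exponential.
ll_weibull_shape_one = weibull_loglik(intervals, shape=1.0, scale=1.0 / rate_hat)
ll_geometric = geometric_loglik([2, 5, 1, 8], p=0.25)
```

A Weibull shape above or below 1 lets the event rate rise or fall with elapsed time, which the exponential cannot express; that flexibility is one plausible reason to compare several interval models.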

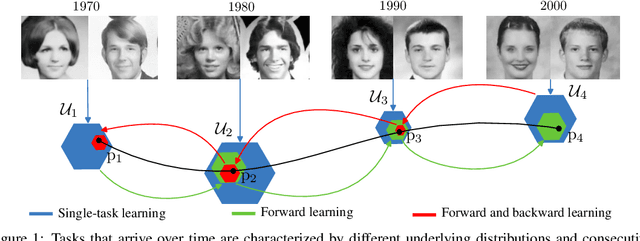

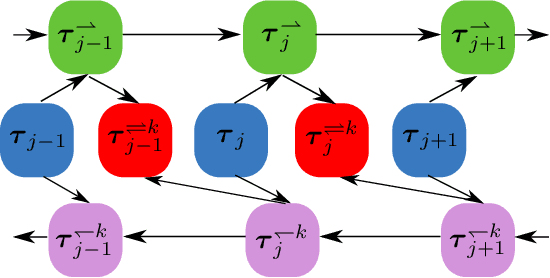

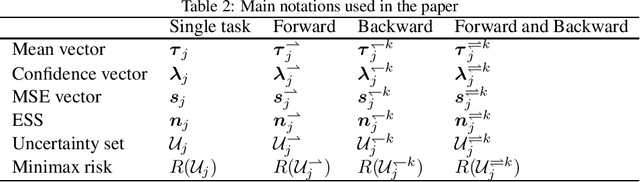

Minimax Forward and Backward Learning of Evolving Tasks with Performance Guarantees

Oct 24, 2023

Abstract: For a sequence of classification tasks that arrive over time, it is common that tasks are evolving in the sense that consecutive tasks often have a higher similarity. The incremental learning of a growing sequence of tasks holds promise to enable accurate classification even with few samples per task by leveraging information from all the tasks in the sequence (forward and backward learning). However, existing techniques developed for continual learning and concept drift adaptation are either designed for tasks with time-independent similarities or only aim to learn the last task in the sequence. This paper presents incremental minimax risk classifiers (IMRCs) that effectively exploit forward and backward learning and account for evolving tasks. In addition, we analytically characterize the performance improvement provided by forward and backward learning in terms of the tasks' expected quadratic change and the number of tasks. The experimental evaluation shows that IMRCs can result in a significant performance improvement, especially for reduced sample sizes.

Uncertainty in Fairness Assessment: Maintaining Stable Conclusions Despite Fluctuations

Feb 02, 2023

Abstract: Several recent works encourage the use of a Bayesian framework when assessing performance and fairness metrics of a classification algorithm in a supervised setting. We propose the Uncertainty Matters (UM) framework, which generalizes a Beta-Binomial approach to derive the posterior distribution of any combination of criteria, allowing stable performance assessment in a bias-aware setting. We suggest modeling the confusion matrix of each demographic group using a Multinomial distribution updated through a Bayesian procedure. We extend UM to be applicable under the popular K-fold cross-validation procedure. Experiments highlight the benefits of UM over classical evaluation frameworks regarding informativeness and stability.
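The idea of a Multinomial confusion matrix per group with a Bayesian update can be illustrated with a small sketch. This is not the UM framework's implementation: the Dirichlet prior (the standard conjugate choice, assumed here), the uniform prior strength, the confusion counts, and all function names are hypothetical. Posterior samples of the four cell probabilities are drawn for each group, and any derived criterion, such as the gap in true positive rate, inherits a posterior distribution from them.

```python
import random


def sample_dirichlet(alpha, rng):
    """One Dirichlet draw obtained by normalizing independent Gamma variates."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [d / total for d in draws]


def posterior_samples(counts, prior=1.0, n_samples=2000, seed=0):
    """Posterior over (TP, FN, FP, TN) cell probabilities for one group,
    assuming a symmetric Dirichlet(prior) prior on the Multinomial."""
    rng = random.Random(seed)
    alpha = [c + prior for c in counts]
    return [sample_dirichlet(alpha, rng) for _ in range(n_samples)]


def true_positive_rate(cells):
    tp, fn, _fp, _tn = cells
    return tp / (tp + fn)


# Hypothetical confusion counts (TP, FN, FP, TN) for two demographic groups.
group_a = posterior_samples((40, 10, 5, 45), seed=0)
group_b = posterior_samples((25, 25, 5, 45), seed=1)

# Posterior of the TPR gap and the probability that group A has the higher TPR.
tpr_gap = [true_positive_rate(a) - true_positive_rate(b)
           for a, b in zip(group_a, group_b)]
prob_a_higher = sum(gap > 0 for gap in tpr_gap) / len(tpr_gap)
```

Reporting a quantity such as `prob_a_higher`, or an interval over `tpr_gap`, instead of a single point estimate is one way to keep conclusions stable when the counts are small.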

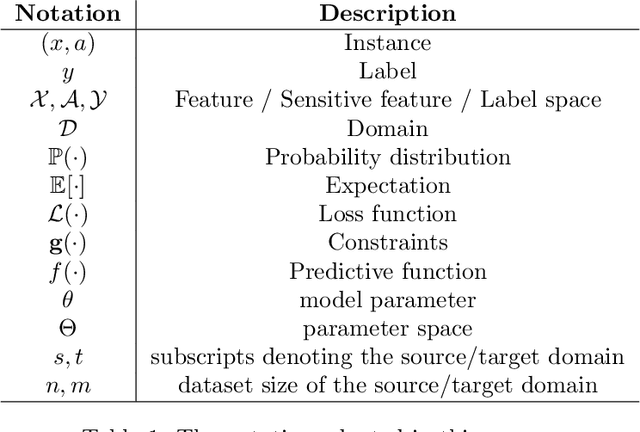

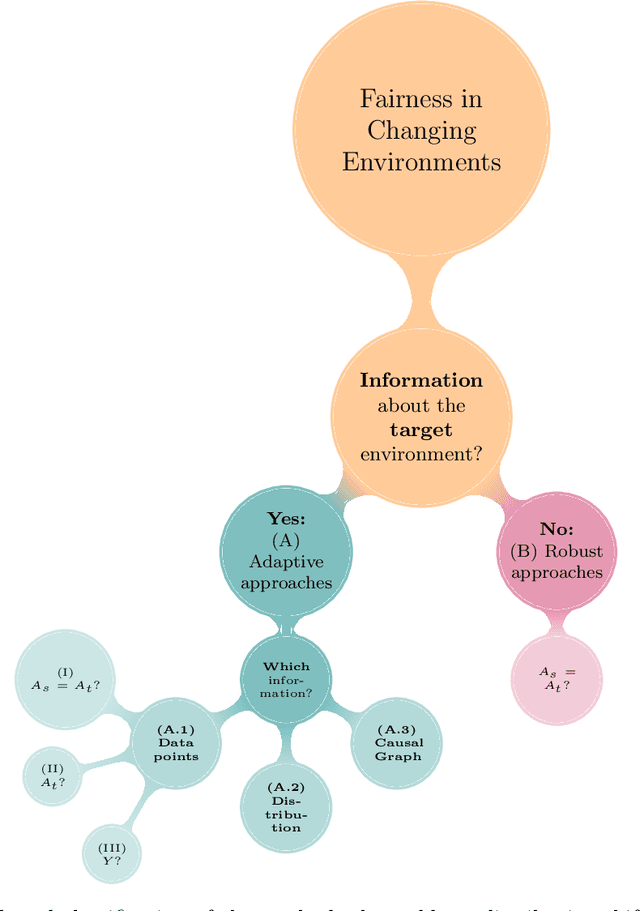

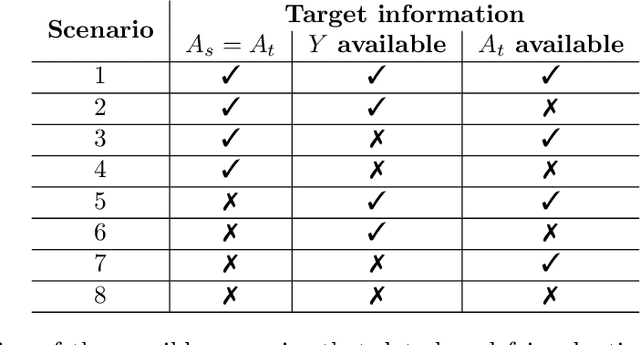

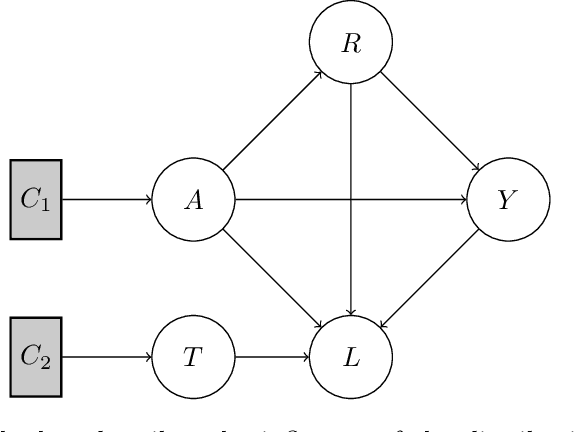

A Survey on Preserving Fairness Guarantees in Changing Environments

Nov 14, 2022

Abstract: Human lives are increasingly being affected by the outcomes of automated decision-making systems, and it is essential for the latter to be not only accurate but also fair. The literature on algorithmic fairness has grown considerably over the last decade, with most approaches evaluated under the strong assumption that the train and test samples are independently and identically drawn from the same underlying distribution. However, in practice, the training and deployment environments differ, which compromises the performance of the decision-making algorithm as well as its fairness guarantees on the deployment data. A rapidly growing line of research studies how to preserve fairness guarantees when the data-generating processes differ between the source (train) and target (test) domains. With this survey, we aim to provide a wide and unifying overview of the topic. To that end, we propose a taxonomy of the existing approaches for fair classification under distribution shift, highlight benchmarking alternatives, point out the relation to other similar research fields, and finally identify future avenues of research.