Nirmalya Roy

A Survey on Efficient Vision-Language Models

Apr 13, 2025

Abstract: Vision-language models (VLMs) integrate visual and textual information, enabling a wide range of applications such as image captioning and visual question answering, making them crucial for modern AI systems. However, their high computational demands pose challenges for real-time applications. This has led to a growing focus on developing efficient vision-language models. In this survey, we review key techniques for optimizing VLMs on edge and resource-constrained devices. We also explore compact VLM architectures and frameworks, and provide detailed insights into the performance-memory trade-offs of efficient VLMs. Furthermore, we establish a GitHub repository at https://github.com/MPSCUMBC/Efficient-Vision-Language-Models-A-Survey to compile all surveyed papers, which we will actively update. Our objective is to foster deeper research in this area.

CAM-Seg: A Continuous-valued Embedding Approach for Semantic Image Generation

Mar 19, 2025

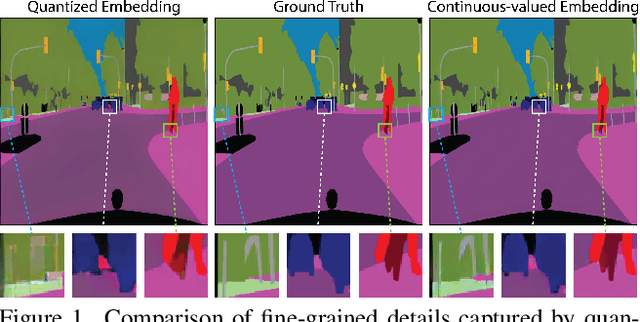

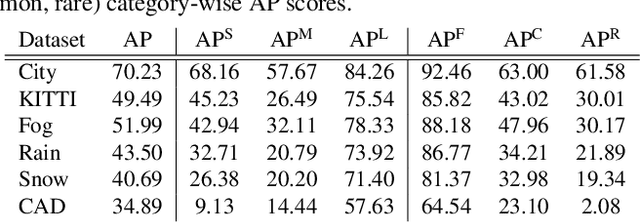

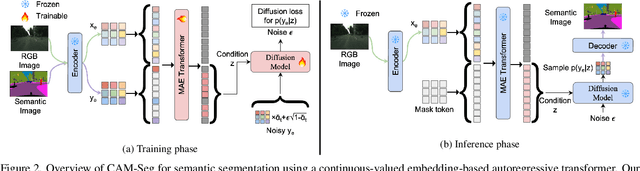

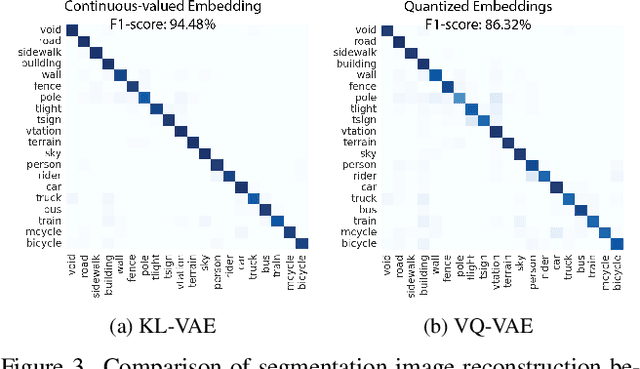

Abstract: Traditional transformer-based semantic segmentation relies on quantized embeddings. However, our analysis reveals that autoencoder accuracy on segmentation masks using quantized embeddings (e.g., VQ-VAE) is 8% lower than with continuous-valued embeddings (e.g., KL-VAE). Motivated by this, we propose a continuous-valued embedding framework for semantic segmentation. By reformulating semantic mask generation as a continuous image-to-embedding diffusion process, our approach eliminates the need for discrete latent representations while preserving fine-grained spatial and semantic details. Our key contribution is a diffusion-guided autoregressive transformer that learns a continuous semantic embedding space by modeling long-range dependencies in image features. Our framework is a unified architecture combining a VAE encoder for continuous feature extraction, a diffusion-guided transformer for conditioned embedding generation, and a VAE decoder for semantic mask reconstruction. The continuity of the embedding space enables zero-shot domain adaptation. Experiments across diverse datasets (e.g., Cityscapes and domain-shifted variants) demonstrate state-of-the-art robustness to distribution shifts, including adverse weather (e.g., fog, snow) and viewpoint variations. Our model also exhibits strong noise resilience, achieving robust performance ($\approx$ 95% AP compared to baseline) under Gaussian noise, moderate motion blur, and moderate brightness/contrast variations, while experiencing only a moderate impact ($\approx$ 90% AP compared to baseline) from 50% salt-and-pepper noise and from saturation and hue shifts. Code available: https://github.com/mahmed10/CAMSS.git

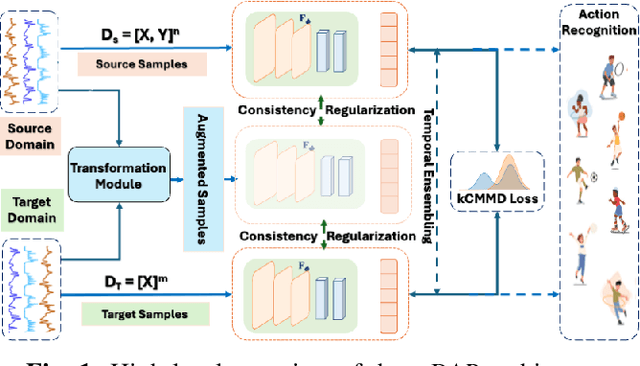

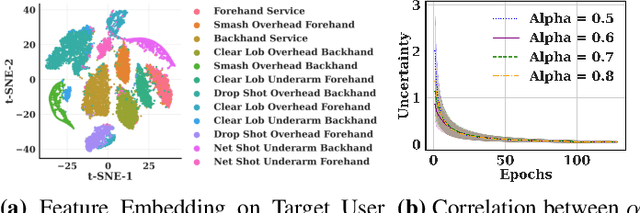

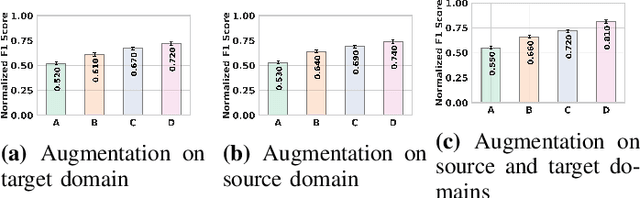

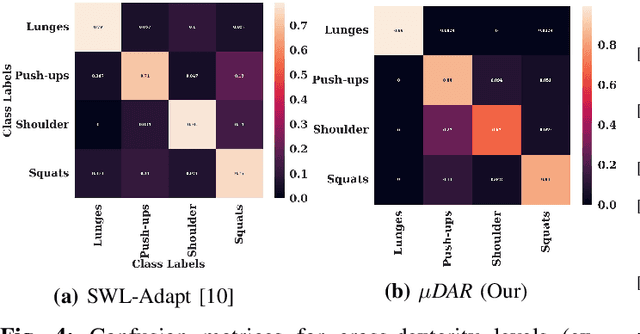

Unsupervised Domain Adaptation for Action Recognition via Self-Ensembling and Conditional Embedding Alignment

Oct 23, 2024

Abstract: Recent advancements in deep learning-based wearable human action recognition (wHAR) have improved the capture and classification of complex motions, but adoption remains limited due to the lack of expert annotations and domain discrepancies from user variations. Limited annotations hinder the model's ability to generalize to out-of-distribution samples. While data augmentation can improve generalizability, unsupervised augmentation techniques must be applied carefully to avoid introducing noise. Unsupervised domain adaptation (UDA) addresses domain discrepancies by aligning conditional distributions with labeled target samples, but vanilla pseudo-labeling can lead to error propagation. To address these challenges, we propose $\mu$DAR, a novel joint optimization architecture composed of three functions: (i) a consistency regularizer between augmented samples to improve model classification generalizability, (ii) a temporal ensemble for robust pseudo-label generation, and (iii) conditional distribution alignment to improve domain generalizability. The temporal ensemble works by aggregating predictions from past epochs to smooth out noisy pseudo-label predictions, which are then used in the conditional distribution alignment module to minimize the kernel-based class-wise conditional maximum mean discrepancy ($k$CMMD) between the source and target feature spaces to learn a domain-invariant embedding. The consistency-regularized augmentations ensure that multiple augmentations of the same sample share the same labels; this results in (a) strong generalization with limited source domain samples and (b) consistent pseudo-label generation in target samples. The novel integration of these three modules in $\mu$DAR results in an average macro-F1 score improvement of $\approx$ 4-12% over six state-of-the-art UDA methods on four benchmark wHAR datasets.
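
The temporal ensemble described in this abstract can be illustrated with a minimal sketch. It assumes an exponential moving average with bias correction, which is one common way to aggregate predictions across epochs; the abstract does not state the exact rule $\mu$DAR uses. The names `update_ensemble` and `pseudo_label` and the values of `alpha` and `threshold` are hypothetical.

```python
def update_ensemble(ensemble, probs, alpha=0.6):
    """Blend this epoch's class probabilities into the running average."""
    return [alpha * z + (1.0 - alpha) * p for z, p in zip(ensemble, probs)]


def pseudo_label(ensemble, epoch, alpha=0.6, threshold=0.6):
    """Return (label or None, bias-corrected probabilities)."""
    # Early epochs are biased toward the all-zero start; dividing by
    # (1 - alpha**epoch) rescales the average so it sums to one again.
    correction = 1.0 - alpha ** epoch
    corrected = [z / correction for z in ensemble]
    confidence = max(corrected)
    # Assumed rule: only keep a pseudo-label when the ensemble is confident.
    label = corrected.index(confidence) if confidence >= threshold else None
    return label, corrected


# One target sample over three epochs; the second prediction is a noisy flip.
epoch_predictions = [[0.6, 0.4], [0.3, 0.7], [0.8, 0.2]]
ensemble = [0.0, 0.0]
for probs in epoch_predictions:
    ensemble = update_ensemble(ensemble, probs)
label, corrected = pseudo_label(ensemble, epoch=len(epoch_predictions))
```

In this toy run the single noisy epoch does not flip the pseudo-label: the aggregated prediction still favors class 0. The $k$CMMD alignment step is not covered by this sketch.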

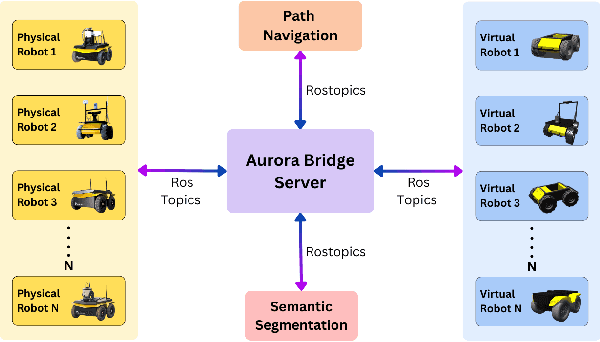

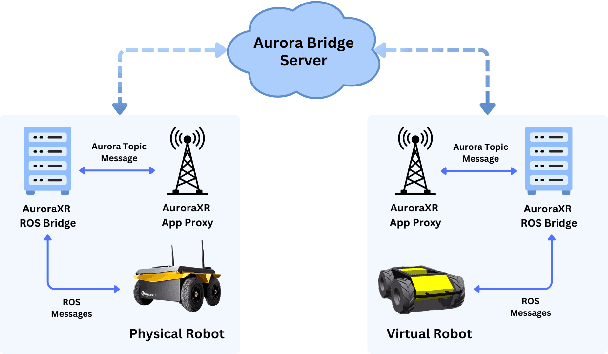

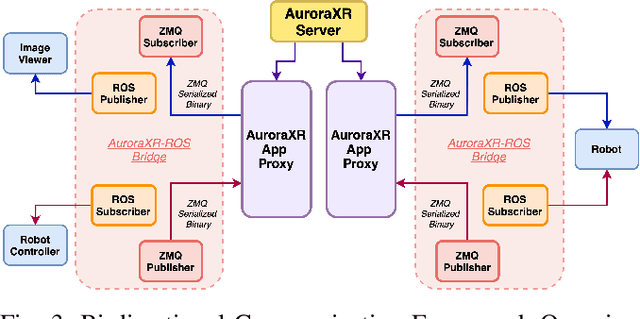

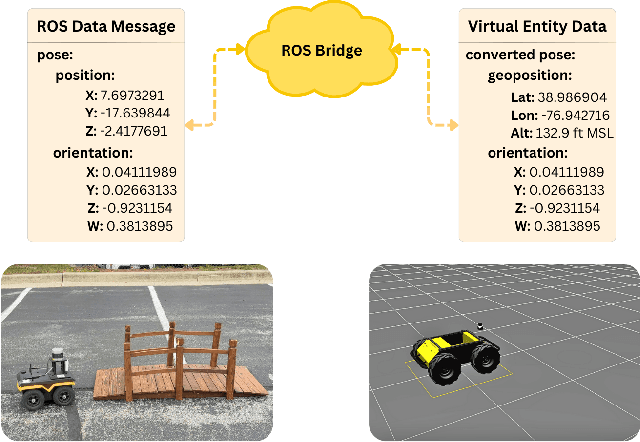

SERN: Simulation-Enhanced Realistic Navigation for Multi-Agent Robotic Systems in Contested Environments

Oct 22, 2024

Abstract: The increasing deployment of autonomous systems in complex environments necessitates efficient communication and task completion among multiple agents. This paper presents SERN (Simulation-Enhanced Realistic Navigation), a novel framework integrating virtual and physical environments for real-time collaborative decision-making in multi-robot systems. SERN addresses key challenges in asset deployment and coordination through a bi-directional communication framework using the AuroraXR ROS Bridge. Our approach advances the SOTA through accurate real-world representation in virtual environments using the Unity high-fidelity simulator; synchronization of physical and virtual robot movements; efficient ROS data distribution between remote locations; and integration of SOTA semantic segmentation for enhanced environmental perception. Our evaluations show a 15% to 24% improvement in latency and up to a 15% increase in processing efficiency compared to traditional ROS setups. Real-world and virtual simulation experiments with multiple robots demonstrate synchronization accuracy, achieving less than 5 cm positional error and under 2-degree rotational error. These results highlight SERN's potential to enhance situational awareness and multi-agent coordination in diverse, contested environments.

QuasiNav: Asymmetric Cost-Aware Navigation Planning with Constrained Quasimetric Reinforcement Learning

Oct 22, 2024

Abstract: Autonomous navigation in unstructured outdoor environments is inherently challenging due to the presence of asymmetric traversal costs, such as varying energy expenditures for uphill versus downhill movement. Traditional reinforcement learning methods often assume symmetric costs, which can lead to suboptimal navigation paths and increased safety risks in real-world scenarios. In this paper, we introduce QuasiNav, a novel reinforcement learning framework that integrates quasimetric embeddings to explicitly model asymmetric costs and guide efficient, safe navigation. QuasiNav formulates the navigation problem as a constrained Markov decision process (CMDP) and employs quasimetric embeddings to capture directionally dependent costs, allowing for a more accurate representation of the terrain. This approach is combined with adaptive constraint tightening within a constrained policy optimization framework to dynamically enforce safety constraints during learning. We validate QuasiNav across three challenging navigation scenarios (undulating terrains, asymmetric hill traversal, and directionally dependent terrain traversal), demonstrating its effectiveness in both simulated and real-world environments. Experimental results show that QuasiNav significantly outperforms conventional methods, achieving higher success rates, improved energy efficiency, and better adherence to safety constraints.
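
To make the idea of an asymmetric (quasimetric) cost concrete, here is a small hand-written toy. It is not QuasiNav's learned embedding: the function `traversal_cost`, the `uphill_weight` parameter, and the one-dimensional terrain are all assumptions chosen for illustration. The cost is zero from a point to itself and obeys the triangle inequality, but differs by direction.

```python
def traversal_cost(start, goal, uphill_weight=2.0):
    """Asymmetric cost between (position, height) points on a 1-D slope."""
    (x_start, h_start), (x_goal, h_goal) = start, goal
    distance = abs(x_goal - x_start)
    climb = max(0.0, h_goal - h_start)  # descending adds no extra cost
    return distance + uphill_weight * climb


valley, ledge, ridge = (0.0, 0.0), (1.0, 5.0), (3.0, 2.0)
uphill = traversal_cost(valley, ridge)    # climbing 2 units over 3 units
downhill = traversal_cost(ridge, valley)  # same path in reverse
detour = traversal_cost(valley, ledge) + traversal_cost(ledge, ridge)
```

Here going from `valley` to `ridge` costs more than the return trip, and routing through the higher `ledge` is never cheaper than the direct path. A symmetric distance cannot express this.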

EnCoMP: Enhanced Covert Maneuver Planning using Offline Reinforcement Learning

Mar 29, 2024

Abstract: Cover navigation in complex environments is a critical challenge for autonomous robots, requiring the identification and utilization of environmental cover while maintaining efficient navigation. We propose an enhanced navigation system that enables robots to identify and utilize natural and artificial environmental features as cover, thereby minimizing exposure to potential threats. Our perception pipeline leverages LiDAR data to generate high-fidelity cover maps and potential threat maps, providing a comprehensive understanding of the surrounding environment. We train an offline reinforcement learning model using a diverse dataset collected from real-world environments, learning a robust policy that evaluates the quality of candidate actions based on their ability to maximize cover utilization, minimize exposure to threats, and reach the goal efficiently. Extensive real-world experiments demonstrate the superiority of our approach in terms of success rate, cover utilization, exposure minimization, and navigation efficiency compared to state-of-the-art methods.

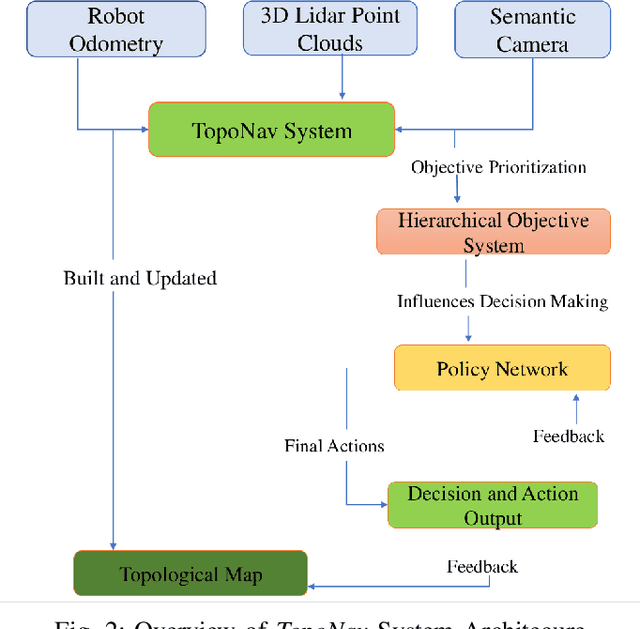

TopoNav: Topological Navigation for Efficient Exploration in Sparse Reward Environments

Feb 06, 2024

Abstract: Autonomous robots exploring unknown areas face a significant challenge: navigating effectively without prior maps and with limited external feedback. This challenge intensifies in sparse reward environments, where traditional exploration techniques often fail. In this paper, we introduce TopoNav, a novel framework that empowers robots to overcome these constraints and achieve efficient, adaptable, and goal-oriented exploration. TopoNav's fundamental building blocks are active topological mapping, intrinsic reward mechanisms, and hierarchical objective prioritization. Throughout its exploration, TopoNav constructs a dynamic topological map that captures key locations and pathways. It utilizes intrinsic rewards to guide the robot towards designated sub-goals within this map, fostering structured exploration even in sparse reward settings. To ensure efficient navigation, TopoNav employs the Hierarchical Objective-Driven Active Topologies framework, enabling the robot to prioritize immediate tasks like obstacle avoidance while maintaining focus on the overall goal. We demonstrate TopoNav's effectiveness in simulated environments that replicate real-world conditions. Our results reveal significant improvements in exploration efficiency, navigational accuracy, and adaptability to unforeseen obstacles, showcasing its potential to revolutionize autonomous exploration in a wide range of applications, including search and rescue, environmental monitoring, and planetary exploration.

Enhancing Robotic Navigation: An Evaluation of Single and Multi-Objective Reinforcement Learning Strategies

Dec 14, 2023

Abstract: This study presents a comparative analysis between single-objective and multi-objective reinforcement learning methods for training a robot to navigate effectively to an end goal while efficiently avoiding obstacles. Traditional reinforcement learning techniques, namely Deep Q-Network (DQN), Deep Deterministic Policy Gradient (DDPG), and Twin Delayed DDPG (TD3), have been evaluated using the Gazebo simulation framework in a variety of environments with parameters such as random goal and robot starting locations. These methods provide a numerical reward to the robot, offering an indication of action quality in relation to the goal. However, their limitations become apparent in complex settings where multiple, potentially conflicting, objectives are present. To address these limitations, we propose an approach employing Multi-Objective Reinforcement Learning (MORL). By modifying the reward function to return a vector of rewards, each pertaining to a distinct objective, the robot learns a policy that effectively balances the different goals, aiming to achieve a Pareto optimal solution. This comparative study highlights the potential for MORL in complex, dynamic robotic navigation tasks, setting the stage for future investigations into more adaptable and robust robotic behaviors.
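
The vector-valued reward and the notion of Pareto optimality mentioned in this abstract can be sketched as follows. This is an illustration only: the two objectives (goal progress and obstacle clearance), the candidate names, and their return values are made up and do not come from the study.

```python
def dominates(a, b):
    """True if return vector a is at least as good as b everywhere and better somewhere."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(candidates):
    """Names of candidates whose return vectors no other candidate dominates."""
    return sorted(
        name
        for name, value in candidates.items()
        if not any(dominates(other, value) for other in candidates.values())
    )


# Hypothetical per-policy returns: (goal progress, obstacle clearance).
candidates = {
    "direct": (0.9, 0.2),
    "cautious": (0.5, 0.8),
    "timid": (0.4, 0.7),
    "reckless": (0.9, 0.1),
}
front = pareto_front(candidates)
```

With a scalar reward these four policies would be forced onto a single ranking. With a reward vector, `direct` and `cautious` both remain on the front because each is best on one objective, while `timid` and `reckless` are dominated.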

CoverNav: Cover Following Navigation Planning in Unstructured Outdoor Environment with Deep Reinforcement Learning

Aug 12, 2023

Abstract: Autonomous navigation in offroad environments has been extensively studied in the robotics field. However, navigation in covert situations where an autonomous vehicle needs to remain hidden from outside observers remains an underexplored area. In this paper, we propose a novel Deep Reinforcement Learning (DRL) based algorithm, called CoverNav, for identifying covert and navigable trajectories with minimal cost in offroad terrains and jungle environments in the presence of observers. CoverNav focuses on unmanned ground vehicles seeking shelter and taking cover while safely navigating to a predefined destination. Our proposed DRL method computes a local cost map that helps distinguish which path will grant the maximal covertness while maintaining a low-cost trajectory, using an elevation map generated from 3D point cloud data, the robot's pose, and directed goal information. CoverNav helps robot agents learn to favor low-elevation terrain through a reward function that penalizes them proportionately when they encounter high elevation. If an observer is spotted, CoverNav enables the robot to select natural obstacles (e.g., rocks, houses, disabled vehicles, trees, etc.) and use them as shelters to hide behind. We evaluate CoverNav using the Unity simulation environment and show that it guarantees dynamically feasible velocities in the terrain when fed with an elevation map generated by another DRL-based navigation algorithm. Additionally, we evaluate CoverNav's effectiveness in achieving a maximum goal distance of 12 meters and its success rate in different elevation scenarios with and without cover objects. We observe competitive performance comparable to state-of-the-art (SOTA) methods without compromising accuracy.

Novel Categories Discovery from probability matrix perspective

Jul 07, 2023

Abstract: Novel Categories Discovery (NCD) tackles the open-world problem of classifying known and clustering novel categories based on the class semantics using partial class space annotated data. Unlike traditional pseudo-labeling and retraining approaches, we investigate NCD from the novel data probability matrix perspective. We leverage the connection between NCD novel data sampling and the provided novel-class Multinoulli (categorical) distribution, and hypothesize that semantic-based novel data clustering can be achieved implicitly by learning their class distribution. We propose novel constraints on first-order (mean) and second-order (covariance) statistics of probability matrix features while applying instance-wise information constraints. In particular, we align the neuron distribution (activation patterns) under a large batch of Monte-Carlo novel data sampling by matching their empirical feature mean and covariance with the provided Multinoulli distribution. Simultaneously, we minimize entropy and enforce prediction consistency for each instance. Our simple approach successfully realizes semantic-based novel data clustering, provided there is semantic similarity between labeled and unlabeled classes. We demonstrate the discriminative capacity of our approaches in image and video modalities. Moreover, we perform extensive ablation studies regarding data, networks, and our framework components to provide better insights. Our approach maintains ~94%, ~93%, and ~85% classification accuracy on labeled data while achieving ~90%, ~84%, and ~72% clustering accuracy for novel categories on the CIFAR-10, UCF101, and MPSC-ARL datasets, matching state-of-the-art approaches without any external clustering.
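
The first- and second-order matching described in this abstract can be illustrated with a small sketch. For a one-hot draw from a Multinoulli distribution with class probabilities $\pi$, the mean is $\pi$ and the covariance is $\mathrm{diag}(\pi) - \pi\pi^\top$. The squared-distance gap below is an assumed stand-in for the paper's constraints, and the function names and toy batches are hypothetical.

```python
def multinoulli_stats(pi):
    """Mean and covariance of a one-hot draw with class probabilities pi."""
    k = len(pi)
    cov = [[(pi[i] if i == j else 0.0) - pi[i] * pi[j] for j in range(k)]
           for i in range(k)]
    return list(pi), cov


def batch_stats(rows):
    """Empirical mean and population covariance of a batch of row vectors."""
    n, k = len(rows), len(rows[0])
    mean = [sum(r[j] for r in rows) / n for j in range(k)]
    cov = [[sum((r[i] - mean[i]) * (r[j] - mean[j]) for r in rows) / n
            for j in range(k)] for i in range(k)]
    return mean, cov


def stat_gap(rows, pi):
    """Squared distance between batch statistics and the Multinoulli target."""
    (mean, cov), (t_mean, t_cov) = batch_stats(rows), multinoulli_stats(pi)
    k = len(pi)
    gap = sum((mean[j] - t_mean[j]) ** 2 for j in range(k))
    gap += sum((cov[i][j] - t_cov[i][j]) ** 2 for i in range(k) for j in range(k))
    return gap


pi = [0.5, 0.3, 0.2]
# Confident predictions in the target proportions versus a collapsed uniform output.
one_hot = [[1.0, 0.0, 0.0]] * 5 + [[0.0, 1.0, 0.0]] * 3 + [[0.0, 0.0, 1.0]] * 2
uniform = [[1 / 3, 1 / 3, 1 / 3]] * 10
confident_gap = stat_gap(one_hot, pi)
collapsed_gap = stat_gap(uniform, pi)
```

The gap is essentially zero when the batch of predictions is confident and follows the target class proportions, and it is clearly positive when every prediction collapses to the uniform vector. The entropy and consistency terms mentioned in the abstract are not modeled here.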
