Sanjay Purushotham

Multi-Speaker Conversational Audio Deepfake: Taxonomy, Dataset and Pilot Study

Jan 30, 2026

Abstract:The rapid advances in text-to-speech (TTS) technologies have made audio deepfakes increasingly realistic and accessible, raising significant security and trust concerns. While existing research has largely focused on detecting single-speaker audio deepfakes, real-world malicious applications in multi-speaker conversational settings are also emerging as a major, underexplored threat. To address this gap, we propose a conceptual taxonomy of multi-speaker conversational audio deepfakes, distinguishing between partial manipulations (one or multiple speakers altered) and full manipulations (entire conversations synthesized). As a first step, we introduce a new Multi-speaker Conversational Audio Deepfakes Dataset (MsCADD) of 2,830 audio clips containing real and fully synthetic two-speaker conversations, generated using VITS and SoundStorm-based NotebookLM models to simulate natural dialogue with variations in speaker gender and conversational spontaneity. MsCADD is limited to text-to-speech (TTS) deepfakes. We benchmark three neural baseline models (LFCC-LCNN, RawNet2, and Wav2Vec 2.0) on this dataset and report performance in terms of F1 score, accuracy, true positive rate (TPR), and true negative rate (TNR). The results show that these baseline models provide a useful benchmark, but they also highlight a significant gap in multi-speaker deepfake research: reliably detecting synthetic voices under varied conversational dynamics. Our dataset and benchmarks provide a foundation for future research on deepfake detection in conversational scenarios, a highly underexplored area that is also a major threat to trustworthy information in audio settings. The MsCADD dataset is publicly available to support reproducibility and benchmarking by the research community.
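The four metrics named in the abstract above can all be derived from a binary confusion matrix. The following is a minimal sketch rather than the authors' evaluation code: the function name `detection_metrics`, the toy labels, and the choice of treating the synthetic (fake) class as the positive class are all assumptions made for illustration.

```python
def detection_metrics(y_true, y_pred):
    """Accuracy, F1, TPR, and TNR for binary labels.

    Assumption: 1 = synthetic (positive class), 0 = real (negative class).
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    tpr = tp / (tp + fn) if (tp + fn) else 0.0        # recall on synthetic clips
    tnr = tn / (tn + fp) if (tn + fp) else 0.0        # recall on real clips
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    f1 = (2 * precision * tpr / (precision + tpr)) if (precision + tpr) else 0.0
    return {"accuracy": accuracy, "f1": f1, "tpr": tpr, "tnr": tnr}


# Hypothetical toy labels, not taken from MsCADD.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]
metrics = detection_metrics(y_true, y_pred)
print(metrics)
```

Reporting TPR and TNR alongside accuracy makes it visible when a detector does well on one class only, which a single accuracy figure can hide.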

Cloud Optical Thickness Retrievals Using Angle Invariant Attention Based Deep Learning Models

May 30, 2025

Abstract:Cloud Optical Thickness (COT) is a critical cloud property influencing Earth's climate, weather, and radiation budget. Satellite radiance measurements enable global COT retrieval, but challenges like 3D cloud effects, viewing angles, and atmospheric interference must be addressed to ensure accurate estimation. Traditionally, the Independent Pixel Approximation (IPA) method, which treats individual pixels independently, has been used for COT estimation. However, IPA introduces significant bias due to its simplified assumptions. Recently, deep learning-based models have shown improved performance over IPA but lack robustness, as they are sensitive to variations in radiance intensity, distortions, and cloud shadows. These models also introduce substantial errors in COT estimation under different solar and viewing zenith angles. To address these challenges, we propose a novel angle-invariant, attention-based deep model called Cloud-Attention-Net with Angle Coding (CAAC). Our model leverages attention mechanisms and angle embeddings to account for satellite viewing geometry and 3D radiative transfer effects, enabling more accurate retrieval of COT. Additionally, our multi-angle training strategy ensures angle invariance. Through comprehensive experiments, we demonstrate that CAAC significantly outperforms existing state-of-the-art deep learning models, reducing cloud property retrieval errors by at least a factor of nine.

Joint Retrieval of Cloud properties using Attention-based Deep Learning Models

Apr 09, 2025

Abstract:Accurate cloud property retrieval is vital for understanding cloud behavior and its impact on climate, including applications in weather forecasting, climate modeling, and estimating Earth's radiation balance. The Independent Pixel Approximation (IPA), a widely used physics-based approach, simplifies radiative transfer calculations by assuming each pixel is independent of its neighbors. While computationally efficient, IPA has significant limitations, such as inaccuracies from 3D radiative effects, errors at cloud edges, and ineffectiveness for overlapping or heterogeneous cloud fields. Recent AI/ML-based deep learning models have improved retrieval accuracy by leveraging spatial relationships across pixels. However, these models are often memory-intensive, retrieve only a single cloud property, or struggle with joint property retrievals. To overcome these challenges, we introduce CloudUNet with Attention Module (CAM), a compact UNet-based model that employs attention mechanisms to reduce errors in thick, overlapping cloud regions and a specialized loss function for joint retrieval of Cloud Optical Thickness (COT) and Cloud Effective Radius (CER). Experiments on a Large Eddy Simulation (LES) dataset show that our CAM model outperforms state-of-the-art deep learning methods, reducing mean absolute errors (MAE) by 34% for COT and 42% for CER, and achieving 76% and 86% lower MAE for COT and CER retrievals compared to the IPA method.

CAM-Seg: A Continuous-valued Embedding Approach for Semantic Image Generation

Mar 19, 2025

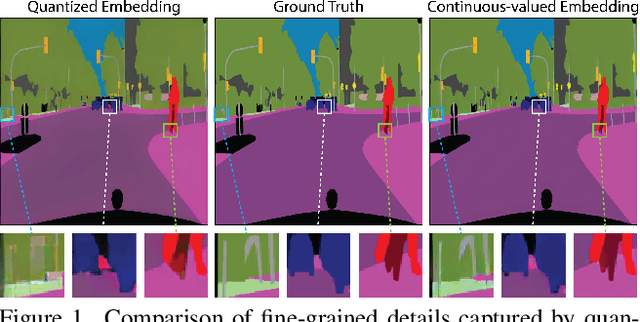

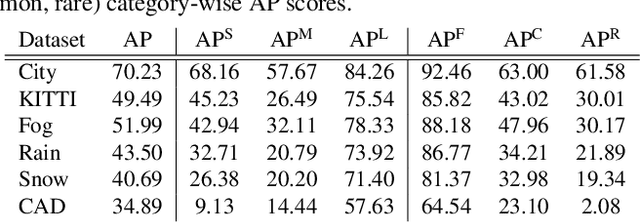

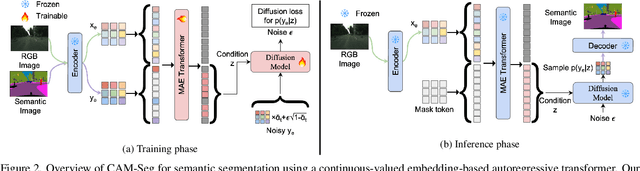

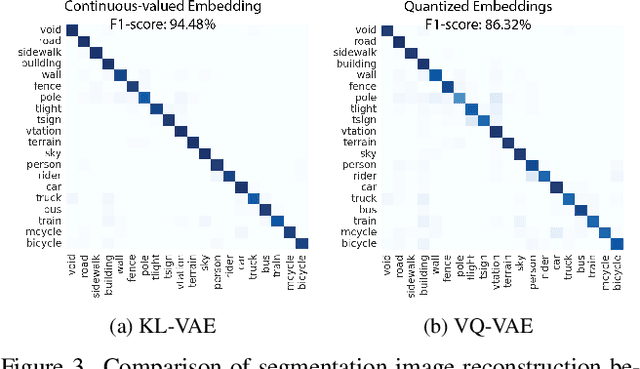

Abstract:Traditional transformer-based semantic segmentation relies on quantized embeddings. However, our analysis reveals that autoencoder accuracy on segmentation masks using quantized embeddings (e.g., VQ-VAE) is 8% lower than with continuous-valued embeddings (e.g., KL-VAE). Motivated by this, we propose a continuous-valued embedding framework for semantic segmentation. By reformulating semantic mask generation as a continuous image-to-embedding diffusion process, our approach eliminates the need for discrete latent representations while preserving fine-grained spatial and semantic details. Our key contribution is a diffusion-guided autoregressive transformer that learns a continuous semantic embedding space by modeling long-range dependencies in image features. Our framework is a unified architecture combining a VAE encoder for continuous feature extraction, a diffusion-guided transformer for conditioned embedding generation, and a VAE decoder for semantic mask reconstruction. The continuity of the embedding space enables zero-shot domain adaptation. Experiments across diverse datasets (e.g., Cityscapes and domain-shifted variants) demonstrate state-of-the-art robustness to distribution shifts, including adverse weather (e.g., fog, snow) and viewpoint variations. Our model also exhibits strong noise resilience, achieving robust performance ($\approx$ 95% AP compared to baseline) under Gaussian noise, moderate motion blur, and moderate brightness/contrast variations, while experiencing only a moderate impact ($\approx$ 90% AP compared to baseline) from 50% salt-and-pepper noise and from saturation and hue shifts. Code available: https://github.com/mahmed10/CAMSS.git

Building Machine Learning Challenges for Anomaly Detection in Science

Mar 03, 2025

Abstract:Scientific discoveries are often made by finding a pattern or object that was not predicted by the known rules of science. Oftentimes, these anomalous events or objects that do not conform to the norms are an indication that the rules of science governing the data are incomplete, and something new needs to be present to explain these unexpected outliers. The challenge of finding anomalies can be confounding since it requires codifying a complete knowledge of the known scientific behaviors and then projecting these known behaviors on the data to look for deviations. When utilizing machine learning, this presents a particular challenge since we require that the model not only understands scientific data perfectly but also recognizes when the data is inconsistent and out of the scope of its trained behavior. In this paper, we present three datasets aimed at developing machine learning-based anomaly detection for disparate scientific domains covering astrophysics, genomics, and polar science. We present the different datasets along with a scheme to make machine learning challenges around the three datasets findable, accessible, interoperable, and reusable (FAIR). Furthermore, we present an approach that generalizes to future machine learning challenges, enabling the possibility of large, more compute-intensive challenges that can ultimately lead to scientific discovery.

gWaveNet: Classification of Gravity Waves from Noisy Satellite Data using Custom Kernel Integrated Deep Learning Method

Aug 26, 2024

Abstract:Atmospheric gravity waves occur in the Earth's atmosphere and are caused by an interplay between gravity and buoyancy forces. These waves have profound impacts on various aspects of the atmosphere, including the patterns of precipitation, cloud formation, ozone distribution, aerosols, and pollutant dispersion. Therefore, understanding gravity waves is essential to comprehend and monitor changes in a wide range of atmospheric behaviors. Limited studies have been conducted to identify gravity waves from satellite data using machine learning techniques. In particular, doing so without applying noise removal techniques remains an underexplored area of research. This study presents a novel kernel design aimed at identifying gravity waves within satellite images. The proposed kernel is integrated into a deep convolutional neural network, denoted as gWaveNet. Our proposed model detects images containing gravity waves from noisy satellite data without any feature engineering. The empirical results show that our model outperforms related approaches, achieving over 98% training accuracy and over 94% test accuracy, which, to our knowledge, is the best result for gravity wave detection at the time of this work. We open sourced our code at https://rb.gy/qn68ku.

A Generative Approach for Image Registration of Visible-Thermal (VT) Cancer Faces

Aug 23, 2023

Abstract:Since thermal imagery offers a unique modality to investigate pain, the U.S. National Institutes of Health (NIH) has collected a large and diverse set of cancer patient facial thermograms for AI-based pain research. However, differing camera capture angles between thermal and visible sensors have led to misalignment between Visible-Thermal (VT) images. We modernize the classic computer vision task of image registration by applying and modifying a generative alignment algorithm to register VT cancer faces, without the need for a reference or alignment parameters. By registering VT faces, we demonstrate that the quality of thermal images produced in the generative AI downstream task of Visible-to-Thermal (V2T) image translation improves significantly, by up to 52.5%, compared with no registration. Images in this paper have been approved by the NIH NCI for public dissemination.

* 2nd Annual Artificial Intelligence over Infrared Images for Medical Applications Workshop (AIIIMA) at the 26th International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI 2023)

Novel Categories Discovery from probability matrix perspective

Jul 07, 2023

Abstract:Novel Categories Discovery (NCD) tackles the open-world problem of classifying known categories and clustering novel categories based on class semantics, using data annotated over only part of the class space. Unlike traditional pseudo-labeling and retraining approaches, we investigate NCD from the perspective of the novel data probability matrix. We leverage the connection between NCD novel data sampling and the provided novel-class Multinoulli (categorical) distribution, and hypothesize that semantic-based novel data clustering can be achieved implicitly by learning their class distribution. We propose novel constraints on the first-order (mean) and second-order (covariance) statistics of probability matrix features while applying instance-wise information constraints. In particular, we align the neuron distribution (activation patterns) under a large batch of Monte-Carlo novel data sampling by matching the empirical feature mean and covariance with those of the provided Multinoulli distribution. Simultaneously, we minimize entropy and enforce prediction consistency for each instance. Our simple approach successfully realizes semantic-based novel data clustering, provided there is semantic similarity between labeled and unlabeled classes. We demonstrate the discriminative capacity of our approaches in image and video modalities. Moreover, we perform extensive ablation studies regarding data, networks, and our framework components to provide better insights. Our approach maintains ~94%, ~93%, and ~85% classification accuracy on labeled data while achieving ~90%, ~84%, and ~72% clustering accuracy for novel categories on the Cifar10, UCF101, and MPSC-ARL datasets, respectively, matching state-of-the-art approaches without any external clustering.
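The moment-matching idea in the abstract above can be illustrated with the known moments of a one-hot Multinoulli sample: for class probabilities p, the mean is p and the covariance is diag(p) - p pᵀ. The sketch below is not the paper's loss. The function names, the plain squared-difference gap, and the toy probability matrices are all assumptions chosen to show how the empirical mean and covariance of a batch of predictions could be compared with those targets.

```python
def multinoulli_moments(p):
    """Mean and covariance of a one-hot sample drawn from Categorical(p)."""
    k = len(p)
    mean = list(p)
    cov = [[(p[i] if i == j else 0.0) - p[i] * p[j] for j in range(k)]
           for i in range(k)]
    return mean, cov


def empirical_moments(prob_matrix):
    """Column mean and biased covariance of an N x K matrix of predictions."""
    n, k = len(prob_matrix), len(prob_matrix[0])
    mean = [sum(row[j] for row in prob_matrix) / n for j in range(k)]
    cov = [[sum((row[i] - mean[i]) * (row[j] - mean[j]) for row in prob_matrix) / n
            for j in range(k)] for i in range(k)]
    return mean, cov


def moment_gap(prob_matrix, p):
    """Sum of squared differences between empirical and target moments
    (a hypothetical stand-in for a moment-matching penalty)."""
    m_hat, c_hat = empirical_moments(prob_matrix)
    m, c = multinoulli_moments(p)
    k = len(p)
    mean_gap = sum((a - b) ** 2 for a, b in zip(m_hat, m))
    cov_gap = sum((c_hat[i][j] - c[i][j]) ** 2 for i in range(k) for j in range(k))
    return mean_gap + cov_gap


# Toy batches over two novel classes with an assumed uniform prior.
prior = [0.5, 0.5]
confident = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]  # balanced, one-hot
uncertain = [[0.5, 0.5]] * 4                                   # same mean, no spread
print(moment_gap(confident, prior), moment_gap(uncertain, prior))
```

In this toy case both batches match the target mean, but only the confident, balanced batch also matches the target covariance, which suggests why a second-order constraint adds information beyond the first-order one.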

Vista-Morph: Unsupervised Image Registration of Visible-Thermal Facial Pairs

Jun 10, 2023

Abstract:For a variety of biometric cross-spectral tasks, Visible-Thermal (VT) facial pairs are used. However, due to a lack of calibration in the lab, photographic capture between two different sensors leads to severely misaligned pairs that can lead to poor results for person re-identification and generative AI. To solve this problem, we introduce our approach for VT image registration called Vista Morph. Unlike existing VT facial registration that requires manual, hand-crafted features for pixel matching and/or a supervised thermal reference, Vista Morph is completely unsupervised without the need for a reference. By learning the affine matrix through a Vision Transformer (ViT)-based Spatial Transformer Network (STN) and Generative Adversarial Networks (GAN), Vista Morph successfully aligns facial and non-facial VT images. Our approach learns warps in Hard, No, and Low-light visual settings and is robust to geometric perturbations and erasure at test time. We conduct a downstream generative AI task to show that registering training data with Vista Morph improves subject identity of generated thermal faces when performing V2T image translation.

NEV-NCD: Negative Learning, Entropy, and Variance regularization based novel action categories discovery

Apr 14, 2023

Abstract:Novel Categories Discovery (NCD) facilitates learning from a partially annotated label space and enables deep learning (DL) models to operate in an open-world setting by identifying and differentiating instances of novel classes based on notions learned from the labeled data. One of the primary assumptions of NCD is that the novel label space is perfectly disjoint and can be equipartitioned, but this is rarely realized by most NCD approaches in practice. To better align with this assumption, we propose a novel single-stage joint optimization-based NCD method, Negative learning, Entropy, and Variance regularization NCD (NEV-NCD). We demonstrate the efficacy of NEV-NCD in previously unexplored NCD applications of video action recognition (VAR) with the public UCF101 dataset and a curated in-house partial action-space annotated multi-view video dataset. We perform a thorough ablation study by varying the composition of the final joint loss and the associated hyper-parameters. In our experiments with the UCF101 and multi-view action datasets, NEV-NCD achieves ~83% classification accuracy on test instances of labeled data. NEV-NCD achieves ~70% clustering accuracy over unlabeled data, outperforming both naive baselines (by ~40%) and state-of-the-art pseudo-labeling-based approaches (by ~3.5%) over both datasets. Further, we propose to incorporate optional view-invariant feature learning with the multi-view dataset to identify novel categories from novel viewpoints. Our additional view-invariance constraint improves the discriminative accuracy for both known and unknown categories by ~10% for novel viewpoints.
