Emon Dey

A Survey on Efficient Vision-Language Models

Apr 13, 2025

Abstract:Vision-language models (VLMs) integrate visual and textual information, enabling a wide range of applications such as image captioning and visual question answering, making them crucial for modern AI systems. However, their high computational demands pose challenges for real-time applications. This has led to a growing focus on developing efficient vision-language models. In this survey, we review key techniques for optimizing VLMs on edge and resource-constrained devices. We also explore compact VLM architectures and frameworks, and provide detailed insights into the performance-memory trade-offs of efficient VLMs. Furthermore, we establish a GitHub repository at https://github.com/MPSCUMBC/Efficient-Vision-Language-Models-A-Survey to compile all surveyed papers, which we will actively update. Our objective is to foster deeper research in this area.

SERN: Simulation-Enhanced Realistic Navigation for Multi-Agent Robotic Systems in Contested Environments

Oct 22, 2024

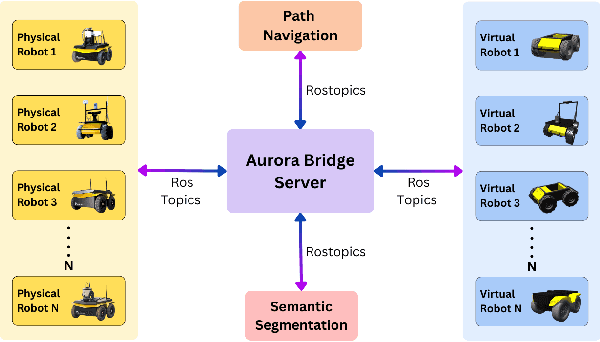

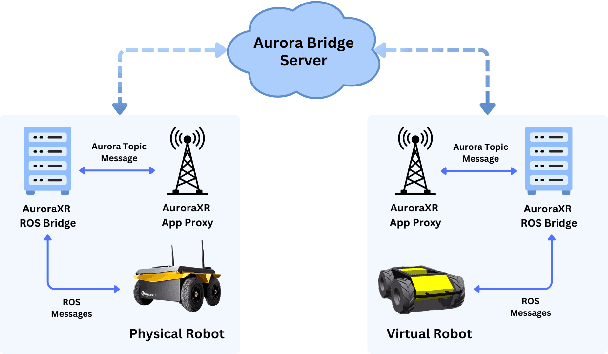

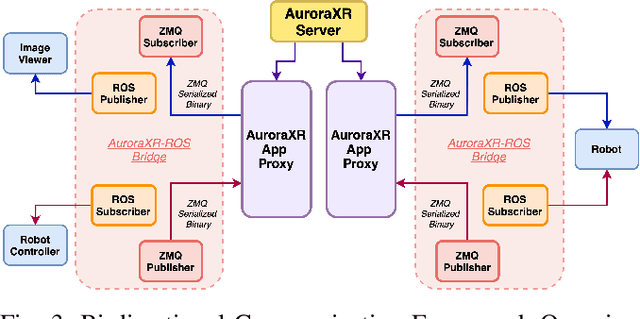

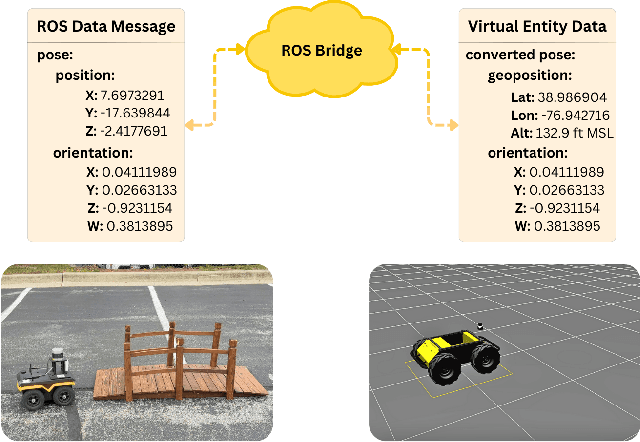

Abstract:The increasing deployment of autonomous systems in complex environments necessitates efficient communication and task completion among multiple agents. This paper presents SERN (Simulation-Enhanced Realistic Navigation), a novel framework integrating virtual and physical environments for real-time collaborative decision-making in multi-robot systems. SERN addresses key challenges in asset deployment and coordination through a bi-directional communication framework using the AuroraXR ROS Bridge. Our approach advances the SOTA through accurate real-world representation in virtual environments using Unity high-fidelity simulator; synchronization of physical and virtual robot movements; efficient ROS data distribution between remote locations; and integration of SOTA semantic segmentation for enhanced environmental perception. Our evaluations show a 15% to 24% improvement in latency and up to a 15% increase in processing efficiency compared to traditional ROS setups. Real-world and virtual simulation experiments with multiple robots demonstrate synchronization accuracy, achieving less than 5 cm positional error and under 2-degree rotational error. These results highlight SERN's potential to enhance situational awareness and multi-agent coordination in diverse, contested environments.

HeteroEdge: Addressing Asymmetry in Heterogeneous Collaborative Autonomous Systems

May 05, 2023

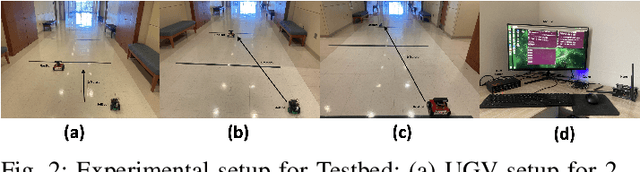

Abstract:Gathering knowledge about surroundings and generating situational awareness for IoT devices is of utmost importance for systems developed for smart urban and uncontested environments. For example, a large-area surveillance system is typically equipped with multi-modal sensors such as cameras and LIDARs and is required to execute deep learning algorithms for action, face, behavior, and object recognition. However, these systems face power and memory constraints due to their ubiquitous nature, making it crucial to optimize data processing, deep learning algorithm input, and model inference communication. In this paper, we propose a self-adaptive optimization framework for a testbed comprising two Unmanned Ground Vehicles (UGVs) and two NVIDIA Jetson devices. This framework efficiently manages multiple tasks (storage, processing, computation, transmission, inference) on heterogeneous nodes concurrently. It involves compressing and masking input image frames, identifying similar frames, and profiling devices to obtain boundary conditions for optimization. Finally, we propose and optimize a novel parameter, the split-ratio, which indicates the proportion of the data required to be offloaded to another device while considering the networking bandwidth, busy factor, memory (CPU, GPU, RAM), and power constraints of the devices in the testbed. Our evaluations, captured while executing multiple tasks (e.g., PoseNet, SegNet, ImageNet, DetectNet, DepthNet) simultaneously, reveal that executing 70% (split-ratio=70%) of the data on the auxiliary node reduces the offloading latency by approx. 33% (18.7 ms/image to 12.5 ms/image) and the total operation time by approx. 47% (69.32s to 36.43s) compared to the baseline configuration (executing on the primary node).
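The split-ratio idea and the reported percentages can be illustrated with a minimal sketch. The helper names `split_frames` and `relative_reduction` are hypothetical and not taken from the paper, and the simple prefix split is only an assumption about how a batch of frames might be divided; the paper's optimizer additionally accounts for bandwidth, busy factor, memory, and power, none of which is modeled here.

```python
def split_frames(frames, split_ratio):
    """Divide a batch of frames between two nodes (hypothetical helper).

    The first `split_ratio` share is assigned to the auxiliary node and the
    remainder stays on the primary node.
    """
    if not 0.0 <= split_ratio <= 1.0:
        raise ValueError("split_ratio must be in [0, 1]")
    n_offload = round(len(frames) * split_ratio)
    return frames[:n_offload], frames[n_offload:]


def relative_reduction(baseline, optimized):
    """Percentage reduction of `optimized` relative to `baseline`."""
    return (baseline - optimized) / baseline * 100.0


# Ten placeholder frame IDs with the 70% split ratio quoted in the abstract.
offloaded, local = split_frames(list(range(10)), 0.70)

# Figures quoted in the abstract: ms/image and seconds of total operation time.
latency_gain = relative_reduction(18.7, 12.5)
time_gain = relative_reduction(69.32, 36.43)

print(len(offloaded), len(local))
print(f"latency: {latency_gain:.1f}%  total time: {time_gain:.1f}%")
```

Recomputing the reductions from the quoted raw figures gives values consistent with the approximate 33% and 47% stated in the abstract.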

A Systematic Study on Object Recognition Using Millimeter-wave Radar

May 03, 2023

Abstract:Due to its light and weather-independent sensing, millimeter-wave (MMW) radar is essential in smart environments. Intelligent vehicle systems and industry-grade MMW radars have integrated such capabilities. Industry-grade MMW radars are expensive and hard to get for community-purpose smart environment applications. However, commercially available MMW radars have hidden underpinning challenges that need to be investigated for tasks like recognizing objects and activities, real-time person tracking, object localization, etc. Image and video data are straightforward to gather, understand, and annotate for such jobs. Image and video data are light and weather-dependent, susceptible to the occlusion effect, and present privacy problems. To eliminate dependence and ensure privacy, commercial MMW radars should be tested. MMW radar's practicality and performance in varied operating settings must be addressed before promoting it. To address the problems, we collected a dataset using Texas Instruments' Automotive mmWave Radar (AWR2944) and reported the best experimental settings for object recognition performance using different deep learning algorithms. Our extensive data gathering technique allows us to systematically explore and identify object identification task problems under cross-ambience conditions. We investigated several solutions and published detailed experimental data.

A Reliable and Low Latency Synchronizing Middleware for Co-simulation of a Heterogeneous Multi-Robot Systems

Nov 10, 2022

Abstract:Search and rescue, wildfire monitoring, and flood/hurricane impact assessment are mission-critical services for recent IoT networks. Communication synchronization, dependability, and minimal communication jitter are major simulation and system issues for the time-based physics-based ROS simulator, event-based network-based wireless simulator, and complex dynamics of mobile and heterogeneous IoT devices deployed in actual environments. Simulating a heterogeneous multi-robot system before deployment is difficult because the physics (robotics) and network simulators must be synchronized. Most TCP/IP-based synchronization middlewares use ROS1 because of its master-based architecture. A real-time ROS2 architecture with masterless packet discovery synchronizes robotics and wireless network simulations. A velocity-aware Transmission Control Protocol (TCP) technique for ground and aerial robots using Data Distribution Service (DDS) publish-subscribe transport minimizes packet loss, synchronization, transmission, and communication jitters. We simulate and test with Gazebo and NS-3; the middleware itself is simulator-agnostic. We tested our ROS2-based synchronization middleware for packet loss probability and average latency under LOS/NLOS conditions and with TCP/UDP protocols. A thorough ablation study replaced NS-3 with EMANE, a real-time wireless network simulator, and masterless ROS2 with master-based ROS1. Finally, we tested network synchronization and jitter using one aerial drone (Duckiedrone) and two ground vehicles (TurtleBot3 Burger) on different terrains in masterless (ROS2) and master-enabled (ROS1) clusters. Our middleware shows that a large-scale IoT infrastructure with a diverse set of stationary and robotic devices can achieve low-latency communications (12% and 11% reduction in simulation and real) while meeting mission-critical application reliability (10% and 15% packet loss reduction) and high-fidelity requirements.

Demo: RhythmEdge: Enabling Contactless Heart Rate Estimation on the Edge

Aug 13, 2022

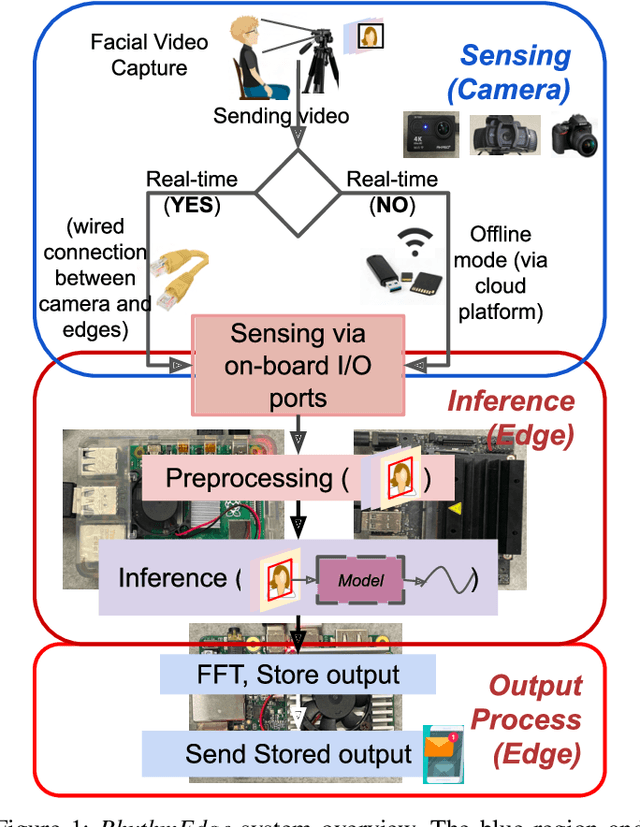

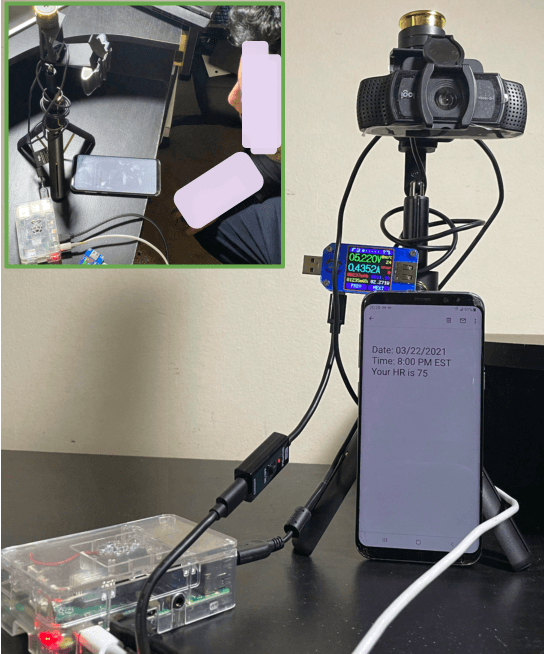

Abstract:In this demo paper, we design and prototype RhythmEdge, a low-cost, deep-learning-based contactless system for regular HR monitoring applications. RhythmEdge improves on existing approaches through its contactless operation, real-time/offline modes, and inexpensive, readily available sensing components and computing devices. Our RhythmEdge system is portable and easily deployable for reliable HR estimation in moderately controlled indoor or outdoor environments. RhythmEdge measures HR by detecting changes in blood volume from facial videos (Remote Photoplethysmography; rPPG) and provides instant assessment using off-the-shelf, commercially available, resource-constrained edge platforms and video cameras. We demonstrate the scalability, flexibility, and compatibility of RhythmEdge by deploying it on three resource-constrained platforms of differing architectures (NVIDIA Jetson Nano, Google Coral Development Board, Raspberry Pi) and three heterogeneous cameras of differing sensitivity, resolution, and properties (web camera, action camera, and DSLR). RhythmEdge further stores longitudinal cardiovascular information and provides instant notification to the users. We thoroughly test the prototype's stability, latency, and feasibility on the three edge computing platforms by profiling their runtime, memory, and power usage.

SynchroSim: An Integrated Co-simulation Middleware for Heterogeneous Multi-robot System

Aug 13, 2022

Abstract:With the advancement of modern robotics, autonomous agents are now capable of hosting sophisticated algorithms, which enables them to make intelligent decisions. But developing and testing such algorithms directly in real-world systems is tedious and may waste valuable resources. This is especially true for heterogeneous multi-agent systems in battlefield environments, where communication is critical in determining the system's behavior and usability. Because simulating such scenarios before deployment requires simulators of separate paradigms (co-simulation), synchronization between those simulators is vital. Existing works aimed at resolving this issue fall short of addressing diversity among deployed agents. In this work, we propose SynchroSim, an integrated co-simulation middleware to simulate a heterogeneous multi-robot system. Here we propose a velocity difference-driven adjustable window size approach with a view to reducing packet loss probability. It takes into account the respective velocities of deployed agents to calculate a suitable window size before transmitting data between them. We consider our algorithm simulator-agnostic, but for the implementation results we used Gazebo as the physics simulator and NS-3 as the network simulator. Also, we design our algorithm considering the Perception-Action loop inside a closed communication channel, which is one of the essential factors in a contested scenario with the requirement of high fidelity in terms of data transmission. We validate our approach empirically at both the simulation and system level for both line-of-sight (LOS) and non-line-of-sight (NLOS) scenarios. Our approach achieves a noticeable improvement in terms of reducing packet loss probability (≈11%) and average packet delay (≈10%) compared to the fixed window size-based synchronization approach.
