Muhammad Ibrahim

Reliable Deep Learning for Small-Scale Classifications: Experiments on Real-World Image Datasets from Bangladesh

Jan 17, 2026

Abstract:Convolutional neural networks (CNNs) have achieved state-of-the-art performance in image recognition tasks but often involve complex architectures that may overfit on small datasets. In this study, we evaluate a compact CNN across five publicly available, real-world image datasets from Bangladesh, including urban encroachment, vehicle detection, road damage, and agricultural crops. The network demonstrates high classification accuracy, efficient convergence, and low computational overhead. Quantitative metrics and saliency analyses indicate that the model effectively captures discriminative features and generalizes robustly across diverse scenarios, highlighting the suitability of streamlined CNN architectures for small-class image classification tasks.

Detecting Unauthorized Vehicles using Deep Learning for Smart Cities: A Case Study on Bangladesh

Oct 30, 2025

Abstract:Modes of transportation vary across countries depending on geographical location and cultural context. In South Asian countries, rickshaws are among the most common means of local transport. Based on their mode of operation, rickshaws in cities across Bangladesh can be broadly classified into non-auto (pedal-powered) and auto-rickshaws (motorized). Monitoring the movement of auto-rickshaws is necessary because traffic rules often restrict them from accessing certain routes. However, existing surveillance systems struggle to monitor them because of their similarity to other vehicles, especially non-auto rickshaws, and manual video analysis is too time-consuming. This paper presents a machine learning-based approach to automatically detect auto-rickshaws in traffic images. The system performs real-time object detection with the YOLOv8 model. For training, we prepared a set of 1,730 annotated images captured under various traffic conditions. The results show that our proposed model performs well in real-time auto-rickshaw detection, achieving an mAP50 of 83.447% and binary precision and recall values above 78%, which demonstrates its effectiveness in both dense and sparse traffic scenarios. The dataset has been publicly released for further research.
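
The mAP50 figure counts a detection as correct when its box overlaps a ground-truth box with an intersection-over-union (IoU) of at least 0.5. A minimal sketch of that matching step, together with precision and recall, might look as follows. The `(x1, y1, x2, y2)` box format, the greedy matching, and the function names `box_iou` and `precision_recall` are illustrative assumptions, not the paper's evaluation code.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    # Width and height of the overlap are clamped at zero for disjoint boxes.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predictions, ground_truth, iou_threshold=0.5):
    """Greedy one-to-one matching; predictions are assumed sorted by confidence."""
    unmatched = list(ground_truth)
    true_positives = 0
    for pred in predictions:
        # Pick the still-unmatched ground-truth box with the highest overlap.
        best = max(unmatched, key=lambda gt: box_iou(pred, gt), default=None)
        if best is not None and box_iou(pred, best) >= iou_threshold:
            true_positives += 1
            unmatched.remove(best)
    precision = true_positives / len(predictions) if predictions else 0.0
    recall = true_positives / len(ground_truth) if ground_truth else 0.0
    return precision, recall
```

Full mAP50 additionally averages precision over recall levels and classes; this sketch covers only the IoU threshold test at a single operating point.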

Multispectral Remote Sensing for Weed Detection in West Australian Agricultural Lands

Feb 12, 2025

Abstract:The Kondinin region in Western Australia faces significant agricultural challenges due to pervasive weed infestations, causing economic losses and ecological impacts. This study constructs a tailored multispectral remote sensing dataset and an end-to-end framework for weed detection to advance precision agriculture practices. Unmanned aerial vehicles were used to collect raw multispectral data from two experimental areas (E2 and E8) over four years, covering 0.6046 km², and ground truth annotations were created with GPS-enabled vehicles to manually label weeds and crops. The dataset is specifically designed for agricultural applications in Western Australia. We propose an end-to-end framework for weed detection that includes extensive preprocessing steps, such as denoising, radiometric calibration, image alignment, orthorectification, and stitching. The proposed method combines vegetation indices (NDVI, GNDVI, EVI, SAVI, MSAVI) with multispectral channels to form classification features, and employs several deep learning models to identify weeds based on the input features. Among these models, ResNet achieves the highest performance, with a weed detection accuracy of 0.9213, an F1-Score of 0.8735, an mIOU of 0.7888, and an mDC of 0.8865, validating the efficacy of the dataset and the proposed weed detection method.
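
The feature construction described above, vegetation indices stacked with the raw channels, can be sketched per pixel using the standard textbook definitions of the five indices. The band set (blue, green, red, near-infrared), the reflectance scaling to [0, 1], the soil factor of 0.5, and the function names are assumptions made for illustration; the abstract does not specify them.

```python
import math


def vegetation_indices(blue, green, red, nir, soil_factor=0.5, eps=1e-9):
    """Standard index formulas for one pixel of reflectance values in [0, 1]."""
    ndvi = (nir - red) / (nir + red + eps)
    gndvi = (nir - green) / (nir + green + eps)
    # EVI uses the commonly quoted coefficients (gain 2.5, C1 = 6, C2 = 7.5, L = 1);
    # its denominator is assumed non-zero for the input reflectances.
    evi = 2.5 * (nir - red) / (nir + 6.0 * red - 7.5 * blue + 1.0)
    savi = (1.0 + soil_factor) * (nir - red) / (nir + red + soil_factor)
    root = math.sqrt((2.0 * nir + 1.0) ** 2 - 8.0 * (nir - red))
    msavi = (2.0 * nir + 1.0 - root) / 2.0
    return {"ndvi": ndvi, "gndvi": gndvi, "evi": evi, "savi": savi, "msavi": msavi}


def pixel_features(blue, green, red, nir):
    """Concatenate the raw channels with the five indices into one feature vector."""
    idx = vegetation_indices(blue, green, red, nir)
    return [blue, green, red, nir,
            idx["ndvi"], idx["gndvi"], idx["evi"], idx["savi"], idx["msavi"]]
```

In practice the same arithmetic would be applied to whole image arrays, and the resulting nine-channel tensor would be the input to the classification network.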

* 8 pages, 9 figures, 1 table, Accepted for oral presentation at IEEE 25th International Conference on Digital Image Computing: Techniques and Applications (DICTA 2024). Conference Proceeding: 979-8-3503-7903-7/24/\$31.00 (C) 2024 IEEE

Automated Road Extraction and Centreline Fitting in LiDAR Point Clouds

Feb 11, 2025

Abstract:Road information extraction from 3D point clouds is useful for urban planning and traffic management. Existing methods often rely on local features and the refraction angle of lasers from kerbs, which makes them sensitive to variable kerb designs and prone to problems in high-density areas due to data homogeneity. We propose an approach for extracting road points and fitting centrelines using a top-down view of LiDAR-based, ground-collected point clouds. This top-down perspective reduces reliance on specific kerb designs and results in better road extraction. We first perform statistical outlier removal and density-based clustering to reduce noise in the 3D point cloud data. Next, we perform ground point filtering using a grid-based segmentation method that adapts to diverse road scenarios and terrain characteristics. The filtered points are then projected onto a 2D plane, and the road is extracted by a skeletonisation algorithm. The skeleton is back-projected onto the 3D point cloud with calculated normals, which guide a region growing algorithm to find nearby road points. The extracted road points are then smoothed with the Savitzky-Golay filter to produce the final centreline. Our initial approach, without post-processing of the road skeleton, achieved an IoU of 67% on the Perth CBD dataset, which covers different road types. Incorporating post-processing of the road skeleton improved the extraction of road points around the smoothed skeleton. The refined approach achieved a higher IoU of 73% with a 23% reduction in processing time. Our approach offers a generalised and computationally efficient solution that combines 3D and 2D processing techniques, laying the groundwork for future road reconstruction and 3D-to-2D point cloud alignment.
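
The final smoothing step can be illustrated with a small Savitzky-Golay filter applied to each coordinate of an ordered centreline. This is only a sketch: the 5-point window, the quadratic fit (whose well-known convolution weights are (-3, 12, 17, 12, -3) / 35), the untouched end points, and the names `savgol_smooth_5` and `smooth_centreline` are assumptions; the abstract does not state the filter settings used.

```python
def savgol_smooth_5(values):
    """Savitzky-Golay smoothing with a 5-point window and a quadratic fit."""
    coeffs = (-3.0, 12.0, 17.0, 12.0, -3.0)
    smoothed = list(values)  # the two samples at each end are left unchanged
    for i in range(2, len(values) - 2):
        window = values[i - 2:i + 3]
        smoothed[i] = sum(c * v for c, v in zip(coeffs, window)) / 35.0
    return smoothed


def smooth_centreline(points):
    """Smooth an ordered list of (x, y, z) centreline points coordinate-wise."""
    if not points:
        return []
    xs, ys, zs = zip(*points)
    return list(zip(savgol_smooth_5(xs), savgol_smooth_5(ys), savgol_smooth_5(zs)))
```

Because the filter fits a local quadratic, straight and gently curving road segments pass through unchanged while isolated spikes are damped.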

Soft Masked Transformer for Point Cloud Processing with Skip Attention-Based Upsampling

Mar 21, 2024

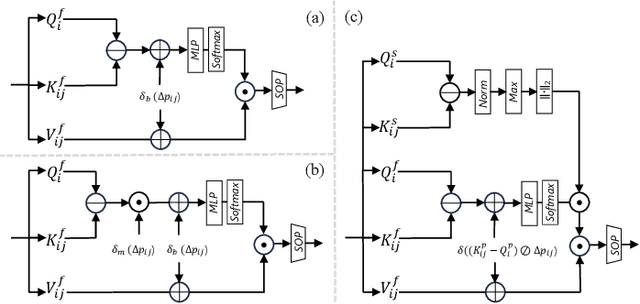

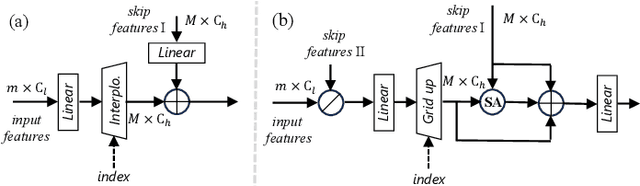

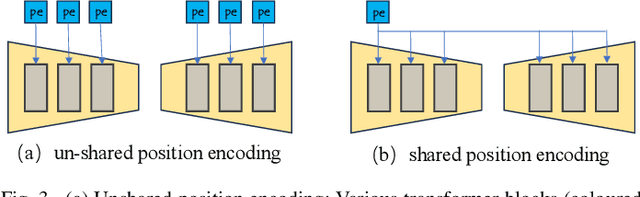

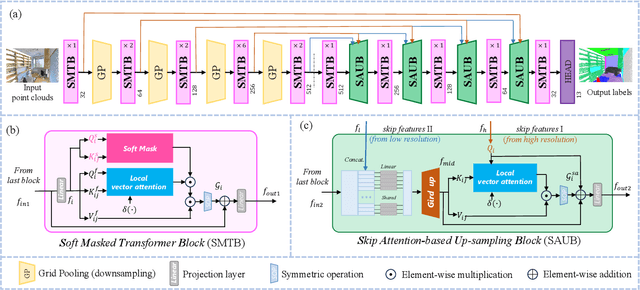

Abstract:Point cloud processing methods leverage local and global point features to cater to downstream tasks, yet they often overlook the task-level context inherent in point clouds during the encoding stage. We argue that integrating task-level information into the encoding stage significantly enhances performance. To that end, we propose SMTransformer, which incorporates task-level information into a vector-based transformer by utilizing a soft mask generated from task-level queries and keys to learn the attention weights. Additionally, to facilitate effective communication between features from the encoding and decoding layers in high-level tasks such as segmentation, we introduce a skip-attention-based up-sampling block. This block dynamically fuses features from various resolution points across the encoding and decoding layers. To mitigate the increase in network parameters and training time resulting from the complexity of the aforementioned blocks, we propose a novel shared position encoding strategy. This strategy allows various transformer blocks to share the same position information over the same resolution points, thereby reducing network parameters and training time without compromising accuracy. Experimental comparisons with existing methods on multiple datasets demonstrate the efficacy of SMTransformer and skip-attention-based up-sampling for point cloud processing tasks, including semantic segmentation and classification. In particular, we achieve state-of-the-art semantic segmentation results of 73.4% mIoU on S3DIS Area 5 and 62.4% mIoU on the SWAN dataset.

CompactifAI: Extreme Compression of Large Language Models using Quantum-Inspired Tensor Networks

Jan 25, 2024

Abstract:Large Language Models (LLMs) such as ChatGPT and LlaMA are advancing rapidly in generative Artificial Intelligence (AI), but their immense size poses significant challenges, such as huge training and inference costs, substantial energy demands, and limitations for on-site deployment. Traditional compression methods such as pruning, distillation, and low-rank approximation focus on reducing the effective number of neurons in the network, while quantization focuses on reducing the numerical precision of individual weights to reduce the model size while keeping the number of neurons fixed. While these compression methods have been relatively successful in practice, there's no compelling reason to believe that truncating the number of neurons is an optimal strategy. In this context, this paper introduces CompactifAI, an innovative LLM compression approach using quantum-inspired Tensor Networks that focuses on the model's correlation space instead, allowing for a more controlled, refined and interpretable model compression. Our method is versatile and can be implemented with, or on top of, other compression techniques. As a benchmark, we demonstrate that CompactifAI alone enables compression of the LlaMA-2 7B model to only 30% of its original size while recovering over 90% of the original accuracy after a brief distributed retraining.

A Novel Neural Network-Based Federated Learning System for Imbalanced and Non-IID Data

Nov 16, 2023

Abstract:With the growth of machine learning techniques, the privacy of user data has become a major concern. Most machine learning algorithms rely heavily on large amounts of data, which may be collected from various sources. Collecting these data while complying with privacy policies has become one of the most challenging tasks for researchers. To combat this issue, researchers have introduced federated learning, where a prediction model is learnt while preserving the privacy of clients' data. However, the prevalent federated learning algorithms face a trade-off between accuracy and efficiency, especially for non-IID data. In this research, we propose a centralized, neural network-based federated learning system. The centralized algorithm incorporates micro-level parallel processing inspired by the traditional mini-batch algorithm, where the client devices and the server handle the forward and backward propagation respectively. We also devise a semi-centralized version of our proposed algorithm. This algorithm takes advantage of edge computing to reduce the load on the central server: clients handle both the forward and backward propagation, at the cost of some increase in overall training time. We evaluate our proposed systems on five well-known benchmark datasets and achieve satisfactory performance in a reasonable time across various data distribution settings as compared to some existing benchmark algorithms.

An Exploratory Study on Simulated Annealing for Feature Selection in Learning-to-Rank

Oct 20, 2023

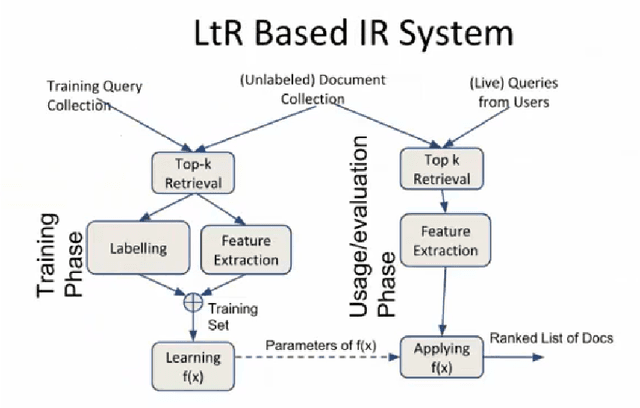

Abstract:Learning-to-rank is an applied domain of supervised machine learning. As feature selection has been found to be effective for improving the accuracy of learning models in general, it is intriguing to investigate this process for the learning-to-rank domain. In this study, we investigate the use of a popular meta-heuristic approach called simulated annealing for this task. Under the general framework of simulated annealing, we explore various neighborhood selection strategies and temperature cooling schemes. We further introduce a new hyper-parameter called the progress parameter that can effectively be used to traverse the search space. Our algorithms are evaluated on five public benchmark datasets for learning-to-rank. For better validation, we also compare the simulated annealing-based feature selection algorithm with another effective meta-heuristic algorithm, namely local beam search. Extensive experimental results show the efficacy of our proposed models.
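
A generic simulated annealing loop over feature subsets can be sketched as below. It is not the paper's algorithm: the single-bit-flip neighbourhood and geometric cooling are just one of the many possible choices, the progress parameter introduced in the paper is not modelled, and the `score` callback is a hypothetical stand-in for a ranking metric measured on validation data.

```python
import math
import random


def anneal_feature_subset(n_features, score, steps=500,
                          start_temp=1.0, cooling=0.99, seed=0):
    """Search for a boolean feature mask that maximises score(mask)."""
    rng = random.Random(seed)
    current = [rng.random() < 0.5 for _ in range(n_features)]
    current_score = score(current)
    best, best_score = list(current), current_score
    temp = start_temp
    for _ in range(steps):
        # Neighbour: flip the inclusion of one randomly chosen feature.
        neighbour = list(current)
        flip = rng.randrange(n_features)
        neighbour[flip] = not neighbour[flip]
        neighbour_score = score(neighbour)
        delta = neighbour_score - current_score
        # Always accept improvements; accept worse moves with probability exp(delta / temp).
        if delta >= 0 or rng.random() < math.exp(delta / temp):
            current, current_score = neighbour, neighbour_score
            if current_score > best_score:
                best, best_score = list(current), current_score
        temp *= cooling  # geometric cooling schedule
    return best, best_score


def toy_score(mask, useful=(0, 2, 5)):
    """Toy objective: +1 for each useful feature kept, -1 for each other feature kept."""
    return sum(1 if i in useful else -1 for i, keep in enumerate(mask) if keep)
```

Calling `anneal_feature_subset(8, toy_score)` searches the 2^8 possible masks of the toy problem. In a real learning-to-rank setting, each call to `score` would train and evaluate a ranker on the selected features, so the number of steps is usually the dominant cost.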

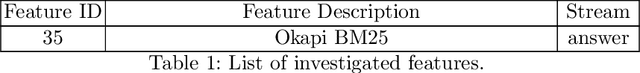

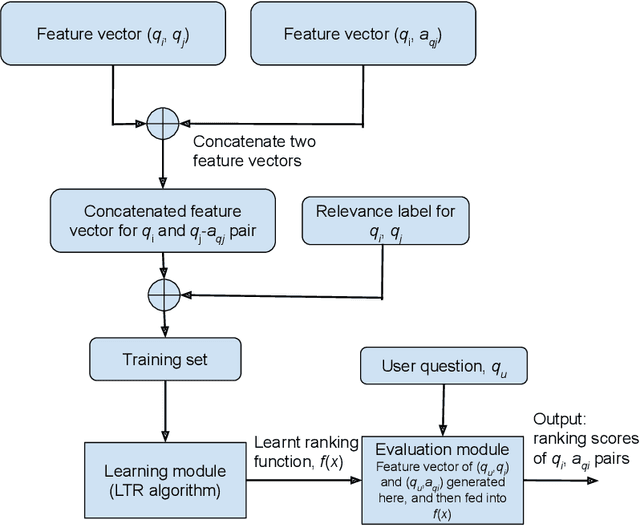

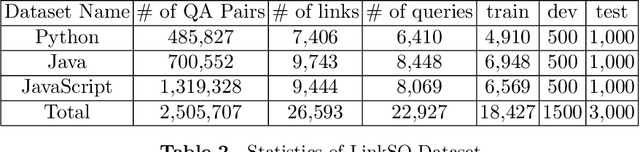

Feature Engineering in Learning-to-Rank for Community Question Answering Task

Sep 14, 2023

Abstract:Community question answering (CQA) forums are Internet-based platforms where users ask questions about a topic and other expert users try to provide solutions. Many CQA forums, such as Quora, Stack Overflow, Yahoo! Answers, and StackExchange, hold large amounts of user-generated data. These data are leveraged in automated CQA ranking systems, where similar questions (and answers) are presented in response to a user's query. In this work, we empirically investigate a few aspects of this domain. Firstly, in addition to traditional features such as TF-IDF and BM25, we introduce a BERT-based feature that captures the semantic similarity between the question and answer. Secondly, most existing research has focused on features extracted only from the question part; features extracted from answers have not been explored extensively. We combine both types of features in a linear fashion. Thirdly, using our proposed concepts, we conduct an empirical investigation with different rank-learning algorithms, some of which have not been used so far in the CQA domain. On three standard CQA datasets, our proposed framework achieves state-of-the-art performance. We also analyze the importance of the features we use in our investigation. This work is expected to guide practitioners in selecting a better set of features for the CQA retrieval task.

UnLoc: A Universal Localization Method for Autonomous Vehicles using LiDAR, Radar and/or Camera Input

Jul 03, 2023

Abstract:Localization is a fundamental task in robotics for autonomous navigation. Existing localization methods rely on a single input data modality or train several computational models to process different modalities. This leads to stringent computational requirements and sub-optimal results that fail to capitalize on the complementary information in other data streams. This paper proposes UnLoc, a novel unified neural modeling approach for localization with multi-sensor input in all weather conditions. Our multi-stream network can handle LiDAR, camera and radar inputs for localization on demand, i.e., it can work with one or more input sensors, making it robust to sensor failure. UnLoc uses 3D sparse convolutions and cylindrical partitioning of the space to process LiDAR frames and implements ResNet blocks with a slot attention-based feature filtering module for the radar and image modalities. We introduce a unique learnable modality encoding scheme to distinguish between the input sensor data. Our method is extensively evaluated on the Oxford Radar RobotCar, ApolloSouthBay and Perth-WA datasets. The results confirm the efficacy of our technique.
