Mohammad Reza Javan

Senior Member, IEEE

Smart Resource Allocation Model via Artificial Intelligence in Software Defined 6G Networks

Feb 09, 2023

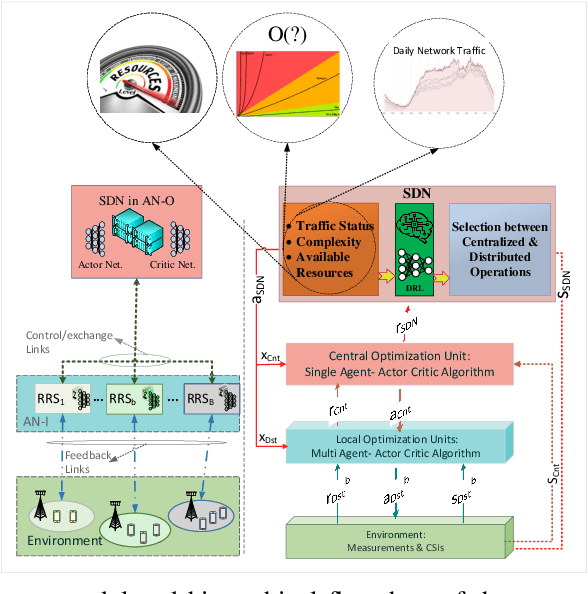

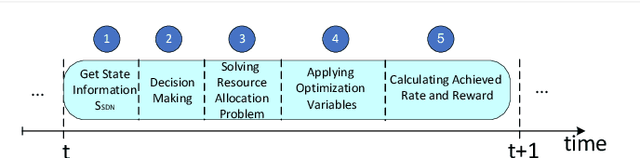

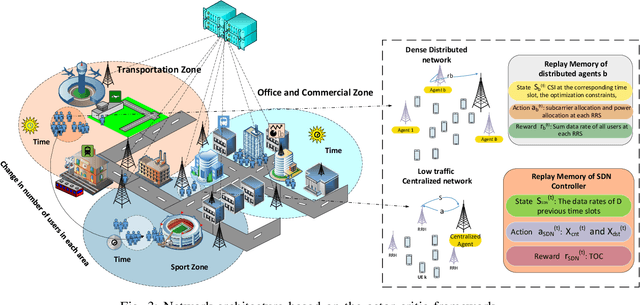

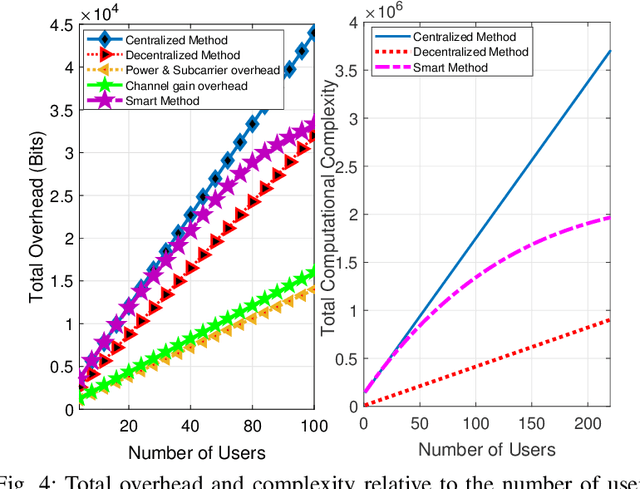

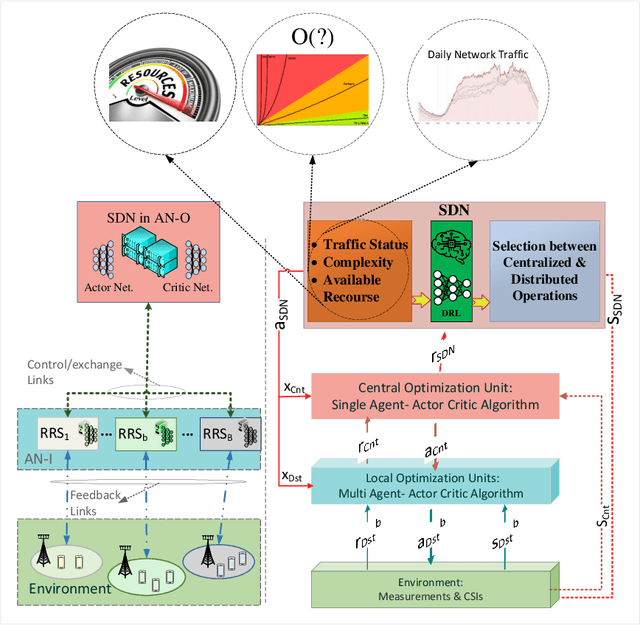

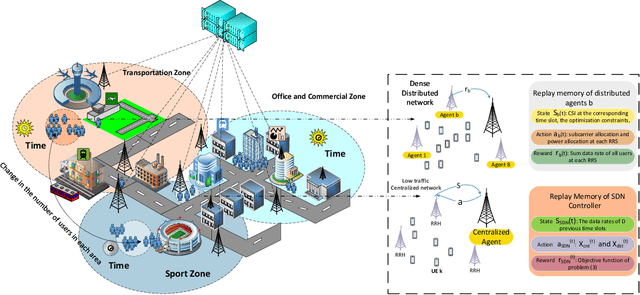

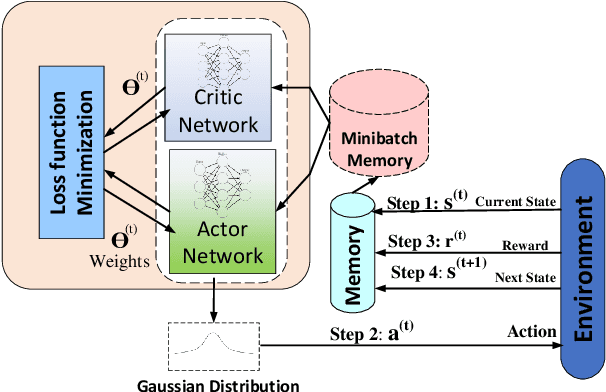

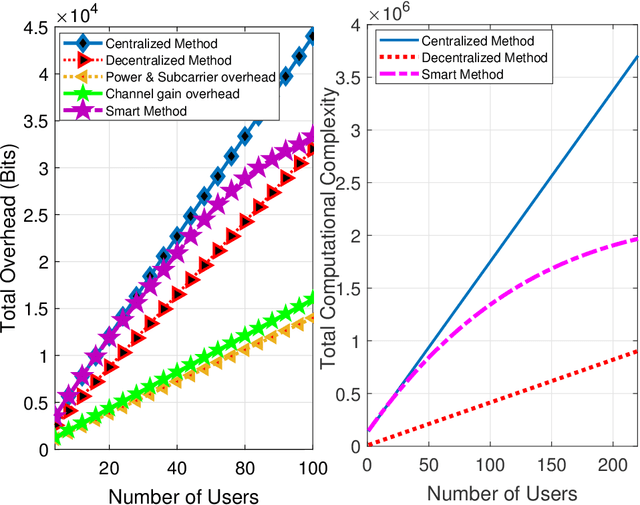

Abstract: In this paper, we design a new flexible smart software-defined radio access network (Soft-RAN) architecture with traffic awareness for sixth generation (6G) wireless networks. In particular, we consider a hierarchical resource allocation model for the proposed smart soft-RAN model, where the software-defined network (SDN) controller is the first and foremost layer of the framework. This unit dynamically monitors the network and selects a network operation type, based on either distributed or centralized resource allocation procedures, to perform decision-making intelligently. Our aim is to make the network more scalable and more flexible with respect to conflicting performance indicators such as achievable data rate, overhead, and complexity. To this end, we introduce a new metric, throughput-overhead-complexity (TOC), for the proposed machine learning-based algorithm, which supports a trade-off between these performance indicators. In particular, the TOC-based decision-making problem is solved via deep reinforcement learning (DRL), which determines an appropriate resource allocation policy. Furthermore, for the selected algorithm, we employ the soft actor-critic (SAC) method, which is more accurate, scalable, and robust than other learning methods. Simulation results demonstrate that the proposed smart network achieves better performance in terms of TOC than fixed centralized or distributed resource management schemes that lack dynamism. Moreover, our proposed algorithm outperforms conventional learning methods employed in recent state-of-the-art network designs.

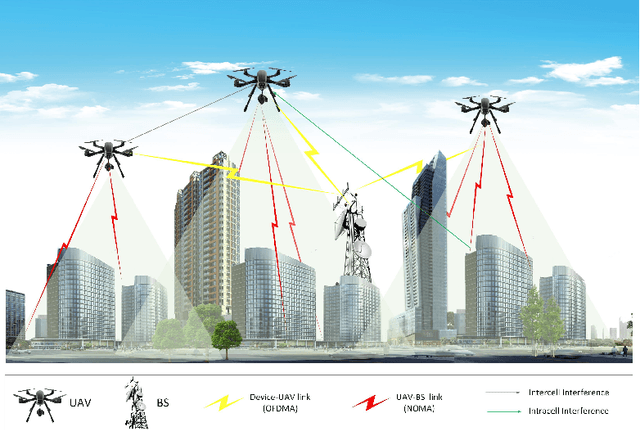

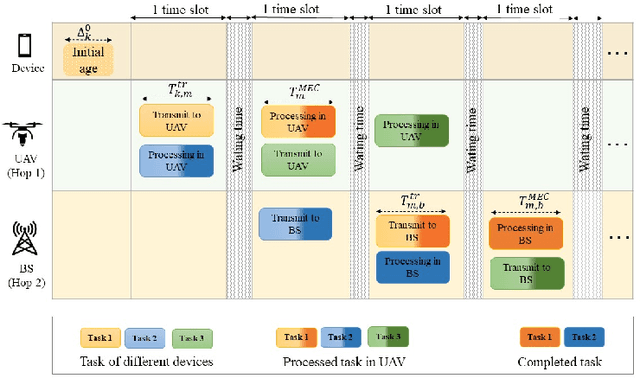

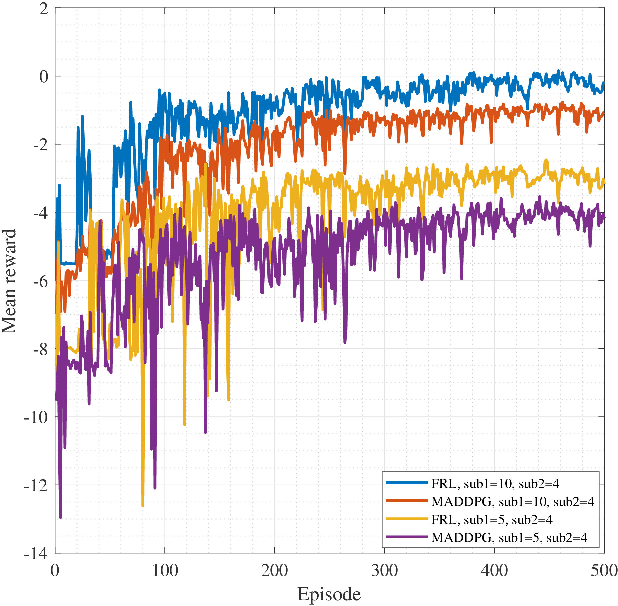

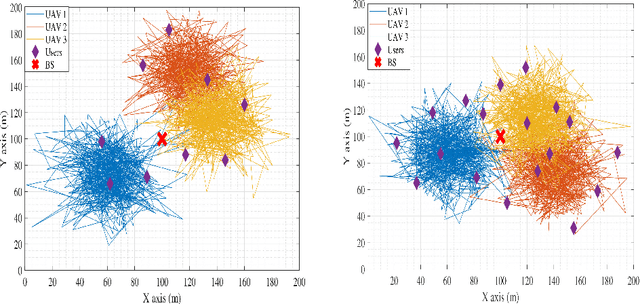

Two-Hop Age of Information Scheduling for Multi-UAV Assisted Mobile Edge Computing: FRL vs MADDPG

Jun 19, 2022

Abstract: In this work, we adopt the emerging technology of mobile edge computing (MEC) in unmanned aerial vehicle (UAV)-assisted communication-computing systems to optimize the age of information (AoI) in the network. We assume that tasks are processed jointly on the UAVs and the base station (BS) to enhance edge performance under limited connectivity and computing resources. Using the UAVs and the BS jointly with MEC can reduce the AoI in the network. To maintain the freshness of the tasks, we formulate AoI minimization in a two-hop communication framework, with the first hop at the UAVs and the second hop at the BS. To address this challenge, we solve the problem using a deep reinforcement learning (DRL) framework called federated reinforcement learning (FRL). Our network has two types of agents with different states and actions but the same policy. FRL enables us to handle the two-step AoI minimization and UAV trajectory problems. In addition, we compare our proposed algorithm, which has a centralized processing unit to update the weights, with the fully decentralized multi-agent deep deterministic policy gradient (MADDPG), which enhances the agent's performance. As a result, the proposed algorithm outperforms MADDPG by about 38%.
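To make the AoI notion in this abstract concrete, the following is a minimal sketch of the standard discrete-time definition: the age grows by one slot whenever no update arrives and resets to the elapsed time since generation when an update is delivered. The function name `aoi_trace`, the slot model, and the example delivery times are illustrative assumptions and are not taken from the paper.

```python
def aoi_trace(deliveries, horizon, initial_age=0):
    """Return the age of information at the end of each slot.

    deliveries maps a delivery slot to the slot in which the delivered
    update was generated (hypothetical input format for illustration).
    """
    age = initial_age
    trace = []
    for t in range(horizon):
        if t in deliveries:
            # A fresh update arrives: age becomes the time since it was generated.
            age = t - deliveries[t]
        else:
            # No update: the information at the receiver gets one slot older.
            age += 1
        trace.append(age)
    return trace


def average_aoi(trace):
    """Time-average age over the observed slots."""
    return sum(trace) / len(trace)


if __name__ == "__main__":
    # Updates generated in slots 1 and 3 are delivered in slots 2 and 5.
    trace = aoi_trace({2: 1, 5: 3}, horizon=6)
    print(trace, average_aoi(trace))
```

In a two-hop setting such as the one described, the generation-to-delivery delay would include both the UAV hop and the BS hop; this sketch only tracks the end-to-end delay.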

A Comprehensive Survey of Spectrum Sharing Schemes from a Standardization and Implementation Perspective

Mar 21, 2022

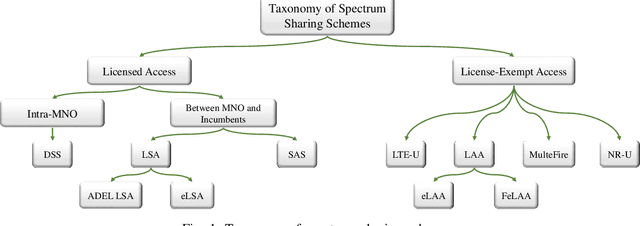

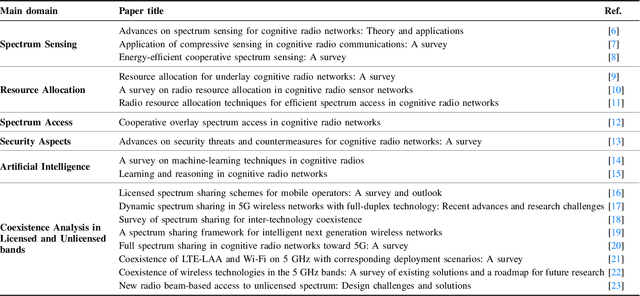

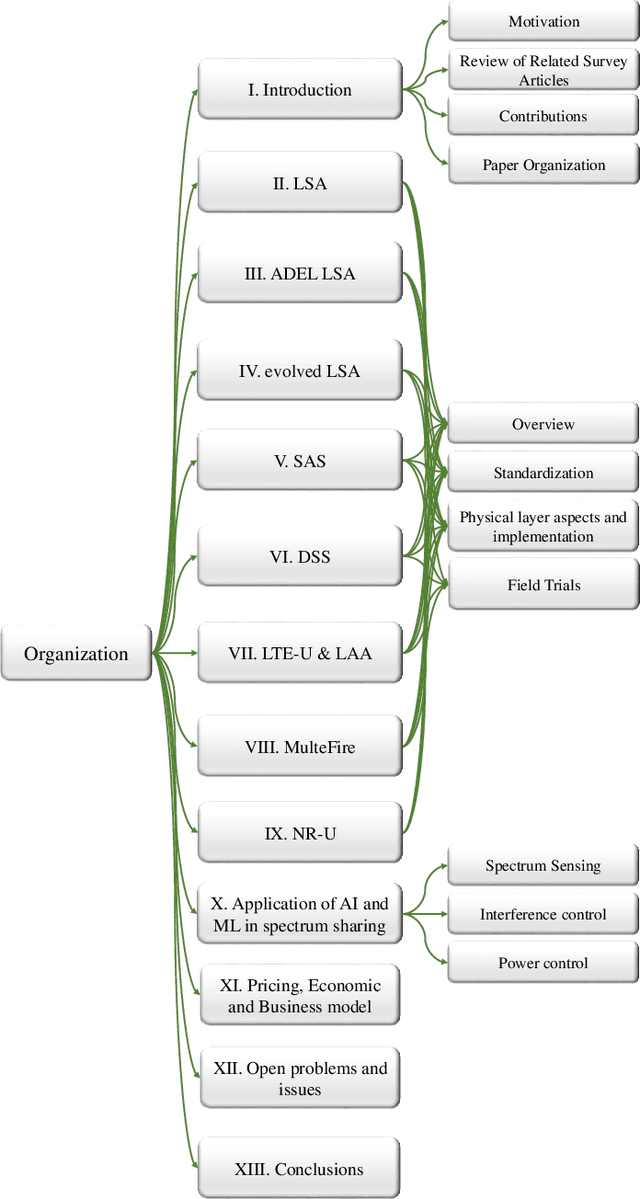

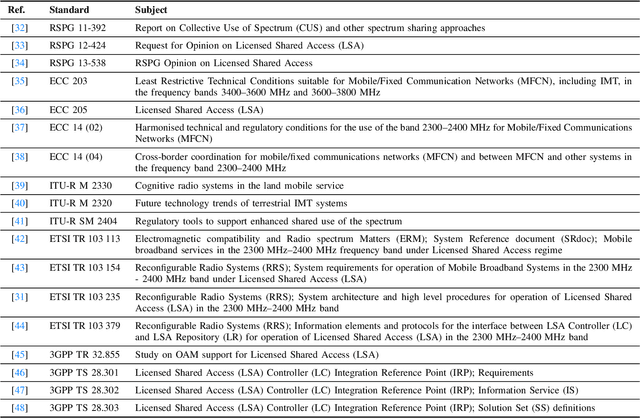

Abstract: As the services and requirements of next-generation wireless networks become increasingly diversified, it is estimated that the current frequency bands of mobile network operators (MNOs) will be unable to cope with the immensity of anticipated demands. Due to spectrum scarcity, there has been a growing trend among stakeholders toward identifying practical solutions to make the most productive use of the exclusively allocated bands on a shared basis through spectrum sharing mechanisms. However, due to the technical complexities of these mechanisms, their design presents challenges, as it requires coordination among multiple entities. To address this challenge, in this paper, we begin with a detailed review of the recent literature on spectrum sharing methods, classifying them on the basis of their operational frequency regime, that is, whether they are implemented to operate in licensed bands (e.g., licensed shared access (LSA), spectrum access system (SAS), and dynamic spectrum sharing (DSS)) or unlicensed bands (e.g., LTE-unlicensed (LTE-U), licensed assisted access (LAA), MulteFire, and new radio-unlicensed (NR-U)). Then, in order to narrow the gap between standardization and vendor-specific implementations, we provide a detailed review of the potential implementation scenarios and necessary amendments to legacy cellular networks from the perspective of telecom vendors and regulatory bodies. Next, we analyze applications of artificial intelligence (AI) and machine learning (ML) techniques for facilitating spectrum sharing mechanisms and leveraging the full potential of autonomous sharing scenarios. Finally, we conclude the paper by presenting open research challenges, which aim to provide insights into prospective research endeavors.

Toward a Smart Resource Allocation Policy via Artificial Intelligence in 6G Networks: Centralized or Decentralized?

Feb 18, 2022

Abstract: In this paper, we design a new smart software-defined radio access network (RAN) architecture with important properties such as flexibility and traffic awareness for sixth generation (6G) wireless networks. In particular, we consider a hierarchical resource allocation framework for the proposed smart soft-RAN model, where the software-defined network (SDN) controller is the first and foremost layer of the framework. This unit dynamically monitors the network and selects a network operation type, based on either distributed or centralized resource allocation architectures, to perform decision-making intelligently. Our aim is to make the network more scalable and more flexible in terms of achievable data rate, overhead, and complexity indicators. To this end, we introduce a new metric, throughput-overhead-complexity (TOC), for the proposed machine learning-based algorithm, which makes a trade-off between these performance indicators. In particular, the TOC-based decision-making problem is solved via deep reinforcement learning (DRL), which determines an appropriate resource allocation policy. Furthermore, for the selected algorithm, we employ the soft actor-critic method, which is more accurate, scalable, and robust than other learning methods. Simulation results demonstrate that the proposed smart network achieves better performance in terms of TOC than fixed centralized or distributed resource management schemes that lack dynamism. Moreover, our proposed algorithm outperforms conventional learning methods employed in other state-of-the-art network designs.

Multi Agent Reinforcement Learning Trajectory Design and Two-Stage Resource Management in CoMP UAV VLC Networks

Dec 03, 2021

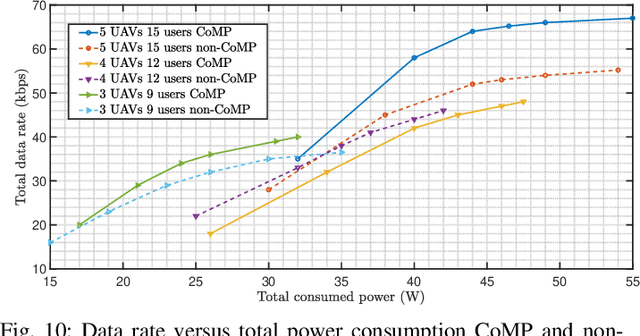

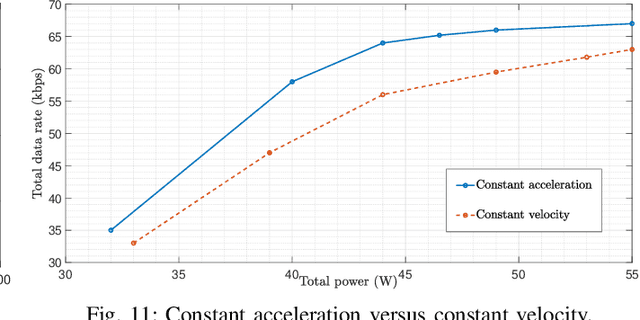

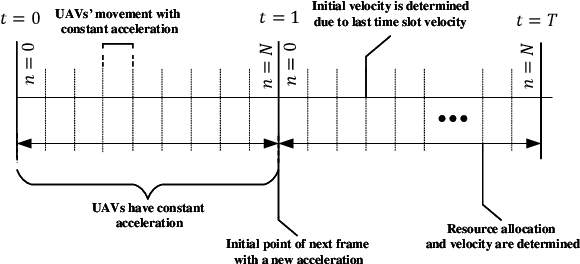

Abstract: In this paper, we consider unmanned aerial vehicles (UAVs) equipped with a visible light communication (VLC) access point and coordinated multipoint (CoMP) capability that allows users to connect to more than one UAV. The UAVs can move in three-dimensional (3D) space at a constant acceleration, and a central server is responsible for synchronization and cooperation among them. The effect of accelerated UAV motion must be taken into account. Unlike most existing works, we examine the effects of variable speed on kinetics and radio resource allocation. For the proposed system model, we define two different time scales, frames and slots: in each frame, the acceleration of each UAV is specified, and in each slot, radio resources are allocated. Our goal is to formulate a multiobjective optimization problem in which the total data rate is maximized and the total communication power consumption is minimized simultaneously. To handle this multiobjective optimization, we first apply the scalarization method and then apply multi-agent deep deterministic policy gradient (MADDPG). We improve this solution method by adding two critic networks together with two-stage resource allocation. Simulation results indicate that constant-acceleration motion of the UAVs performs about 8% better than conventional motion systems.
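As a rough illustration of the scalarization step mentioned in this abstract, the sketch below collapses the two objectives (maximize rate, minimize power) into a single score with a weighted sum. The weighted-sum form, the function names, and the candidate values are assumptions for illustration; the abstract does not specify which scalarization the authors use.

```python
def scalarize(rate, power, weight):
    """Weighted-sum scalarization: reward rate, penalize power.

    weight in [0, 1] sets the priority of rate relative to power.
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    return weight * rate - (1.0 - weight) * power


def best_candidate(candidates, weight):
    """Pick the candidate allocation with the highest scalarized score."""
    return max(candidates, key=lambda c: scalarize(c["rate"], c["power"], weight))


# Hypothetical allocations (arbitrary, already normalized units).
CANDIDATES = [
    {"name": "low_power", "rate": 2.0, "power": 0.5},
    {"name": "balanced", "rate": 5.0, "power": 2.0},
    {"name": "high_rate", "rate": 6.0, "power": 4.0},
]

if __name__ == "__main__":
    for w in (0.0, 0.5, 1.0):
        print(w, best_candidate(CANDIDATES, w)["name"])
```

Sweeping the weight traces out different trade-off points; in a learning-based solver the scalarized score would typically serve as the reward signal.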

Learning based E2E Energy Efficient in Joint Radio and NFV Resource Allocation for 5G and Beyond Networks

Jul 13, 2021

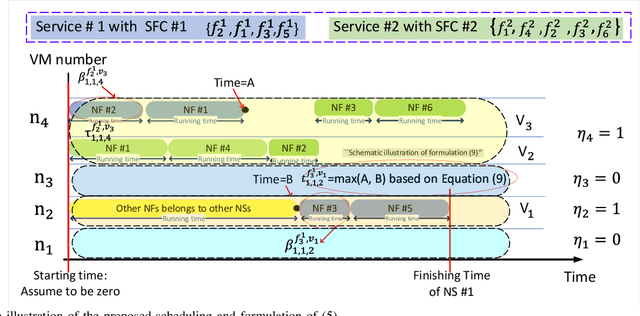

Abstract: In this paper, we propose a joint radio and core resource allocation framework for network function virtualization (NFV)-enabled networks. In the proposed system model, the goal is to maximize energy efficiency (EE) while guaranteeing end-to-end (E2E) quality of service (QoS) for different service types. To this end, we formulate an optimization problem in which power and spectrum resources are allocated in the radio part. In the core part, the chaining, placement, and scheduling of functions are performed to ensure the QoS of all users. This joint optimization problem is modeled as a Markov decision process (MDP), considering the time-varying characteristics of the available resources and wireless channels. A soft actor-critic deep reinforcement learning (SAC-DRL) algorithm based on the maximum entropy framework is subsequently utilized to solve this MDP. Numerical results reveal that the proposed joint approach based on the SAC-DRL algorithm can significantly reduce energy consumption compared to the case in which the radio resource allocation (R-RA) and NFV resource allocation (NFV-RA) problems are optimized separately.
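For readers unfamiliar with the maximum entropy framework that SAC builds on, the standard objective from the general SAC literature is sketched below. The notation ($\rho_\pi$ for the state-action distribution under policy $\pi$, $\alpha$ for the temperature, $\mathcal{H}$ for entropy) follows common usage and is not taken from this paper.

```latex
J(\pi) = \sum_{t=0}^{T} \mathbb{E}_{(s_t, a_t) \sim \rho_\pi}
  \left[ r(s_t, a_t) + \alpha \, \mathcal{H}\big(\pi(\cdot \mid s_t)\big) \right]
```

The entropy term rewards exploratory policies, and the temperature $\alpha$ controls how strongly exploration is weighed against the reward.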

AI-Based and Mobility-Aware Energy Efficient Resource Allocation and Trajectory Design for NFV Enabled Aerial Networks

May 21, 2021

Abstract: In this paper, we propose a novel joint intelligent trajectory design and resource allocation algorithm based on users' mobility and their requested services for unmanned aerial vehicle (UAV)-assisted networks, where the UAVs act as nodes of a network function virtualization (NFV)-enabled network. Our objective is to maximize energy efficiency and minimize the average delay over all services by allocating the limited radio and NFV resources. In addition, due to the traffic conditions and the mobility of users, we allow some virtual network functions (VNFs) to migrate from their current locations to other locations to satisfy the quality of service requirements. We formulate our problem to find near-optimal UAV locations, transmit power, subcarrier assignment, and placement and scheduling of the requested services' functions over the UAVs, and to perform suitable VNF migration. We then propose a novel Hierarchical Hybrid Continuous and Discrete Action (HHCDA) deep reinforcement learning method to solve our problem. Finally, the convergence and computational complexity of the proposed algorithm are analyzed, along with its performance for different parameters. Simulation results show that our proposed HHCDA method decreases the request reject rate and average delay by 31.5% and 20%, respectively, and increases the energy efficiency by 40% compared to the deep deterministic policy gradient (DDPG) method.

AoI-Aware Resource Allocation for Platoon-Based C-V2X Networks via Multi-Agent Multi-Task Reinforcement Learning

May 10, 2021

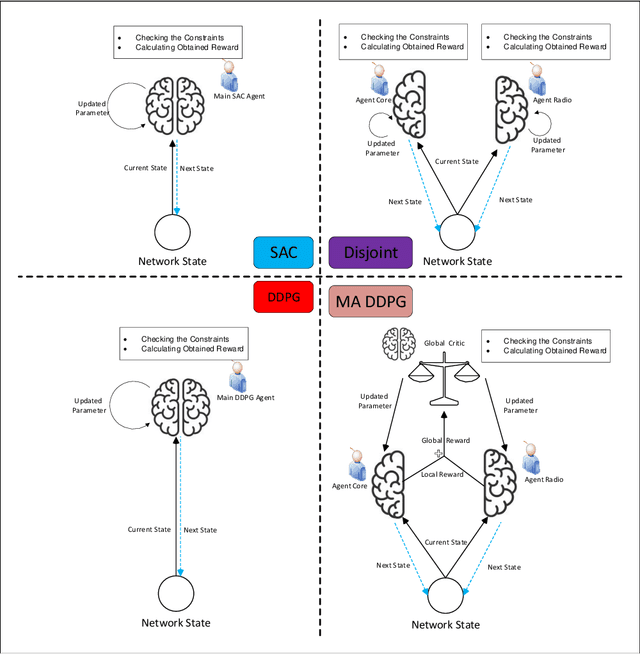

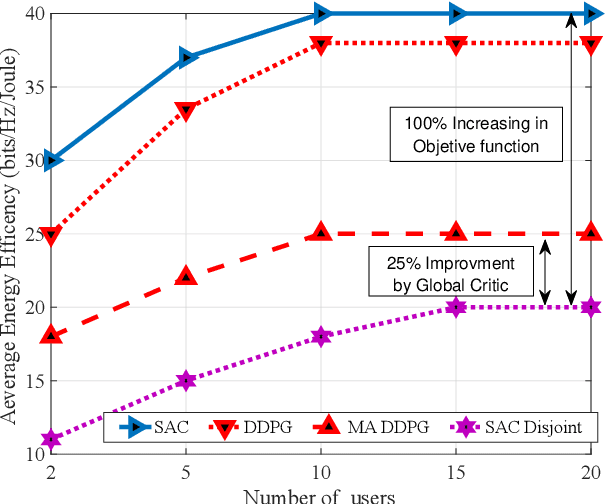

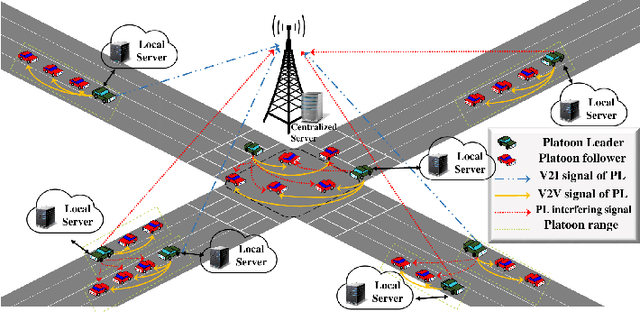

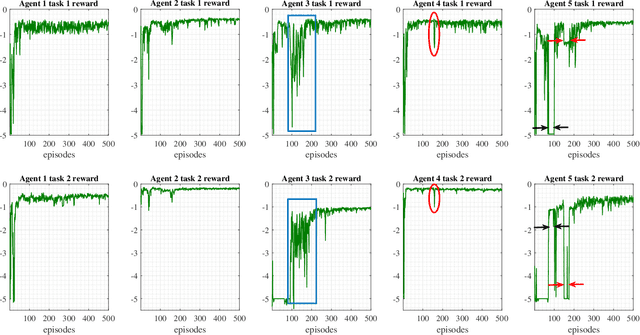

Abstract: This paper investigates the problem of age of information (AoI) aware radio resource management for a platooning system. Multiple autonomous platoons exploit the cellular vehicle-to-everything (C-V2X) communication technology to disseminate cooperative awareness messages (CAMs) to their followers while ensuring timely delivery of safety-critical messages to the road-side unit (RSU). Due to the challenges of dynamic channel conditions, centralized resource management schemes that require global information are inefficient and lead to large signaling overheads. Hence, we exploit a distributed resource allocation framework based on multi-agent reinforcement learning (MARL), where each platoon leader (PL) acts as an agent and interacts with the environment to learn its optimal policy. Existing MARL algorithms consider a holistic reward function for the group's collective success, which often yields unsatisfactory results and cannot guarantee an optimal policy for each agent. Consequently, motivated by the existing literature in RL, we propose a novel MARL framework that trains two critics: a global critic, which estimates the global expected reward and motivates the agents toward cooperative behavior, and an exclusive local critic for each agent, which estimates the local individual reward. Furthermore, based on the tasks each agent has to accomplish, the individual reward of each agent is decomposed into multiple sub-reward functions, and task-wise value functions are learned separately. Numerical results indicate the effectiveness of our proposed algorithm compared with the conventional RL methods applied in this area.

Robust Energy-Efficient Resource Management, SIC Ordering, and Beamforming Design for MC MISO-NOMA Enabled 6G

Jan 17, 2021

Abstract: This paper studies a novel approach for successive interference cancellation (SIC) ordering and beamforming in a multiple-antenna non-orthogonal multiple access (NOMA) network with a multi-carrier multi-user setup. To this end, we formulate a joint beamforming design, subcarrier allocation, user association, and SIC ordering problem to maximize the worst-case energy efficiency (EE). The formulated problem is a non-convex mixed integer non-linear program (MINLP), which is generally difficult to solve. To handle it, we first adopt a linearization technique and relax the integer variables, and then we employ the Dinkelbach algorithm to convert the problem into a more mathematically tractable form. The resulting non-convex optimization problem is transformed into an equivalent rank-constrained semidefinite program (SDP) and is solved by SDP relaxation and sequential fractional programming. Furthermore, to strike a balance between complexity and performance, a low-complexity approach based on alternating optimization is adopted. Numerical results show that the proposed SIC ordering method outperforms existing approaches in the literature.
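The Dinkelbach algorithm named in this abstract turns a ratio objective such as energy efficiency into a sequence of subtractive problems. The sketch below applies it to a toy single-link problem over a finite grid of transmit powers. The channel gain, circuit power, power grid, and the use of exhaustive search for the inner problem are all illustrative assumptions and do not reflect the paper's actual formulation.

```python
import math


def dinkelbach(candidates, numerator, denominator, tol=1e-9, max_iter=100):
    """Maximize numerator(x) / denominator(x) over a finite candidate set.

    Each iteration solves max_x numerator(x) - lam * denominator(x) and then
    updates lam to the ratio achieved by the maximizer. Assumes denominator > 0.
    """
    lam = 0.0
    best = candidates[0]
    for _ in range(max_iter):
        # Inner (parametric) problem, solved here by exhaustive search.
        best = max(candidates, key=lambda x: numerator(x) - lam * denominator(x))
        gap = numerator(best) - lam * denominator(best)
        if abs(gap) < tol:
            break  # lam is (numerically) the optimal ratio
        lam = numerator(best) / denominator(best)
    return best, lam


# Toy energy-efficiency instance (all values hypothetical).
CHANNEL_GAIN = 4.0
CIRCUIT_POWER = 1.0
POWERS = [0.1 * k for k in range(1, 51)]


def rate(p):
    """Achievable rate in bit/s/Hz for transmit power p."""
    return math.log2(1.0 + CHANNEL_GAIN * p)


def consumed(p):
    """Total consumed power: transmit power plus fixed circuit power."""
    return p + CIRCUIT_POWER


if __name__ == "__main__":
    p_star, ee_star = dinkelbach(POWERS, rate, consumed)
    print(p_star, ee_star)
```

In the setting the abstract describes, the inner problem would be the relaxed beamforming and allocation problem rather than a grid search; only the outer ratio update is the same.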
