Abolfazl Zakeri

Student Member, IEEE

Constrained Multimodal Sensing-Aided Communications: A Dynamic Beamforming Design

May 15, 2025

Abstract: Using multimodal sensory data can enhance communications systems by reducing the overhead and latency in beam training. However, processing such data incurs high computational complexity, and continuous sensing results in significant power and bandwidth consumption. This gives rise to a tradeoff between the (multimodal) sensing data acquisition rate and communications performance. In this work, we develop a constrained multimodal sensing-aided communications framework where dynamic sensing and beamforming are performed under a sensing budget. Specifically, we formulate an optimization problem that maximizes the average received signal-to-noise ratio (SNR) of user equipment, subject to constraints on the average number of sensing actions and the power budget. Using the Saleh-Valenzuela mmWave channel model, we construct the channel primarily based on position information obtained via multimodal sensing. Stricter sensing constraints reduce the availability of position data, leading to degraded channel estimation and thus lower performance. We apply Lyapunov optimization to solve the problem and derive a dynamic sensing and beamforming algorithm. Numerical evaluations on the DeepSense and Raymobtime datasets show that halving the sensing times leads to a loss of at most 7.7% in average SNR.
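As a rough illustration of how Lyapunov optimization can enforce an average sensing budget, the following minimal sketch applies the drift-plus-penalty idea to a toy per-slot decision: sense or skip. It is not the algorithm from the paper. The function name, the per-slot `gains` values (a stand-in for the SNR improvement from sensing), and the parameter `V` are all assumptions made for illustration.

```python
def drift_plus_penalty_sensing(gains, budget, V):
    """Toy drift-plus-penalty rule for a sense/skip decision per slot.

    gains[t]: hypothetical SNR gain obtained if we sense in slot t.
    budget:   allowed long-run average fraction of slots with sensing.
    V:        weight trading SNR gain against budget violation.
    """
    Q = 0.0  # virtual queue: accumulated excess over the sensing budget
    actions = []
    for g in gains:
        # Per-slot problem: maximize V*g*a - Q*a over a in {0, 1}.
        a = 1 if V * g > Q else 0
        actions.append(a)
        # Virtual queue update: grows when we sense, drains at rate `budget`.
        Q = max(Q + a - budget, 0.0)
    return actions


# Hypothetical gain trace with values in [0, 0.9].
gains = [((7 * t) % 10) / 10 for t in range(1000)]
actions = drift_plus_penalty_sensing(gains, budget=0.5, V=10.0)
print("fraction of slots with sensing:", sum(actions) / len(actions))
```

Because the virtual queue stays bounded, the fraction of sensing slots exceeds the budget by a term that vanishes as the horizon grows. A larger `V` favors SNR at the price of a slower approach to the budget.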

Dynamic Joint Communications and Sensing Precoding Design: A Lyapunov Approach

Mar 18, 2025

Abstract: This letter proposes a dynamic joint communications and sensing (JCAS) framework to adaptively design dedicated sensing and communications precoders. We first formulate a stochastic control problem to maximize the long-term average signal-to-noise ratio for sensing, subject to a minimum average communications signal-to-interference-plus-noise ratio requirement and a power budget. Using Lyapunov optimization, specifically the drift-plus-penalty method, we cast the problem into a sequence of per-slot non-convex problems. To solve these problems, we develop a successive convex approximation method. Additionally, we derive a closed-form solution to the per-slot problems based on the notion of zero-forcing. Numerical evaluations demonstrate the efficacy of the proposed methods and highlight their superiority compared to a baseline method based on conventional design.

Goal-Oriented Remote Tracking Through Correlated Observations in Pull-based Communications

Mar 17, 2025

Abstract: We address the real-time remote tracking problem in a status update system comprising two sensors, two independent information sources, and a remote monitor. The status updating follows pull-based communication, where the monitor commands/pulls the sensors for status updates, i.e., the actual state of the sources. We consider that the observations are correlated, meaning that the data sent by each sensor could also include the state of the other source due to, e.g., inter-sensor communication or proximity-based monitoring. The effectiveness of data communication is measured by a generic distortion, capturing the underlying application goal. We provide optimal command/pulling policies for the monitor that minimize the average weighted sum of distortion and transmission cost. Since the monitor cannot fully observe the exact state of each source, we propose a partially observable Markov decision process (POMDP) and reformulate it as a belief MDP problem. We then effectively truncate the infinite belief space and transform it into a finite-state MDP problem, which is solved via relative value iteration. Simulation results show the effectiveness of the derived policy over the age-optimal and max-age-first baseline policies.
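For readers unfamiliar with relative value iteration, the sketch below shows the basic recursion for an average-cost finite-state MDP. It is a generic textbook-style version, not the authors' implementation. The two-state example (state 1 incurs unit distortion, pulling costs 0.3 and resets the state to 0) is a hypothetical toy, not the model from the paper.

```python
def relative_value_iteration(P, c, iters=1000, tol=1e-9):
    """Generic relative value iteration for an average-cost MDP.

    P[a][s][s2]: probability of moving from s to s2 under action a.
    c[s][a]:     per-step cost of taking action a in state s.
    Returns (average-cost estimate, relative values h, greedy policy).
    """
    n, num_actions = len(c), len(P)
    h = [0.0] * n
    gain = 0.0
    for _ in range(iters):
        # Q-values under the current relative value function.
        q = [[c[s][a] + sum(P[a][s][s2] * h[s2] for s2 in range(n))
              for a in range(num_actions)] for s in range(n)]
        Th = [min(row) for row in q]
        gain = Th[0]                     # state 0 is the reference state
        new_h = [v - gain for v in Th]   # subtract to keep values bounded
        diff = max(abs(x - y) for x, y in zip(new_h, h))
        h = new_h
        if diff < tol:
            break
    policy = [min(range(num_actions), key=lambda a: q[s][a]) for s in range(n)]
    return gain, h, policy


# Hypothetical toy: action 0 = idle, action 1 = pull.
P = [
    [[0.0, 1.0], [0.0, 1.0]],  # idle: the state drifts to (or stays at) 1
    [[1.0, 0.0], [1.0, 0.0]],  # pull: the state resets to 0
]
c = [[0.0, 0.3], [1.0, 1.3]]   # distortion (= state) plus 0.3 per pull
gain, h, policy = relative_value_iteration(P, c)
print(gain, policy)
```

Subtracting the value at a fixed reference state in every sweep is what distinguishes this from plain value iteration: the iterates stay bounded, and the subtracted offset converges to the optimal average cost.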

Semantic-aware Sampling and Transmission in Energy Harvesting Systems: A POMDP Approach

Nov 11, 2023

Abstract: We study a real-time tracking problem in an energy harvesting system with a Markov source under an imperfect channel. We consider both sampling and transmission costs. Unlike most prior studies, which assume the source is fully observable, the sampling cost here renders the source unobservable. The goal is to jointly optimize sampling and transmission policies for three semantic-aware metrics: i) the age of information (AoI), ii) general distortion, and iii) the age of incorrect information (AoII). To this end, we formulate and solve a stochastic control problem. Specifically, for the AoI metric, we cast a Markov decision process (MDP) problem and solve it using relative value iteration (RVI). For the distortion and AoII metrics, we utilize partially observable MDP (POMDP) modeling and leverage the belief MDP formulation of the POMDP to find optimal policies. For the distortion metric and the AoII metric under the perfect channel setup, we effectively truncate the corresponding belief space and solve an MDP problem using RVI. For the general setup, a deep reinforcement learning policy is proposed. Through simulations, we demonstrate significant performance improvements achieved by the derived policies. The results reveal various switching-type structures of optimal policies and show that a distortion-optimal policy is also AoII optimal.

Optimal Semantic-aware Sampling and Transmission in Energy Harvesting Systems Through the AoII

Apr 03, 2023

Abstract: We study a real-time tracking problem in an energy harvesting status update system with a Markov source under both sampling and transmission costs. The problem's primary challenge stems from the non-observability of the source due to the sampling cost. By using the age of incorrect information (AoII) as a semantic-aware performance metric, our main goal is to find an optimal policy that minimizes the time average AoII subject to an energy-causality constraint. To this end, a stochastic optimization problem is formulated and solved by modeling it as a partially observable Markov decision process. More specifically, to solve the problem, we use the notion of belief state and by characterizing the belief space, we cast the main problem as an MDP whose cost function is a non-linear function of the age of information (AoI) and solve it via relative value iteration. Simulation results show the effectiveness of the derived policy, with a double-threshold structure on the battery levels and AoI.
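The belief-state idea can be illustrated with a minimal sketch. While the Markov source is not sampled, the belief over its state is propagated through the transition matrix, and a (perfect) sample collapses the belief onto the observed state. The function names and the two-state transition matrix are assumptions for illustration and are not taken from the paper.

```python
def propagate_belief(belief, P):
    """One-step belief prediction for an unobserved Markov source.

    belief[i]: probability that the source is in state i.
    P[i][j]:   probability of moving from state i to state j.
    """
    n = len(belief)
    return [sum(belief[i] * P[i][j] for i in range(n)) for j in range(n)]


def collapse_belief(observed_state, n):
    """Belief right after a perfect sample: all mass on the observed state."""
    return [1.0 if s == observed_state else 0.0 for s in range(n)]


# Hypothetical two-state source.
P = [[0.9, 0.1],
     [0.2, 0.8]]
b = collapse_belief(0, 2)   # the source was just sampled in state 0
for _ in range(3):          # three slots without sampling
    b = propagate_belief(b, P)
    print(b)
```

Under these assumptions the belief depends only on the last observed state and the number of slots since it was observed, which is one way to see why such a problem can be recast as an MDP over an age-like variable.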

Query-Age-Optimal Scheduling under Sampling and Transmission Constraints

Sep 23, 2022

Abstract: This letter provides query-age-optimal joint sampling and transmission scheduling policies for a heterogeneous status update system, consisting of a stochastic arrival and a generate-at-will source, with an unreliable channel. Our main goal is to minimize the average query age of information (QAoI) subject to average sampling, average transmission, and per-slot transmission constraints. To this end, an optimization problem is formulated and solved by casting it into a linear program. We also provide a low-complexity near-optimal policy using the notion of weakly coupled constrained Markov decision processes. The numerical results show up to 32% performance improvement by the proposed policies compared with a benchmark policy.

Dynamic Scheduling for Minimizing AoI in Resource-Constrained Multi-Source Relaying Systems with Stochastic Arrivals

Mar 10, 2022

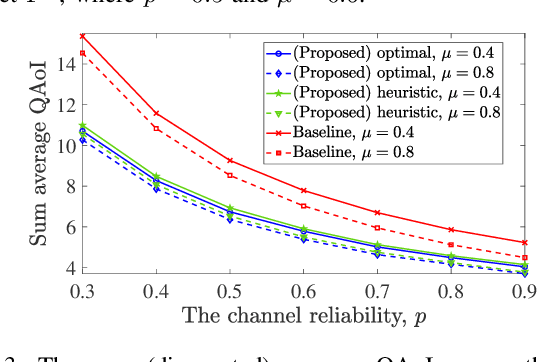

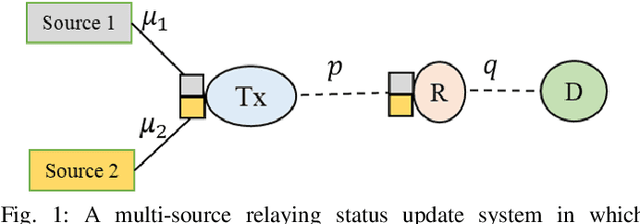

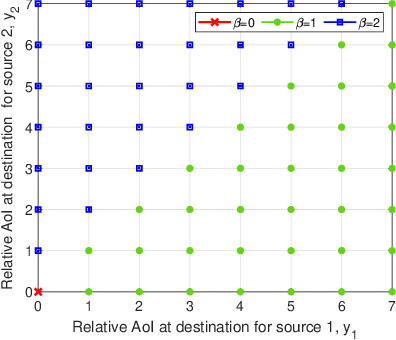

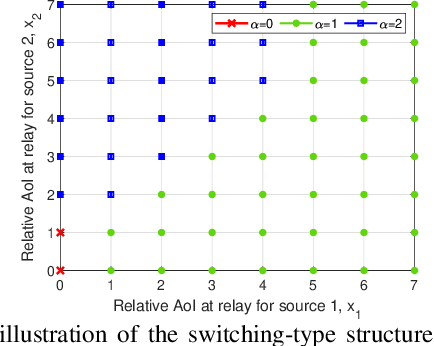

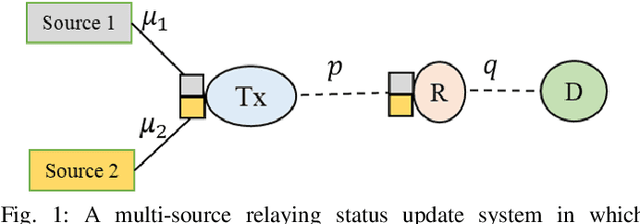

Abstract: We consider a multi-source relaying system where the independent sources randomly generate status update packets which are sent to the destination with the aid of a relay through unreliable links. We develop scheduling policies to minimize the sum average age of information (AoI) subject to transmission capacity and long-run average resource constraints. We formulate a stochastic optimization problem and solve it under two different scenarios regarding the knowledge of system statistics: known environment and unknown environment. For the known environment, a constrained Markov decision process (CMDP) approach and a drift-plus-penalty method are proposed. The CMDP problem is solved by transforming it into an MDP problem using the Lagrangian relaxation method. We theoretically analyze the structure of optimal policies for the MDP problem and subsequently propose a structure-aware algorithm that returns a practical near-optimal policy. By the drift-plus-penalty method, we devise a dynamic near-optimal low-complexity policy. For the unknown environment, we develop a deep reinforcement learning policy by employing the Lyapunov optimization theory and a dueling double deep Q-network. Simulation results are provided to assess the performance of our policies and validate the theoretical results. The results show up to 91% performance improvement compared to a baseline policy.

Minimizing AoI in Resource-Constrained Multi-Source Relaying Systems with Stochastic Arrivals

Sep 10, 2021

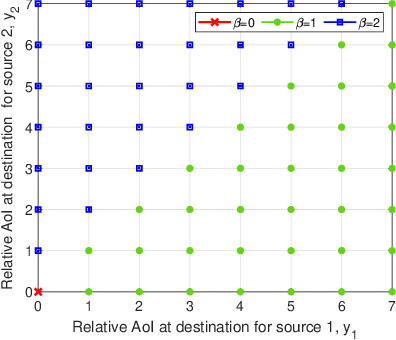

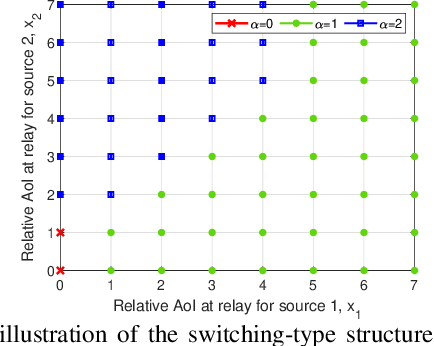

Abstract: We consider a multi-source relaying system where the sources independently and randomly generate status update packets which are sent to the destination with the aid of a buffer-aided relay through unreliable links. We formulate a stochastic optimization problem aiming to minimize the sum average age of information (AAoI) of sources under per-slot transmission capacity constraints and a long-run average resource constraint. To solve the problem, we recast it as a constrained Markov decision process (CMDP) problem and adopt the Lagrangian method. We analyze the structure of an optimal policy for the resulting MDP problem that possesses a switching-type structure. We propose an algorithm that obtains a stationary deterministic near-optimal policy, establishing a benchmark for the system. Simulation results show the effectiveness of our algorithm compared to benchmark algorithms.

Robust Energy-Efficient Resource Management, SIC Ordering, and Beamforming Design for MC MISO-NOMA Enabled 6G

Jan 17, 2021

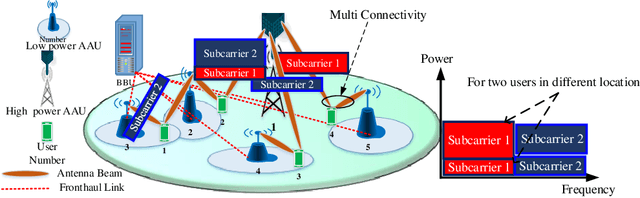

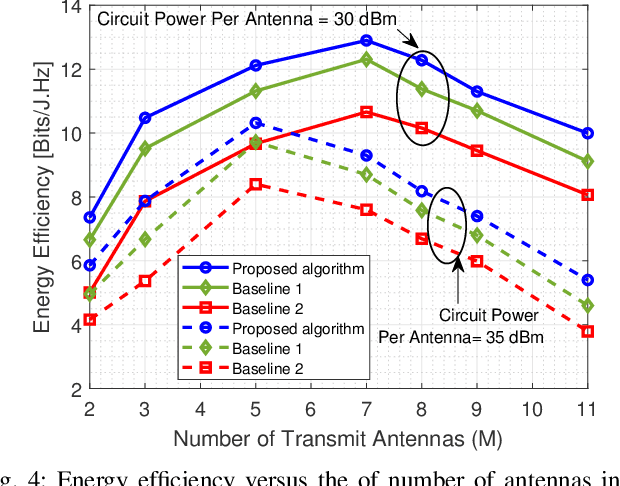

Abstract: This paper studies a novel approach for successive interference cancellation (SIC) ordering and beamforming in a multiple-antenna non-orthogonal multiple access (NOMA) network with a multi-carrier multi-user setup. To this end, we formulate a joint beamforming design, subcarrier allocation, user association, and SIC ordering problem to maximize the worst-case energy efficiency (EE). The formulated problem is a non-convex mixed-integer non-linear program (MINLP), which is generally difficult to solve. To handle it, we first adopt a linearization technique and relax the integer variables, and then employ the Dinkelbach algorithm to convert it into a more mathematically tractable form. The resulting non-convex optimization problem is transformed into an equivalent rank-constrained semidefinite program (SDP) and is solved by SDP relaxation and sequential fractional programming. Furthermore, to strike a balance between complexity and performance, a low-complexity approach based on alternating optimization is adopted. Numerical results show that the proposed SIC ordering method outperforms conventional existing works in the literature.
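The role of the Dinkelbach algorithm in energy-efficiency problems can be sketched on a toy example. A ratio such as rate over power is maximized by repeatedly solving the subtractive problem max f(x) - λ g(x) and updating λ to the ratio just achieved. The version below searches a finite candidate grid and uses a hypothetical single-link rate and power model; it is far simpler than the joint design in the paper and is meant only to show the iteration.

```python
import math


def dinkelbach(candidates, f, g, tol=1e-9, max_iter=100):
    """Maximize f(x)/g(x) over a finite candidate set (g must be positive).

    Each iteration solves max_x f(x) - lam*g(x), then sets lam to the
    ratio achieved by that maximizer. Stops when the maximum is ~0.
    """
    lam = 0.0
    best = candidates[0]
    for _ in range(max_iter):
        best = max(candidates, key=lambda x: f(x) - lam * g(x))
        if f(best) - lam * g(best) < tol:
            break
        lam = f(best) / g(best)
    return best, lam


# Hypothetical model: transmit power p, channel gain 4, circuit power 0.5.
def rate(p):
    return math.log2(1.0 + 4.0 * p)


def power(p):
    return p + 0.5


powers = [0.1 * k for k in range(1, 51)]
p_star, ee = dinkelbach(powers, rate, power)
print("power:", p_star, "energy efficiency:", ee)
```

In a full design the inner maximization is itself an optimization problem (for example, an SDP) rather than a grid search, but the outer update of λ follows the same pattern.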
