Marian Codreanu

Goal-Oriented Remote Tracking Through Correlated Observations in Pull-based Communications

Mar 17, 2025

Abstract:We address the real-time remote tracking problem in a status update system comprising two sensors, two independent information sources, and a remote monitor. The status updating follows a pull-based communication, where the monitor commands/pulls the sensors for status updates, i.e., the actual state of the sources. We consider that the observations are correlated, meaning that the data sent by each sensor could also include the state of the other source due to, e.g., inter-sensor communication or proximity-based monitoring. The effectiveness of data communication is measured by a generic distortion, capturing the underlying application goal. We provide optimal command/pulling policies for the monitor that minimize the average weighted sum distortion and transmission cost. Since the monitor cannot fully observe the exact state of each source, we propose a partially observable Markov decision process (POMDP) and reformulate it as a belief MDP problem. We then effectively truncate the infinite belief space and transform it into a finite-state MDP problem, which is solved via relative value iteration. Simulation results show the effectiveness of the derived policy over the age-optimal and max-age-first baseline policies.
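The belief-MDP reformulation mentioned in this abstract can be illustrated with a minimal sketch. It assumes a single finite-state Markov source with a known transition matrix `P` and an error-free observation whenever an update arrives; the paper's two-source setting with correlated observations is not modeled here, and the names `predict_belief` and `update_belief` are hypothetical.

```python
from typing import List, Optional


def predict_belief(belief: List[float], P: List[List[float]]) -> List[float]:
    """Advance the belief by one slot through the transition matrix (b' = b P)."""
    n = len(belief)
    return [sum(belief[i] * P[i][j] for i in range(n)) for j in range(n)]


def update_belief(
    belief: List[float],
    P: List[List[float]],
    observed_state: Optional[int] = None,
) -> List[float]:
    """Belief after one slot.

    Assumption: a received update reveals the current state exactly, so the
    belief collapses to a one-hot vector; without an update the monitor can
    only propagate its previous belief through P.
    """
    if observed_state is not None:
        return [1.0 if j == observed_state else 0.0 for j in range(len(belief))]
    return predict_belief(belief, P)


# Hypothetical two-state source, last observed in state 0.
P = [[0.9, 0.1], [0.2, 0.8]]
b = [1.0, 0.0]
b = update_belief(b, P)                     # no pull: uncertainty grows
b = update_belief(b, P)                     # no pull again
b = update_belief(b, P, observed_state=1)   # pull: belief collapses
```

Because the belief between two observations depends only on the last observed state and the number of slots since then, the reachable beliefs form a countable set, which is what makes a truncation to a finite-state MDP plausible.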

Semantic-aware Sampling and Transmission in Energy Harvesting Systems: A POMDP Approach

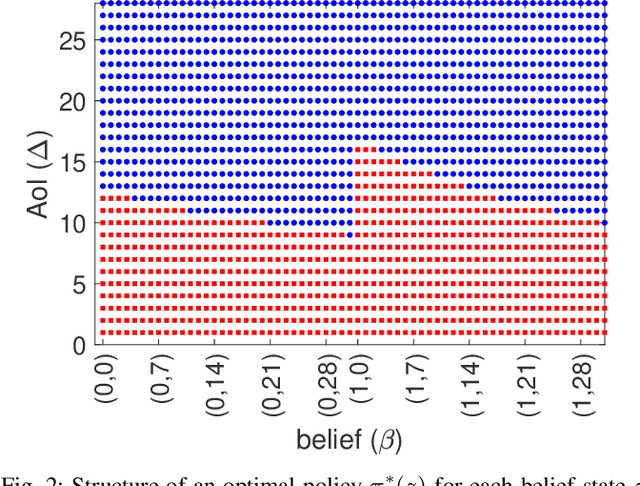

Nov 11, 2023

Abstract:We study a real-time tracking problem in an energy harvesting system with a Markov source under an imperfect channel. We consider both sampling and transmission costs. In contrast to most prior studies, which assume that the source is fully observable, here the sampling cost renders the source unobservable. The goal is to jointly optimize sampling and transmission policies for three semantic-aware metrics: i) the age of information (AoI), ii) general distortion, and iii) the age of incorrect information (AoII). To this end, we formulate and solve a stochastic control problem. Specifically, for the AoI metric, we cast a Markov decision process (MDP) problem and solve it using relative value iteration (RVI). For the distortion and AoII metrics, we utilize the partially observable MDP (POMDP) modeling and leverage the notion of belief MDP formulation of POMDP to find optimal policies. For the distortion metric and the AoII metric under the perfect channel setup, we effectively truncate the corresponding belief space and solve an MDP problem using RVI. For the general setup, a deep reinforcement learning policy is proposed. Through simulations, we demonstrate significant performance improvements achieved by the derived policies. The results reveal various switching-type structures of optimal policies and show that a distortion-optimal policy is also AoII optimal.
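Relative value iteration, as used here for the AoI metric, can be sketched on a toy average-cost MDP. The model below is an assumption made for illustration only: AoI truncated at `max_age`, two actions (idle or transmit), a fixed transmission cost and a fixed success probability, with no battery dynamics. The names `relative_value_iteration` and `build_aoi_mdp` are hypothetical and this is not the paper's model.

```python
from typing import List, Tuple


def relative_value_iteration(
    cost: List[List[float]],
    trans: List[List[List[float]]],
    tol: float = 1e-9,
    max_iter: int = 100_000,
) -> Tuple[float, List[int]]:
    """Average-cost RVI. cost[s][a] is the stage cost, trans[a][s][t] = P(t | s, a).

    Returns the estimated optimal average cost (gain) and a greedy policy.
    State 0 serves as the reference state whose relative value is pinned to 0.
    """
    n_states, n_actions = len(cost), len(cost[0])
    h = [0.0] * n_states
    gain = 0.0
    for _ in range(max_iter):
        # Bellman backup using the current relative values h.
        q = [[cost[s][a] + sum(p * h[t] for t, p in enumerate(trans[a][s]))
              for a in range(n_actions)] for s in range(n_states)]
        th = [min(row) for row in q]
        diff = [th[s] - h[s] for s in range(n_states)]
        gain = th[0] - h[0]
        h = [v - th[0] for v in th]          # subtract the reference value
        if max(diff) - min(diff) < tol:      # span stopping criterion
            break
    policy = [min(range(n_actions), key=lambda a: q[s][a]) for s in range(n_states)]
    return gain, policy


def build_aoi_mdp(max_age: int, tx_cost: float, p_success: float):
    """Toy AoI model: state index s means AoI = s + 1; action 0 idles, action 1 transmits."""
    cost = [[float(age), age + tx_cost] for age in range(1, max_age + 1)]
    idle = [[0.0] * max_age for _ in range(max_age)]
    send = [[0.0] * max_age for _ in range(max_age)]
    for s in range(max_age):
        nxt = min(s + 1, max_age - 1)        # AoI grows by one, capped at max_age
        idle[s][nxt] = 1.0
        send[s][0] += p_success              # successful delivery resets AoI to 1
        send[s][nxt] += 1.0 - p_success
    return cost, [idle, send]


cost, trans = build_aoi_mdp(max_age=10, tx_cost=2.0, p_success=0.7)
gain, policy = relative_value_iteration(cost, trans)
print(gain, policy)
```

Pinning one reference state keeps the iterates bounded, which plain value iteration does not do in the average-cost setting.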

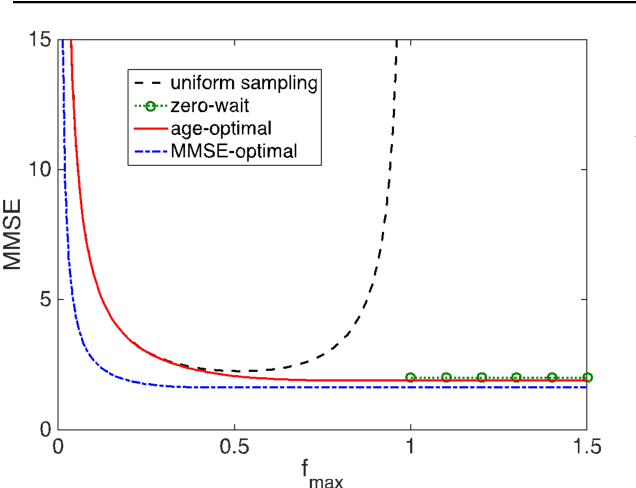

Optimal Semantic-aware Sampling and Transmission in Energy Harvesting Systems Through the AoII

Apr 03, 2023

Abstract:We study a real-time tracking problem in an energy harvesting status update system with a Markov source under both sampling and transmission costs. The problem's primary challenge stems from the non-observability of the source due to the sampling cost. By using the age of incorrect information (AoII) as a semantic-aware performance metric, our main goal is to find an optimal policy that minimizes the time average AoII subject to an energy-causality constraint. To this end, a stochastic optimization problem is formulated and solved by modeling it as a partially observable Markov decision process. More specifically, to solve the problem, we use the notion of belief state and by characterizing the belief space, we cast the main problem as an MDP whose cost function is a non-linear function of the age of information (AoI) and solve it via relative value iteration. Simulation results show the effectiveness of the derived policy, with a double-threshold structure on the battery levels and AoI.
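To see why a cost expressed through the belief becomes a non-linear function of the AoI, consider a simpler quantity than the paper's actual cost: the probability that the source differs from the last received sample. The sketch below assumes a symmetric binary Markov source with flip probability `p_flip` (an assumption, not stated in the abstract); both function names are hypothetical.

```python
def mismatch_probability(p_flip: float, aoi: int) -> float:
    """Closed form for a symmetric binary Markov source.

    Probability that the current state differs from the state sampled
    `aoi` slots ago: (1 - (1 - 2p)^aoi) / 2.
    """
    return 0.5 * (1.0 - (1.0 - 2.0 * p_flip) ** aoi)


def mismatch_by_iteration(p_flip: float, aoi: int) -> float:
    """Same quantity via slot-by-slot belief propagation, as a cross-check."""
    q = 0.0  # probability of mismatch right after sampling
    for _ in range(aoi):
        q = q * (1.0 - p_flip) + (1.0 - q) * p_flip
    return q


for age in range(6):
    print(age, mismatch_probability(0.1, age), mismatch_by_iteration(0.1, age))
```

The mismatch probability saturates at 1/2 as the AoI grows, so it is concave rather than linear in the age.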

Query-Age-Optimal Scheduling under Sampling and Transmission Constraints

Sep 23, 2022

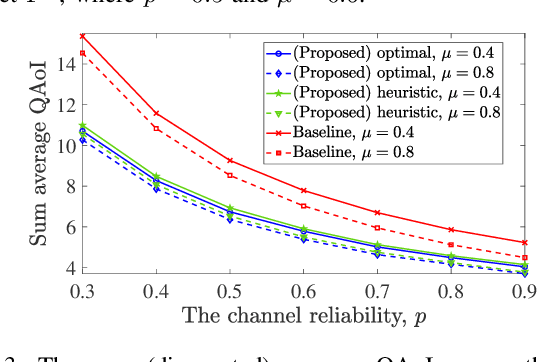

Abstract:This letter provides query-age-optimal joint sampling and transmission scheduling policies for a heterogeneous status update system, consisting of a stochastic arrival and a generate-at-will source, with an unreliable channel. Our main goal is to minimize the average query age of information (QAoI) subject to average sampling, average transmission, and per-slot transmission constraints. To this end, an optimization problem is formulated and solved by casting it into a linear program. We also provide a low-complexity near-optimal policy using the notion of weakly coupled constrained Markov decision processes. The numerical results show up to 32% performance improvement by the proposed policies compared with a benchmark policy.

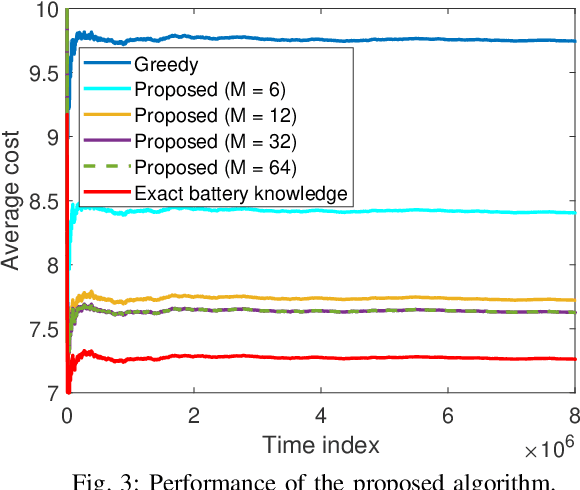

Status Updating with an Energy Harvesting Sensor under Partial Battery Knowledge

Apr 12, 2022

Abstract:We consider status updating under inexact knowledge of the battery level of an energy harvesting (EH) sensor that sends status updates about a random process to users via a cache-enabled edge node. More precisely, the control decisions are performed by relying only on the battery level knowledge captured from the last received status update packet. Upon receiving on-demand requests for fresh information from the users, the edge node uses the available information to decide whether to command the sensor to send a status update or to retrieve the most recently received measurement from the cache. We seek the best actions of the edge node to minimize the average AoI of the served measurements, i.e., average on-demand AoI. Accounting for the partial battery knowledge, we model the problem as a partially observable Markov decision process (POMDP), and, through characterizing its key structures, develop a dynamic programming algorithm to obtain an optimal policy. Simulation results illustrate the threshold-based structure of an optimal policy and show the gains obtained by the proposed optimal POMDP-based policy compared to a request-aware greedy (myopic) policy.

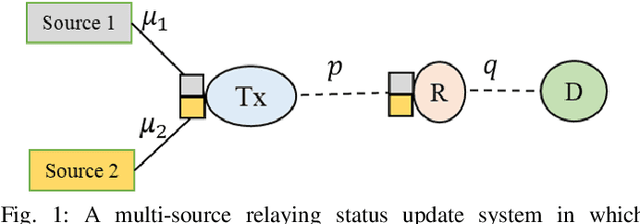

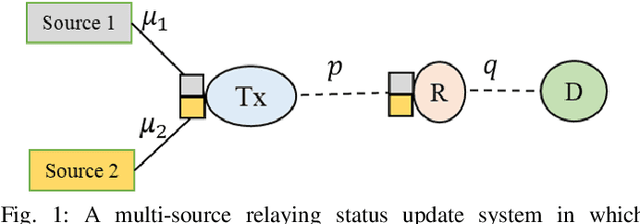

Dynamic Scheduling for Minimizing AoI in Resource-Constrained Multi-Source Relaying Systems with Stochastic Arrivals

Mar 10, 2022

Abstract:We consider a multi-source relaying system where the independent sources randomly generate status update packets which are sent to the destination with the aid of a relay through unreliable links. We develop scheduling policies to minimize the sum average age of information (AoI) subject to transmission capacity and long-run average resource constraints. We formulate a stochastic optimization problem and solve it under two different scenarios regarding the knowledge of system statistics: known environment and unknown environment. For the known environment, a constrained Markov decision process (CMDP) approach and a drift-plus-penalty method are proposed. The CMDP problem is solved by transforming it into an MDP problem using the Lagrangian relaxation method. We theoretically analyze the structure of optimal policies for the MDP problem and subsequently propose a structure-aware algorithm that returns a practical near-optimal policy. By the drift-plus-penalty method, we devise a dynamic near-optimal low-complexity policy. For the unknown environment, we develop a deep reinforcement learning policy by employing the Lyapunov optimization theory and a dueling double deep Q-network. Simulation results are provided to assess the performance of our policies and validate the theoretical results. The results show up to 91% performance improvement compared to a baseline policy.
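The drift-plus-penalty idea named in this abstract can be sketched for a single source with one long-run average transmission budget. Everything below is a simplified assumption for illustration (one source, no relay, a known success probability, hypothetical names `dpp_action` and `update_virtual_queue`); it is not the policy derived in the paper.

```python
def dpp_action(age: int, queue: float, v: float,
               tx_cost: float = 1.0, p_success: float = 0.8) -> int:
    """Per-slot drift-plus-penalty decision: 1 = transmit, 0 = idle.

    Minimizes V * E[next AoI] + Q * (resource used). The budget term of the
    drift is the same for both actions, so it is dropped from the comparison.
    """
    idle_score = v * (age + 1)
    expected_age_if_sent = p_success * 1 + (1.0 - p_success) * (age + 1)
    send_score = v * expected_age_if_sent + queue * tx_cost
    return 1 if send_score < idle_score else 0


def update_virtual_queue(queue: float, used: float, budget: float) -> float:
    """Virtual queue tracking the backlog of the average resource constraint."""
    return max(queue + used - budget, 0.0)


# Deterministic walk-through that pretends every transmission succeeds.
age, queue, budget = 1, 0.0, 0.3
for slot in range(10):
    action = dpp_action(age, queue, v=1.0)
    queue = update_virtual_queue(queue, used=float(action), budget=budget)
    age = 1 if action == 1 else age + 1
    print(slot, action, age, round(queue, 2))
```

The weight `v` trades age performance against how tightly the average budget is tracked: a larger `v` favors low AoI at the price of a longer virtual queue.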

On-Demand AoI Minimization in Resource-Constrained Cache-Enabled IoT Networks with Energy Harvesting Sensors

Jan 28, 2022

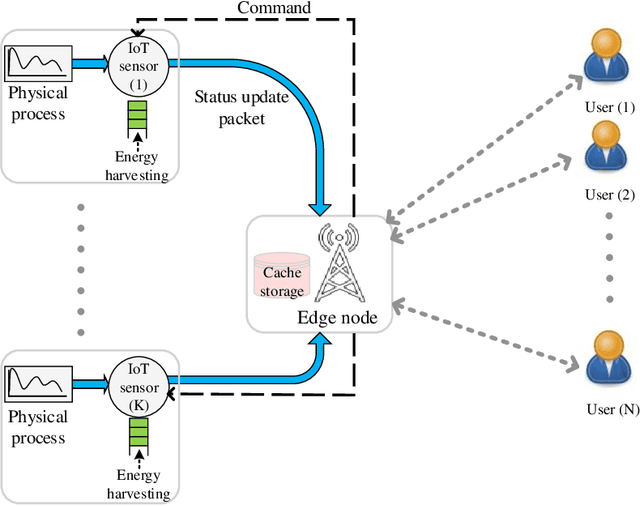

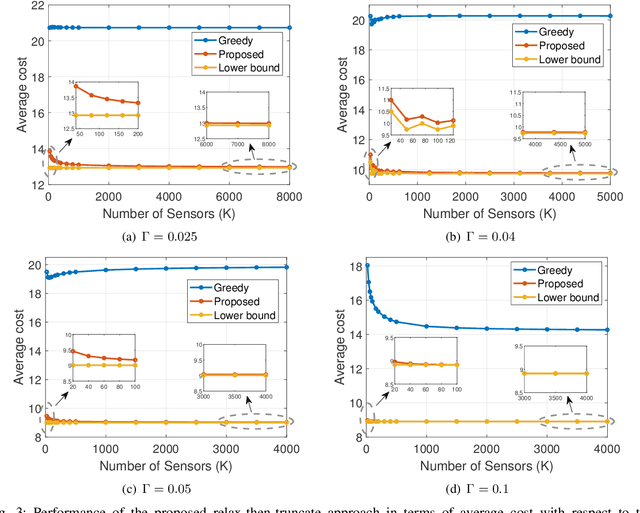

Abstract:We consider a resource-constrained IoT network, where multiple users make on-demand requests to a cache-enabled edge node to send status updates about various random processes, each monitored by an energy harvesting sensor. The edge node serves users' requests by deciding whether to command the corresponding sensor to send a fresh status update or retrieve the most recently received measurement from the cache. Our objective is to find the best actions of the edge node to minimize the average age of information (AoI) of the received measurements upon request, i.e., average on-demand AoI, subject to per-slot transmission and energy constraints. First, we derive a Markov decision process model and propose an iterative algorithm that obtains an optimal policy. Then, we develop an asymptotically optimal low-complexity algorithm -- termed relax-then-truncate -- and prove that it is optimal as the number of sensors goes to infinity. Simulation results illustrate that the proposed relax-then-truncate approach significantly reduces the average on-demand AoI compared to a request-aware greedy (myopic) policy and show that it performs close to the optimal solution even for moderate numbers of sensors.

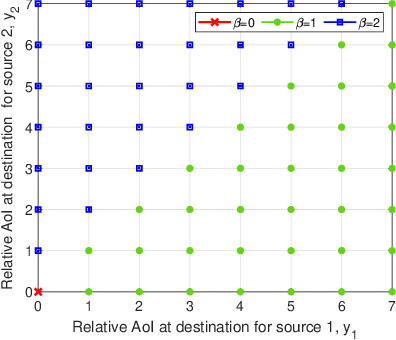

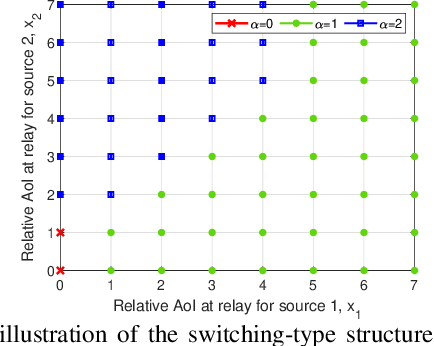

Minimizing AoI in Resource-Constrained Multi-Source Relaying Systems with Stochastic Arrivals

Sep 10, 2021

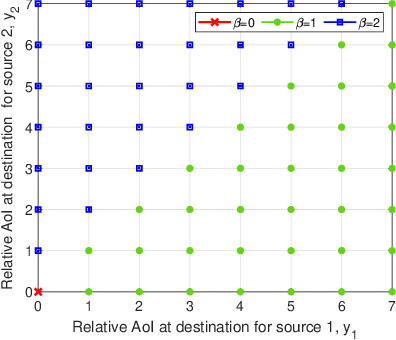

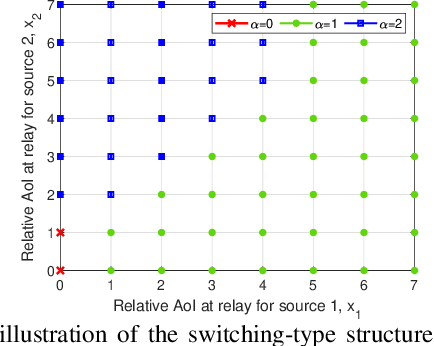

Abstract:We consider a multi-source relaying system where the sources independently and randomly generate status update packets which are sent to the destination with the aid of a buffer-aided relay through unreliable links. We formulate a stochastic optimization problem aiming to minimize the sum average age of information (AAoI) of sources under per-slot transmission capacity constraints and a long-run average resource constraint. To solve the problem, we recast it as a constrained Markov decision process (CMDP) problem and adopt the Lagrangian method. We analyze the structure of an optimal policy for the resulting MDP problem that possesses a switching-type structure. We propose an algorithm that obtains a stationary deterministic near-optimal policy, establishing a benchmark for the system. Simulation results show the effectiveness of our algorithm compared to benchmark algorithms.

Semantic Communications in Networked Systems

Mar 09, 2021

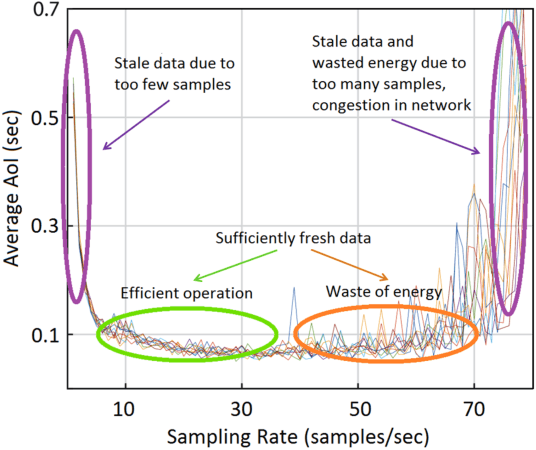

Abstract:We present our vision for a departure from the established way of architecting and assessing communication networks, by incorporating the semantics of information for communications and control in networked systems. We define semantics of information, not as the meaning of the messages, but as their significance, possibly within a real time constraint, relative to the purpose of the data exchange. We argue that research efforts must focus on laying the theoretical foundations of a redesign of the entire process of information generation, transmission and usage in unison by developing: advanced semantic metrics for communications and control systems; an optimal sampling theory combining signal sparsity and semantics, for real-time prediction, reconstruction and control under communication constraints and delays; semantic compressed sensing techniques for decision making and inference directly in the compressed domain; semantic-aware data generation, channel coding, feedback, multiple and random access schemes that reduce the volume of data and the energy consumption, increasing the number of supportable devices.

Age-Aware Status Update Control for Energy Harvesting IoT Sensors via Reinforcement Learning

Apr 27, 2020

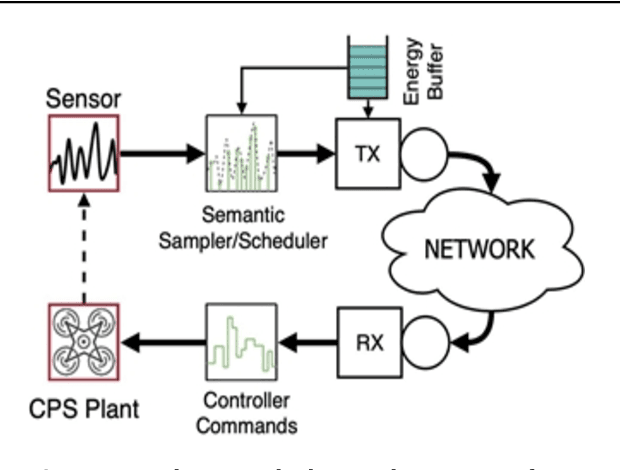

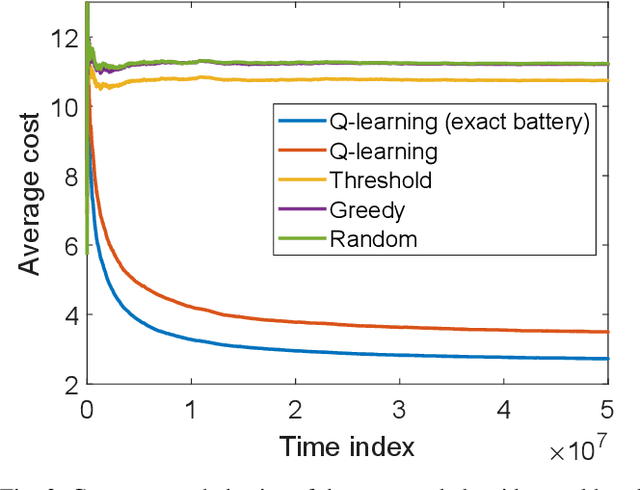

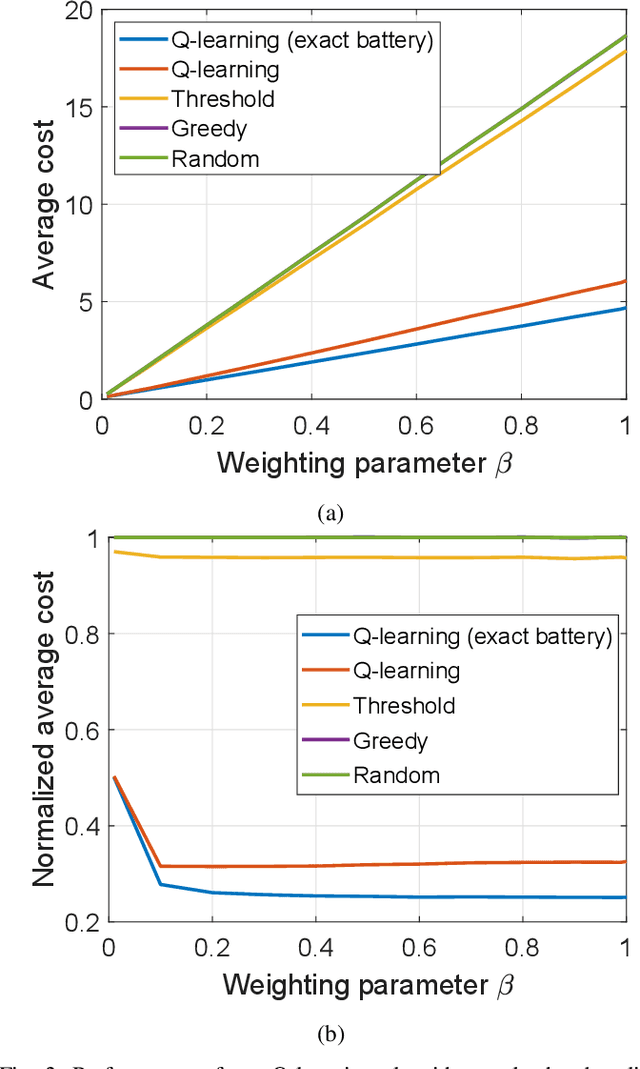

Abstract:We consider an IoT sensing network with multiple users, multiple energy harvesting sensors, and a wireless edge node acting as a gateway between the users and sensors. The users request updates about the value of physical processes, each of which is measured by one sensor. The edge node has a cache storage that stores the most recently received measurements from each sensor. Upon receiving a request, the edge node can either command the corresponding sensor to send a status update, or use the data in the cache. We aim to find the best action of the edge node to minimize the average long-term cost, which trades off the age of information against energy consumption. We propose a practical reinforcement learning approach that finds an optimal policy without knowing the exact battery levels of the sensors.
