Mohamad Assaad

Energy-Efficient Quantized Federated Learning for Resource-constrained IoT devices

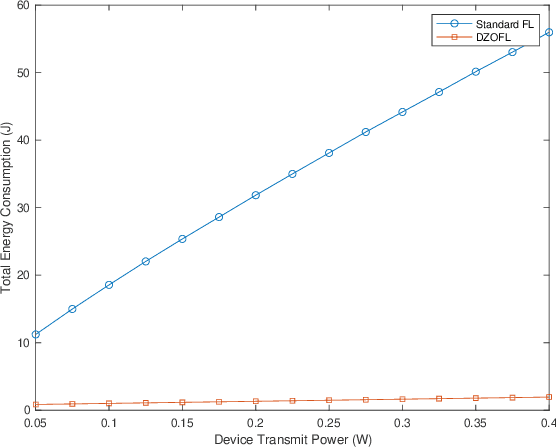

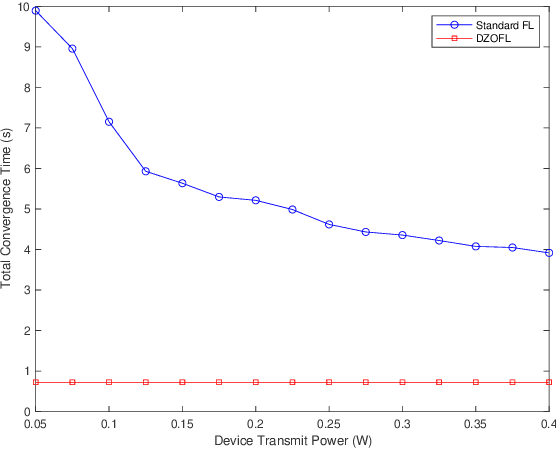

Sep 16, 2025

Abstract:Federated Learning (FL) has emerged as a promising paradigm for enabling collaborative machine learning while preserving data privacy, making it particularly suitable for Internet of Things (IoT) environments. However, resource-constrained IoT devices face significant challenges due to limited energy, unreliable communication channels, and the impracticality of assuming infinite blocklength transmission. This paper proposes a federated learning framework for IoT networks that integrates finite blocklength transmission, model quantization, and an error-aware aggregation mechanism to enhance energy efficiency and communication reliability. The framework also optimizes uplink transmission power to balance energy savings and model performance. Simulation results demonstrate that the proposed approach reduces energy consumption by up to 75% compared to a standard FL model, while maintaining robust model accuracy, making it a viable solution for FL in real-world IoT scenarios with constrained resources. This work paves the way for efficient and reliable FL implementations in practical IoT deployments. Index Terms: Federated learning, IoT, finite blocklength, quantization, energy efficiency.
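The abstract above names model quantization as one ingredient but does not specify the quantizer. As a rough illustration only, the sketch below shows a generic uniform quantizer with stochastic rounding, a common choice in quantized FL. The function name `quantize_uniform`, the per-tensor min/max range, and the bit-width parameter are all assumptions for this example and are not taken from the paper.

```python
import numpy as np

def quantize_uniform(weights, num_bits, rng=None):
    """Stochastically round `weights` onto a uniform grid of 2**num_bits levels.

    Hypothetical helper for illustration; not the paper's quantizer.
    """
    rng = np.random.default_rng() if rng is None else rng
    w = np.asarray(weights, dtype=np.float64)
    lo, hi = w.min(), w.max()
    if hi == lo:
        # A constant tensor needs no quantization.
        return w.copy()
    levels = 2 ** num_bits - 1          # number of grid intervals
    step = (hi - lo) / levels           # spacing between grid points
    scaled = (w - lo) / step            # position measured in grid units
    floor = np.floor(scaled)
    # Round up with probability equal to the fractional part, which makes
    # the quantizer unbiased in expectation.
    round_up = rng.random(w.shape) < (scaled - floor)
    idx = np.clip(floor + round_up, 0, levels)
    return lo + idx * step
```

With `num_bits` bits per entry, a device would transmit the grid indices plus the two range endpoints instead of full-precision values; the quantization error per entry is bounded by one grid step.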

Communication-Efficient Zero-Order and First-Order Federated Learning Methods over Wireless Networks

Aug 11, 2025

Abstract:Federated Learning (FL) is an emerging learning framework that enables edge devices to collaboratively train ML models without sharing their local data. However, FL faces a significant challenge: the large amount of information that must be exchanged between the devices and the aggregator during training can exceed the limited capacity of wireless systems. In this paper, two communication-efficient FL methods are considered in which communication overhead is reduced by communicating scalar values instead of long vectors and by allowing a large number of users to send information simultaneously. The first approach employs a zero-order optimization technique with a two-point gradient estimator, while the second involves a first-order gradient computation strategy. The novelty lies in leveraging channel information in the learning algorithms, which eliminates the need for additional resources to acquire channel state information (CSI) and remove its impact, and in considering asynchronous devices. We provide a rigorous analytical framework for the two methods, deriving convergence guarantees and establishing appropriate performance bounds.
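For readers unfamiliar with two-point zero-order estimation, the following minimal sketch shows the textbook form: the gradient is approximated from two function evaluations along a random direction, so the only data-dependent quantity is a single scalar. This is a generic illustration, not the paper's wireless algorithm; the names `two_point_gradient` and `zo_descent`, the Gaussian direction, and the step sizes are assumptions.

```python
import numpy as np

def two_point_gradient(f, x, mu, rng):
    """Estimate grad f(x) from two evaluations along a random direction.

    Generic textbook estimator (assumed form), not the paper's method.
    """
    u = rng.standard_normal(x.shape)
    # Central finite difference along u: one scalar per estimate.
    scalar = (f(x + mu * u) - f(x - mu * u)) / (2.0 * mu)
    return scalar * u

def zo_descent(f, x0, step=0.05, mu=1e-3, iters=2000, seed=0):
    """Plain descent loop driven by the two-point estimate."""
    rng = np.random.default_rng(seed)
    x = np.array(x0, dtype=np.float64)
    for _ in range(iters):
        x -= step * two_point_gradient(f, x, mu, rng)
    return x

if __name__ == "__main__":
    # Toy quadratic with minimizer at the all-ones vector.
    f = lambda x: float(np.sum((x - 1.0) ** 2))
    print(zo_descent(f, np.zeros(5)))
```

If the random direction can be regenerated on both sides (for example from a shared seed, which is an assumption here), only the scalar difference needs to be communicated, which is the intuition behind sending scalars instead of long vectors.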

Large-Scale AI in Telecom: Charting the Roadmap for Innovation, Scalability, and Enhanced Digital Experiences

Mar 06, 2025

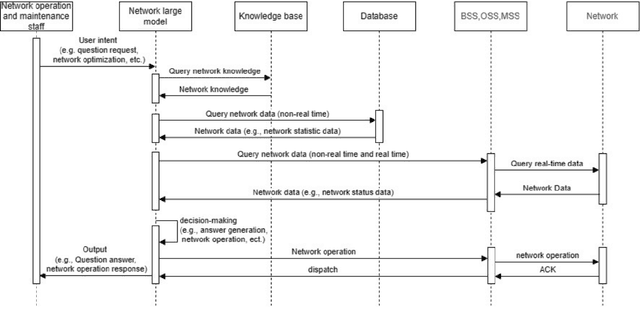

Abstract:This white paper discusses the role of large-scale AI in the telecommunications industry, with a specific focus on the potential of generative AI to revolutionize network functions and user experiences, especially in the context of 6G systems. It highlights the development and deployment of Large Telecom Models (LTMs), which are tailored AI models designed to address the complex challenges faced by modern telecom networks. The paper covers a wide range of topics, from the architecture and deployment strategies of LTMs to their applications in network management, resource allocation, and optimization. It also explores the regulatory, ethical, and standardization considerations for LTMs, offering insights into their future integration into telecom infrastructure. The goal is to provide a comprehensive roadmap for the adoption of LTMs to enhance scalability, performance, and user-centric innovation in telecom networks.

Inter-RIS Beam Focusing Codebook Design in Cooperative Distributed RIS Systems

Nov 08, 2024

Abstract:This paper explores distributed Reconfigurable Intelligent Surfaces (RISs) by introducing a cooperative dimension that enhances adaptability and performance. It focuses on strategically deploying multiple RISs to improve Line-of-Sight (LoS) connectivity with the Base Station (BS) and among RISs, thereby aiding users in areas with weak BS coverage and enhancing spatial multiplexing gain. Each RIS can act as a main RIS (mRIS) to directly support users or as an intermediate RIS (iRIS) to reflect signals to another mRIS. This dual functionality allows for flexible responses to changing conditions. We implement an inter-RIS signal focusing design for phase shifts, creating a tailored codebook for precise control over signal direction. This design considers the interplay of incidence and reflection angles to maximize reflected signal power, based on the RIS response function and the physical properties of the RIS elements.

Single Point-Based Distributed Zeroth-Order Optimization with a Non-Convex Stochastic Objective Function

Oct 08, 2024

Abstract:Zero-order (ZO) optimization is a powerful tool for dealing with realistic constraints. On the other hand, the gradient-tracking (GT) technique proved to be an efficient method for distributed optimization aiming to achieve consensus. However, it is a first-order (FO) method that requires knowledge of the gradient, which is not always possible in practice. In this work, we introduce a zero-order distributed optimization method based on a one-point estimate of the gradient tracking technique. We prove that this new technique converges with a single noisy function query at a time in the non-convex setting. We then establish a convergence rate of $O(\frac{1}{\sqrt[3]{K}})$ after a number of iterations $K$, which competes with that of $O(\frac{1}{\sqrt[4]{K}})$ of its centralized counterparts. Finally, a numerical example validates our theoretical results.

* In this version, we slightly modify the proof of Theorem 3.7 in the original publication. We remove the expectation in the proof that was added in error. The original publication can be found at: https://proceedings.mlr.press/v202/mhanna23a.html

Communication and Energy Efficient Federated Learning using Zero-Order Optimization Technique

Sep 24, 2024

Abstract:Federated learning (FL) is a popular machine learning technique that enables multiple users to collaboratively train a model while preserving user data privacy. A significant challenge in FL is the communication bottleneck in the upload direction, and the corresponding device energy consumption, caused by the increasing size of the model/gradient. In this paper, we address this issue by proposing a zero-order (ZO) optimization method that requires each device to upload a single quantized scalar per iteration instead of the whole gradient vector. We prove its theoretical convergence and find an upper bound on its convergence rate in the non-convex setting, and we discuss its implementation in practical scenarios. Our FL method and the corresponding convergence analysis take into account the impact of quantization and packet dropping due to wireless errors. We also show the superiority of our method, in terms of communication overhead and energy consumption, over standard gradient-based FL methods.

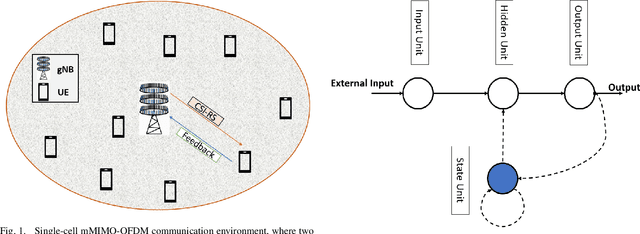

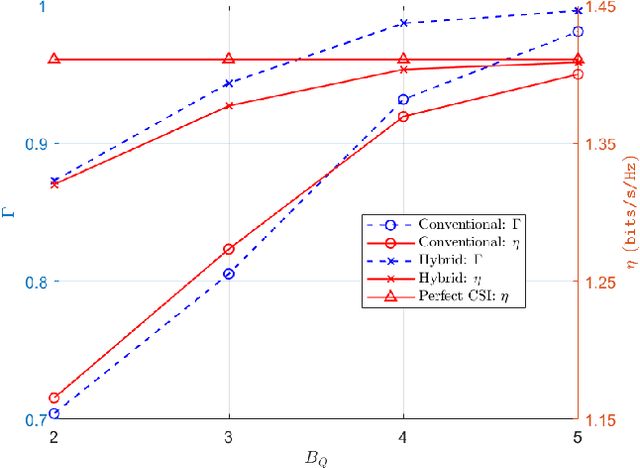

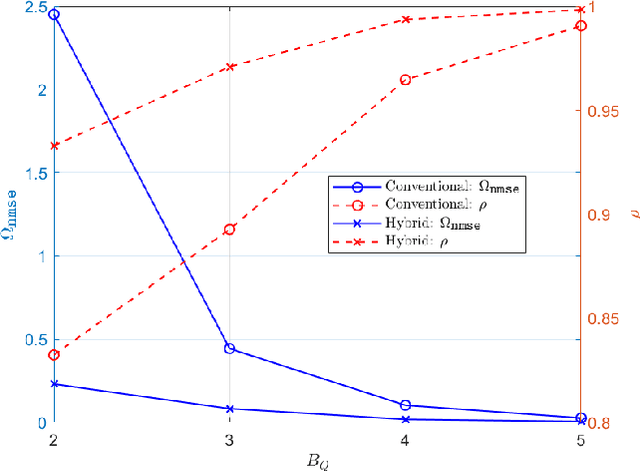

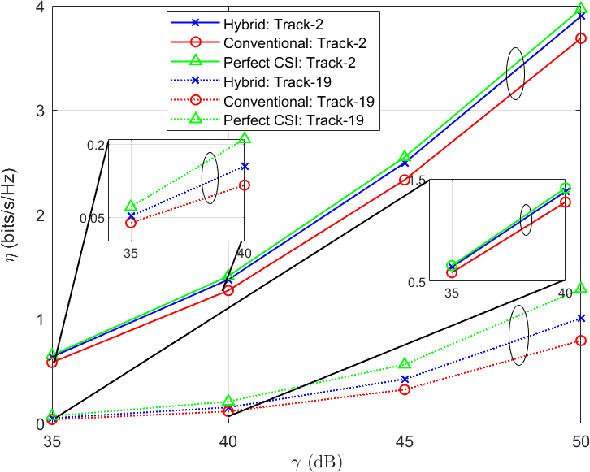

Massive MIMO CSI Feedback using Channel Prediction: How to Avoid Machine Learning at UE?

Mar 20, 2024

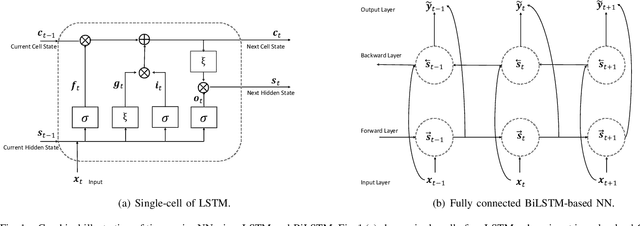

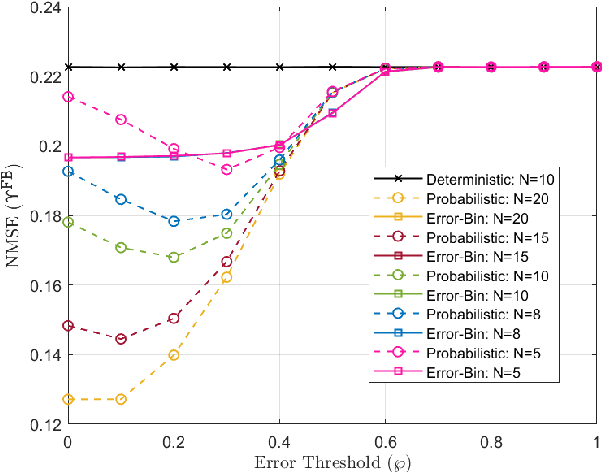

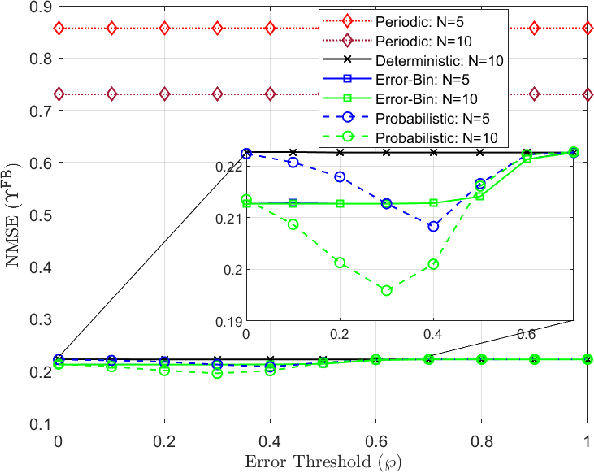

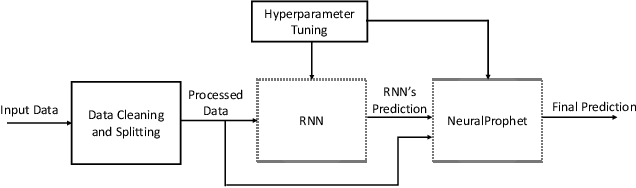

Abstract:In the literature, machine learning (ML) has been implemented at the base station (BS) and user equipment (UE) to improve the precision of downlink channel state information (CSI). However, ML implementation at the UE can be infeasible for various reasons, such as UE power consumption. Motivated by this issue, we propose a CSI learning mechanism at the BS, called CSILaBS, to avoid ML at the UE. To this end, by exploiting a channel predictor (CP) at the BS, a lightweight predictor function (PF) is considered for feedback evaluation at the UE. CSILaBS reduces over-the-air feedback overhead, improves CSI quality, and lowers the computation cost at the UE. Besides, in a multiuser environment, we propose various mechanisms to select the feedback by exploiting the PF while aiming to improve CSI accuracy. We also address various ML-based CPs, such as NeuralProphet (NP), an ML-inspired statistical algorithm. Furthermore, to combine a statistical model with ML, we propose a novel hybrid framework composed of a recurrent neural network and NP, which yields better prediction accuracy than the individual models. The performance of CSILaBS is evaluated through an empirical dataset recorded at Nokia Bell-Labs. The results show that eliminating ML at the UE can retain performance gains, for example in precoding quality.

* 14 pages, 11 figures

Rendering Wireless Environments Useful for Gradient Estimators: A Zero-Order Stochastic Federated Learning Method

Jan 30, 2024

Abstract:Federated learning (FL) is a novel approach to machine learning that allows multiple edge devices to collaboratively train a model without disclosing their raw data. However, several challenges hinder the practical implementation of this approach, especially when devices and the server communicate over wireless channels, as it suffers from communication and computation bottlenecks in this case. By utilizing a communication-efficient framework, we propose a novel zero-order (ZO) method with a one-point gradient estimator that harnesses the nature of the wireless communication channel without requiring the knowledge of the channel state coefficient. It is the first method that includes the wireless channel in the learning algorithm itself instead of wasting resources to analyze it and remove its impact. The two main difficulties of this work are that in FL, the objective function is usually not convex, which makes the extension of FL to ZO methods challenging, and that including the impact of wireless channels requires extra attention. However, we overcome these difficulties and comprehensively analyze the proposed zero-order federated learning (ZOFL) framework. We establish its convergence theoretically, and we prove a convergence rate of $O(\frac{1}{\sqrt[3]{K}})$ in the nonconvex setting. We further demonstrate the potential of our algorithm with experimental results, taking into account independent and identically distributed (IID) and non-IID device data distributions.
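To make the term "one-point gradient estimator" concrete, the sketch below shows the standard Gaussian-smoothing form, which uses a single function query per estimate. It is a generic illustration under stated assumptions: it does not model the wireless channel that the paper folds into the estimator, and the name `one_point_gradient` and the smoothing parameter `mu` are hypothetical.

```python
import numpy as np

def one_point_gradient(f, x, mu, rng):
    """Single-query gradient estimate: f(x + mu*u) * u / mu.

    With u drawn from a standard normal distribution, the expectation of
    this estimate equals the gradient of a Gaussian-smoothed version of f.
    Generic textbook form (assumed), not the paper's channel-aware estimator.
    """
    u = rng.standard_normal(x.shape)
    return f(x + mu * u) * u / mu

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    a = np.array([1.0, -2.0])
    f = lambda y: float(a @ y)          # linear toy objective, gradient is a
    x = np.array([1.0, 0.0])
    samples = [one_point_gradient(f, x, 1.0, rng) for _ in range(50000)]
    print(np.mean(samples, axis=0))     # sample mean of the estimates
```

A single estimate is very noisy because the function value itself multiplies the random direction, so many iterations are needed for the noise to average out. This is consistent with the slower rates quoted for one-point methods compared with two-point or first-order methods.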

Zero-Order One-Point Estimate with Distributed Stochastic Gradient-Tracking Technique

Oct 11, 2022

Abstract:In this work, we consider a distributed multi-agent stochastic optimization problem, where each agent holds a local objective function that is smooth and convex, and that is subject to a stochastic process. The goal is for all agents to collaborate to find a common solution that optimizes the sum of these local functions. With the practical assumption that agents can only obtain noisy numerical function queries at exactly one point at a time, we extend the distributed stochastic gradient-tracking method to the bandit setting where we don't have an estimate of the gradient, and we introduce a zero-order (ZO) one-point estimate (1P-DSGT). We analyze the convergence of this novel technique for smooth and convex objectives using stochastic approximation tools, and we prove that it converges almost surely to the optimum. We then study the convergence rate for when the objectives are additionally strongly convex. We obtain a rate of $O(\frac{1}{\sqrt{k}})$ after a sufficient number of iterations $k > K_2$ which is usually optimal for techniques utilizing one-point estimators. We also provide a regret bound of $O(\sqrt{k})$, which is exceptionally good compared to the aforementioned techniques. We further illustrate the usefulness of the proposed technique using numerical experiments.

Design of an Efficient CSI Feedback Mechanism in Massive MIMO Systems: A Machine Learning Approach using Empirical Data

Aug 25, 2022

Abstract:The massive multiple-input multiple-output (mMIMO) regime reaps the benefits of spatial diversity and multiplexing gains, subject to precise channel state information (CSI) acquisition. In the current communication architecture, the downlink CSI is estimated by the user equipment (UE) via dedicated pilots and then fed back to the gNodeB (gNB). The feedback information is compressed with the goal of reducing over-the-air overhead. This compression increases the inaccuracy of the acquired CSI, thus degrading the overall spectral efficiency. This paper proposes a computationally inexpensive machine learning (ML)-based CSI feedback algorithm, which exploits twin channel predictors. The proposed approach can work for both time-division duplex (TDD) and frequency-division duplex (FDD) systems, and it reduces feedback overhead and improves the acquired CSI accuracy. To observe real benefits, we demonstrate the performance of the proposed approach using empirical data recorded at the Nokia campus in Stuttgart, Germany. Numerical results show the effectiveness of the proposed approach in reducing overhead, minimizing quantization errors, and increasing spectral efficiency, cosine similarity, and precoding gain compared to the traditional CSI feedback mechanism.
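The abstract lists cosine similarity among its metrics without defining it. One common definition for complex channel vectors is the magnitude of the normalized inner product between the true and the acquired CSI, sketched below. Whether the paper uses exactly this form is an assumption, and the function name `cosine_similarity` is illustrative.

```python
import numpy as np

def cosine_similarity(h_true, h_est):
    """Return |h_true^H h_est| / (||h_true|| * ||h_est||), a value in [0, 1].

    Assumed metric definition for illustration; the source does not give one.
    """
    h_true = np.asarray(h_true, dtype=np.complex128).ravel()
    h_est = np.asarray(h_est, dtype=np.complex128).ravel()
    denom = np.linalg.norm(h_true) * np.linalg.norm(h_est)
    if denom == 0.0:
        raise ValueError("cosine similarity is undefined for an all-zero vector")
    # np.vdot conjugates its first argument, giving the Hermitian inner product.
    return float(np.abs(np.vdot(h_true, h_est)) / denom)
```

Taking the magnitude makes the metric insensitive to a common phase rotation of the estimate, so it equals 1 whenever the two vectors point in the same direction up to a complex scalar.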
