M. Majid Butt

An Overview of Intelligent Meta-surfaces for 6G and Beyond: Opportunities, Trends, and Challenges

May 06, 2024

Abstract: With the impending arrival of sixth-generation (6G) wireless communication technology, the telecommunications landscape is poised for another revolutionary transformation. At the forefront of this evolution are intelligent meta-surfaces (IS), an emerging, disruptive physical-layer technology with the potential to redefine the capabilities and performance metrics of future wireless networks. As 6G evolves from concept to reality, industry stakeholders, standards organizations, and regulatory bodies are collaborating to define the specifications, protocols, and interoperability standards governing IS deployment. Against this background, this article examines the ongoing standardization efforts, emerging trends, potential opportunities, and prevailing challenges surrounding the integration of IS into 6G and beyond networks. Specifically, it provides a tutorial-style overview of recent advancements in IS and explores their potential applications in future networks beyond 6G. Additionally, the article identifies key challenges in the design and implementation of various types of intelligent surfaces, along with considerations for their practical standardization. Finally, it highlights potential future prospects in this evolving field.

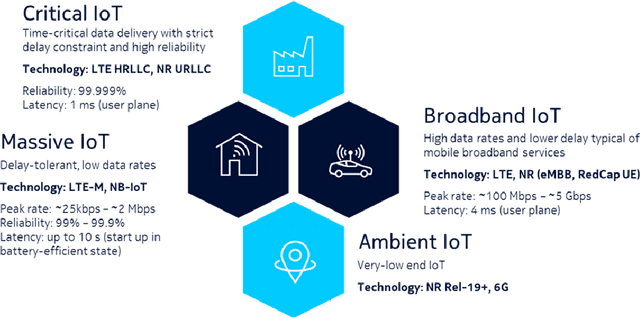

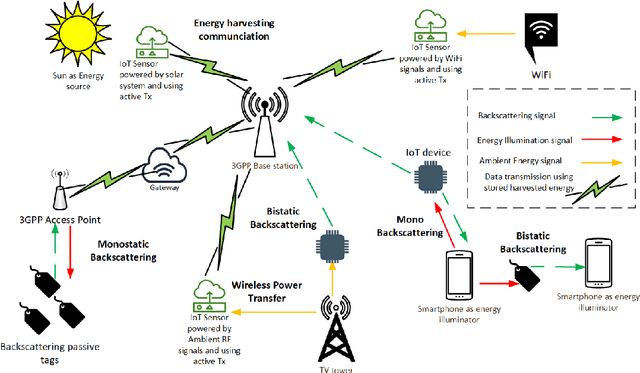

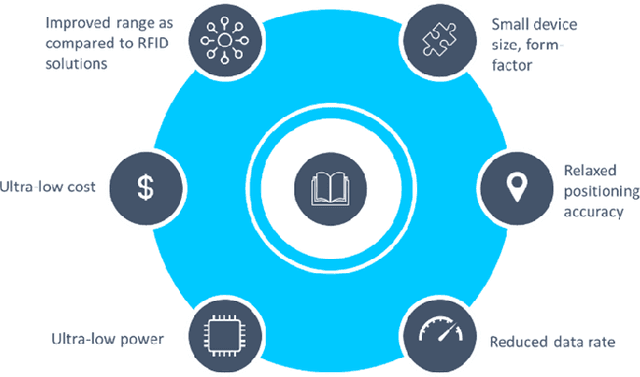

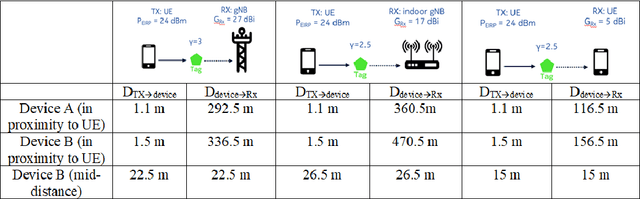

Ambient IoT: A missing link in 3GPP IoT Devices Landscape

Dec 11, 2023

Abstract: Ambient Internet of Things (IoT) refers to networks of devices that harvest energy from ambient sources to power their communication. After decades of research on the operation of such devices, the Third Generation Partnership Project (3GPP) has started discussing energy harvesting technology in cellular networks to support massive deployment of IoT devices at low operational cost. This article provides a timely update on 3GPP studies of ambient energy harvesting devices, including device types, use cases, key requirements, and related design challenges. Supported by a link budget analysis for backscattering energy harvesting devices, which are a key component of this study, we provide insight into system design and show how this technology will require a new system design approach compared with New Radio (NR) system design in 5G.
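The backscatter link budget mentioned above can be illustrated with a simple free-space, monostatic model. This is a minimal sketch under our own assumptions (free-space propagation on both hops, a single lumped backscatter loss term, and the hypothetical function and parameter names below); it does not reproduce the path-loss models, margins, or device sensitivities used in the 3GPP study. The point it shows is that the reflected signal traverses the path twice, so the power received at the reader falls with the fourth power of distance, while the power available for harvesting at the device falls only with the square.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def fspl_db(distance_m, freq_hz):
    """Free-space path loss 20*log10(4*pi*d/lambda), in dB (assumed propagation model)."""
    wavelength = SPEED_OF_LIGHT / freq_hz
    return 20.0 * math.log10(4.0 * math.pi * distance_m / wavelength)


def backscatter_link_budget(tx_dbm, g_reader_tx_dbi, g_reader_rx_dbi, g_tag_dbi,
                            backscatter_loss_db, distance_m, freq_hz):
    """Hypothetical monostatic link budget.

    Returns (power incident at the device, power received back at the reader), in dBm.
    The same one-way free-space loss is applied to the forward and backscatter hops.
    """
    one_way_loss = fspl_db(distance_m, freq_hz)
    # Forward hop: reader -> device (this is the power available for energy harvesting).
    p_tag = tx_dbm + g_reader_tx_dbi + g_tag_dbi - one_way_loss
    # Backscatter hop: device re-radiates with a lumped modulation/reflection loss.
    p_reader = p_tag + g_tag_dbi - backscatter_loss_db + g_reader_rx_dbi - one_way_loss
    return p_tag, p_reader
```

For example, `backscatter_link_budget(30.0, 6.0, 6.0, 2.0, 5.0, 10.0, 900e6)` returns the incident and reflected power at 10 m for illustrative values that are not taken from the study. Comparing the first value against a device's harvesting threshold and the second against the reader's receiver sensitivity indicates which hop limits the range.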

Artificial Intelligence for 6G Networks: Technology Advancement and Standardization

Apr 02, 2022

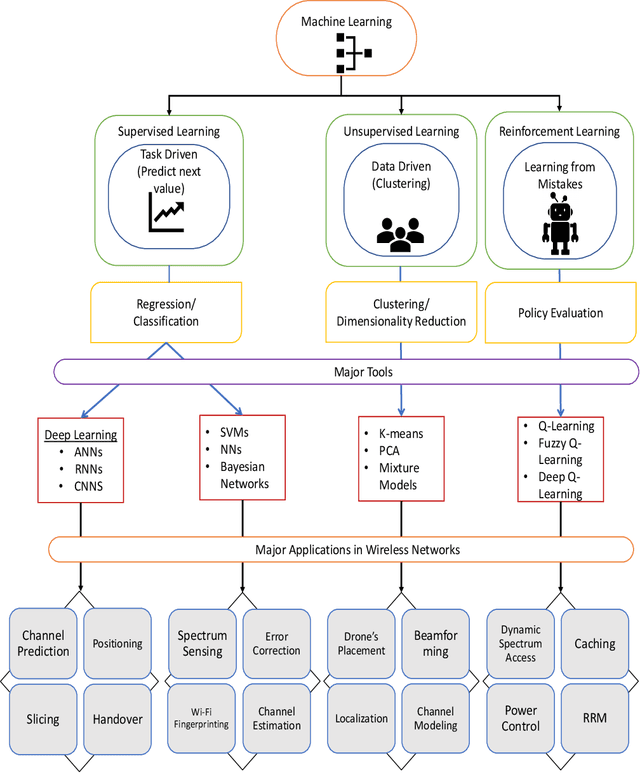

Abstract: With the deployment of 5G networks, standards organizations have started working on the design phase of sixth-generation (6G) networks. 6G networks will be immensely complex, requiring more deployment time, cost, and management effort. At the same time, mobile network operators demand that these networks be intelligent, self-organizing, and cost-effective to reduce operating expenses (OPEX). Machine learning (ML), a branch of artificial intelligence (AI), is the answer to many of these challenges, providing pragmatic solutions that can entirely change the future of wireless network technologies. Using case studies, we briefly examine the most compelling problems, particularly at the physical (PHY) and link layers of cellular networks, where ML can bring significant gains. We also review standardization activities related to the use of ML in wireless networks and the timeline for the readiness of standardization bodies to adapt to these changes. Finally, we highlight major issues in the use of ML in wireless technology and provide potential directions to mitigate some of them in 6G wireless networks.

ML-Assisted UE Positioning: Performance Analysis and 5G Architecture Enhancements

Aug 25, 2021

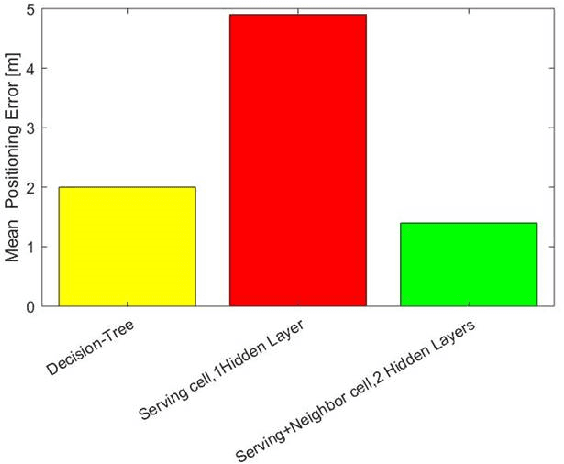

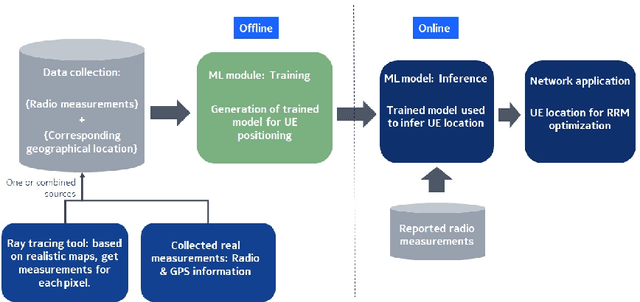

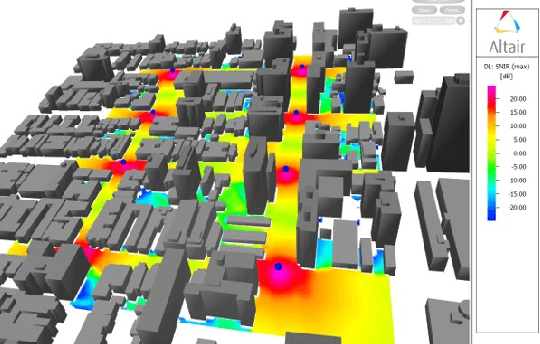

Abstract: Artificial intelligence and data-driven networks will be an integral part of 6G systems. In this article, we comprehensively discuss the implementation challenges and the architectural changes needed in 5G radio access networks to integrate machine learning (ML) solutions. As an example use case, we investigate user equipment (UE) positioning assisted by deep learning (DL) in 5G and beyond networks. Compared with state-of-the-art positioning algorithms used in today's networks, radio signal fingerprinting and ML-assisted positioning require less additional feedback overhead, and the positioning estimates are made directly inside the radio access network (RAN), thereby assisting in radio resource management. In this regard, we study ML-assisted positioning methods and evaluate their performance using system-level simulations for an outdoor scenario. The study uses a ray-tracing tool, a 3GPP 5G NR-compliant system-level simulator, and a DL framework to estimate the positioning accuracy of the UE. We evaluate and compare the performance of various DL models and show a mean positioning error in the range of 1 to 1.5 m for a DL architecture with two hidden layers and appropriate feature modeling. Building on our performance analysis, we discuss the pros and cons of various architectures for implementing ML solutions in future networks and draw conclusions on the most suitable architecture.
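The fingerprinting idea behind ML-assisted positioning, and the mean positioning error metric, can be sketched with a much simpler estimator. This sketch swaps in k-nearest-neighbour (kNN) fingerprint matching in place of the article's deep learning models, and it assumes hypothetical base-station coordinates, a log-distance path-loss model for the received power, and a regular grid of reference points; none of these come from the article's ray-tracing setup.

```python
import math

# Hypothetical base-station coordinates in metres (not from the article).
BASE_STATIONS = [(0.0, 0.0), (100.0, 0.0), (50.0, 100.0)]


def rsrp_dbm(ue, bs, tx_dbm=40.0, exponent=3.0):
    """Assumed log-distance path-loss model; distance clipped at 1 m."""
    d = max(math.dist(ue, bs), 1.0)
    return tx_dbm - 10.0 * exponent * math.log10(d)


def fingerprint(ue):
    """Radio fingerprint: one received-power value per base station."""
    return [rsrp_dbm(ue, bs) for bs in BASE_STATIONS]


def build_database(step=10.0, size=100.0):
    """Offline phase: record (fingerprint, position) pairs on a regular grid."""
    n = int(size / step) + 1
    return [(fingerprint((i * step, j * step)), (i * step, j * step))
            for i in range(n) for j in range(n)]


def knn_estimate(measured, db, k=3):
    """Online phase: average the positions of the k closest fingerprints."""
    nearest = sorted(db, key=lambda entry: math.dist(entry[0], measured))[:k]
    x = sum(pos[0] for _, pos in nearest) / k
    y = sum(pos[1] for _, pos in nearest) / k
    return (x, y)


def mean_positioning_error(test_points, db, k=3):
    """Mean Euclidean distance between estimated and true UE positions, in metres."""
    errors = [math.dist(knn_estimate(fingerprint(p), db, k), p) for p in test_points]
    return sum(errors) / len(errors)
```

Usage: `db = build_database()` followed by `mean_positioning_error([(15.0, 25.0), (42.0, 67.0)], db)`. A learned model such as the two-hidden-layer network reported in the article would replace `knn_estimate` with a regression from fingerprint features to coordinates; the evaluation metric stays the same.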

Deep Learning Assisted CSI Estimation for Joint URLLC and eMBB Resource Allocation

Mar 12, 2020

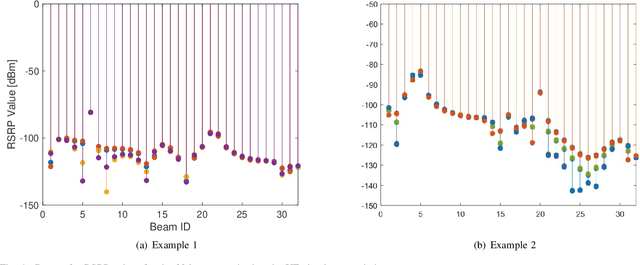

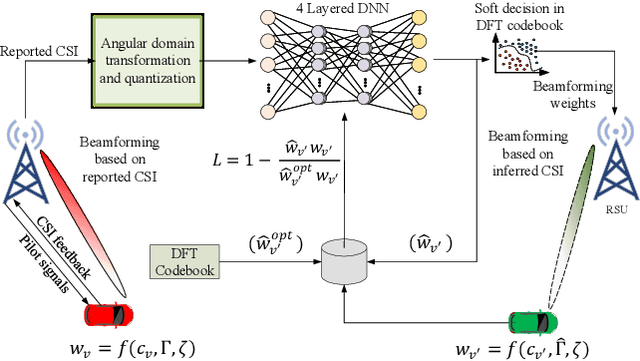

Abstract: Multiple-input multiple-output (MIMO) is a key technology for fifth-generation (5G) and beyond wireless communication systems owing to its higher spectral efficiency, spatial gains, and energy efficiency. The benefits of MIMO transmission can be fully harnessed only if channel state information (CSI) is available at the transmitter side. However, acquiring transmitter-side CSI entails many challenges. In this paper, we propose a deep learning assisted CSI estimation technique for highly mobile vehicular networks, based on the fact that the propagation environment (scatterers, reflectors) is almost identical, thereby allowing a data-driven deep neural network (DNN) to learn the non-linear CSI relations with negligible overhead. Moreover, we formulate and solve a dynamic network slicing based resource allocation problem for vehicular user equipments (VUEs) requesting enhanced mobile broadband (eMBB) and ultra-reliable low-latency communication (URLLC) traffic slices. The formulation minimizes the threshold rate violation probability for the eMBB slice while satisfying a probabilistic threshold rate criterion for the URLLC slice. Simulation results show that a 50% overhead reduction can be achieved at the cost of a 12% increase in threshold violations compared with an ideal case with perfect CSI knowledge.
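To make the threshold rate violation probability concrete, the sketch below computes P(R < R_th) for a single link whose rate is R = B log2(1 + SNR |h|^2). It assumes single-antenna Rayleigh fading (so |h|^2 is exponentially distributed with unit mean) and uses hypothetical function names; it is not the paper's slicing formulation and ignores DNN-estimated CSI. Under this assumption the probability has the closed form 1 - exp(-(2^(R_th/B) - 1) / SNR), which a Monte Carlo estimate should reproduce.

```python
import math
import random


def violation_probability_closed_form(rate_threshold_bps, bandwidth_hz, mean_snr_linear):
    """P(B*log2(1 + SNR*|h|^2) < R_th) under Rayleigh fading, |h|^2 ~ Exp(1)."""
    snr_threshold = 2.0 ** (rate_threshold_bps / bandwidth_hz) - 1.0
    return 1.0 - math.exp(-snr_threshold / mean_snr_linear)


def violation_probability_monte_carlo(rate_threshold_bps, bandwidth_hz, mean_snr_linear,
                                      trials=100_000, seed=0):
    """Empirical fraction of fading realisations whose rate falls below R_th."""
    rng = random.Random(seed)
    violations = 0
    for _ in range(trials):
        gain = rng.expovariate(1.0)  # |h|^2 for a unit-power Rayleigh channel
        rate = bandwidth_hz * math.log2(1.0 + mean_snr_linear * gain)
        if rate < rate_threshold_bps:
            violations += 1
    return violations / trials
```

The Monte Carlo version is the more general of the two: replacing the exponential channel gain with gains computed from imperfect (for example, DNN-predicted) CSI would show how estimation error inflates the violation probability, which is the trade-off the abstract quantifies.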