Luca Rose

Massive MIMO CSI Feedback using Channel Prediction: How to Avoid Machine Learning at UE?

Mar 20, 2024

Abstract: In the literature, machine learning (ML) has been implemented at both the base station (BS) and the user equipment (UE) to improve the precision of downlink channel state information (CSI). However, implementing ML at the UE can be infeasible for various reasons, such as UE power consumption. Motivated by this issue, we propose a CSI learning mechanism at the BS, called CSILaBS, to avoid ML at the UE. To this end, by exploiting a channel predictor (CP) at the BS, a lightweight predictor function (PF) is considered for feedback evaluation at the UE. CSILaBS reduces over-the-air feedback overhead, improves CSI quality, and lowers the computation cost of the UE. Besides, in a multiuser environment, we propose various mechanisms to select the feedback by exploiting the PF while aiming to improve CSI accuracy. We also address various ML-based CPs, such as NeuralProphet (NP), an ML-inspired statistical algorithm. Furthermore, motivated by using a statistical model and ML together, we propose a novel hybrid framework composed of a recurrent neural network and NP, which yields better prediction accuracy than the individual models. The performance of CSILaBS is evaluated on an empirical dataset recorded at Nokia Bell-Labs. The outcomes show that eliminating ML at the UE can retain performance gains, for example, in precoding quality.

* 14 pages, 11 figures
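The abstract does not spell out the multiuser feedback-selection mechanisms. As a hedged illustration only, the sketch below assumes that each UE's lightweight PF reproduces the prediction the BS already holds, that the UE scores that prediction by its normalized squared error, and that the BS grants a limited number of feedback slots to the UEs with the largest errors above a threshold. The names `nmse`, `select_feedback`, `threshold`, and `max_slots` are hypothetical and are not taken from CSILaBS.

```python
def nmse(estimate: list[complex], prediction: list[complex]) -> float:
    """Normalized squared error of the shared prediction w.r.t. the UE's CSI estimate."""
    err = sum(abs(e - p) ** 2 for e, p in zip(estimate, prediction))
    power = sum(abs(e) ** 2 for e in estimate)
    return err / power if power > 0 else 0.0


def select_feedback(errors: dict[str, float], threshold: float, max_slots: int) -> list[str]:
    """Hypothetical rule: UEs whose prediction error exceeds `threshold` are
    candidates; the BS grants at most `max_slots` of them, largest error first."""
    candidates = [ue for ue, e in errors.items() if e > threshold]
    candidates.sort(key=lambda ue: errors[ue], reverse=True)
    return candidates[:max_slots]


# Example: ue1 is predicted perfectly, ue3 badly.
errors = {
    "ue1": nmse([1 + 1j, 1 - 1j], [1 + 1j, 1 - 1j]),  # 0.0
    "ue2": nmse([1, 1], [0.5, 1]),                    # 0.25 / 2 = 0.125
    "ue3": nmse([1, 0], [0, 1]),                      # 2 / 1 = 2.0
}
print(select_feedback(errors, threshold=0.1, max_slots=1))  # ['ue3']
```

Ranking by error spends the scarce feedback slots where the shared prediction is worst; UEs below the threshold send nothing, which is where the overhead saving would come from under these assumptions.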

Design of an Efficient CSI Feedback Mechanism in Massive MIMO Systems: A Machine Learning Approach using Empirical Data

Aug 25, 2022

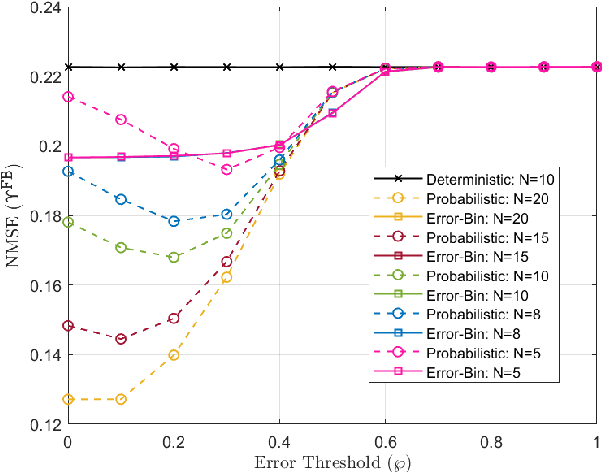

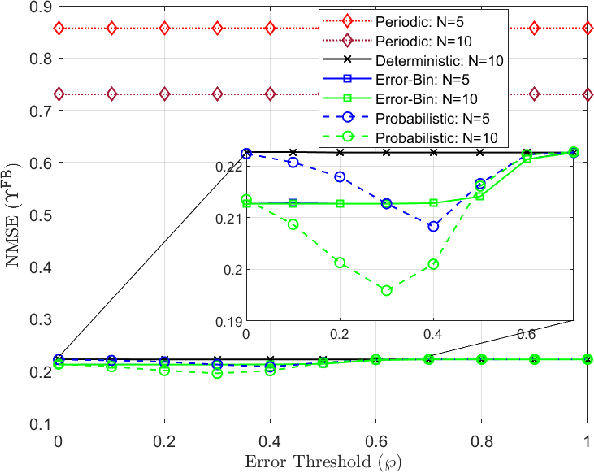

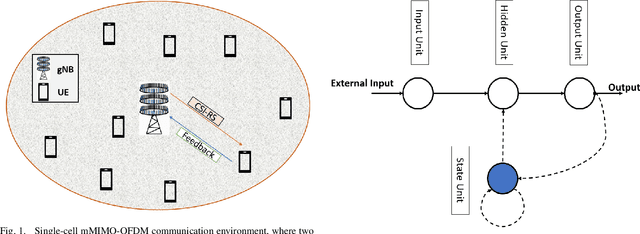

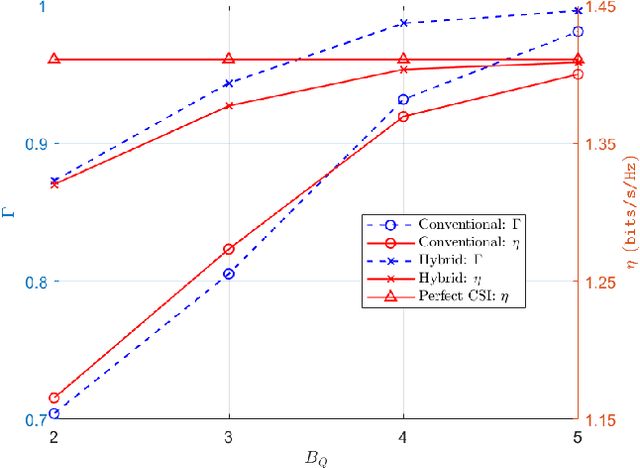

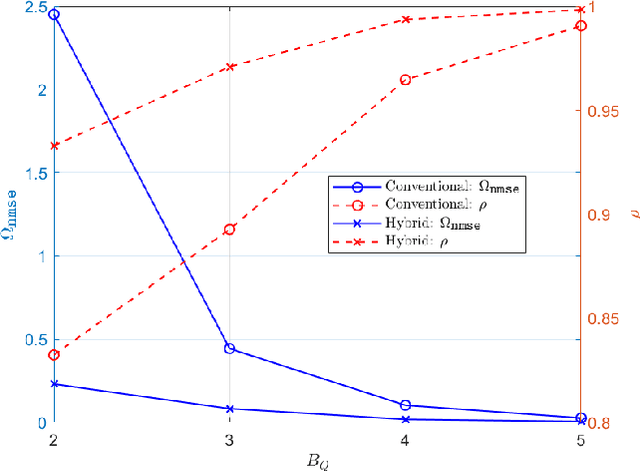

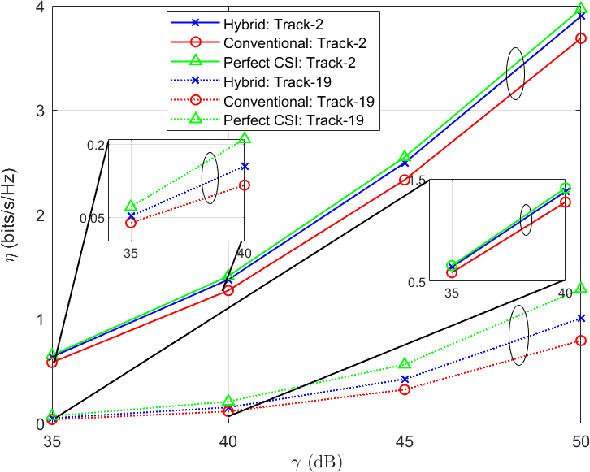

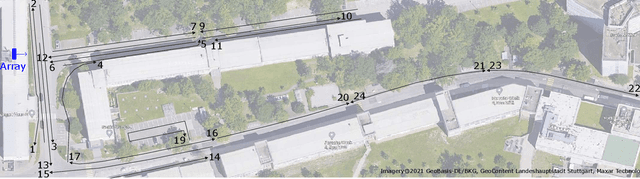

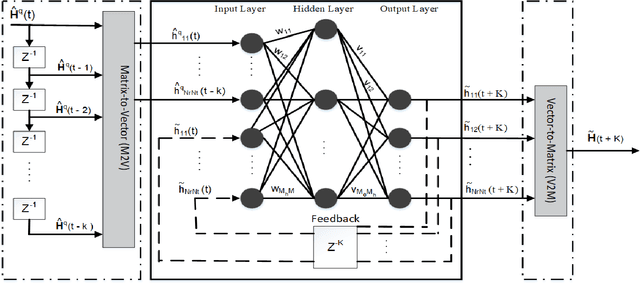

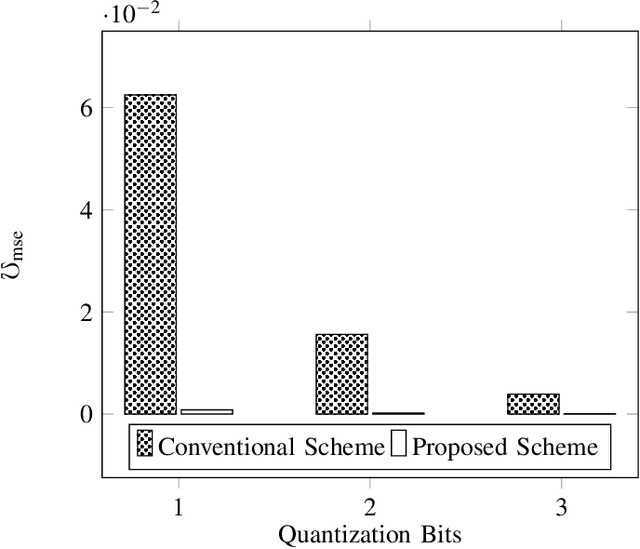

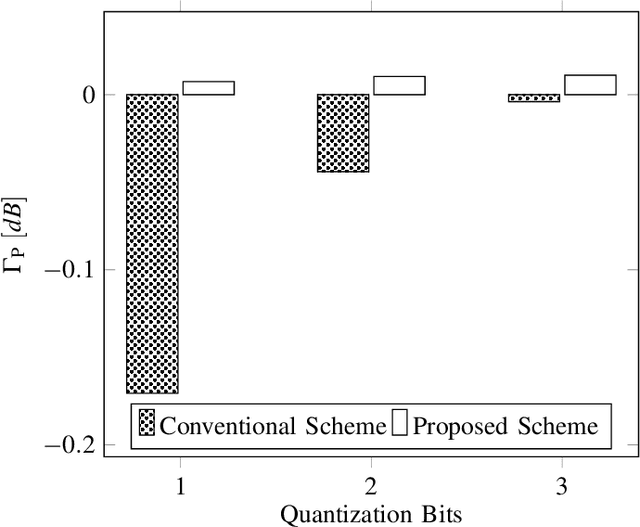

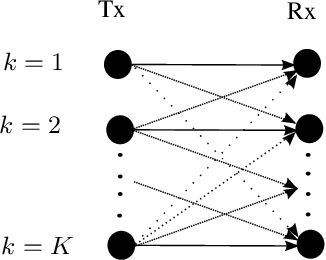

Abstract: The massive multiple-input multiple-output (mMIMO) regime reaps the benefits of spatial diversity and multiplexing gains, provided that channel state information (CSI) is acquired precisely. In the current communication architecture, the downlink CSI is estimated by the user equipment (UE) via dedicated pilots and then fed back to the gNodeB (gNB). The feedback information is compressed to reduce over-the-air overhead. This compression increases the inaccuracy of the acquired CSI, thus degrading the overall spectral efficiency. This paper proposes a computationally inexpensive machine learning (ML)-based CSI feedback algorithm that exploits twin channel predictors. The proposed approach can work for both time-division duplex (TDD) and frequency-division duplex (FDD) systems, and it reduces feedback overhead and improves the accuracy of the acquired CSI. To demonstrate its practical benefits, we evaluate the proposed approach on empirical data recorded at the Nokia campus in Stuttgart, Germany. Numerical results show the effectiveness of the proposed approach in reducing overhead, minimizing quantization errors, and increasing spectral efficiency, cosine similarity, and precoding gain compared to the traditional CSI feedback mechanism.
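One way to see why twin predictors can reduce quantization error: if the UE and the gNB run identical predictors, the UE only needs to report the residual between its estimate and the shared prediction, and a residual with a smaller dynamic range is quantized more finely for the same bit budget. The sketch below is an illustration under assumptions, not the paper's algorithm: it uses a per-report uniform quantizer whose range is set by the largest component (that scale would itself have to be signalled) and measures accuracy with the cosine similarity |h^H ĥ| / (||h|| ||ĥ||). All function names are hypothetical.

```python
import math


def quantize(values: list[complex], bits: int) -> tuple[list[complex], float]:
    """Uniformly quantize real and imaginary parts with 2**bits levels on [-a, a],
    where a is the largest component magnitude (assumed sent as side information).
    Returns the reconstructed values and the step size."""
    a = max(max(abs(v.real), abs(v.imag)) for v in values)
    if a == 0.0:
        return list(values), 0.0
    levels = 2 ** bits
    step = 2 * a / levels

    def q(x: float) -> float:
        idx = min(max(math.floor((x + a) / step), 0), levels - 1)
        return -a + (idx + 0.5) * step  # midpoint of the selected cell

    return [complex(q(v.real), q(v.imag)) for v in values], step


def twin_feedback(estimate: list[complex], prediction: list[complex], bits: int) -> list[complex]:
    """Quantize only the residual w.r.t. the prediction both ends share,
    then rebuild the CSI at the gNB as prediction + quantized residual."""
    residual = [e - p for e, p in zip(estimate, prediction)]
    q_residual, _ = quantize(residual, bits)
    return [p + r for p, r in zip(prediction, q_residual)]


def cosine_similarity(h: list[complex], g: list[complex]) -> float:
    """|h^H g| / (||h|| ||g||) for complex vectors."""
    inner = sum(x.conjugate() * y for x, y in zip(h, g))
    norm_h = math.sqrt(sum(abs(x) ** 2 for x in h))
    norm_g = math.sqrt(sum(abs(y) ** 2 for y in g))
    return abs(inner) / (norm_h * norm_g)


h = [1 + 1j, -1 + 0.5j, 0.5 - 1j, -0.5 - 0.5j]  # "true" CSI (illustrative)
offsets = [0.1, -0.05 + 0.1j, -0.1j, 0.05 + 0.05j]  # assumed prediction errors
prediction = [x - d for x, d in zip(h, offsets)]
full, _ = quantize(h, bits=2)                # conventional: quantize the CSI itself
twin = twin_feedback(h, prediction, bits=2)  # twin predictors: quantize the residual
print(cosine_similarity(h, full), cosine_similarity(h, twin))
```

With the same two bits per component, the residual's range is about ten times smaller here, so its quantization step (and worst-case error) shrinks by the same factor.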

Real-Time Massive MIMO Channel Prediction: A Combination of Deep Learning and NeuralProphet

Aug 11, 2022

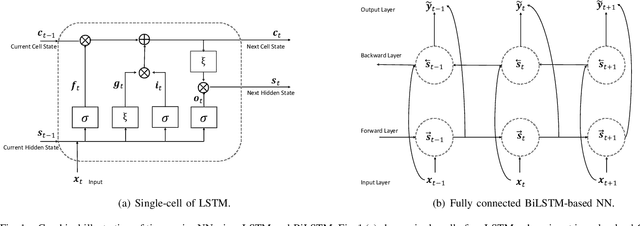

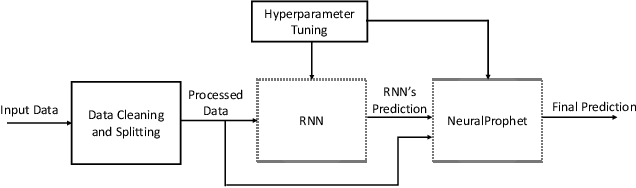

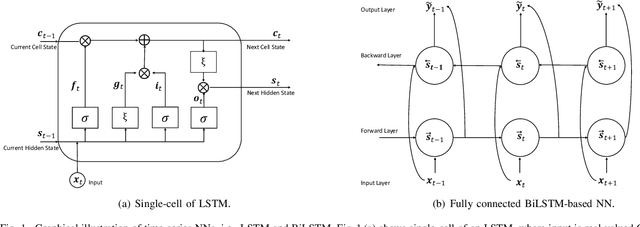

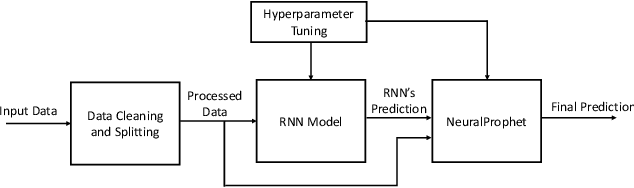

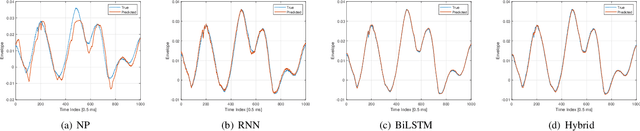

Abstract: Channel state information (CSI) is of pivotal importance, as it enables wireless systems to adapt transmission parameters more accurately, thus improving the system's overall performance. However, acquiring accurate CSI becomes challenging in a highly dynamic environment, mainly due to multipath fading. Inaccurate CSI can deteriorate performance, particularly that of a massive multiple-input multiple-output (mMIMO) system. This paper adapts machine learning (ML) for CSI prediction. Specifically, we exploit deep learning (DL) time-series models such as the recurrent neural network (RNN) and bidirectional long short-term memory (BiLSTM). Further, we use NeuralProphet (NP), a recently introduced time-series model composed of statistical components, e.g., auto-regression (AR) and Fourier terms, for CSI prediction. Inspired by statistical models, we also develop a novel hybrid framework comprising RNN and NP to achieve better prediction accuracy. The performance of the proposed channel predictors (CPs) is evaluated on a real-time dataset recorded at the Nokia Bell-Labs campus in Stuttgart, Germany. Numerical results show that DL brings performance gains when used with statistical models and exhibits robustness.
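NeuralProphet's auto-regression component forecasts the next sample from a window of past samples. As a simplified stand-in (ordinary least-squares AR fitting, not NeuralProphet's own implementation or API), the sketch below fits an AR(p) model to a real-valued CSI time series, for example the real part of one channel coefficient, and forecasts one step ahead. The function names and the synthetic series are hypothetical.

```python
def solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a small dense system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_ar(series: list[float], order: int) -> list[float]:
    """Least-squares AR(order) coefficients a with x[t] ~ sum_k a[k] * x[t-1-k]."""
    rows = [[series[t - 1 - k] for k in range(order)] for t in range(order, len(series))]
    targets = series[order:]
    # Normal equations (X^T X) a = X^T y.
    xtx = [[sum(row[i] * row[j] for row in rows) for j in range(order)] for i in range(order)]
    xty = [sum(row[i] * y for row, y in zip(rows, targets)) for i in range(order)]
    return solve(xtx, xty)


def predict_next(series: list[float], coeffs: list[float]) -> float:
    """One-step-ahead forecast from the most recent len(coeffs) samples."""
    return sum(a * series[-1 - k] for k, a in enumerate(coeffs))


# Synthetic stand-in for one CSI component: x[t] = 1.6 x[t-1] - 0.8 x[t-2].
x = [1.0, 0.5]
for _ in range(40):
    x.append(1.6 * x[-1] - 0.8 * x[-2])
coeffs = fit_ar(x, order=2)
print(coeffs, predict_next(x, coeffs))
```

On this noiseless series the fit recovers the generating coefficients; on measured CSI the residual of such a linear model is the part a hybrid RNN component could try to capture.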

Artificial Intelligence for 6G Networks: Technology Advancement and Standardization

Apr 02, 2022

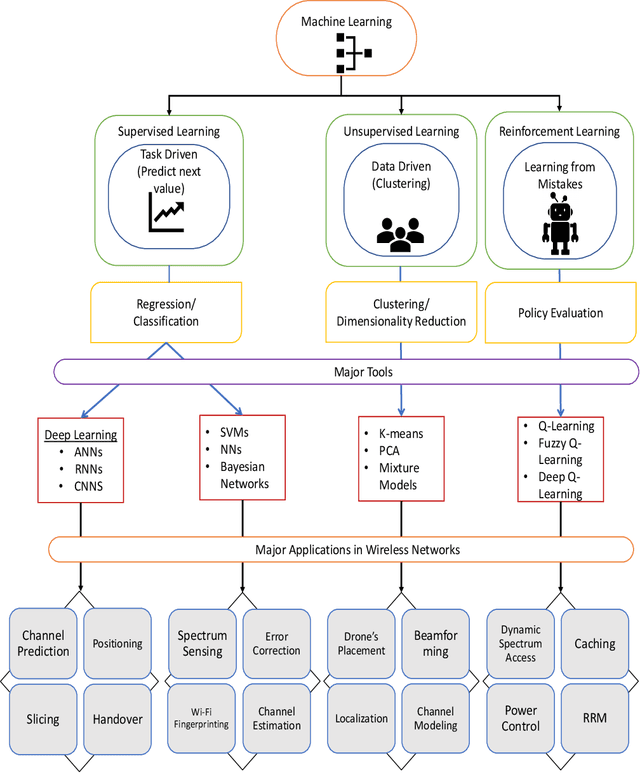

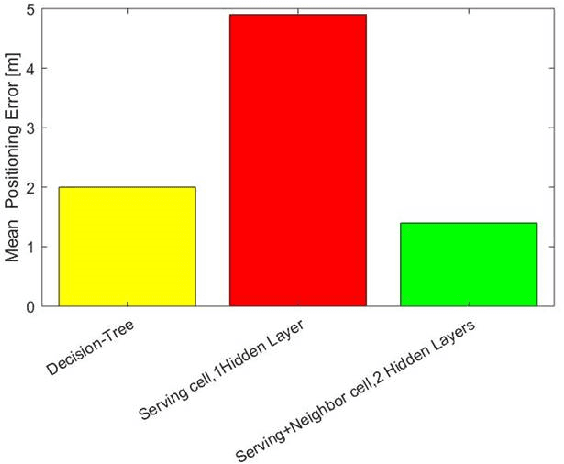

Abstract: With the deployment of 5G networks, standards organizations have started working on the design phase for sixth-generation (6G) networks. 6G networks will be immensely complex, requiring more deployment time, cost, and management effort. On the other hand, mobile network operators demand that these networks be intelligent, self-organizing, and cost-effective to reduce operating expenses (OPEX). Machine learning (ML), a branch of artificial intelligence (AI), answers many of these challenges by providing pragmatic solutions that can entirely change the future of wireless network technologies. Using case-study examples, we briefly examine the most compelling problems, particularly at the physical (PHY) and link layers of cellular networks, where ML can bring significant gains. We also review standardization activities related to the use of ML in wireless networks and the future timeline for the readiness of standardization bodies to adapt to these changes. Finally, we highlight major issues in the use of ML in wireless technology and provide potential directions to mitigate some of them in 6G wireless networks.

* 6

A Novel Algorithm to Report CSI in MIMO-Based Wireless Networks

Apr 01, 2021

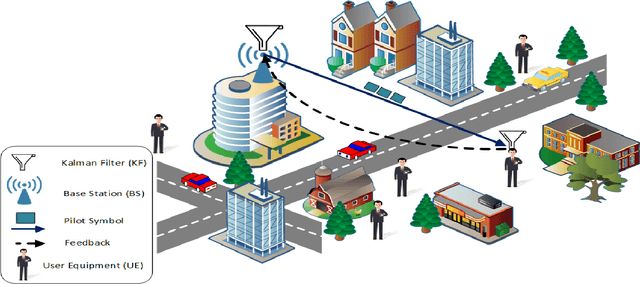

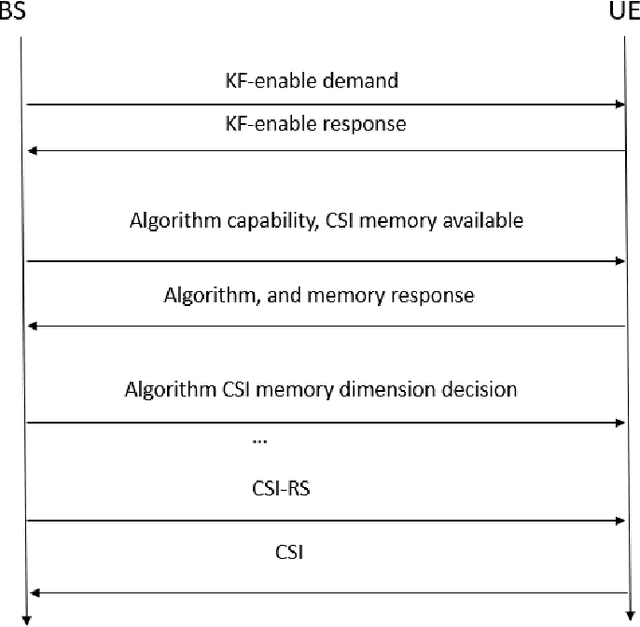

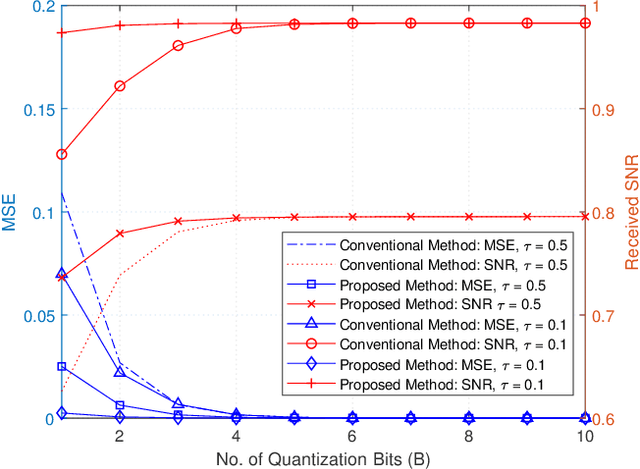

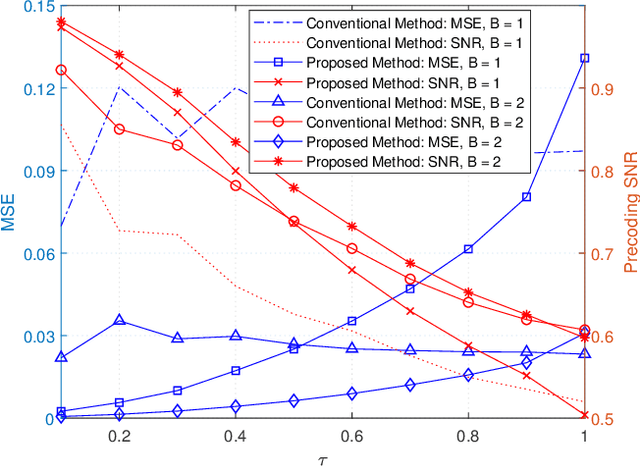

Abstract: In wireless communication, accurate channel state information (CSI) is of pivotal importance. In practice, due to processing and feedback delays, estimated CSI can be outdated, which can severely deteriorate the performance of the communication system. Moreover, before the estimated CSI is fed back, it is strongly compressed at the user equipment (UE) to reduce the over-the-air (OTA) overhead. Such compression strongly reduces the precision of the estimated CSI, which ultimately impacts the performance of multiple-input multiple-output (MIMO) precoding. Motivated by these issues, we present a novel, scalable approach to reporting CSI in wireless networks that is applicable to both time-division duplex (TDD) and frequency-division duplex (FDD) systems. In particular, the novel approach introduces a channel predictor function, e.g., a Kalman filter (KF), at both ends of the communication system to predict CSI. Simulation-based results demonstrate that the novel approach reduces not only the channel mean-squared error (MSE) but also the OTA overhead of feeding back the estimated CSI when the mobile radio channel varies strongly. Besides, in an immobile radio channel, feedback can be eliminated, which further reduces the OTA overhead. Additionally, the proposed method provides a significant signal-to-noise ratio (SNR) gain in both channel conditions, i.e., highly mobile and immobile.
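A minimal sketch of the predictor-at-both-ends idea, assuming a scalar, real-valued channel coefficient that follows a first-order Gauss-Markov model h[t] = alpha*h[t-1] + w[t], observed at the UE as y[t] = h[t] + v[t]. The UE and the BS run identical Kalman filters; the UE reports y[t] only when it deviates from the shared prediction by more than a threshold, and both filters then apply the same update, so they stay in lockstep without the BS seeing the skipped samples. The class, the threshold rule, and the parameter values are illustrative assumptions, not the paper's exact scheme.

```python
class ScalarKalman:
    """Kalman filter for h[t] = alpha*h[t-1] + w (var q), y[t] = h[t] + v (var r)."""

    def __init__(self, alpha: float, q: float, r: float, h0: float = 0.0, p0: float = 1.0):
        self.alpha, self.q, self.r = alpha, q, r
        self.h, self.p = h0, p0  # state estimate and its error variance

    def predict(self) -> float:
        self.h = self.alpha * self.h
        self.p = self.alpha ** 2 * self.p + self.q
        return self.h

    def update(self, y: float) -> float:
        k = self.p / (self.p + self.r)  # Kalman gain
        self.h += k * (y - self.h)
        self.p *= 1 - k
        return self.h


def run_twin(observations, threshold, alpha=1.0, q=0.01, r=0.001):
    """Return the time indices at which the UE fed back, plus both filters."""
    ue = ScalarKalman(alpha, q, r)
    bs = ScalarKalman(alpha, q, r)
    reports = []
    for t, y in enumerate(observations):
        predicted = ue.predict()
        bs.predict()  # the BS forms the same prediction without any feedback
        if abs(y - predicted) > threshold:
            reports.append(t)  # feedback sent over the air
            ue.update(y)
            bs.update(y)
        # otherwise nothing is sent and both ends keep the prediction
    return reports, ue, bs


# A channel that is static, then jumps: feedback is needed only at the jump.
reports, ue, bs = run_twin([1.0] * 10 + [2.0] * 10, threshold=0.05)
print(reports)  # [0, 10]
```

In the static stretches no report is sent at all, which mirrors the abstract's observation that feedback can be eliminated in an immobile channel.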

Dealing with CSI Compression to Reduce Losses and Overhead: An Artificial Intelligence Approach

Apr 01, 2021

Abstract: Motivated by the issue of inaccurate channel state information (CSI) at the base station (BS), which is commonly caused by feedback/processing delays and compression, in this paper we introduce a scalable idea of adopting artificial intelligence (AI)-aided CSI acquisition. The proposed scheme enhances the CSI compression performed at the mobile terminal (MT) and enables accurate recovery of the estimated CSI at the BS. Simulation-based results corroborate the validity of the proposed scheme. Numerically, nearly 100% recovery of the estimated CSI is observed with relatively lower overhead than the benchmark scheme. The proposed idea can bring potential benefits in wireless communication environments, e.g., ultra-reliable low-latency communication (URLLC), where imperfect CSI and overhead are intolerable.

Distributed Power Allocation with SINR Constraints Using Trial and Error Learning

Feb 28, 2012

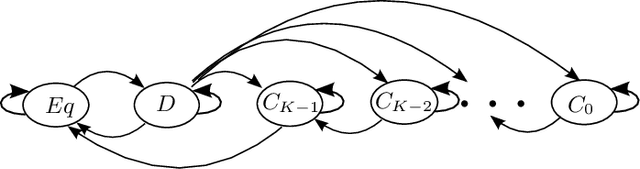

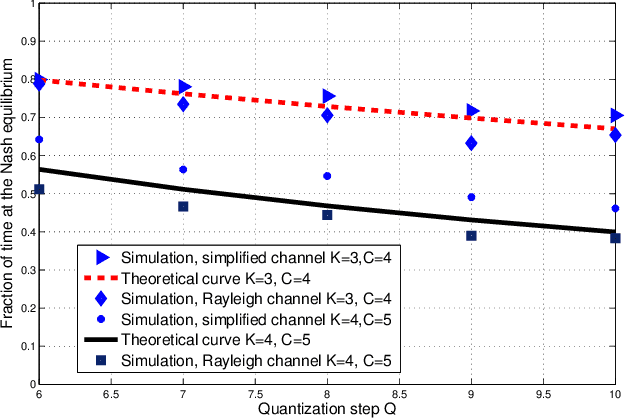

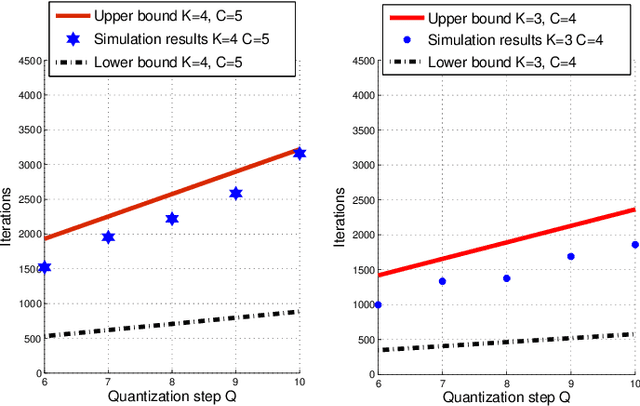

Abstract: In this paper, we address the problem of global transmit power minimization in a self-configuring network where radio devices are required to operate at a minimum signal-to-interference-plus-noise ratio (SINR) level. We model the network as a parallel Gaussian interference channel and introduce a fully decentralized algorithm (based on trial and error) that is able to statistically achieve a configuration where the performance demands are met. Contrary to existing solutions, our algorithm requires only local information and can learn stable and efficient working points using only one-bit feedback. We model the network under two different game-theoretic frameworks: normal form and satisfaction form. We show that the convergence points correspond to equilibrium points, namely the Nash and satisfaction equilibria. Similarly, we provide sufficient conditions for the algorithm to converge in both formulations. Moreover, we provide analytical results to estimate the algorithm's performance as a function of the network parameters. Finally, numerical results are provided to validate our theoretical conclusions. Keywords: Learning, power control, trial and error, Nash equilibrium, spectrum sharing.
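The sketch below is a much-simplified, satisfaction-seeking power-control loop, not the paper's trial-and-error algorithm (which, among other things, minimizes transmit power and operates over parallel channels). It only illustrates the information structure: each receiver returns one bit saying whether its SINR target is met, satisfied transmitters keep their power level, and unsatisfied ones try a random level from a discrete set. A state in which every link is satisfied is absorbing, i.e., a satisfaction equilibrium. The single-channel gain model, the power levels, and all names are assumptions.

```python
import random


def sinr(i: int, powers: list[float], gains: list[list[float]], noise: float) -> float:
    """SINR of link i; gains[i][j] is the gain from transmitter j to receiver i."""
    interference = sum(gains[i][j] * p for j, p in enumerate(powers) if j != i)
    return gains[i][i] * powers[i] / (noise + interference)


def satisfaction_power_control(gains, noise, target, levels, max_iters=1000, seed=0):
    """Return (powers, iterations) once every link meets `target`, or (None, max_iters)."""
    rng = random.Random(seed)
    n = len(gains)
    powers = [rng.choice(levels) for _ in range(n)]
    for it in range(max_iters):
        # One bit per link: is the SINR target met?
        satisfied = [sinr(i, powers, gains, noise) >= target for i in range(n)]
        if all(satisfied):
            return powers, it
        # Satisfied transmitters keep their level; unsatisfied ones try a random level.
        powers = [p if ok else rng.choice(levels) for p, ok in zip(powers, satisfied)]
    return None, max_iters


gains = [[1.0, 0.1], [0.1, 1.0]]
powers, iterations = satisfaction_power_control(gains, noise=0.1, target=2.0, levels=[0.1, 0.5, 1.0])
print(powers, iterations)
```

If the SINR targets are infeasible for every combination of levels, the loop never reaches an all-satisfied state and returns `None` after `max_iters` rounds.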

Learning Equilibria with Partial Information in Decentralized Wireless Networks

Jun 14, 2011

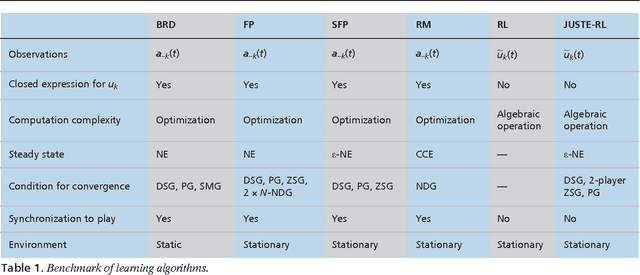

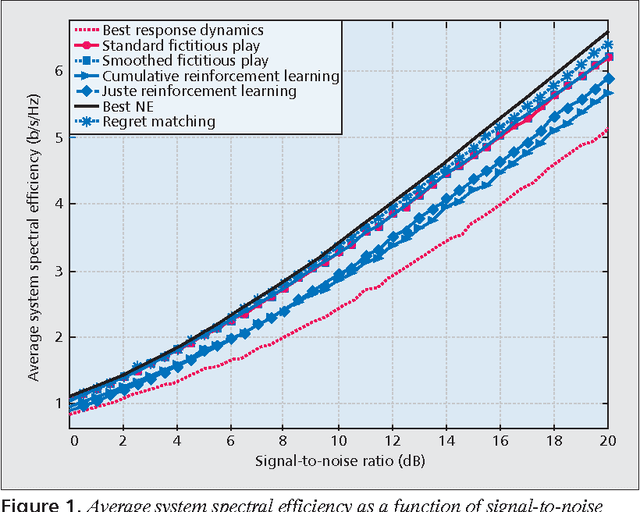

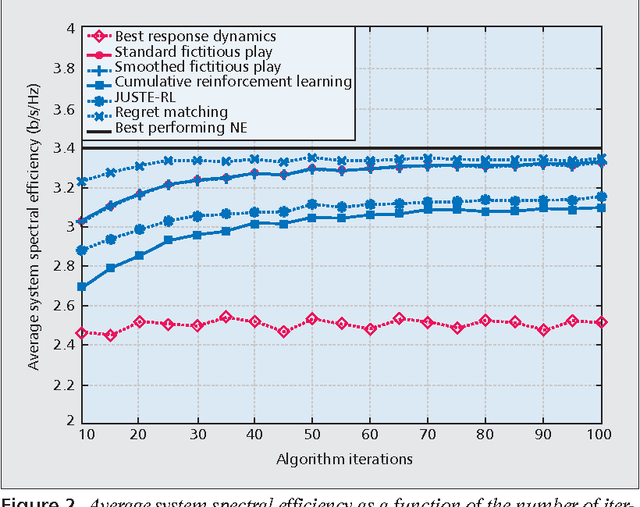

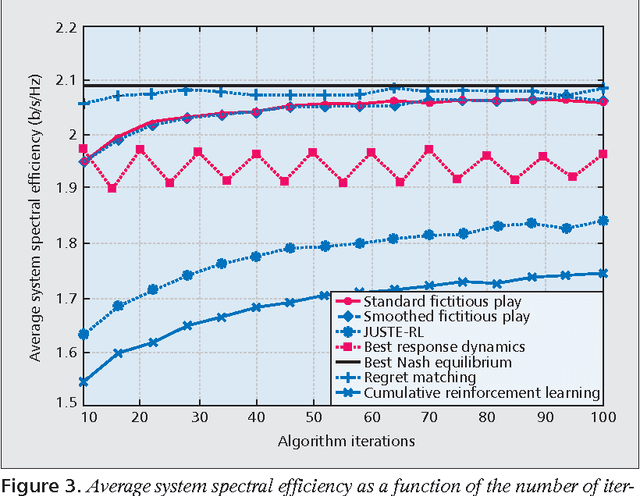

Abstract: In this article, a survey of several important equilibrium concepts for decentralized networks is presented. The term decentralized is used here to refer to scenarios where decisions (e.g., choosing a power allocation policy) are taken autonomously by devices interacting with each other (e.g., through mutual interference). The iterative long-term interaction is characterized by stable points of the wireless network called equilibria. The interest in these equilibria stems from the relevance of network stability and the fact that they can be achieved by letting radio devices repeatedly interact over time. To achieve these equilibria, several learning techniques, namely best response dynamics, fictitious play, smoothed fictitious play, reinforcement learning algorithms, and regret matching, are discussed in terms of information requirements and convergence properties. Most of the notions introduced here, for both equilibria and learning schemes, are illustrated by a simple case study, namely an interference channel with two transmitter-receiver pairs.
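For the two-pair interference channel case study, best response dynamics can be sketched as follows, assuming (the abstract does not say this) that each pair splits a total power budget over two orthogonal channels and maximizes its sum rate while treating interference as noise. Its best response to the other pair's allocation is then water-filling over the effective noise levels (noise + interference) / gain, and the players take turns best-responding; a fixed point of this loop is a Nash equilibrium. The gains and function names are illustrative.

```python
def water_fill(noise_levels: list[float], total_power: float) -> list[float]:
    """Powers p_k = max(0, mu - n_k) with sum(p_k) == total_power."""
    order = sorted(range(len(noise_levels)), key=lambda k: noise_levels[k])
    powers = [0.0] * len(noise_levels)
    for m in range(len(order), 0, -1):  # try using the m least-noisy channels
        active = order[:m]
        mu = (total_power + sum(noise_levels[k] for k in active)) / m
        if mu > noise_levels[order[m - 1]]:  # every active channel gets positive power
            for k in active:
                powers[k] = mu - noise_levels[k]
            return powers
    return powers


def best_response_dynamics(direct, cross, noise, total_power, rounds=50):
    """direct[i][k]: gain of pair i on channel k; cross[i][k]: gain from the other
    transmitter to receiver i on channel k. Players best-respond in turn."""
    n_ch = len(direct[0])
    powers = [[total_power / n_ch] * n_ch for _ in range(2)]
    for _ in range(rounds):
        for i in (0, 1):
            other = powers[1 - i]
            eff_noise = [(noise + cross[i][k] * other[k]) / direct[i][k] for k in range(n_ch)]
            powers[i] = water_fill(eff_noise, total_power)
    return powers


powers = best_response_dynamics(
    direct=[[1.0, 0.5], [0.5, 1.0]],  # each pair has a preferred channel
    cross=[[0.2, 0.2], [0.2, 0.2]],   # weak mutual interference
    noise=0.1,
    total_power=1.0,
)
print(powers)
```

With weak cross gains the updates contract quickly, and each pair ends up putting most of its power (9/14 of the budget for these numbers) on its preferred channel.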
