Nader Mokari

Senior Member, IEEE

AI-enabled Priority and Auction-Based Spectrum Management for 6G

Jan 12, 2024

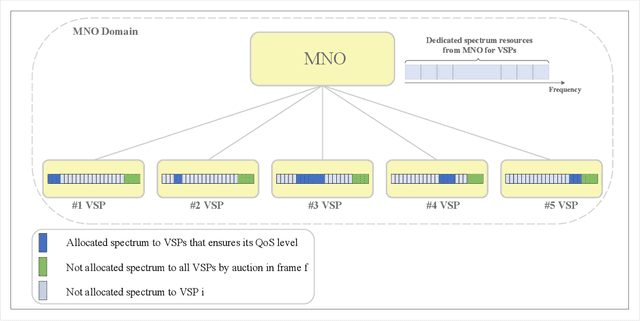

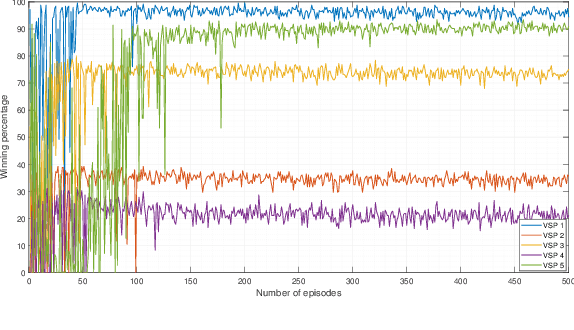

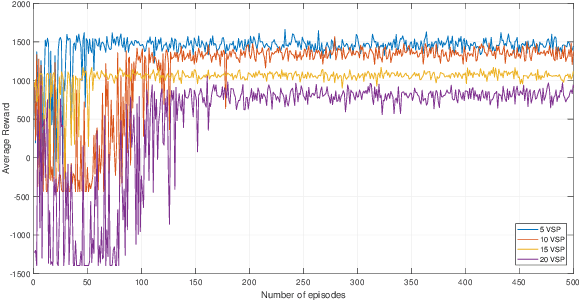

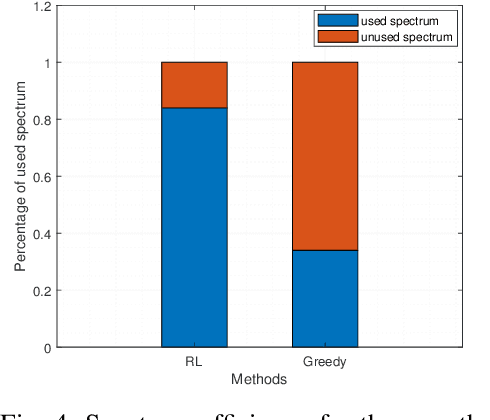

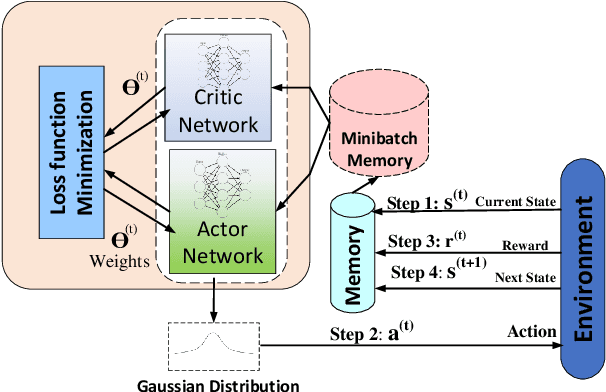

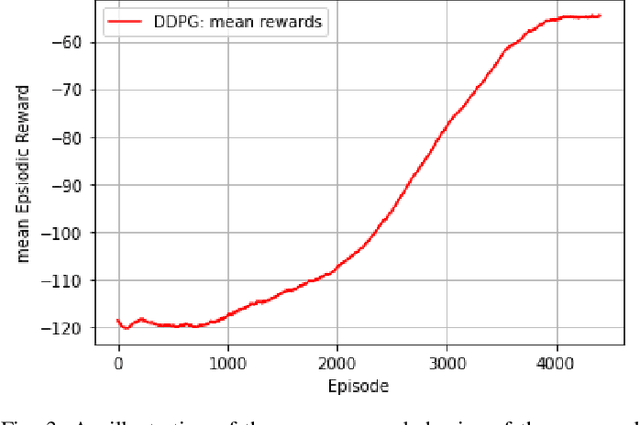

Abstract: In this paper, we present a quality of service (QoS)-aware, priority-based spectrum management scheme to guarantee the minimum required bit rate of vertical sector players (VSPs) in 5G and beyond, including the sixth generation (6G). VSPs are treated as spectrum leasers, and the overall spectrum efficiency of the network is optimized from the perspective of the mobile network operator (MNO), which acts as the spectrum licensee and auctioneer. We exploit a modified Vickrey-Clarke-Groves (VCG) auction mechanism to allocate the spectrum to the VSPs, where the QoS and the truthfulness of bidders are the two parameters used to prioritize VSPs. The simulation is carried out using deep deterministic policy gradient (DDPG), a deep reinforcement learning (DRL)-based algorithm. Simulation results demonstrate that deploying the DDPG algorithm yields significant advantages. In particular, the efficiency of the proposed spectrum management scheme is about 85%, compared to 35% for traditional auction methods.
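
As a point of reference for the auction mechanism named in the abstract above, the following is a minimal sketch of a standard (unmodified) VCG auction for identical channels under unit demand, where every winner pays the highest losing bid. It is not the paper's modified mechanism: the QoS-based prioritization and the DDPG component are omitted, and the function name, bidder names, and bid values are hypothetical.

```python
def multi_unit_vickrey(bids, num_channels):
    """Standard VCG outcome for identical channels with unit demand.

    bids: dict mapping a bidder name to its bid (hypothetical inputs).
    Returns a dict mapping each winning bidder to the price it pays.
    """
    # Rank bidders from highest to lowest bid.
    ranked = sorted(bids.items(), key=lambda item: item[1], reverse=True)
    winners = ranked[:num_channels]

    # With unit demand, each winner's VCG payment is the highest losing bid,
    # i.e. the externality it imposes on the first excluded bidder.
    if len(ranked) > num_channels:
        price = ranked[num_channels][1]
    else:
        price = 0.0  # nobody is excluded, so no externality

    return {name: price for name, _ in winners}


# Hypothetical example: three VSPs compete for two channels.
payments = multi_unit_vickrey({"vsp_a": 10.0, "vsp_b": 7.0, "vsp_c": 4.0}, 2)
```

Because a winner's payment does not depend on its own bid, bidding the true valuation is a dominant strategy in this standard form, which is the truthfulness property the abstract refers to.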

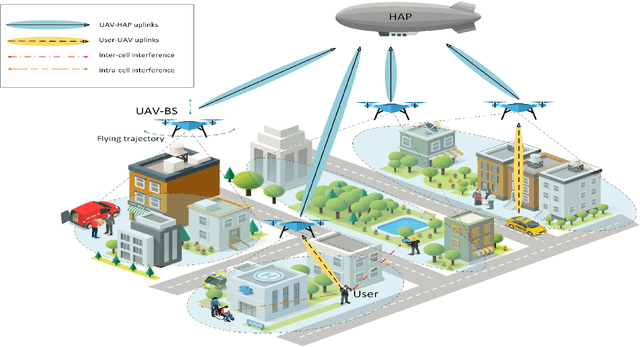

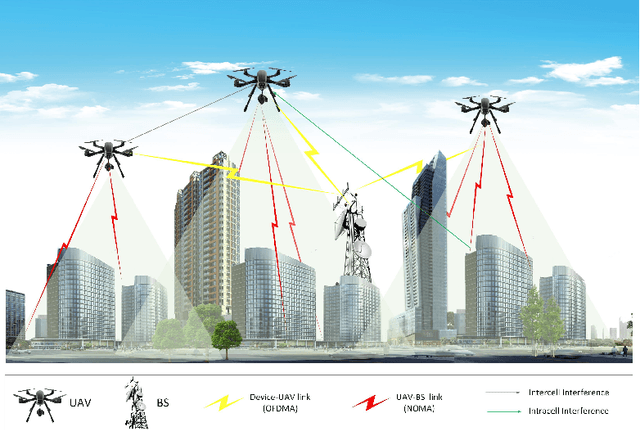

AI-based Radio and Computing Resource Allocation and Path Planning in NOMA NTNs: AoI Minimization under CSI Uncertainty

May 01, 2023

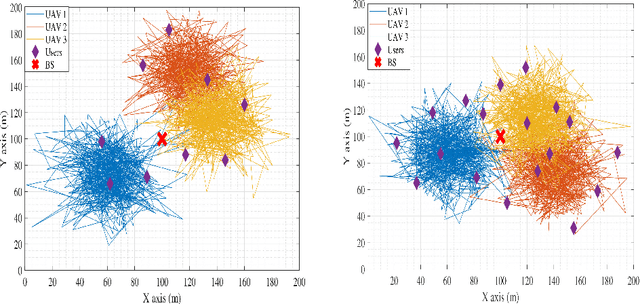

Abstract: In this paper, we develop a hierarchical aerial computing framework composed of a high altitude platform (HAP) and unmanned aerial vehicles (UAVs) to compute the fully offloaded tasks of terrestrial mobile users, which are connected through uplink non-orthogonal multiple access (UL-NOMA). In particular, the problem is formulated to minimize the age of information (AoI) of all users with elastic tasks by adjusting the UAVs' trajectories and the resource allocation on both the UAVs and the HAP, subject to channel state information (CSI) uncertainty and multiple resource constraints of the UAVs and the HAP. To solve this non-convex optimization problem, two methods, multi-agent deep deterministic policy gradient (MADDPG) and federated reinforcement learning (FRL), are proposed to design the UAVs' trajectories and obtain the channel, power, and CPU allocations. It is shown that task scheduling significantly reduces the average AoI, and that this improvement is more pronounced for larger task sizes. On the one hand, power allocation has only a marginal effect on the average AoI compared to using full transmission power for all users. On the other hand, simulation results show that our scheduling scheme achieves a lower average AoI than traditional transmissions (the fixed method).

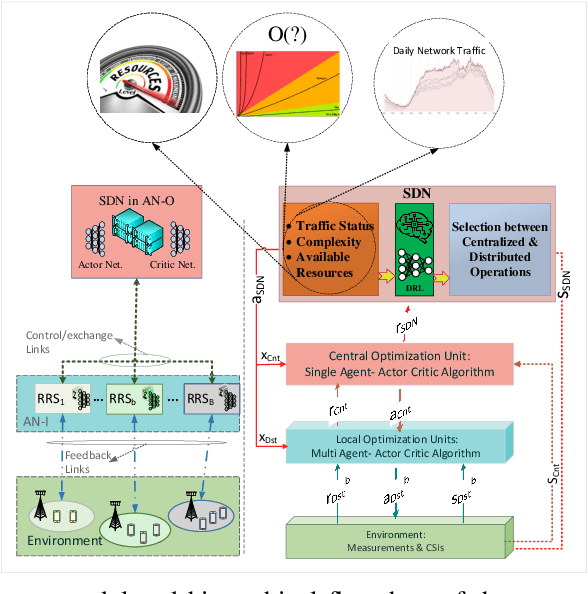

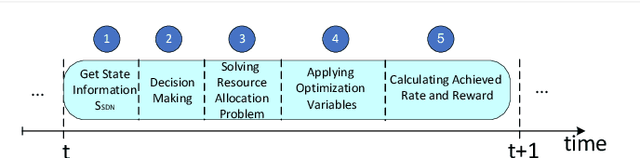

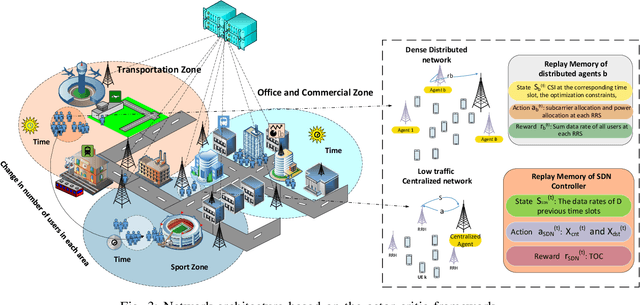

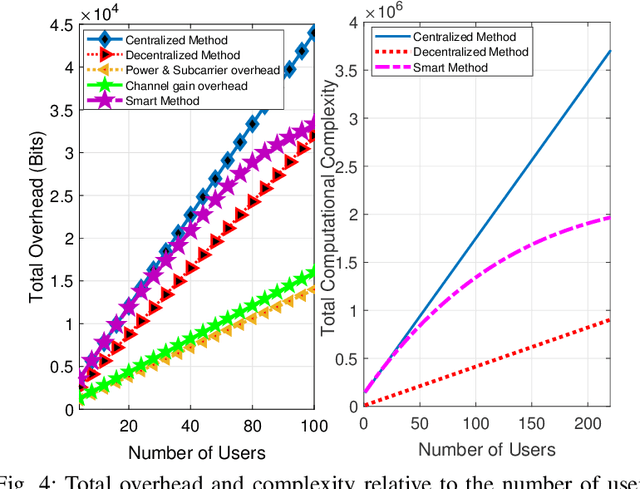

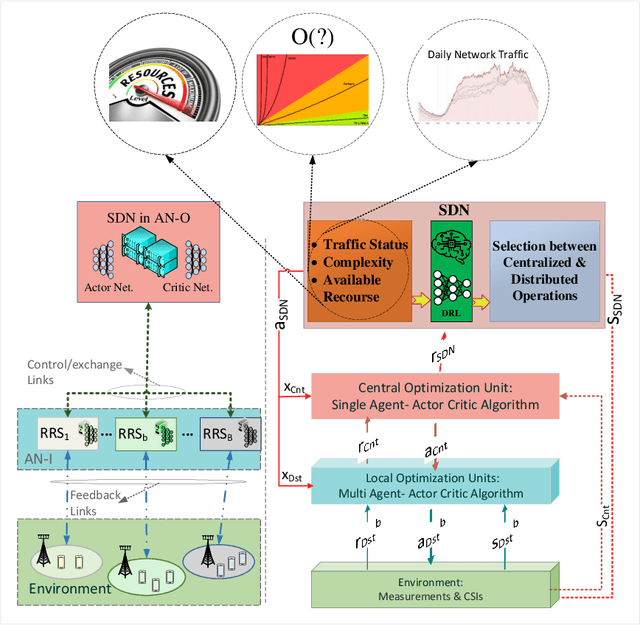

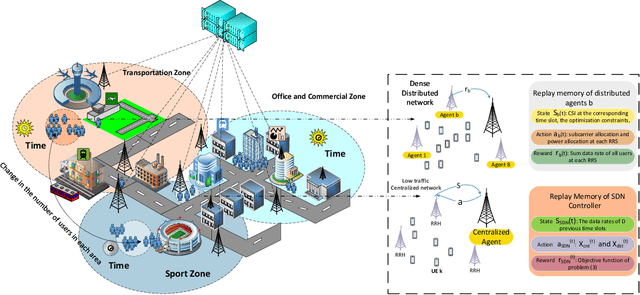

Smart Resource Allocation Model via Artificial Intelligence in Software Defined 6G Networks

Feb 09, 2023

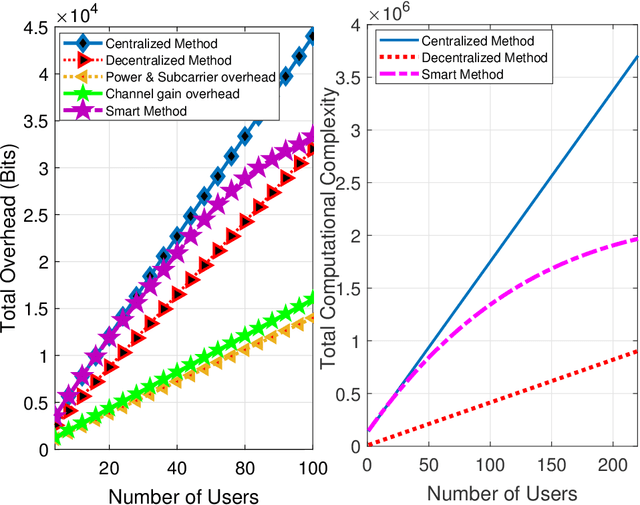

Abstract: In this paper, we design a new flexible, smart software-defined radio access network (soft-RAN) architecture with traffic awareness for sixth generation (6G) wireless networks. In particular, we consider a hierarchical resource allocation model for the proposed smart soft-RAN model, where the software-defined network (SDN) controller is the first and foremost layer of the framework. This unit dynamically monitors the network and selects a network operation type, based on either distributed or centralized resource allocation procedures, to perform decision-making intelligently. Our aim is to make the network more scalable and more flexible in terms of conflicting performance indicators such as achievable data rate, overhead, and complexity. To this end, we introduce a new metric, throughput-overhead-complexity (TOC), for the proposed machine learning-based algorithm, which supports a trade-off between these performance indicators. The decision making based on TOC is solved via deep reinforcement learning (DRL), which determines an appropriate resource allocation policy. Furthermore, for the selected algorithm, we employ the soft actor-critic (SAC) method, which is more accurate, scalable, and robust than other learning methods. Simulation results demonstrate that the proposed smart network achieves better performance in terms of TOC than fixed centralized or distributed resource management schemes that lack dynamism. Moreover, our proposed algorithm outperforms conventional learning methods employed in recent state-of-the-art network designs.

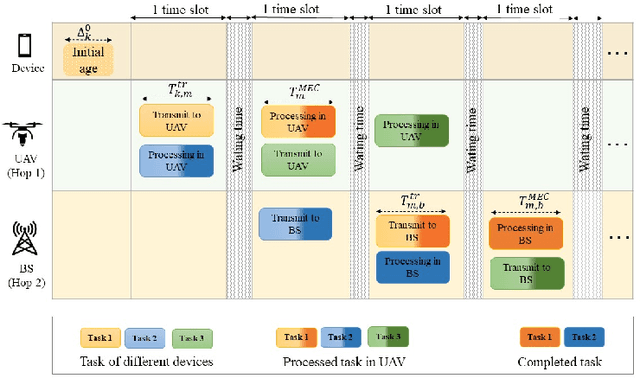

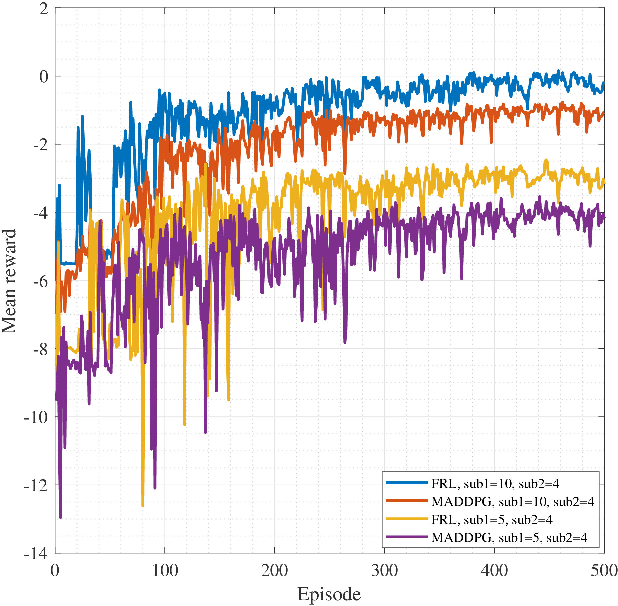

Two-Hop Age of Information Scheduling for Multi-UAV Assisted Mobile Edge Computing: FRL vs MADDPG

Jun 19, 2022

Abstract: In this work, we adopt the emerging technology of mobile edge computing (MEC) on unmanned aerial vehicles (UAVs) for communication-computing systems to optimize the age of information (AoI) in the network. We assume that tasks are processed jointly on the UAVs and the base station (BS) to enhance edge performance under limited connectivity and computing. Using UAVs and the BS jointly with MEC can reduce the AoI in the network. To maintain the freshness of the tasks, we formulate the AoI minimization in a two-hop communication framework, with the first hop at the UAVs and the second hop at the BS. To address this challenge, we solve the problem using a deep reinforcement learning (DRL) framework called federated reinforcement learning (FRL). In our network, we have two types of agents with different states and actions but with the same policy. Our FRL enables us to handle the two-step AoI minimization and UAV trajectory problems. In addition, we compare our proposed algorithm, which has a centralized processing unit to update the weights, with fully decentralized multi-agent deep deterministic policy gradient (MADDPG), which enhances the agent's performance. As a result, the suggested algorithm outperforms MADDPG by about 38%.
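
To make the AoI objective in the abstract above concrete, here is a minimal sketch that uses the common slotted definition: the age at slot t is t minus the generation slot of the freshest update delivered so far. The function name, the assumption of a fresh update at slot 0, and the sample timestamps are illustrative only; the paper's two-hop model, scheduling, and learning components are not reproduced.

```python
def aoi_trace(deliveries, horizon):
    """Per-slot age of information at the destination.

    deliveries: list of (generation_slot, delivery_slot) pairs (hypothetical).
    horizon: number of slots to simulate, starting at slot 0.
    Assumes a fresh update is available at slot 0.
    """
    # For each delivery slot, keep the freshest update that arrives in it.
    freshest_at = {}
    for generated, delivered in deliveries:
        freshest_at[delivered] = max(generated, freshest_at.get(delivered, generated))

    latest_generation = 0
    ages = []
    for t in range(horizon):
        if t in freshest_at:
            # A stale update arriving late never increases the age.
            latest_generation = max(latest_generation, freshest_at[t])
        ages.append(t - latest_generation)
    return ages


# Hypothetical example: updates generated at slots 2 and 5,
# delivered at slots 4 and 6 respectively.
ages = aoi_trace([(2, 4), (5, 6)], horizon=8)
average_aoi = sum(ages) / len(ages)
```

The age grows by one per slot and drops only when an update arrives, so shortening the gap between generation and delivery lowers the average, which is the quantity the abstract aims to minimize.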

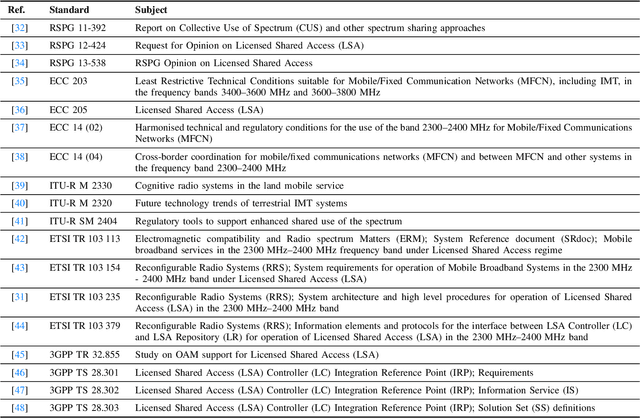

A Comprehensive Survey of Spectrum Sharing Schemes from a Standardization and Implementation Perspective

Mar 21, 2022

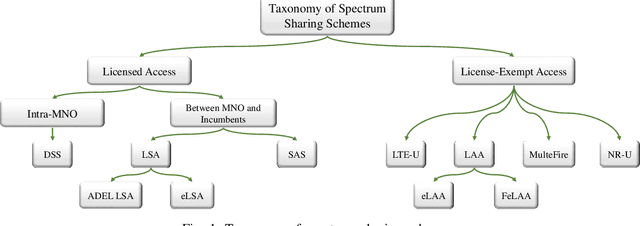

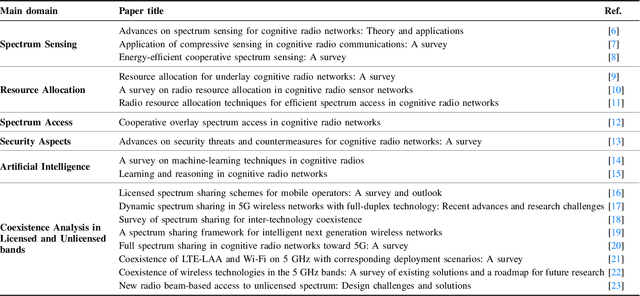

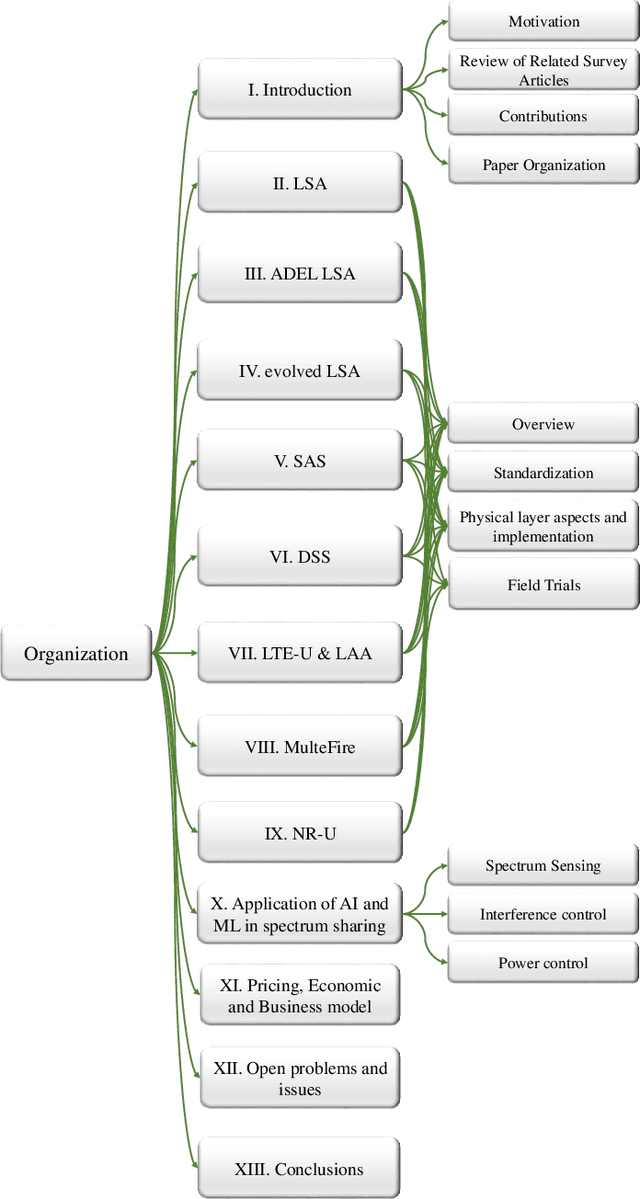

Abstract: As the services and requirements of next-generation wireless networks become increasingly diversified, it is estimated that the current frequency bands of mobile network operators (MNOs) will be unable to cope with the immensity of anticipated demands. Due to spectrum scarcity, there has been a growing trend among stakeholders toward identifying practical solutions that make the most productive use of the exclusively allocated bands on a shared basis through spectrum sharing mechanisms. However, due to the technical complexities of these mechanisms, their design presents challenges, as it requires coordination among multiple entities. To address this challenge, in this paper, we begin with a detailed review of the recent literature on spectrum sharing methods, classifying them on the basis of their operational frequency regime, that is, whether they are implemented to operate in licensed bands (e.g., licensed shared access (LSA), spectrum access system (SAS), and dynamic spectrum sharing (DSS)) or unlicensed bands (e.g., LTE-unlicensed (LTE-U), licensed assisted access (LAA), MulteFire, and new radio-unlicensed (NR-U)). Then, in order to narrow the gap between standardization and vendor-specific implementations, we provide a detailed review of the potential implementation scenarios and the necessary amendments to legacy cellular networks from the perspective of telecom vendors and regulatory bodies. Next, we analyze applications of artificial intelligence (AI) and machine learning (ML) techniques for facilitating spectrum sharing mechanisms and leveraging the full potential of autonomous sharing scenarios. Finally, we conclude the paper by presenting open research challenges, which aim to provide insights into prospective research endeavors.

Toward a Smart Resource Allocation Policy via Artificial Intelligence in 6G Networks: Centralized or Decentralized?

Feb 18, 2022

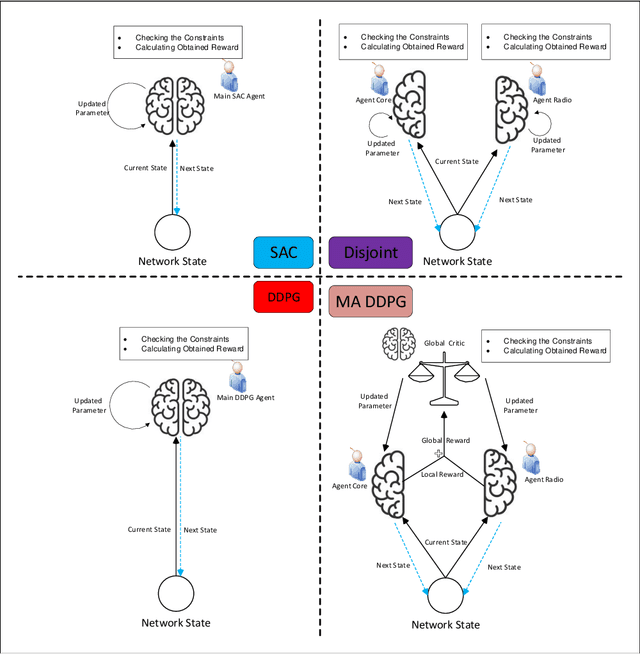

Abstract: In this paper, we design a new smart software-defined radio access network (RAN) architecture with important properties such as flexibility and traffic awareness for sixth generation (6G) wireless networks. In particular, we consider a hierarchical resource allocation framework for the proposed smart soft-RAN model, where the software-defined network (SDN) controller is the first and foremost layer of the framework. This unit dynamically monitors the network and selects a network operation type, based on either distributed or centralized resource allocation architectures, to perform decision-making intelligently. Our aim is to make the network more scalable and more flexible in terms of achievable data rate, overhead, and complexity indicators. To this end, we introduce a new metric, throughput-overhead-complexity (TOC), for the proposed machine learning-based algorithm, which makes a trade-off between these performance indicators. The decision making based on TOC is solved via deep reinforcement learning (DRL), which determines an appropriate resource allocation policy. Furthermore, for the selected algorithm, we employ the soft actor-critic method, which is more accurate, scalable, and robust than other learning methods. Simulation results demonstrate that the proposed smart network achieves better performance in terms of TOC than fixed centralized or distributed resource management schemes that lack dynamism. Moreover, our proposed algorithm outperforms conventional learning methods employed in other state-of-the-art network designs.

AI-based Robust Resource Allocation in End-to-End Network Slicing under Demand and CSI Uncertainties

Feb 10, 2022

Abstract: Network slicing (NwS) is one of the main technologies in the fifth generation of mobile communication and beyond (5G+). One of the important challenges in NwS is information uncertainty, which mainly involves demand and channel state information (CSI). Demand uncertainty is divided into three types: the number of user requests, the amount of bandwidth, and the requested virtual network function workloads. Moreover, the CSI uncertainty is modeled by three methods: worst-case, probabilistic, and hybrid. In this paper, our goal is to maximize the utility of the infrastructure provider by exploiting deep reinforcement learning algorithms in end-to-end NwS resource allocation under demand and CSI uncertainties. The proposed formulation is a non-convex mixed-integer non-linear programming problem. To perform robust resource allocation in problems that involve uncertainty, we need a history of previous information. To this end, we use a recurrent deterministic policy gradient (RDPG) algorithm, a recurrent and memory-based approach in deep reinforcement learning. Then, we compare the RDPG method in different scenarios with soft actor-critic (SAC), deep deterministic policy gradient (DDPG), distributed, and greedy algorithms. The simulation results show that the SAC method is better than the DDPG, distributed, and greedy methods, respectively. Moreover, the RDPG method outperforms the SAC approach on average by 70%.

Multi Agent Reinforcement Learning Trajectory Design and Two-Stage Resource Management in CoMP UAV VLC Networks

Dec 03, 2021

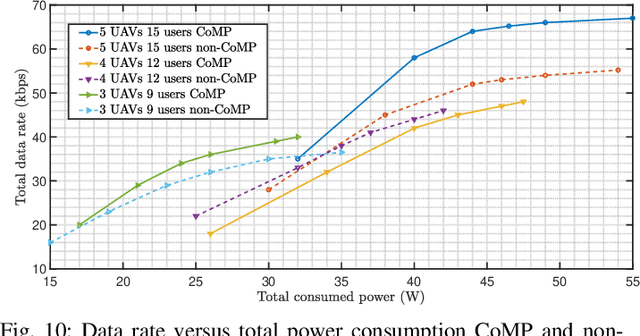

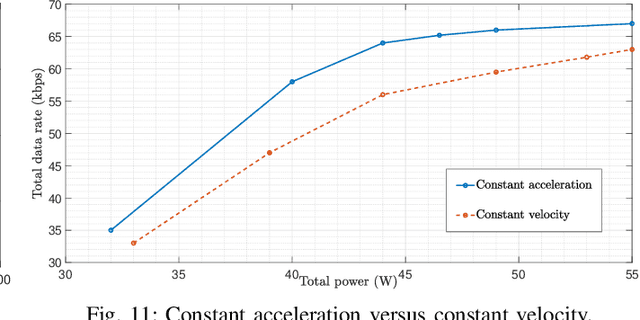

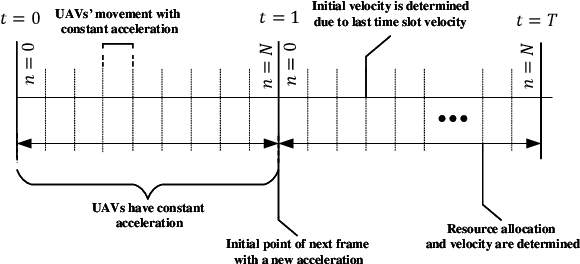

Abstract: In this paper, we consider unmanned aerial vehicles (UAVs) equipped with a visible light communication (VLC) access point and coordinated multipoint (CoMP) capability that allows users to connect to more than one UAV. The UAVs can move in 3-dimensional (3D) space at a constant acceleration, and a central server is responsible for synchronization and cooperation among the UAVs. The effect of accelerated UAV motion needs to be considered. Unlike most existing works, we examine the effects of variable speed on kinetics and radio resource allocations. For the proposed system model, we define two different time scales, frames and slots. In each frame, the acceleration of each UAV is specified, and in each slot, radio resources are allocated. Our goal is to formulate a multiobjective optimization problem in which the total data rate is maximized and the total communication power consumption is minimized simultaneously. To handle this multiobjective optimization, we first apply the scalarization method and then apply multi-agent deep deterministic policy gradient (MADDPG). We improve this solution method by adding two critic networks together with two-stage resource allocation. Simulation results indicate that the constant-acceleration motion of UAVs performs about 8% better than conventional motion systems.
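
The scalarization step mentioned in the abstract above can be illustrated with a weighted-sum form, which is one common way to fold two objectives into a single scalar that an agent can use as a reward. This is a minimal sketch under assumptions: the abstract does not state which scalarization is used, and the function name, the weight, and the normalization constants are hypothetical.

```python
def scalarized_objective(total_rate, total_power, weight,
                         rate_scale=1.0, power_scale=1.0):
    """Weighted-sum scalarization of two competing objectives.

    Rewards a high data rate and penalizes high power consumption.
    weight in [0, 1] sets the relative importance of the rate term.
    rate_scale and power_scale normalize the two terms to comparable units.
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    rate_term = weight * total_rate / rate_scale
    power_term = (1.0 - weight) * total_power / power_scale
    return rate_term - power_term


# Hypothetical example: equal weighting of a rate of 10 and a power of 2.
reward = scalarized_objective(total_rate=10.0, total_power=2.0, weight=0.5)
```

Sweeping the weight from 0 to 1 trades power savings against data rate, so each choice of weight corresponds to a different operating point between the two objectives.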

AI-based Radio Resource Management and Trajectory Design for PD-NOMA Communication in IRS-UAV Assisted Networks

Nov 06, 2021

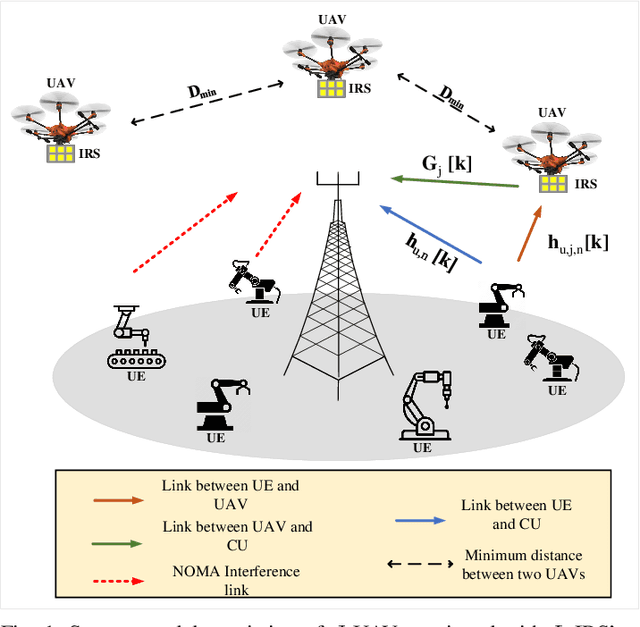

Abstract: In this paper, we consider unmanned aerial vehicles (UAVs) with attached intelligent reflecting surfaces (IRSs) that act as flying reflectors, reflecting the users' signals to the destination, and we utilize the power-domain non-orthogonal multiple access (PD-NOMA) scheme in the uplink. We investigate the benefits of the UAV-IRS for internet of things (IoT) networks, improving the freshness of the data collected from the IoT devices by optimizing the power, sub-carrier, and trajectory variables as well as the phase shift matrix elements. We consider minimizing the average age-of-information (AAoI) of users subject to the maximum transmit power limitations, PD-NOMA-related restrictions, and the constraints related to the UAV's movement. The optimization problem consists of discrete and continuous variables. Hence, we divide the resource allocation problem into two sub-problems and use two different reinforcement learning (RL)-based algorithms to solve them, namely the double deep Q-network (DDQN) and proximal policy optimization (PPO). Our numerical results illustrate the performance gains that can be achieved for IRS-enabled UAV communication systems. Moreover, we compare our deep RL (DRL)-based algorithm with a matching algorithm and a random trajectory, showing that the combination of the DDQN and PPO algorithms proposed in this paper performs 10% and 15% better than the matching algorithm and the random-trajectory algorithm, respectively.

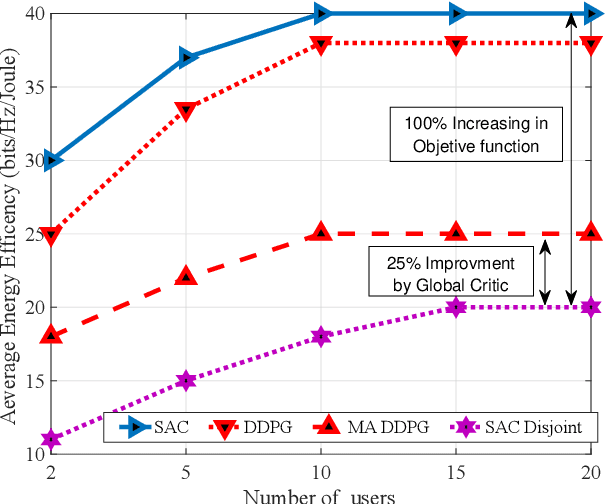

Learning based E2E Energy Efficient in Joint Radio and NFV Resource Allocation for 5G and Beyond Networks

Jul 13, 2021

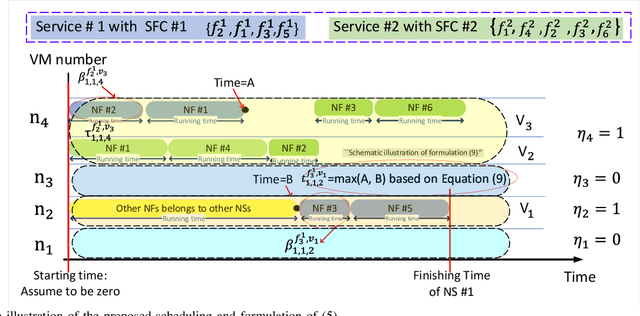

Abstract: In this paper, we propose a joint radio and core resource allocation framework for network function virtualization (NFV)-enabled networks. In the proposed system model, the goal is to maximize energy efficiency (EE) while guaranteeing end-to-end (E2E) quality of service (QoS) for different service types. To this end, we formulate an optimization problem in which power and spectrum resources are allocated in the radio part. In the core part, the chaining, placement, and scheduling of functions are performed to ensure the QoS of all users. This joint optimization problem is modeled as a Markov decision process (MDP), considering the time-varying characteristics of the available resources and wireless channels. A soft actor-critic deep reinforcement learning (SAC-DRL) algorithm based on the maximum entropy framework is subsequently utilized to solve this MDP. Numerical results reveal that the proposed joint approach based on the SAC-DRL algorithm could significantly reduce energy consumption compared to the case in which the radio resource allocation (R-RA) and NFV resource allocation (NFV-RA) problems are optimized separately.
