Narges Gholipoor

Liquid State Machine-Empowered Reflection Tracking in RIS-Aided THz Communications

Aug 08, 2022

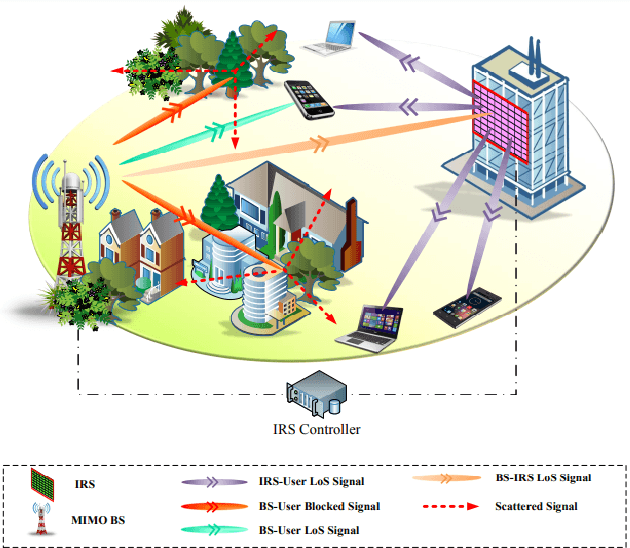

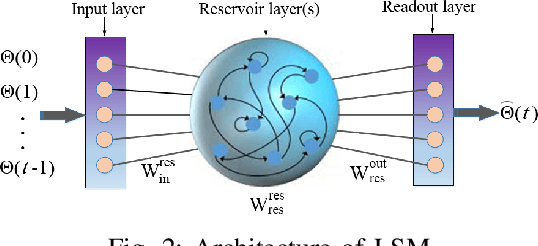

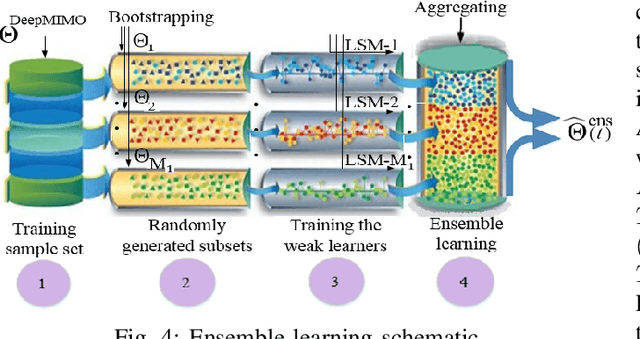

Abstract: Passive beamforming in reconfigurable intelligent surfaces (RISs) enables a feasible and efficient way of communication when the RIS reflection coefficients are precisely adjusted. In this paper, we present a framework to track the RIS reflection coefficients with the aid of deep learning from a time-series prediction perspective in a terahertz (THz) communication system. The proposed framework achieves a two-step enhancement over similar learning-driven counterparts. Specifically, in the first step, we train a liquid state machine (LSM) to track the historical RIS reflection coefficients at prior time steps (known as a time-series sequence) and predict their values at upcoming time steps. We also fine-tune the trained LSM through the Xavier initialization technique to decrease the prediction variance, thus resulting in a higher prediction accuracy. In the second step, we use an ensemble learning technique that leverages the prediction power of multiple LSMs to minimize the prediction variance and improve the precision of the first step. It is numerically demonstrated that, in the first step, employing the Xavier initialization technique to fine-tune the LSM results in up to 26% lower LSM prediction variance and up to 46% higher achievable spectral efficiency (SE) compared with the existing counterparts, when an RIS of size 11x11 is deployed. In the second step, under the same computational complexity as training a single LSM, ensemble learning with multiple LSMs reduces the prediction variance of a single LSM by up to 66% and improves the system's achievable SE by up to 54%.

Learning based E2E Energy Efficient in Joint Radio and NFV Resource Allocation for 5G and Beyond Networks

Jul 13, 2021

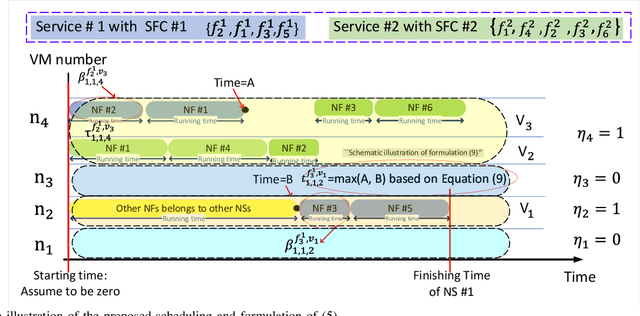

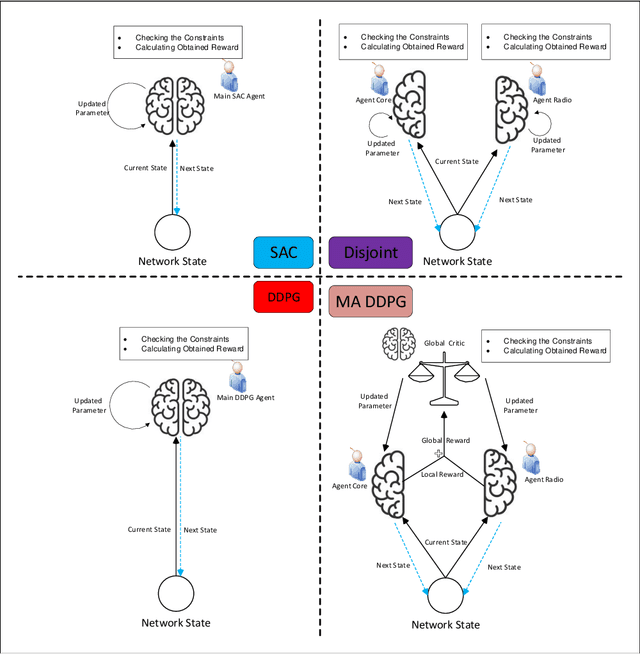

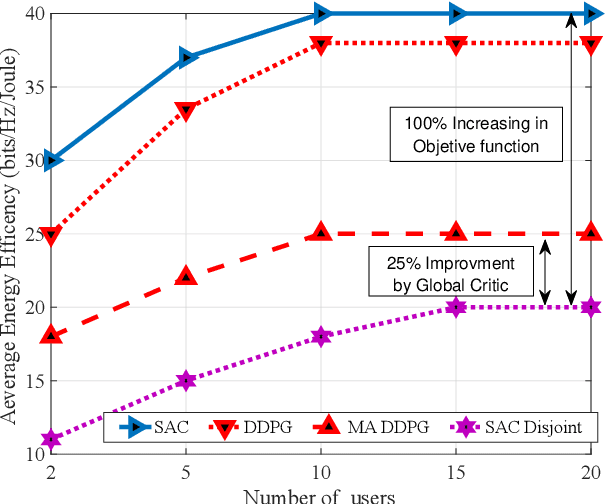

Abstract: In this paper, we propose a joint radio and core resource allocation framework for network function virtualization (NFV)-enabled networks. In the proposed system model, the goal is to maximize energy efficiency (EE) while guaranteeing end-to-end (E2E) quality of service (QoS) for different service types. To this end, we formulate an optimization problem in which power and spectrum resources are allocated in the radio part. In the core part, the chaining, placement, and scheduling of functions are performed to ensure the QoS of all users. This joint optimization problem is modeled as a Markov decision process (MDP), considering the time-varying characteristics of the available resources and wireless channels. A soft actor-critic deep reinforcement learning (SAC-DRL) algorithm based on the maximum entropy framework is subsequently utilized to solve this MDP. Numerical results reveal that the proposed joint approach based on the SAC-DRL algorithm can significantly reduce energy consumption compared to the case in which the radio resource allocation (R-RA) and NFV resource allocation (NFV-RA) problems are optimized separately.
