Mehdi Rasti

6G Resilience -- White Paper

Sep 10, 2025

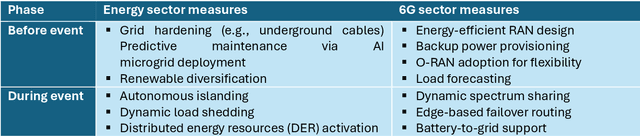

Abstract:6G must be designed to withstand, adapt to, and evolve amid prolonged, complex disruptions. Mobile networks' shift from efficiency-first to sustainability-aware has motivated this white paper to assert that resilience is a primary design goal, alongside sustainability and efficiency, encompassing technology, architecture, and economics. We promote resilience by analysing dependencies between mobile networks and other critical systems, such as energy, transport, and emergency services, and illustrate how cascading failures spread through infrastructures. We formalise resilience using the 3R framework: reliability, robustness, resilience. Subsequently, we translate this into measurable capabilities: graceful degradation, situational awareness, rapid reconfiguration, and learning-driven improvement and recovery. Architecturally, we promote edge-native and locality-aware designs, open interfaces, and programmability to enable islanded operations, fallback modes, and multi-layer diversity (radio, compute, energy, timing). Key enablers include AI-native control loops with verifiable behaviour, zero-trust security rooted in hardware and supply-chain integrity, and networking techniques that prioritise critical traffic, time-sensitive flows, and inter-domain coordination. Resilience also has a techno-economic aspect: open platforms and high-quality complementors generate ecosystem externalities that enhance resilience while opening new markets. We identify nine business-model groups and several patterns aligned with the 3R objectives, and we outline governance and standardisation. This white paper serves as an initial step and catalyst for 6G resilience. It aims to inspire researchers, professionals, government officials, and the public, providing them with the essential components to understand and shape the development of 6G resilience.

Highly Dynamic and Flexible Spatio-Temporal Spectrum Management with AI-Driven O-RAN: A Multi-Granularity Marketplace Framework

Feb 19, 2025

Abstract:Current spectrum-sharing frameworks struggle with adaptability, often being either static or insufficiently dynamic. They primarily emphasize temporal sharing while overlooking spatial and spectral dimensions. We propose an adaptive, AI-driven spectrum-sharing framework within the O-RAN architecture, integrating discriminative and generative AI (GenAI) to forecast spectrum needs across multiple timescales and spatial granularities. A marketplace model, managed by an authorized spectrum broker, enables operators to trade spectrum dynamically, balancing static assignments with real-time trading. GenAI enhances traffic prediction, spectrum estimation, and allocation, optimizing utilization while reducing costs. This modular, flexible approach fosters operator collaboration, maximizing efficiency and revenue. A key research challenge is refining allocation granularity and spatio-temporal dynamics beyond existing models.

A Study on Characterization of Near-Field Sub-Regions For Phased-Array Antennas

Oct 23, 2024

Abstract:We characterize three near-field sub-regions for phased array antennas by elaborating on the boundaries *Fraunhofer*, *radial-focal*, and *non-radiating* distances. The *Fraunhofer distance* which is the boundary between near and far field has been well studied in the literature on the principal axis (PA) of single-element center-fed antennas, where PA denotes the axis perpendicular to the antenna surface passing from the antenna center. The results are also valid for phased arrays if the PA coincides with the boresight, which is not commonly the case in practice. In this work, we completely characterize the Fraunhofer distance by considering various angles between the PA and the boresight. For the *radial-focal distance*, below which beamfocusing is feasible in the radial domain, a formal characterization of the corresponding region based on the general model of near-field channels (GNC) is missing in the literature. We investigate this and elaborate that the maximum-ratio-transmission (MRT) beamforming based on the simple uniform spherical wave (USW) channel model results in a radial gap between the achieved and the desired focal points. While the gap vanishes when the array size $N$ becomes sufficiently large, we propose a practical algorithm to remove this gap in the non-asymptotic case when $N$ is not very large. Finally, the *non-radiating* distance, below which the reactive power dominates active power, has been studied in the literature for single-element antennas. We analytically explore this for phased arrays and show how different excitation phases of the antenna array impact it. We also clarify some misconceptions about the non-radiating and Fresnel distances prevailing in the literature.
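
For orientation, the on-axis Fraunhofer distance usually quoted in textbooks for an aperture of largest dimension $D$ at wavelength $\lambda$ is $d_F = 2D^2/\lambda$. The sketch below evaluates only that classical formula. It does not implement the angle-dependent characterization described in the abstract, and the uniform linear array, half-wavelength spacing, 28 GHz carrier, and function names are illustrative assumptions.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def wavelength(carrier_hz: float) -> float:
    """Free-space wavelength in metres for a carrier frequency in hertz."""
    return SPEED_OF_LIGHT / carrier_hz


def ula_aperture(num_elements: int, spacing_m: float) -> float:
    """Largest dimension of a uniform linear array (assumed geometry)."""
    return (num_elements - 1) * spacing_m


def fraunhofer_distance(aperture_m: float, wavelength_m: float) -> float:
    """Classical on-axis Fraunhofer distance 2 * D**2 / lambda."""
    return 2.0 * aperture_m ** 2 / wavelength_m


if __name__ == "__main__":
    lam = wavelength(28e9)                  # assumed 28 GHz carrier
    aperture = ula_aperture(64, lam / 2.0)  # assumed 64 elements, lambda/2 spacing
    print(f"wavelength: {lam * 1e3:.2f} mm")
    print(f"aperture:   {aperture:.3f} m")
    print(f"d_F:        {fraunhofer_distance(aperture, lam):.2f} m")
```

With half-wavelength spacing the expression reduces to $(N-1)^2\lambda/2$, so this classical boundary grows quadratically with the number of elements $N$.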

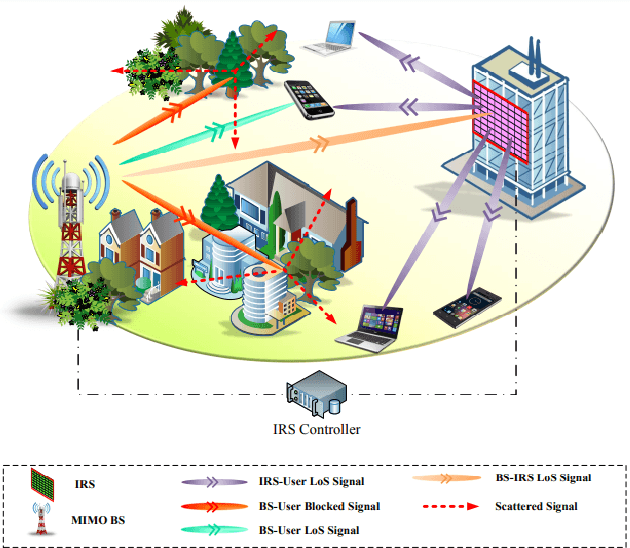

Beyond Diagonal RIS for Multi-Band Multi-Cell MIMO Networks: A Practical Frequency-Dependent Model and Performance Analysis

Jan 12, 2024

Abstract:This paper delves into the unexplored frequency-dependent characteristics of beyond diagonal reconfigurable intelligent surfaces (BD-RISs). A generalized practical frequency-dependent reflection model is proposed as a fundamental framework for configuring fully-connected and group-connected RISs in a multi-band multi-base station (BS) multiple-input multiple-output (MIMO) network. Leveraging this practical model, multi-objective optimization strategies are formulated to maximize the received power at multiple users connected to different BSs, each operating under a distinct carrier frequency. By relying on matrix theory and exploiting the symmetric structure of the reflection matrices inherent to BD-RISs, closed-form relaxed solutions for the challenging optimization problems are derived. The ideal solutions are then combined with codebook-based approaches to configure the practical capacitance values for the BD-RISs. Simulation results reveal the frequency-dependent behaviors of different RIS architectures and demonstrate the effectiveness of the proposed schemes. Notably, BD-RISs exhibit superior resilience to frequency deviations compared to conventional single-connected RISs. Moreover, the proposed optimization approaches prove effective in enabling the targeted operation of BD-RISs across one or more carrier frequencies. The results also shed light on the potential for harmful interference in the absence of proper synchronization between RISs and adjacent BSs.

Indoor Positioning via Gradient Boosting Enhanced with Feature Augmentation using Deep Learning

Nov 16, 2022

Abstract:With the emergence of the Internet of Things (IoT), localization within indoor environments has become inevitable and has attracted a great deal of attention in recent years. Several efforts have been made to cope with the challenges of accurate positioning systems in the presence of signal interference. In this paper, we propose a novel deep learning approach through Gradient Boosting Enhanced with Step-Wise Feature Augmentation using Artificial Neural Network (AugBoost-ANN) for indoor localization applications as it trains over labeled data. For this purpose, we propose an IoT architecture using a star network topology to collect the Received Signal Strength Indicator (RSSI) of Bluetooth Low Energy (BLE) modules by means of a Raspberry Pi as an Access Point (AP) in an indoor environment. The dataset for the experiments is gathered in the real world in different periods to match the real environments. Next, we address the challenges of the AugBoost-ANN training which augments features in each iteration of making a decision tree using a deep neural network and the transfer learning technique. Experimental results show more than 8% improvement in terms of accuracy in comparison with the existing gradient boosting and deep learning methods recently proposed in the literature, and our proposed model acquires a mean location accuracy of 0.77 m.

Liquid State Machine-Empowered Reflection Tracking in RIS-Aided THz Communications

Aug 08, 2022

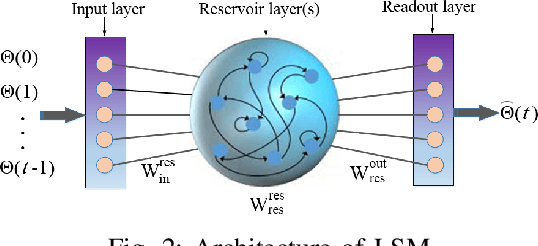

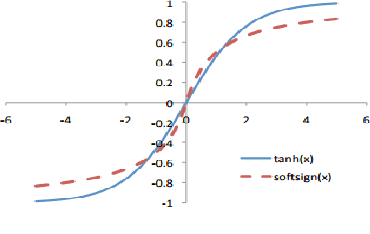

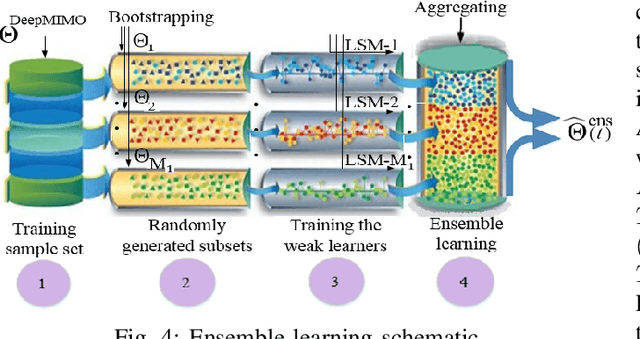

Abstract:Passive beamforming in reconfigurable intelligent surfaces (RISs) enables a feasible and efficient way of communication when the RIS reflection coefficients are precisely adjusted. In this paper, we present a framework to track the RIS reflection coefficients with the aid of deep learning from a time-series prediction perspective in a terahertz (THz) communication system. The proposed framework achieves a two-step enhancement over similar learning-driven counterparts. Specifically, in the first step, we train a liquid state machine (LSM) to track the historical RIS reflection coefficients at prior time steps (known as a time-series sequence) and predict their upcoming time steps. We also fine-tune the trained LSM through the Xavier initialization technique to decrease the prediction variance, thus resulting in a higher prediction accuracy. In the second step, we use an ensemble learning technique which leverages the prediction power of multiple LSMs to minimize the prediction variance and improve the precision of the first step. It is numerically demonstrated that, in the first step, employing the Xavier initialization technique to fine-tune the LSM results in at most 26% lower LSM prediction variance and as much as 46% improvement in achievable spectral efficiency (SE) over the existing counterparts, when an RIS of size 11x11 is deployed. In the second step, under the same computational complexity as training a single LSM, ensemble learning with multiple LSMs reduces the prediction variance of a single LSM by up to 66% and improves the system achievable SE by at most 54%.

MIX-MAB: Reinforcement Learning-based Resource Allocation Algorithm for LoRaWAN

Jun 07, 2022

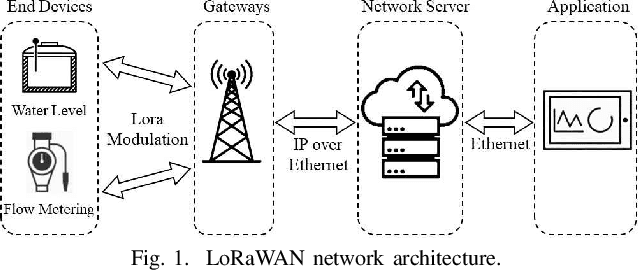

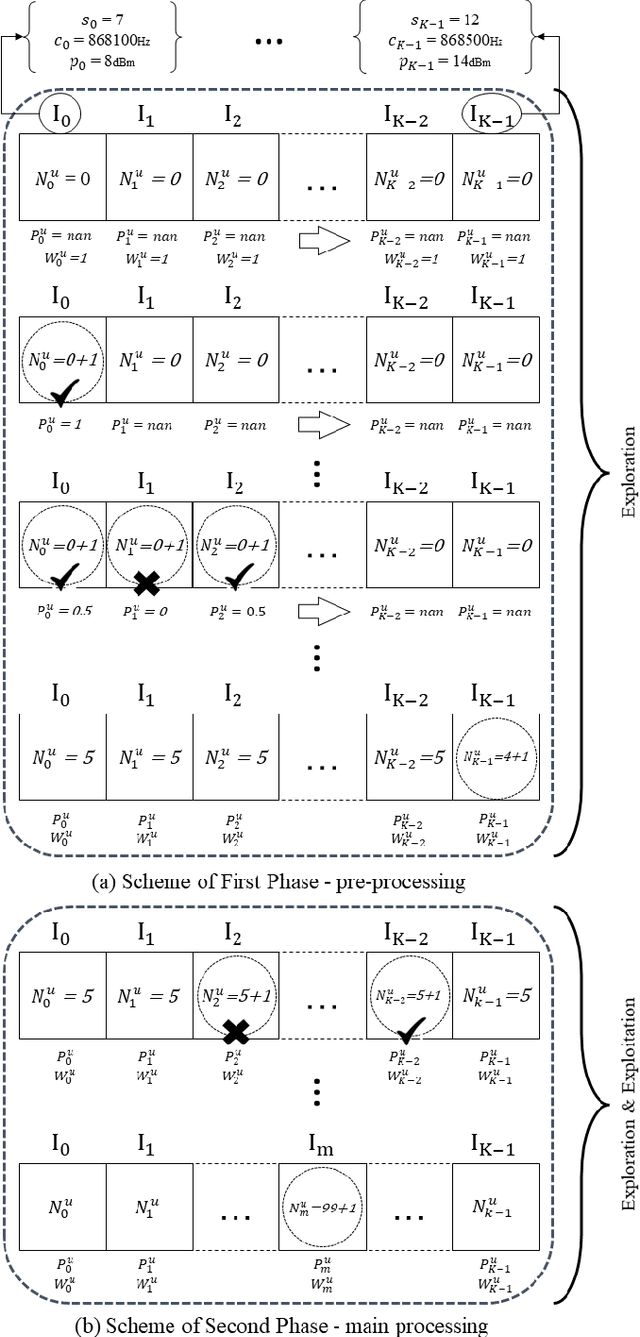

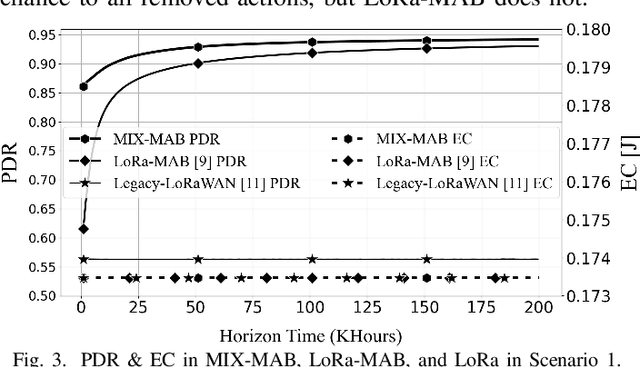

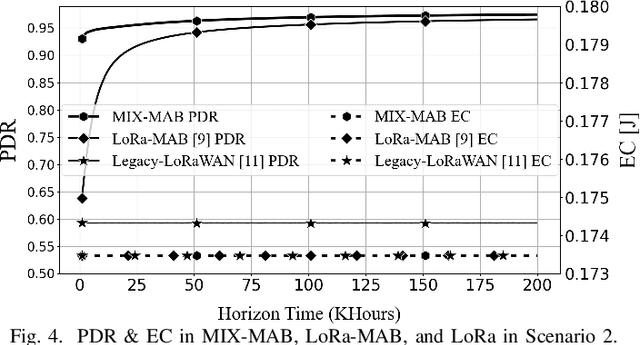

Abstract:This paper focuses on improving the resource allocation algorithm in terms of packet delivery ratio (PDR), i.e., the number of successfully received packets sent by end devices (EDs) in a long-range wide-area network (LoRaWAN). Setting the transmission parameters significantly affects the PDR. Employing reinforcement learning (RL), we propose a resource allocation algorithm that enables the EDs to configure their transmission parameters in a distributed manner. We model the resource allocation problem as a multi-armed bandit (MAB) and then address it by proposing a two-phase algorithm named MIX-MAB, which consists of the exponential weights for exploration and exploitation (EXP3) and successive elimination (SE) algorithms. We evaluate the MIX-MAB performance through simulation results and compare it with other existing approaches. Numerical results show that the proposed solution performs better than the existing schemes in terms of convergence time and PDR.
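
To make the bandit formulation concrete, here is a minimal sketch of the standard EXP3 update on its own. It is not the authors' MIX-MAB algorithm: the successive elimination phase and the way the two phases are combined are not shown. The arms stand in for hypothetical transmission-parameter configurations, and the reward model (1 if a packet is acknowledged, 0 otherwise) and the success probabilities are assumptions made for illustration.

```python
import math
import random


class Exp3:
    """Standard EXP3 for rewards in [0, 1]."""

    def __init__(self, num_arms, gamma=0.1, rng=None):
        self.num_arms = num_arms
        self.gamma = gamma                  # exploration rate in (0, 1]
        self.weights = [1.0] * num_arms
        self.rng = rng or random.Random()

    def probabilities(self):
        # Mix the weight-proportional distribution with uniform exploration.
        total = sum(self.weights)
        return [(1.0 - self.gamma) * w / total + self.gamma / self.num_arms
                for w in self.weights]

    def select_arm(self):
        probs = self.probabilities()
        return self.rng.choices(range(self.num_arms), weights=probs, k=1)[0]

    def update(self, arm, reward):
        # Importance-weighted estimate: only the played arm is updated.
        probs = self.probabilities()
        estimate = reward / probs[arm]
        self.weights[arm] *= math.exp(self.gamma * estimate / self.num_arms)
        # Rescale to avoid overflow; the probabilities are unchanged.
        top = max(self.weights)
        self.weights = [w / top for w in self.weights]


def simulate(success_probs, rounds, seed=0):
    """Run EXP3 against hypothetical Bernoulli packet-delivery outcomes."""
    rng = random.Random(seed)
    bandit = Exp3(len(success_probs), gamma=0.1, rng=rng)
    for _ in range(rounds):
        arm = bandit.select_arm()
        reward = 1.0 if rng.random() < success_probs[arm] else 0.0
        bandit.update(arm, reward)
    return bandit


if __name__ == "__main__":
    bandit = simulate([0.1, 0.9, 0.2], rounds=2000)
    print([round(p, 3) for p in bandit.probabilities()])
```

Each end device could run such a learner locally, which matches the distributed setting the abstract describes. The uniform-exploration term keeps every configuration sampled with probability at least `gamma / num_arms`.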

Xavier-Enabled Extreme Reservoir Machine for Millimeter-Wave Beamspace Channel Tracking

Jun 01, 2022

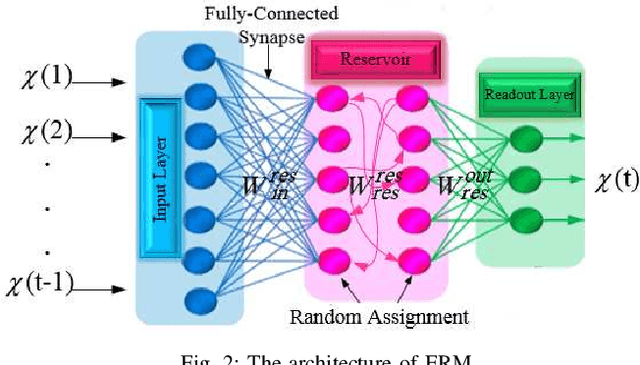

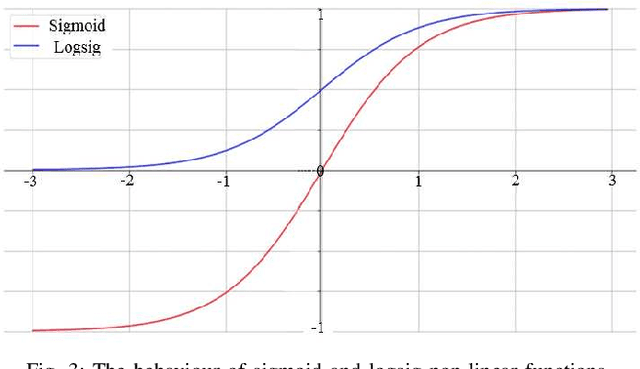

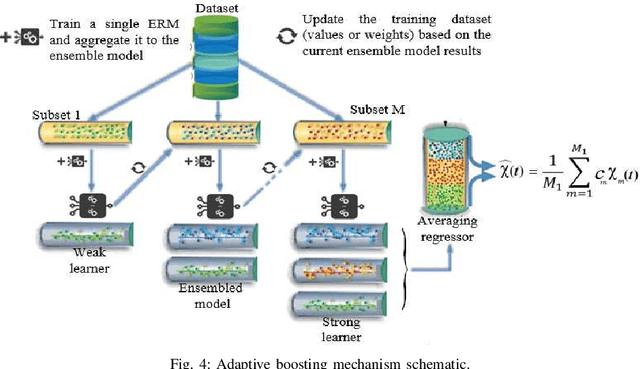

Abstract:In this paper, we propose an accurate two-phase millimeter-wave (mmWave) beamspace channel tracking mechanism. In particular, in the first phase, we train an extreme reservoir machine (ERM) for tracking the historical features of the mmWave beamspace channel and predicting them in upcoming time steps. Towards a more accurate prediction, we further fine-tune the ERM by means of the Xavier initializer technique, whereby the input weights in ERM are initially derived from a zero mean and finite variance Gaussian distribution, leading to a 49% reduction in the prediction variance of the conventional ERM. The proposed method numerically improves the achievable spectral efficiency (SE) over the existing counterparts by 13% when the signal-to-noise ratio (SNR) is 15 dB. We further investigate an ensemble learning technique in the second phase by sequentially incorporating multiple ERMs to form an ensembled model, namely adaptive boosting (AdaBoost), which further reduces the prediction variance in conventional ERM by 56%, and yields a 21% enhancement in achievable SE over the existing schemes at SNR = 15 dB.
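
The Gaussian form of Xavier (Glorot) initialization can be sketched as follows. The abstract states only that the input weights are drawn from a zero-mean, finite-variance Gaussian. The variance $2/(\text{fan\_in} + \text{fan\_out})$ used here is the commonly cited Glorot-normal choice and is an assumption about the setup, as are the layer sizes and function names.

```python
import math
import random


def xavier_std(fan_in, fan_out):
    """Standard deviation of the Glorot-normal distribution (assumed variant)."""
    return math.sqrt(2.0 / (fan_in + fan_out))


def xavier_normal(fan_in, fan_out, seed=None):
    """Return a fan_out x fan_in weight matrix as a list of rows.

    Each entry is drawn from a zero-mean Gaussian with the Glorot-normal
    standard deviation.
    """
    rng = random.Random(seed)
    std = xavier_std(fan_in, fan_out)
    return [[rng.gauss(0.0, std) for _ in range(fan_in)]
            for _ in range(fan_out)]


if __name__ == "__main__":
    # Hypothetical sizes: 64 input features feeding 128 reservoir units.
    weights = xavier_normal(fan_in=64, fan_out=128, seed=0)
    flat = [w for row in weights for w in row]
    mean = sum(flat) / len(flat)
    var = sum((w - mean) ** 2 for w in flat) / len(flat)
    print(f"target variance: {xavier_std(64, 128) ** 2:.5f}")
    print(f"sample variance: {var:.5f}")
```

Scaling the variance by the layer widths is intended to keep activation magnitudes roughly constant from layer to layer.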

A Learning Approach for Joint Design of Event-triggered Control and Power-Efficient Resource Allocation

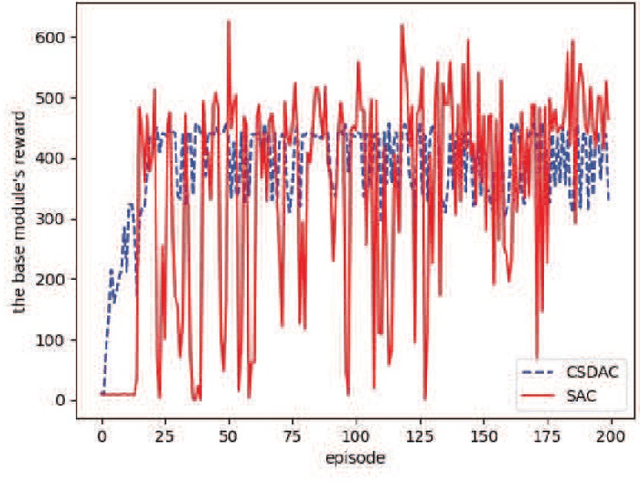

May 14, 2022

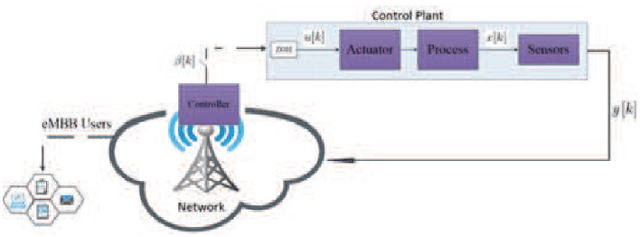

Abstract:In emerging Industrial Cyber-Physical Systems (ICPSs), the joint design of communication and control sub-systems is essential, as these sub-systems are interconnected. In this paper, we study the joint design problem of an event-triggered control and an energy-efficient resource allocation in a fifth generation (5G) wireless network. We formally state the problem as a multi-objective optimization one, aiming to minimize the number of updates on the actuators' input and the power consumption in the downlink transmission. To address the problem, we propose a model-free hierarchical reinforcement learning approach with a uniformly ultimate boundedness stability guarantee that learns four policies simultaneously. These policies contain an update time policy on the actuators' input, a control policy, and energy-efficient sub-carrier and power allocation policies. Our simulation results show that the proposed approach can properly control a simulated ICPS and significantly decrease the number of updates on the actuators' input as well as the downlink power consumption.

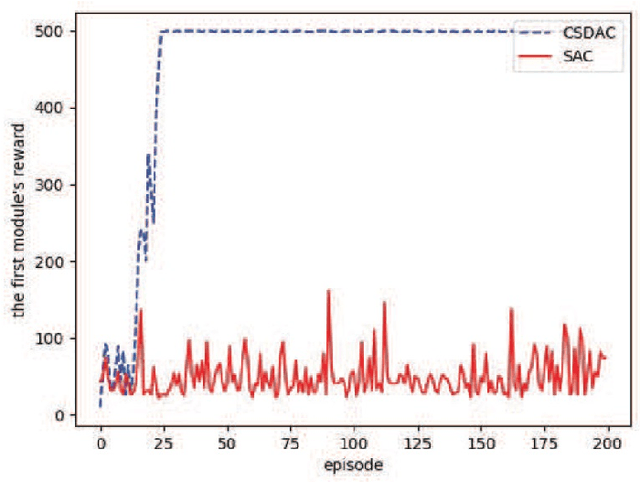

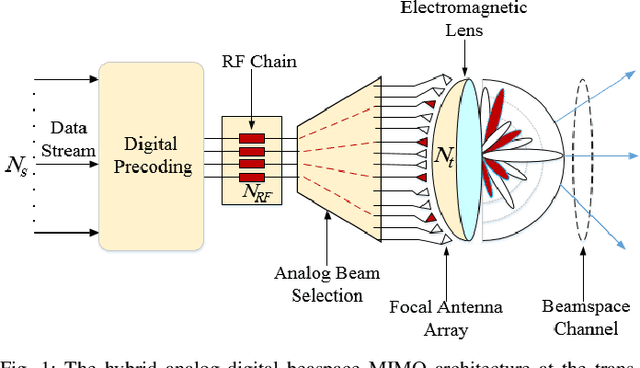

Swish-Driven GoogleNet for Intelligent Analog Beam Selection in Terahertz Beamspace MIMO

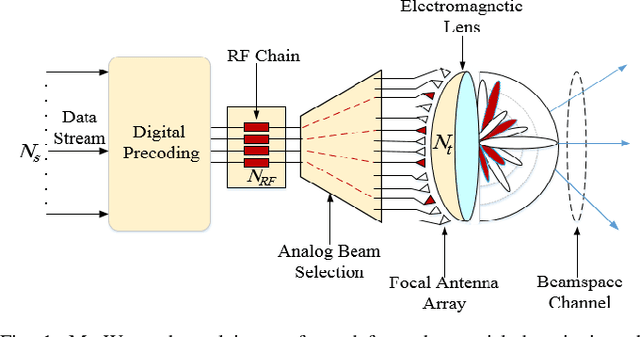

Oct 12, 2021

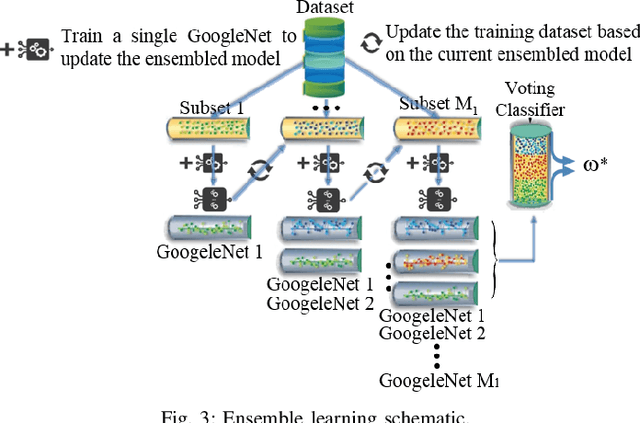

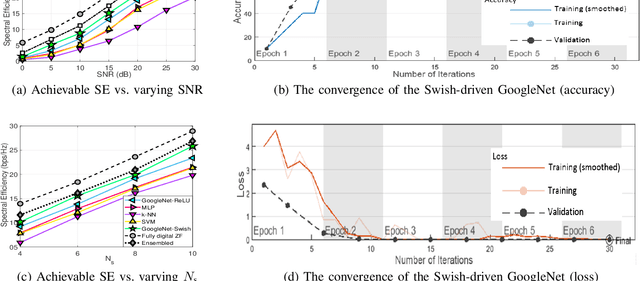

Abstract:In this paper, we propose an intelligent analog beam selection strategy in a terahertz (THz) band beamspace multiple-input multiple-output (MIMO) system. First, inspired by transfer learning, we fine-tune the pre-trained off-the-shelf GoogleNet classifier to learn analog beam selection as a multi-class mapping problem. Simulation results show 83% accuracy for the analog beam selection, which subsequently results in a 12% spectral efficiency (SE) gain over the existing counterparts. Towards a more accurate classifier, we replace the conventional rectified linear unit (ReLU) activation function of the GoogleNet with the recently proposed Swish and retrain the fine-tuned GoogleNet to learn analog beam selection. It is numerically indicated that the fine-tuned Swish-driven GoogleNet achieves 86% accuracy, as well as an 18% improvement in achievable SE over similar schemes. Finally, a strong ensembled classifier is developed to learn analog beam selection by sequentially training multiple fine-tuned Swish-driven GoogleNet classifiers. According to the simulations, the strong ensembled model is 90% accurate and yields a 27% gain in achievable SE in comparison with prior methods.
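
For readers unfamiliar with the activation swap, Swish is commonly defined as $\mathrm{swish}(x) = x \cdot \sigma(\beta x)$, where $\sigma$ is the logistic sigmoid. The scalar sketch below compares it with ReLU. Setting $\beta = 1$ is an assumption, since the abstract does not state the value used, and nothing here reproduces the GoogleNet fine-tuning itself.

```python
import math


def sigmoid(x):
    """Numerically stable logistic sigmoid."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def swish(x, beta=1.0):
    """Swish activation x * sigmoid(beta * x); beta = 1 is an assumed default."""
    return x * sigmoid(beta * x)


def relu(x):
    """Rectified linear unit, shown for comparison."""
    return max(0.0, x)


if __name__ == "__main__":
    for x in (-4.0, -1.0, 0.0, 1.0, 4.0):
        print(f"x={x:+.1f}  relu={relu(x):.4f}  swish={swish(x):+.4f}")
```

Unlike ReLU, Swish is smooth and takes small negative values for negative inputs, so its gradient there is not identically zero.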
