Matti Latva-aho

Centre for Wireless Communications

Conditional Denoising Diffusion Autoencoders for Wireless Semantic Communications

Sep 26, 2025

Abstract: Semantic communication (SemCom) systems aim to learn the mapping from low-dimensional semantics to high-dimensional ground-truth. While this is more akin to a "domain translation" problem, existing frameworks typically emphasize channel-adaptive neural encoding-decoding schemes, lacking full exploration of signal distribution. Moreover, such methods so far have employed autoencoder-based architectures, where the encoding is tightly coupled to a matched decoder, causing scalability issues in practice. To address these gaps, diffusion autoencoder models are proposed for wireless SemCom. The goal is to learn a "semantic-to-clean" mapping, from the semantic space to the ground-truth probability distribution. A neural encoder at the semantic transmitter extracts the high-level semantics, and a conditional diffusion model (CDiff) at the semantic receiver exploits the source distribution for signal-space denoising, while the received semantic latents are incorporated as the conditioning input to "steer" the decoding process towards the semantics intended by the transmitter. It is analytically proved that the proposed decoder model is a consistent estimator of the ground-truth data. Furthermore, extensive simulations over CIFAR-10 and MNIST datasets are provided along with design insights, highlighting the performance compared to legacy autoencoders and variational autoencoders (VAE). Simulations are further extended to multi-user SemCom, identifying the dominating factors in a more realistic setup.

On the Deployment of Multiple Radio Stripes for Large-Scale Near-Field RF Wireless Power Transfer

Aug 29, 2025

Abstract: This paper investigates the deployment of radio stripe systems for indoor radio-frequency (RF) wireless power transfer (WPT) in line-of-sight near-field scenarios. The focus is on environments where energy demand is concentrated in specific areas, referred to as 'hotspots', spatial zones with higher user density or consistent energy requirements. We formulate a joint clustering and radio stripe deployment problem that aims to maximize the minimum received power across all hotspots. To address the complexity, we decouple the problem into two stages: i) clustering for assigning radio stripes to hotspots based on their spatial positions and near-field propagation characteristics, and ii) antenna element placement optimization. In particular, we propose four radio stripe deployment algorithms. Two are based on general successive convex approximation (SCA) and signomial programming (SGP) methods. The other two are shape-constrained solutions where antenna elements are arranged along either straight lines or regular polygons, enabling simpler deployment. Numerical results show that the proposed clustering method converges effectively, with Chebyshev initialization significantly outperforming random initialization. The optimized deployments consistently outperform baseline benchmarks across a wide range of frequencies and radio stripe lengths, while the polygon-shaped deployment achieves better performance compared to other approaches. Meanwhile, the line-shaped deployment demonstrates an advantage under high boresight gain settings, benefiting from increased spatial diversity and broader angular coverage.

On the Robustness of RSMA to Adversarial BD-RIS-Induced Interference

May 26, 2025

Abstract: This article investigates the robustness of rate-splitting multiple access (RSMA) in multi-user multiple-input multiple-output (MIMO) systems to interference attacks against channel acquisition induced by beyond-diagonal RISs (BD-RISs). Two primary attack strategies, random and aligned interference, are proposed for fully connected and group-connected BD-RIS architectures. Valid random reflection coefficients are generated exploiting the Takagi factorization, while potent aligned interference attacks are achieved through optimization strategies based on a quadratically constrained quadratic program (QCQP) reformulation followed by projections onto the unitary manifold. Our numerical findings reveal that, when perfect channel state information (CSI) is available, RSMA behaves similarly to space-division multiple access (SDMA) and thus is highly susceptible to the attack, with BD-RIS inducing severe performance loss and significantly outperforming diagonal RIS. However, under imperfect CSI, RSMA consistently demonstrates significantly greater robustness than SDMA, particularly as the system's transmit power increases.
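One way to picture the random-coefficient step is sketched below. It assumes that a "valid" fully connected BD-RIS reflection matrix is symmetric and unitary (a lossless, reciprocal surface), which the abstract does not spell out. Under that assumption, the Takagi factorization of such a matrix has all singular values equal to one, so any matrix of the form `Q @ Q.T` with `Q` unitary qualifies. The function name `random_symmetric_unitary` is hypothetical and this is not necessarily the authors' exact procedure.

```python
import numpy as np

def random_symmetric_unitary(n, rng):
    """Draw a random n x n symmetric unitary matrix (illustrative helper).

    Assumption: a valid fully connected BD-RIS matrix is symmetric and unitary.
    Its Takagi factorization then reads Theta = Q @ Q.T with Q unitary.
    """
    # Complex Gaussian matrix; its QR factor Q is unitary.
    z = (rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))) / np.sqrt(2)
    q, _ = np.linalg.qr(z)
    # Plain transpose (not conjugate transpose) keeps the product symmetric.
    return q @ q.T

rng = np.random.default_rng(0)
theta = random_symmetric_unitary(4, rng)

is_symmetric = np.allclose(theta, theta.T)
is_unitary = np.allclose(theta.conj().T @ theta, np.eye(4))
print(is_symmetric, is_unitary)
```

For a group-connected architecture, the same construction could be applied per group and the blocks placed on the diagonal, again as an assumption about the structure rather than a statement of the paper's method.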

Sense-then-Charge: Wireless Power Transfer to Unresponsive Devices with Unknown Location

Apr 29, 2025

Abstract: This paper explores a multi-antenna dual-functional radio frequency (RF) wireless power transfer (WPT) and radar system to charge multiple unresponsive devices. We formulate a beamforming problem to maximize the minimum received power at the devices without prior location and channel state information (CSI) knowledge. We propose dividing transmission blocks into sensing and charging phases. First, the location of the devices is estimated by sending sensing signals and performing multiple signal classification and least squares estimation on the received echo. Then, the estimates are used for CSI prediction and RF-WPT beamforming. Simulation results reveal that there is an optimal number of blocks allocated for sensing and charging depending on the system setup. Our sense-then-charge (STC) protocol can outperform CSI-free benchmarks and achieve near-optimal performance with a sufficient number of receive antennas and transmit power. However, STC struggles if using insufficient antennas or power as device numbers grow.
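The sensing phase relies on multiple signal classification (MUSIC). The sketch below shows the core idea in a much simpler setting than the paper's: a far-field, half-wavelength uniform linear array estimating angles only, with synthetic snapshots. The array model, noise level, grid, and the helper names `steering` and `music_doa` are all assumptions made for illustration, not the authors' system model.

```python
import numpy as np

def steering(theta_deg, m, d=0.5):
    """Far-field steering vectors of an m-element ULA (spacing d in wavelengths)."""
    theta = np.deg2rad(np.atleast_1d(theta_deg))
    k = np.arange(m)[:, None]
    return np.exp(-2j * np.pi * d * k * np.sin(theta)[None, :])

def music_doa(x, n_src, grid):
    """Estimate n_src angles from snapshots x (antennas x time) via MUSIC."""
    m, t = x.shape
    r = x @ x.conj().T / t                    # sample covariance
    _, v = np.linalg.eigh(r)                  # eigenvalues in ascending order
    en = v[:, : m - n_src]                    # noise subspace
    a = steering(grid, m)
    p = 1.0 / np.sum(np.abs(en.conj().T @ a) ** 2, axis=0)  # pseudo-spectrum
    # Keep the n_src strongest local maxima of the pseudo-spectrum.
    peaks = np.where((p[1:-1] > p[:-2]) & (p[1:-1] > p[2:]))[0] + 1
    best = peaks[np.argsort(p[peaks])[-n_src:]]
    return np.sort(grid[best])

rng = np.random.default_rng(1)
m, t = 8, 200
true_angles = np.array([-20.0, 35.0])         # synthetic ground truth (degrees)
s = (rng.standard_normal((2, t)) + 1j * rng.standard_normal((2, t))) / np.sqrt(2)
noise = 0.1 * (rng.standard_normal((m, t)) + 1j * rng.standard_normal((m, t))) / np.sqrt(2)
x = steering(true_angles, m) @ s + noise

grid = np.arange(-90.0, 90.25, 0.25)
est_angles = music_doa(x, 2, grid)
print(est_angles)
```

In the protocol described above, estimates of this kind would then feed the CSI prediction and beamforming steps; a location estimate (rather than angle only) would need a richer signal model than this sketch uses.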

Vessel Length Estimation from Magnetic Wake Signature: A Physics-Informed Residual Neural Network Approach

Apr 27, 2025

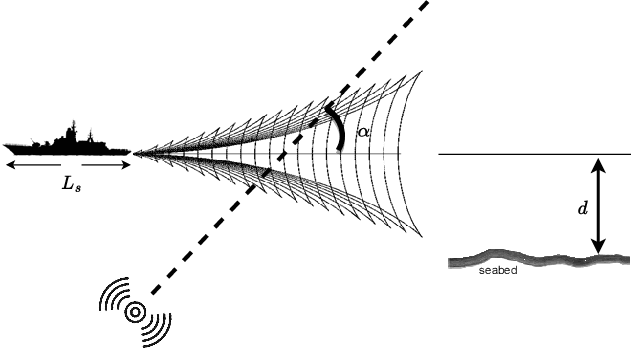

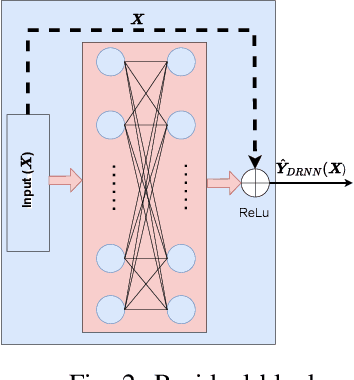

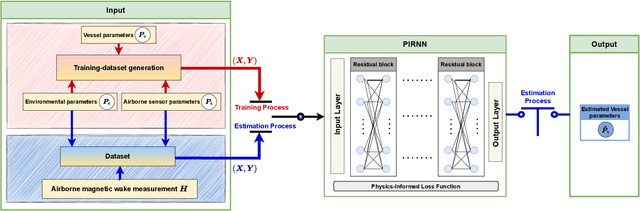

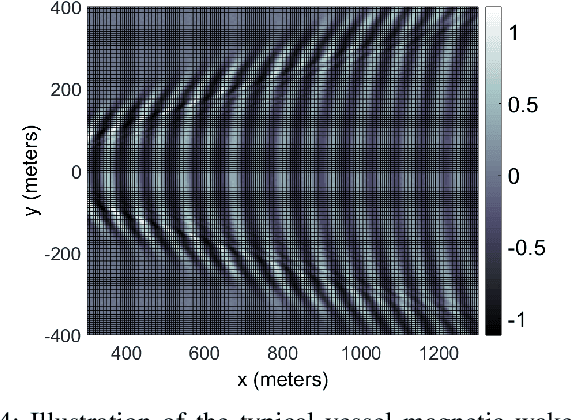

Abstract: Marine remote sensing enhances maritime surveillance, environmental monitoring, and naval operations. Vessel length estimation, a key component of this technology, supports effective maritime surveillance by empowering features such as vessel classification. Departing from traditional methods relying on two-dimensional hydrodynamic wakes or computationally intensive satellite imagery, this paper introduces an innovative approach for vessel length estimation that leverages the subtle magnetic wake signatures of vessels, captured through a low-complexity one-dimensional profile from a single airborne magnetic sensor scan. The proposed method centers around our characterized nonlinear integral equations that connect the magnetic wake to the vessel length within a realistic finite-depth marine environment. To solve the derived equations, we initially leverage a deep residual neural network (DRNN). The proposed DRNN-based solution framework is shown to be unable to exactly learn the intricate relationships between parameters when constrained by a limited training dataset. To overcome this issue, we introduce an innovative approach leveraging a physics-informed residual neural network (PIRNN). This model integrates physical formulations directly into the loss function, leading to improved performance in terms of both accuracy and convergence speed. Considering a sensor scan angle of less than $15^\circ$, which maintains a reasonable margin below Kelvin's limit angle of $19.5^\circ$, we explore the impact of various parameters on the accuracy of the vessel length estimation, including sensor scan angle, vessel speed, and sea depth. Numerical simulations demonstrate the superiority of the proposed PIRNN method, achieving mean length estimation errors consistently below 5% for vessels longer than 100 m. For shorter vessels, the errors generally remain under 10%.

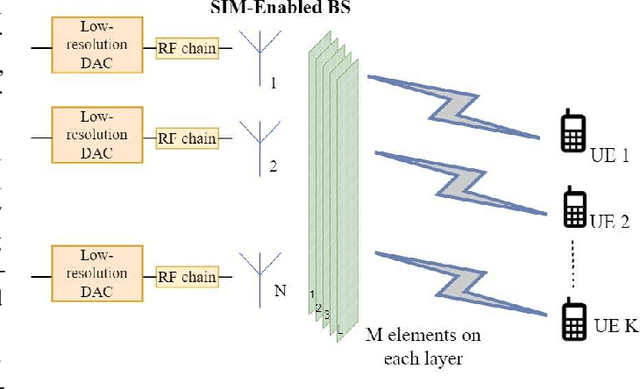

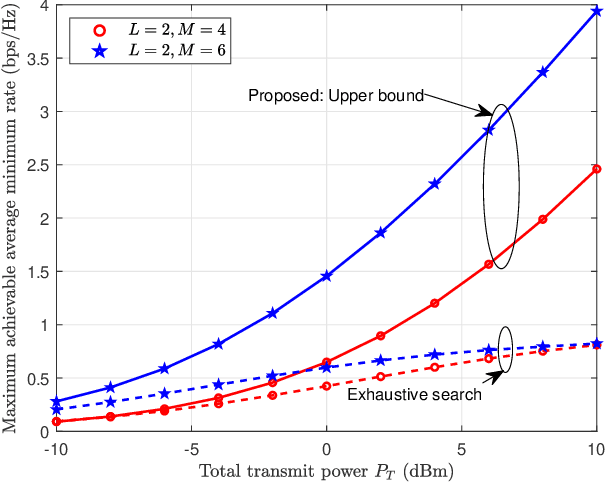

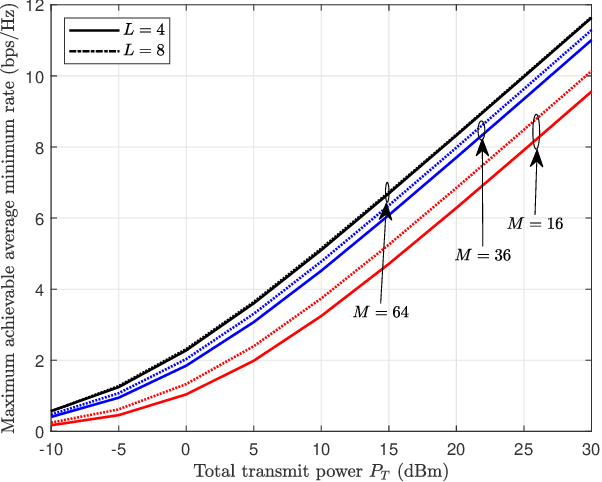

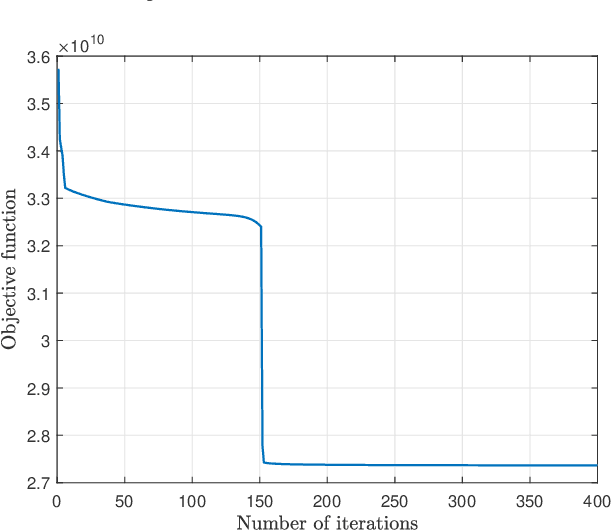

Max-Min Fairness for Stacked Intelligent Metasurface-Assisted Multi-User MISO Systems

Apr 20, 2025

Abstract: Stacked intelligent metasurface (SIM) is an emerging technology that uses multiple reconfigurable surface layers to enable flexible wave-based beamforming. In this paper, we focus on an SIM-assisted multi-user multiple-input single-output system, where it is essential to ensure that all users receive a fair and reliable service level. To this end, we develop two max-min fairness algorithms based on instantaneous channel state information (CSI) and statistical CSI. For the instantaneous CSI case, we propose an alternating optimization algorithm that jointly optimizes power allocation using geometric programming and wave-based beamforming coefficients using the gradient descent-ascent method. For the statistical CSI case, since deriving an exact expression for the average minimum achievable rate is analytically intractable, we derive a tight upper bound and thereby formulate a stochastic optimization problem. This problem is then solved, capitalizing on an alternating approach combining geometric programming and gradient descent algorithms, to obtain the optimal policies. Our numerical results show significant improvements in the minimum achievable rate compared to the benchmark schemes. In particular, for the instantaneous CSI scenario, the individual impact of the optimal wave-based beamforming is significantly higher than that of the power allocation strategy. Moreover, the proposed upper bound is shown to be tight in the low signal-to-noise ratio regime under the statistical CSI.

A CNN-based End-to-End Learning for RIS-assisted Communication System

Mar 18, 2025

Abstract: Reconfigurable intelligent surface (RIS) is an emerging technology that is used to improve the system performance in beyond 5G systems. In this letter, we propose a novel convolutional neural network (CNN)-based autoencoder to jointly optimize the transmitter, the receiver, and the RIS of a RIS-assisted communication system. The proposed system jointly optimizes the sub-tasks of the transmitter, the receiver, and the RIS such as encoding/decoding, channel estimation, phase optimization, and modulation/demodulation. Numerical results show that the bit error rate (BER) performance of the CNN-based autoencoder system is better than the theoretical BER performance of the RIS-assisted communication systems.

Highly Dynamic and Flexible Spatio-Temporal Spectrum Management with AI-Driven O-RAN: A Multi-Granularity Marketplace Framework

Feb 19, 2025

Abstract: Current spectrum-sharing frameworks struggle with adaptability, often being either static or insufficiently dynamic. They primarily emphasize temporal sharing while overlooking spatial and spectral dimensions. We propose an adaptive, AI-driven spectrum-sharing framework within the O-RAN architecture, integrating discriminative and generative AI (GenAI) to forecast spectrum needs across multiple timescales and spatial granularities. A marketplace model, managed by an authorized spectrum broker, enables operators to trade spectrum dynamically, balancing static assignments with real-time trading. GenAI enhances traffic prediction, spectrum estimation, and allocation, optimizing utilization while reducing costs. This modular, flexible approach fosters operator collaboration, maximizing efficiency and revenue. A key research challenge is refining allocation granularity and spatio-temporal dynamics beyond existing models.

Uplink Rate Splitting Multiple Access with Imperfect Channel State Information and Interference Cancellation

Jan 31, 2025

Abstract: This article investigates the performance of uplink rate splitting multiple access (RSMA) in a two-user scenario, addressing an under-explored domain compared to its downlink counterpart. With the increasing demand for uplink communication in applications like the Internet-of-Things, it is essential to account for practical imperfections, such as inaccuracies in channel state information at the receiver (CSIR) and limitations in successive interference cancellation (SIC), to provide realistic assessments of system performance. Specifically, we derive closed-form expressions for the outage probability, throughput, and asymptotic outage behavior of uplink users, considering imperfect CSIR and SIC. We validate the accuracy of these derived expressions using Monte Carlo simulations. Our findings reveal that at low transmit power levels, imperfect CSIR affects system performance significantly more severely than SIC imperfections. However, as the transmit power increases, the impact of imperfect CSIR diminishes, while the influence of SIC imperfections becomes more pronounced. Moreover, we highlight the impact of the rate allocation factor on user performance. Finally, our comparison with non-orthogonal multiple access (NOMA) highlights the outage performance trade-offs between RSMA and NOMA. RSMA proves to be more effective in managing imperfect CSIR and enhances performance through strategic message splitting, resulting in more robust communication.
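The validation step mentioned above, checking a closed-form outage expression against Monte Carlo simulation, can be illustrated on a far simpler link than the paper's. The sketch below assumes a single user, Rayleigh fading with unit mean channel gain, and perfect CSIR, for which the outage probability at target rate R and average SNR is 1 - exp(-(2^R - 1)/SNR). It does not reproduce the paper's RSMA expressions, and the function names and parameter values are hypothetical.

```python
import numpy as np

def outage_closed_form(snr, rate):
    """Outage probability of a single Rayleigh-fading link (textbook expression)."""
    return 1.0 - np.exp(-(2.0 ** rate - 1.0) / snr)

def outage_monte_carlo(snr, rate, n_trials, rng):
    """Empirical outage: fraction of channel draws whose capacity falls below rate."""
    gain = rng.exponential(1.0, size=n_trials)   # |h|^2 under Rayleigh fading
    return np.mean(np.log2(1.0 + snr * gain) < rate)

rng = np.random.default_rng(0)
snr = 10.0      # linear scale (10 dB), illustrative value
rate = 1.0      # target rate in bit/s/Hz, illustrative value

p_cf = outage_closed_form(snr, rate)
p_mc = outage_monte_carlo(snr, rate, 200_000, rng)
print(p_cf, p_mc)
```

Agreement between the two numbers within the Monte Carlo sampling error is the kind of check the abstract refers to; the paper's expressions additionally account for imperfect CSIR, imperfect SIC, and message splitting.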

Efficient Channel Prediction for Beyond Diagonal RIS-Assisted MIMO Systems with Channel Aging

Nov 21, 2024

Abstract: Novel reconfigurable intelligent surface (RIS) architectures, known as beyond diagonal RISs (BD-RISs), have been proposed to enhance reflection efficiency and expand RIS capabilities. However, their passive nature, non-diagonal reflection matrix, and the large number of coupled reflecting elements complicate the channel state information (CSI) estimation process. The challenge further escalates in scenarios with fast-varying channels. In this paper, we address this challenge by proposing novel joint channel estimation and prediction strategies with low overhead and high accuracy for two different RIS architectures in a BD-RIS-assisted multiple-input multiple-output system under correlated fast-fading environments with channel aging. The channel estimation procedure utilizes the Tucker2 decomposition with bilinear alternating least squares, which is exploited to decompose the cascade channels of the BD-RIS-assisted system into effective channels of reduced dimension. The channel prediction framework is based on a convolutional neural network combined with an autoregressive predictor. The estimated/predicted CSI is then utilized to optimize the RIS phase shifts aiming at the maximization of the downlink sum rate. Insightful simulation results demonstrate that our proposed approach is robust to channel aging, and exhibits a high estimation accuracy. Moreover, our scheme can deliver a high average downlink sum rate, outperforming other state-of-the-art channel estimation methods. The results also reveal a remarkable reduction in pilot overhead of up to 98% compared to baseline schemes, all while imposing low computational complexity.
