Nandana Rajatheva

Max-Min Fairness for Stacked Intelligent Metasurface-Assisted Multi-User MISO Systems

Apr 20, 2025

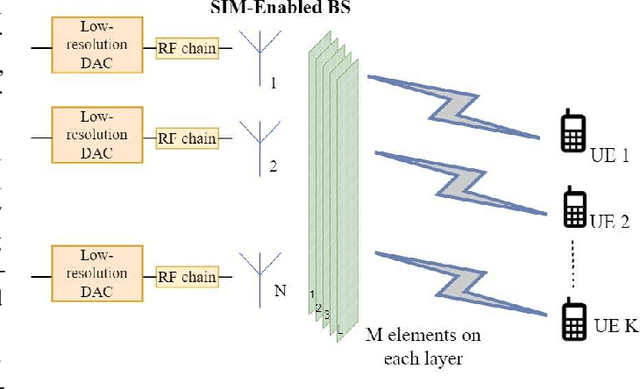

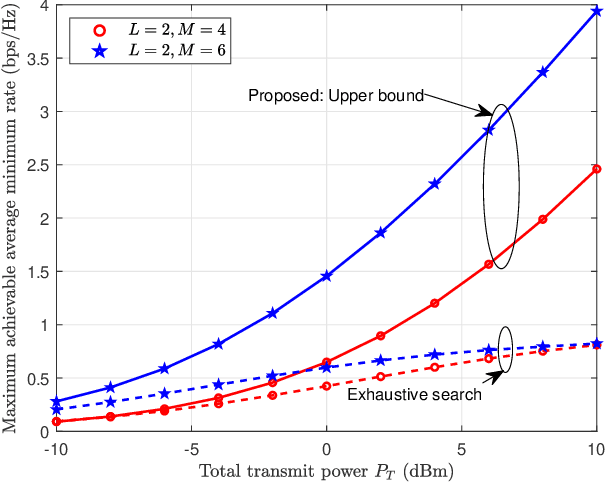

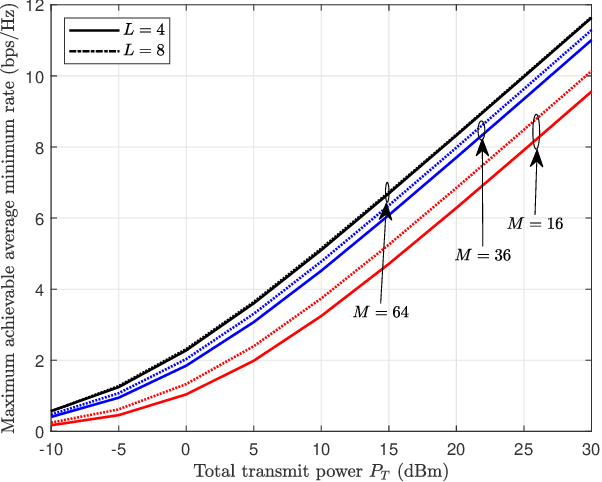

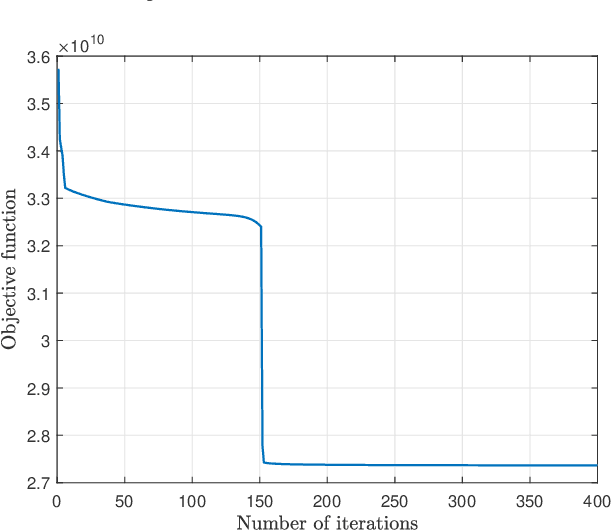

Abstract: Stacked intelligent metasurface (SIM) is an emerging technology that uses multiple reconfigurable surface layers to enable flexible wave-based beamforming. In this paper, we focus on a SIM-assisted multi-user multiple-input single-output system, where it is essential to ensure that all users receive a fair and reliable service level. To this end, we develop two max-min fairness algorithms based on instantaneous channel state information (CSI) and statistical CSI. For the instantaneous CSI case, we propose an alternating optimization algorithm that jointly optimizes power allocation using geometric programming and wave-based beamforming coefficients using the gradient descent-ascent method. For the statistical CSI case, since deriving an exact expression for the average minimum achievable rate is analytically intractable, we derive a tight upper bound and thereby formulate a stochastic optimization problem. This problem is then solved with an alternating approach that combines geometric programming and gradient descent algorithms to obtain the optimal policies. Our numerical results show significant improvements in the minimum achievable rate compared to the benchmark schemes. In particular, for the instantaneous CSI scenario, the individual impact of the optimal wave-based beamforming is significantly higher than that of the power allocation strategy. Moreover, the proposed upper bound is shown to be tight in the low signal-to-noise ratio regime under the statistical CSI.

A CNN-based End-to-End Learning for RIS-assisted Communication System

Mar 18, 2025

Abstract: Reconfigurable intelligent surface (RIS) is an emerging technology that is used to improve system performance in beyond-5G systems. In this letter, we propose a novel convolutional neural network (CNN)-based autoencoder to jointly optimize the transmitter, the receiver, and the RIS of a RIS-assisted communication system. The proposed system jointly optimizes the sub-tasks of the transmitter, the receiver, and the RIS, such as encoding/decoding, channel estimation, phase optimization, and modulation/demodulation. We show numerically that the bit error rate (BER) performance of the CNN-based autoencoder system is better than the theoretical BER performance of RIS-assisted communication systems.

Comprehensive Review of Deep Unfolding Techniques for Next-Generation Wireless Communication Systems

Feb 09, 2025

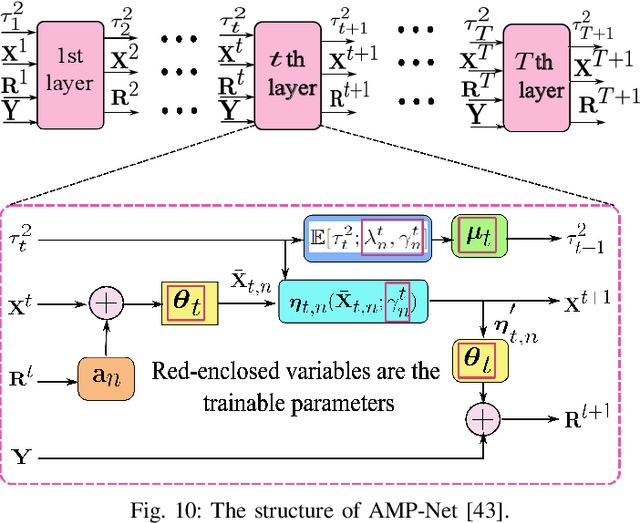

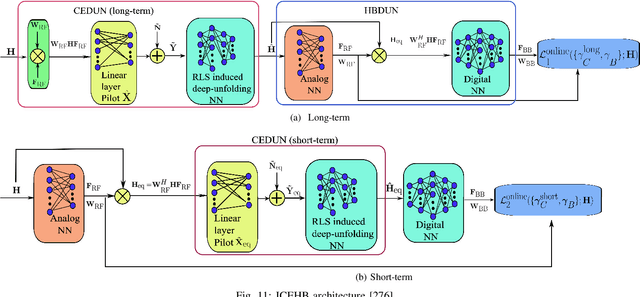

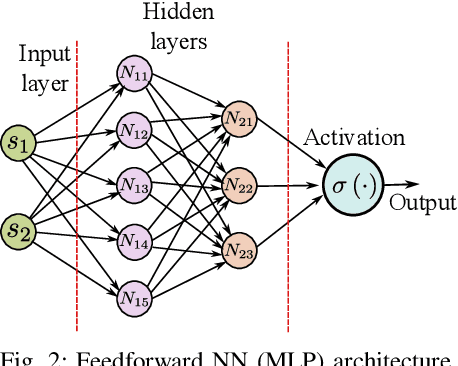

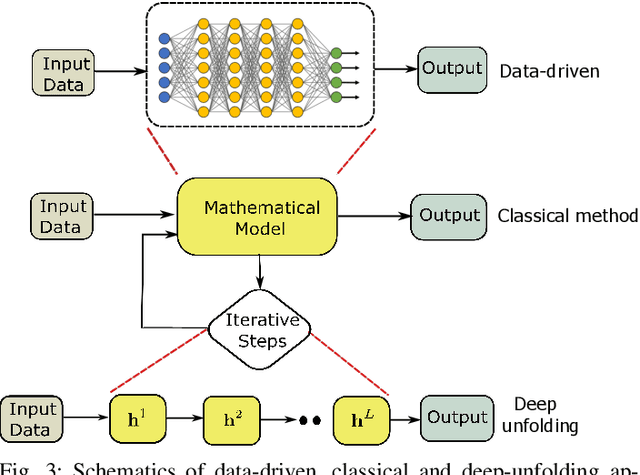

Abstract: The application of machine learning in wireless communications has been extensively explored, with deep unfolding emerging as a powerful model-based technique. Deep unfolding enhances interpretability by transforming complex iterative algorithms into structured layers of deep neural networks (DNNs). This approach seamlessly integrates domain knowledge with deep learning (DL), leveraging the strengths of both methods to simplify complex signal processing tasks in communication systems. To provide a solid foundation, we first present a brief overview of DL and deep unfolding. We then explore the applications of deep unfolding in key areas, including signal detection, channel estimation, beamforming design, decoding for error-correcting codes, sensing and communication, power allocation, and security. Each section focuses on a specific task, highlighting its significance in emerging 6G technologies and reviewing recent advancements in deep unfolding-based solutions. Finally, we discuss the challenges associated with developing deep unfolding techniques and propose potential improvements to enhance their applicability across diverse wireless communication scenarios.

Efficient Channel Prediction for Beyond Diagonal RIS-Assisted MIMO Systems with Channel Aging

Nov 21, 2024

Abstract: Novel reconfigurable intelligent surface (RIS) architectures, known as beyond diagonal RISs (BD-RISs), have been proposed to enhance reflection efficiency and expand RIS capabilities. However, their passive nature, non-diagonal reflection matrix, and the large number of coupled reflecting elements complicate the channel state information (CSI) estimation process. The challenge further escalates in scenarios with fast-varying channels. In this paper, we address this challenge by proposing novel joint channel estimation and prediction strategies with low overhead and high accuracy for two different RIS architectures in a BD-RIS-assisted multiple-input multiple-output system under correlated fast-fading environments with channel aging. The channel estimation procedure utilizes the Tucker2 decomposition with bilinear alternating least squares, which is exploited to decompose the cascade channels of the BD-RIS-assisted system into effective channels of reduced dimension. The channel prediction framework is based on a convolutional neural network combined with an autoregressive predictor. The estimated/predicted CSI is then utilized to optimize the RIS phase shifts, aiming at the maximization of the downlink sum rate. Simulation results demonstrate that our proposed approach is robust to channel aging and exhibits high estimation accuracy. Moreover, our scheme can deliver a high average downlink sum rate, outperforming other state-of-the-art channel estimation methods. The results also reveal a reduction in pilot overhead of up to 98% compared to baseline schemes, while imposing low computational complexity.

Constellation Shaping under Phase Noise Impairment for Sub-THz Communications

Nov 21, 2023

Abstract: The large untapped spectrum in the sub-THz band allows for ultra-high throughput communication to realize many seemingly impossible applications in 6G. One of the challenges in sub-THz radio communications is hardware impairments. Specifically, phase noise is one key hardware impairment, which is accentuated as we increase the frequency and bandwidth. Furthermore, the modest output power of the sub-THz power amplifier demands a limit on the peak-to-average power ratio (PAPR) in signal design. The single carrier frequency domain equalization (SC-FDE) waveform has been identified as a suitable candidate for sub-THz, although some challenges such as phase noise and PAPR still remain to be tackled. In this work, we design a phase noise robust, low PAPR SC-FDE waveform by geometrically shaping the constellation under practical conditions. We formulate the waveform optimization problem in its augmented Lagrangian form and use a back-propagation-inspired technique to obtain a constellation design that is numerically robust to phase noise, while maintaining a low PAPR.

GDOP Based BS Selection for Positioning in mmWave 5G NR Networks

Oct 23, 2023

Abstract: The fifth generation (5G) of mobile communication, supported by millimetre-wave (mmWave) technology and greater base station (BS) densification, facilitates enhanced user equipment (UE) positioning. Therefore, the 5G cellular system is designed with many positioning measurements and special positioning reference signals with a multitude of configurations for a variety of use cases, expecting stringent positioning accuracies. One of the major factors that the accuracy of a particular position estimate depends on is the geometry of the nodes in the system, which can be measured with the geometric dilution of precision (GDOP). Hence, in this paper, we investigate improving the accuracy of UE positioning based on time difference of arrival (TDOA) measurements by exploiting the geometric distribution of BSs in a mixed line-of-sight (LOS) and non-line-of-sight (NLOS) environment. We propose a BS selection algorithm for UE positioning based on the GDOP of the BSs participating in the positioning process. Simulations are conducted for indoor and outdoor scenarios that use antenna arrays with beam-based mmWave NR communication. Results demonstrate that the proposed BS selection can achieve higher positioning accuracy with fewer radio resources compared to the other BS selection methods.
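
As a rough illustration of how GDOP can rank candidate BS sets, the following is a minimal sketch and not the algorithm from the paper. It assumes a 2D geometry, a coarse UE position estimate, the first BS of each set as the TDOA reference, and uncorrelated measurement noise; the function names `tdoa_gdop` and `select_bs` are hypothetical, and the exhaustive search over subsets is only practical for small numbers of BSs.

```python
import math
from itertools import combinations


def tdoa_gdop(ue, bss):
    """GDOP of a 2D TDOA fix at `ue`, taking bss[0] as the reference BS.

    Assumes `ue` does not coincide with any BS position.
    """
    def unit(bs):
        # Unit vector pointing from the BS towards the UE.
        dx, dy = ue[0] - bs[0], ue[1] - bs[1]
        d = math.hypot(dx, dy)
        return dx / d, dy / d

    ref = unit(bss[0])
    # Each TDOA row is the difference between a BS direction and the reference.
    rows = [(ux - ref[0], uy - ref[1]) for ux, uy in map(unit, bss[1:])]
    # Entries of the symmetric 2x2 matrix H^T H = [[a, b], [b, c]].
    a = sum(x * x for x, _ in rows)
    b = sum(x * y for x, y in rows)
    c = sum(y * y for _, y in rows)
    det = a * c - b * b
    if det < 1e-12:
        return math.inf  # degenerate geometry, position not resolvable
    # trace((H^T H)^-1) = (a + c) / det for a 2x2 matrix.
    return math.sqrt((a + c) / det)


def select_bs(ue, bss, k):
    """Brute-force choice of the k-BS subset with the lowest GDOP."""
    return min(combinations(bss, k), key=lambda s: tdoa_gdop(ue, s))
```

In this simplified form the GDOP value depends on which BS is taken as the reference, so a fuller treatment would weight the rows by the TDOA noise covariance.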

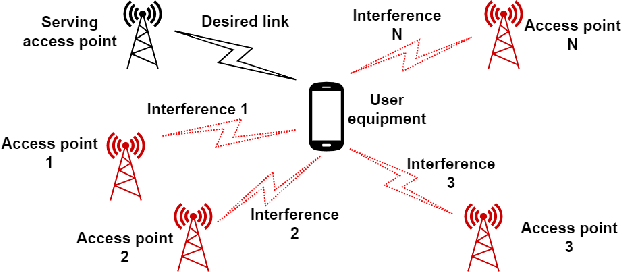

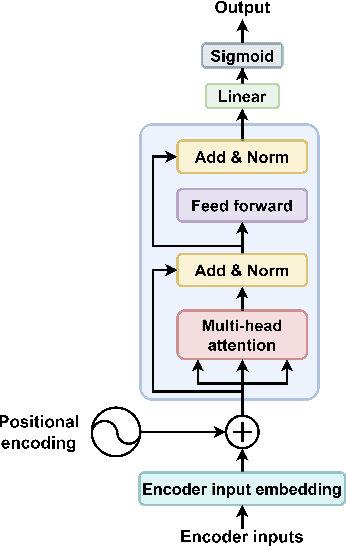

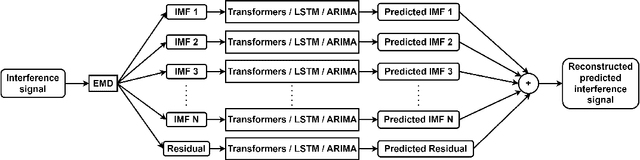

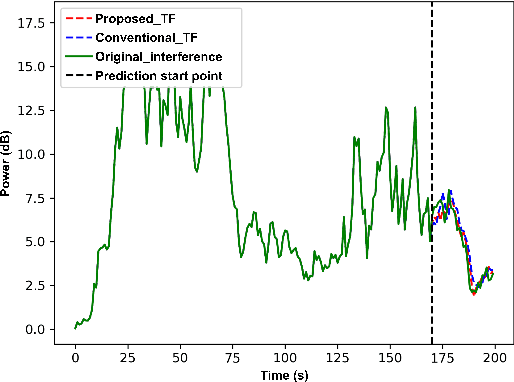

Decomposition Based Interference Management Framework for Local 6G Networks

Oct 09, 2023

Abstract: Managing inter-cell interference is among the major challenges in a wireless network, more so when strict quality of service needs to be guaranteed, such as in ultra-reliable low latency communications (URLLC) applications. This study introduces a novel intelligent interference management framework for a local 6G network that allocates resources based on interference prediction. The proposed algorithm involves an advanced signal pre-processing technique known as empirical mode decomposition, followed by prediction of each decomposed component using the sequence-to-one transformer algorithm. The predicted interference power is then used to estimate the future signal-to-interference-plus-noise ratio and subsequently allocate resources to guarantee the high reliability required by URLLC applications. Finally, an interference cancellation scheme is explored based on the interference signal predicted by the transformer model. The proposed sequence-to-one transformer model is shown to be robust for interference prediction. The proposed scheme is numerically evaluated against two baseline algorithms, and it is found that the root mean squared error is reduced by up to 55% over a baseline scheme.

Minimizing Energy Consumption in MU-MIMO via Antenna Muting by Neural Networks with Asymmetric Loss

Jun 08, 2023

Abstract: Transmit antenna muting (TAM) in multiple-user multiple-input multiple-output (MU-MIMO) networks reduces the power consumption of the base station (BS) by utilizing only a subset of the antennas in the BS. In this paper, we consider the downlink transmission of an MU-MIMO network where TAM is formulated to minimize the number of active antennas in the BS while guaranteeing the per-user throughput requirements. To address the computational complexity of the combinatorial optimization problem, we propose an algorithm called neural antenna muting (NAM) with an asymmetric custom loss function. NAM is a classification neural network trained in a supervised manner. The classification error in this scheme leads to either sub-optimal energy consumption or lower quality of service (QoS) for the communication link. We control the classification error probability distribution by designing an asymmetric loss function such that the erroneous classification outputs are more likely to result in fulfilling the QoS requirements. Furthermore, we present three heuristic algorithms and compare them with NAM. Using a 3GPP-compliant system-level simulator, we show that NAM achieves approximately 73% energy saving compared to the full antenna configuration in the BS, with approximately 95% reliability in achieving the user throughput requirements, while being around 1000× and 24× less computationally intensive than the greedy heuristic algorithm and the fixed column antenna muting algorithm, respectively.
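
The abstract does not give the form of the asymmetric loss, so the following is only a hypothetical sketch of the general idea. It assumes each class index is a number of active antennas, and it adds to the usual cross-entropy a penalty that costs more when probability mass falls on too few antennas (risking a QoS violation) than on too many (wasting energy). The function name and the weights `under` and `over` are illustrative assumptions, not values from the paper.

```python
import math


def asymmetric_loss(probs, true_k, under=5.0, over=1.0):
    """Cross-entropy plus an asymmetric expected-cost penalty.

    probs[k] is the predicted probability of class k (k active antennas,
    an assumed encoding); true_k is the smallest class that meets QoS.
    """
    # Standard cross-entropy term, clamped to avoid log(0).
    loss = -math.log(max(probs[true_k], 1e-12))
    for k, p in enumerate(probs):
        gap = true_k - k
        if gap > 0:
            loss += p * under * gap   # too few antennas: QoS at risk
        elif gap < 0:
            loss += p * over * -gap   # too many antennas: energy wasted
    return loss
```

With `under` larger than `over`, a model trained on such a loss is pushed to err on the side of keeping more antennas active, which matches the stated goal that misclassifications should still tend to satisfy the QoS requirements.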

Predictive Resource Allocation for URLLC using Empirical Mode Decomposition

Apr 04, 2023

Abstract: Effective resource allocation is a crucial requirement to achieve the stringent performance targets of ultra-reliable low-latency communication (URLLC) services. Predicting future interference and utilizing it to design efficient interference management algorithms is one way to allocate resources for URLLC services effectively. This paper proposes an empirical mode decomposition (EMD) based hybrid prediction method to predict the interference and allocate downlink resources based on the prediction results. EMD is used to decompose the past interference values experienced by the user equipment. Long short-term memory and auto-regressive integrated moving average methods are used to predict the decomposed components. The final predicted interference value is reconstructed from the individual predicted values of the decomposed components. It is found that such a decomposition-based prediction method reduces the root mean squared error of the prediction by 20-25%. The proposed resource allocation algorithm utilizing the EMD-based interference prediction was found to achieve near-optimal allocation of resources and correspondingly results in 2-3 orders of magnitude lower outage compared to resource allocation based on a state-of-the-art baseline prediction algorithm.

Wireless End-to-End Image Transmission System using Semantic Communications

Feb 27, 2023

Abstract: Semantic communication is considered the future of mobile communication. It aims to transmit data beyond Shannon's theorem of communications by conveying the semantic meaning of the data rather than reconstructing the data bit by bit at the receiver's end. The semantic communication paradigm aims to address the limited-bandwidth problem in the transmission of modern high-volume multimedia content. Integrating AI technologies with 6G communication networks has paved the way to develop semantic communication-based end-to-end communication systems. In this study, we have implemented a semantic communication-based end-to-end image transmission system, and we discuss potential design considerations in developing semantic communication systems in conjunction with physical channel characteristics. For the transmission task, a pre-trained GAN is used at the receiver to reconstruct a realistic image from the semantically segmented image at the receiver input. The semantic segmentation task at the transmitter (encoder) and the GAN at the receiver (decoder) are trained on a common knowledge base, the COCO-Stuff dataset. The research shows that the resource gain in the form of bandwidth saving is immense when the semantic segmentation map is transmitted through the physical channel instead of the ground truth image, as is done in conventional communication systems. Furthermore, the research studies the effect of physical channel distortions and quantization noise on semantic communication-based multimedia content transmission.
