Thushan Sivalingam

Decomposition Based Interference Management Framework for Local 6G Networks

Oct 09, 2023

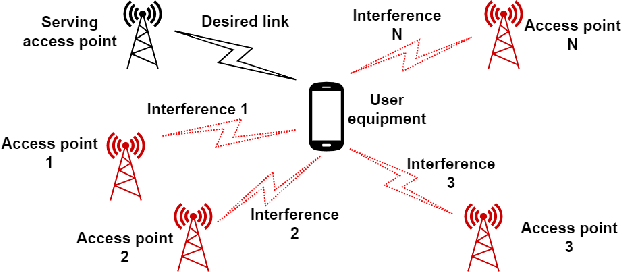

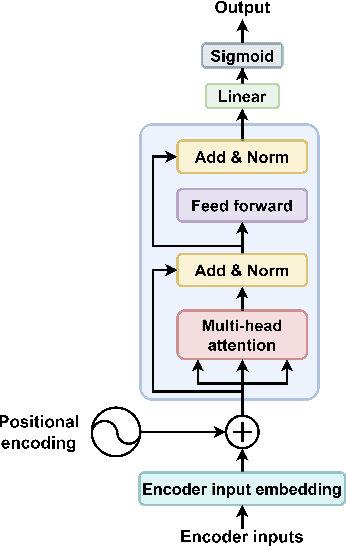

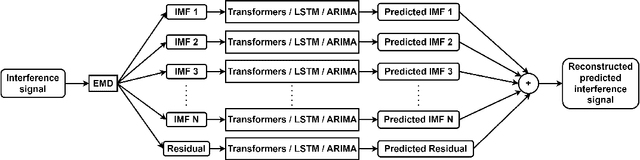

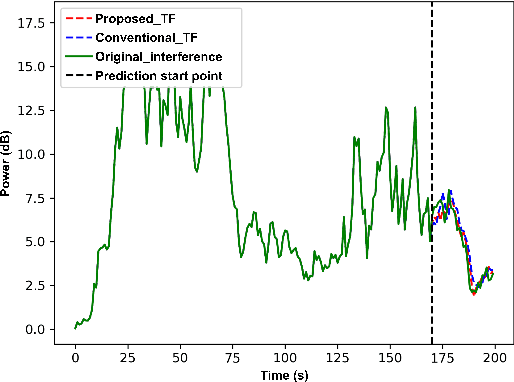

Abstract: Managing inter-cell interference is among the major challenges in a wireless network, more so when strict quality of service needs to be guaranteed, such as in ultra-reliable low-latency communications (URLLC) applications. This study introduces a novel intelligent interference management framework for a local 6G network that allocates resources based on interference prediction. The proposed algorithm applies an advanced signal pre-processing technique known as empirical mode decomposition, followed by prediction of each decomposed component using a sequence-to-one transformer algorithm. The predicted interference power is then used to estimate the future signal-to-interference-plus-noise ratio and subsequently to allocate resources that guarantee the high reliability required by URLLC applications. Finally, an interference cancellation scheme based on the interference signal predicted by the transformer model is explored. The proposed sequence-to-one transformer model proves robust for interference prediction. The proposed scheme is numerically evaluated against two baseline algorithms, and the root mean squared error is found to be reduced by up to 55% relative to a baseline scheme.
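As a rough illustration of the step from predicted interference power to a future SINR estimate, the sketch below applies the textbook definition SINR = S / (I + N) and also shows the root mean squared error metric used for evaluation. The function names, the linear-power units (watts), and the example values are assumptions made for illustration; the abstract does not give the paper's exact formulation.

```python
import math

def predicted_sinr_db(signal_power_w, predicted_interference_w, noise_power_w):
    """Estimate future SINR in dB from a predicted interference power (all inputs in watts)."""
    sinr = signal_power_w / (predicted_interference_w + noise_power_w)
    return 10.0 * math.log10(sinr)

def rmse(predicted, actual):
    """Root mean squared error between two equal-length sequences."""
    squared = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return math.sqrt(sum(squared) / len(squared))

# Hypothetical values: 1 nW desired signal, 90 pW predicted interference, 10 pW noise.
sinr_db = predicted_sinr_db(1e-9, 9e-11, 1e-11)
```

A resource allocator would then choose the amount of resources so that the reliability target holds at the estimated SINR; that mapping is specific to the paper and is not reproduced here.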

Predictive Resource Allocation for URLLC using Empirical Mode Decomposition

Apr 04, 2023

Abstract: Effective resource allocation is a crucial requirement to achieve the stringent performance targets of ultra-reliable low-latency communication (URLLC) services. Predicting future interference and utilizing it to design efficient interference management algorithms is one way to allocate resources for URLLC services effectively. This paper proposes an empirical mode decomposition (EMD) based hybrid prediction method to predict the interference and allocate downlink resources based on the prediction results. EMD is used to decompose the past interference values experienced by the user equipment. Long short-term memory and auto-regressive integrated moving average methods are used to predict the decomposed components. The final predicted interference value is reconstructed from the individual predicted values of the decomposed components. It is found that such a decomposition-based prediction method reduces the root mean squared error of the prediction by $20\%$ to $25\%$. The proposed resource allocation algorithm utilizing the EMD-based interference prediction was found to achieve near-optimal allocation of resources and correspondingly results in $2$ to $3$ orders of magnitude lower outage compared to resource allocation based on a state-of-the-art baseline prediction algorithm.
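The decompose, predict, and reconstruct pattern described above can be sketched in a few lines. This is not the paper's method: as loudly labeled stand-ins, a moving-average split into trend and residual replaces EMD, and a least-squares autoregressive fit replaces the LSTM and ARIMA predictors. All function names and parameters (`window`, `order`) are hypothetical.

```python
import numpy as np

def split_trend_residual(x, window=5):
    """Stand-in for EMD: split x into a smooth trend and a residual that sum back to x."""
    kernel = np.ones(window) / window
    # Edge-pad so the moving average has the same length as the input.
    padded = np.pad(x, (window // 2, window - 1 - window // 2), mode="edge")
    trend = np.convolve(padded, kernel, mode="valid")
    return [trend, x - trend]

def ar_predict_next(component, order=4):
    """Stand-in for LSTM/ARIMA: one-step-ahead forecast from a least-squares AR(order) fit."""
    rows = np.array([component[i:i + order] for i in range(len(component) - order)])
    targets = component[order:]
    coeffs, *_ = np.linalg.lstsq(rows, targets, rcond=None)
    return float(component[-order:] @ coeffs)

def predict_interference(history, window=5, order=4):
    """Predict each component separately, then sum the forecasts to reconstruct the value."""
    components = split_trend_residual(np.asarray(history, dtype=float), window)
    return sum(ar_predict_next(c, order) for c in components)

# Hypothetical interference history: a slow oscillation plus small fluctuations.
rng = np.random.default_rng(0)
history = 1.0 + 0.5 * np.sin(0.2 * np.arange(100)) + 0.05 * rng.standard_normal(100)
next_value = predict_interference(history)
```

The point of the structure is that each component is simpler to forecast than the raw series, and because the components sum back to the original signal, summing the per-component forecasts yields the final prediction.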

Wireless End-to-End Image Transmission System using Semantic Communications

Feb 27, 2023

Abstract: Semantic communication is considered the future of mobile communication. It aims to transmit data beyond Shannon's theorem of communications by conveying the semantic meaning of the data rather than reconstructing the data bit by bit at the receiver's end. The semantic communication paradigm aims to address the limited-bandwidth problem in transmitting the content of modern high-volume multimedia applications. Integrating AI technologies with 6G communication networks has paved the way for semantic communication-based end-to-end communication systems. In this study, we implement a semantic communication-based end-to-end image transmission system and discuss potential design considerations in developing semantic communication systems in conjunction with physical channel characteristics. A pre-trained GAN is used at the receiver as the transmission task to reconstruct a realistic image from the semantically segmented image at the receiver input. The semantic segmentation task at the transmitter (encoder) and the GAN at the receiver (decoder) are trained on a common knowledge base, the COCO-Stuff dataset. The results show that, in contrast to conventional communication systems, transmitting the semantic segmentation map through the physical channel instead of the ground-truth image yields an immense resource gain in the form of bandwidth savings. Furthermore, the study examines the effect of physical channel distortions and quantization noise on semantic communication-based multimedia content transmission.
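To make the bandwidth argument concrete, the sketch below compares the uncompressed size of an RGB image with that of a per-pixel class-label map of the same resolution. The 24-bit RGB depth, the image size, and the class count are placeholder assumptions, not figures from the paper, and any source coding applied to either representation would change the ratio.

```python
import math

def raw_bits(height, width, bits_per_pixel):
    """Uncompressed size in bits of an image-like array."""
    return height * width * bits_per_pixel

def label_bits_per_pixel(num_classes):
    """Minimum fixed-length bits needed to index one of num_classes labels."""
    return max(1, math.ceil(math.log2(num_classes)))

def raw_saving_ratio(height, width, num_classes, rgb_bits_per_pixel=24):
    """Ratio of raw RGB size to raw segmentation-map size (before any compression)."""
    rgb = raw_bits(height, width, rgb_bits_per_pixel)
    seg = raw_bits(height, width, label_bits_per_pixel(num_classes))
    return rgb / seg

# Hypothetical setting: a 256x256 image and a label set of up to 256 classes.
ratio = raw_saving_ratio(256, 256, 256)
```

This fixed-length count is only a baseline. Segmentation maps consist of large uniform regions, so they typically compress far better than natural images, which is one plausible reason the saving reported in practice can be much larger.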

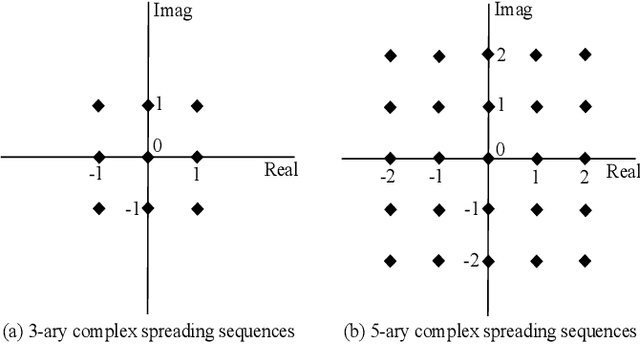

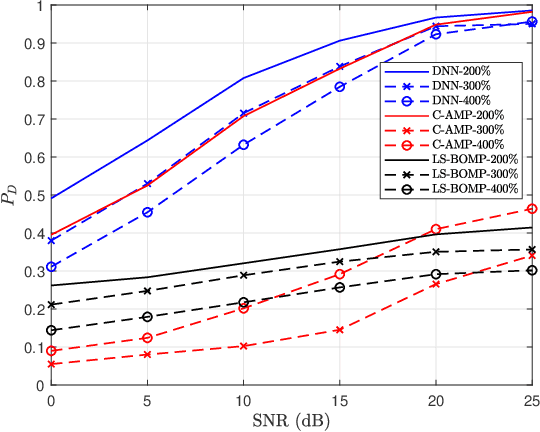

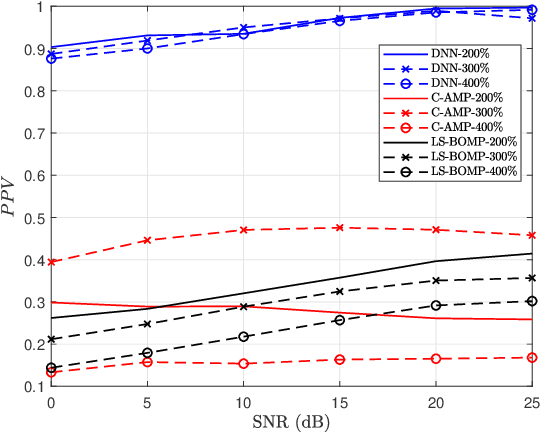

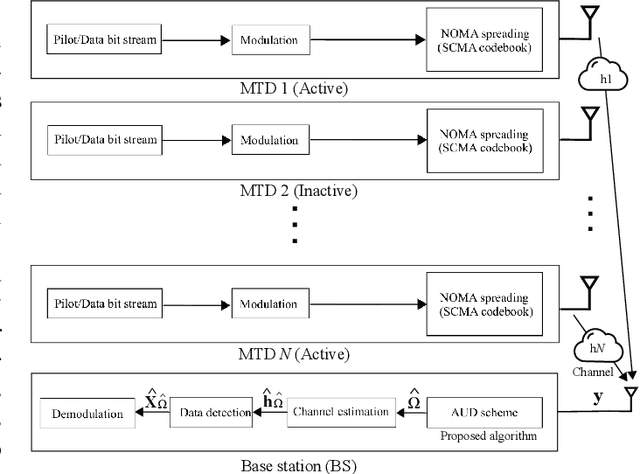

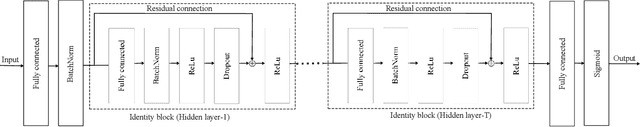

Deep Neural Network-Based Blind Multiple User Detection for Grant-free Multi-User Shared Access

Jun 21, 2021

Abstract: Multi-user shared access (MUSA) has been introduced as an advanced code-domain non-orthogonal scheme based on complex spreading sequences to support a massive number of machine-type communications (MTC) devices. In this paper, we propose a novel deep neural network (DNN)-based multiple user detection (MUD) scheme for grant-free MUSA systems. During the training phase, the DNN-based MUD model learns the structure of the sensing matrix, the randomly distributed noise, and the inter-device interference through several hidden nodes, neuron activation units, and a suitable loss function. The thoroughly trained DNN model is capable of distinguishing the active devices in the received signal without any a priori knowledge of the device sparsity level or the channel state information. Our numerical evaluation shows that with a higher percentage of active devices, the DNN-MUD achieves a significantly increased probability of detection compared to the conventional approaches.
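A minimal sketch of how training pairs for such a detector might be generated is shown below: a few devices are active, each spreads a unit pilot with its own complex sequence, and the network input is the noisy superposition. Everything here is an assumption for illustration, including the ternary real and imaginary sequence elements, the Rayleigh-fading channel, the dimensions, and the function names; the abstract does not specify the paper's simulation setup.

```python
import numpy as np

def make_codebook(spread_len, num_devices, rng):
    """Hypothetical spreading codebook: real and imaginary parts drawn from {-1, 0, 1}."""
    levels = np.array([-1.0, 0.0, 1.0])
    real = rng.choice(levels, size=(spread_len, num_devices))
    imag = rng.choice(levels, size=(spread_len, num_devices))
    return real + 1j * imag

def complex_gaussian(size, rng):
    """Unit-variance circularly symmetric complex Gaussian samples."""
    return (rng.standard_normal(size) + 1j * rng.standard_normal(size)) / np.sqrt(2)

def make_sample(codebook, num_active, snr_db, rng):
    """Return (features, activity): the stacked received signal and the 0/1 activity labels."""
    spread_len, num_devices = codebook.shape
    activity = np.zeros(num_devices)
    activity[rng.choice(num_devices, size=num_active, replace=False)] = 1.0
    channel = complex_gaussian(num_devices, rng)  # assumed unknown to the detector
    noise = 10 ** (-snr_db / 20) * complex_gaussian(spread_len, rng)
    received = codebook @ (channel * activity) + noise
    # Real-valued networks usually take real and imaginary parts as separate inputs.
    features = np.concatenate([received.real, received.imag])
    return features, activity

rng = np.random.default_rng(0)
codebook = make_codebook(spread_len=8, num_devices=20, rng=rng)
features, activity = make_sample(codebook, num_active=3, snr_db=10.0, rng=rng)
```

A detector trained on many such pairs maps `features` to per-device activity scores, so neither the channel coefficients nor the number of active devices needs to be supplied at inference time.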

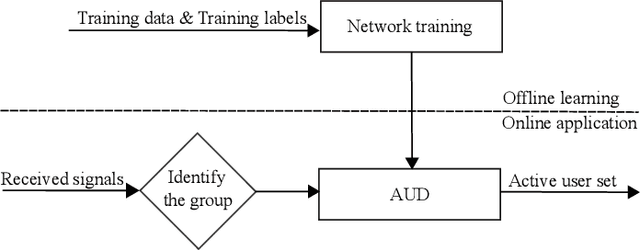

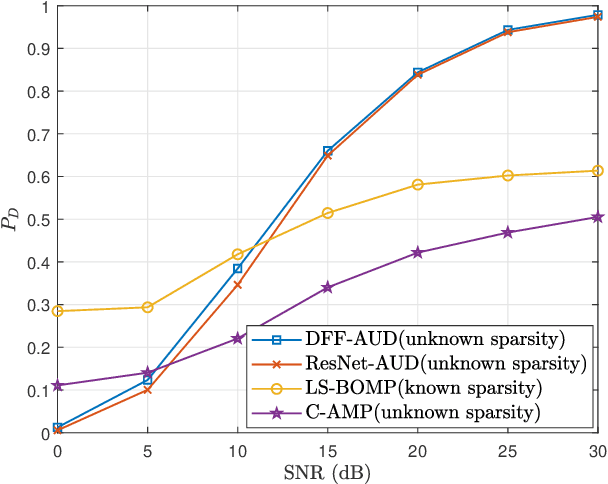

Deep Learning-Based Active User Detection for Grant-free SCMA Systems

Jun 21, 2021

Abstract: Grant-free random access and uplink non-orthogonal multiple access (NOMA) have been introduced to reduce transmission latency and signaling overhead in massive machine-type communication (mMTC). In this paper, we propose two novel group-based deep neural network active user detection (AUD) schemes for the grant-free sparse code multiple access (SCMA) system in the mMTC uplink framework. The proposed AUD schemes learn the nonlinear mapping, i.e., the multi-dimensional codebook structure and the channel characteristics. This is accomplished through the received signal, which incorporates the sparse structure of device activity, together with the training dataset. Moreover, the offline pre-trained model is able to detect the active devices without any channel state information or prior knowledge of the device sparsity level. Simulation results show that with several active devices, the proposed schemes obtain more than twice the probability of detection compared to the conventional AUD schemes over the signal-to-noise ratio range of interest.
