Samad Ali

Conditional Denoising Diffusion Autoencoders for Wireless Semantic Communications

Sep 26, 2025

Abstract: Semantic communication (SemCom) systems aim to learn the mapping from low-dimensional semantics to high-dimensional ground truth. While this is more akin to a "domain translation" problem, existing frameworks typically emphasize channel-adaptive neural encoding-decoding schemes and do not fully explore the signal distribution. Moreover, such methods have so far employed autoencoder-based architectures, where the encoder is tightly coupled to a matched decoder, causing scalability issues in practice. To address these gaps, diffusion autoencoder models are proposed for wireless SemCom. The goal is to learn a "semantic-to-clean" mapping from the semantic space to the ground-truth probability distribution. A neural encoder at the semantic transmitter extracts the high-level semantics, and a conditional diffusion model (CDiff) at the semantic receiver exploits the source distribution for signal-space denoising, while the received semantic latents are incorporated as the conditioning input to "steer" the decoding process towards the semantics intended by the transmitter. It is analytically proved that the proposed decoder model is a consistent estimator of the ground-truth data. Furthermore, extensive simulations on the CIFAR-10 and MNIST datasets are provided along with design insights, highlighting the performance compared to legacy autoencoders and variational autoencoders (VAEs). Simulations are further extended to multi-user SemCom, identifying the dominant factors in a more realistic setup.
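To make the conditioning idea concrete, the sketch below runs DDPM ancestral sampling on a scalar signal where the denoiser receives the semantic latent `z` as an extra input. It is a toy under loud assumptions: the clean signal given `z` is taken to be Gaussian, x0 ~ N(z, sigma^2), so the noise predictor can be written in closed form (the MMSE estimate of the noise) instead of being a trained network. The schedule values and function names are hypothetical, and this is not the paper's CDiff architecture.

```python
import math
import random

# Assumed linear noise schedule; T and the beta range are illustrative choices.
T = 100
BETAS = [1e-3 + (0.1 - 1e-3) * t / (T - 1) for t in range(T)]
ALPHAS = [1.0 - b for b in BETAS]
ALPHA_BARS = []
_prod = 1.0
for _a in ALPHAS:
    _prod *= _a
    ALPHA_BARS.append(_prod)


def predict_noise(x_t, t, z, sigma=0.5):
    """Closed-form MMSE noise predictor E[eps | x_t, z].

    Toy assumption: given the semantic latent z, the clean signal is
    x0 ~ N(z, sigma^2). A real conditional decoder would use a trained network here.
    """
    ab = ALPHA_BARS[t]
    var_xt = ab * sigma ** 2 + (1.0 - ab)  # variance of x_t given z
    return math.sqrt(1.0 - ab) * (x_t - math.sqrt(ab) * z) / var_xt


def conditional_sample(z, rng):
    """DDPM ancestral sampling steered by the conditioning input z."""
    x = rng.gauss(0.0, 1.0)  # start from pure noise
    for t in reversed(range(T)):
        eps = predict_noise(x, t, z)
        # Posterior mean of x_{t-1} expressed through the predicted noise.
        mean = (x - BETAS[t] / math.sqrt(1.0 - ALPHA_BARS[t]) * eps) / math.sqrt(ALPHAS[t])
        # No noise is added on the final step.
        x = mean + math.sqrt(BETAS[t]) * rng.gauss(0.0, 1.0) if t > 0 else mean
    return x
```

Averaging `conditional_sample(2.0, random.Random(0))` over a few thousand draws should give a value close to 2.0, while changing `z` moves the samples accordingly: the same noise-to-signal chain is pulled towards whatever the conditioning input indicates.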

A Bayesian Framework of Deep Reinforcement Learning for Joint O-RAN/MEC Orchestration

Dec 26, 2023

Abstract: Multi-access Edge Computing (MEC) can be implemented together with Open Radio Access Network (O-RAN) over commodity platforms to offer low-cost deployment and bring services closer to end users. In this paper, a joint O-RAN/MEC orchestration using a Bayesian deep reinforcement learning (RL)-based framework is proposed that jointly controls the O-RAN functional splits, the allocated resources and hosting locations of the O-RAN/MEC services across geo-distributed platforms, and the routing for each O-RAN/MEC data flow. The goal is to minimize the long-term overall network operation cost and maximize the MEC performance criterion while adapting to possibly time-varying O-RAN/MEC demands and resource availability. This orchestration problem is formulated as a Markov decision process (MDP). However, the system consists of multiple base stations (BSs) that share the same resources and serve heterogeneous demands, and their parameters have non-trivial relations. Consequently, finding the exact model of the underlying system is impractical, and the formulated MDP results in a large state space with a multi-dimensional discrete action. To address these modeling and dimensionality issues, a novel model-free RL agent is proposed for our solution framework. The agent is built from a Double Deep Q-network (DDQN) that tackles the large state space and is then combined with action branching, an action decomposition method that effectively handles the multi-dimensional discrete action with linearly increasing complexity. Further, an efficient exploration-exploitation strategy under a Bayesian framework using Thompson sampling is proposed to improve the learning performance and expedite convergence. Trace-driven simulations are performed using an O-RAN-compliant model. The results show that our approach is data-efficient (i.e., converges faster) and increases the returned reward by 32% compared to its non-Bayesian version.
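Action branching keeps the output layer linear in the number of sub-actions: with one head per action dimension, the agent scores sum(n_d) sub-actions instead of prod(n_d) joint actions. The sketch below illustrates that count, Gaussian Thompson sampling applied independently in each branch, and a double-Q target averaged over branches (one common variant of the branching DDQN target). The branch sizes, the per-sub-action Gaussian posterior given as (mean, std), and all function names are illustrative assumptions, not the paper's exact posterior model.

```python
import math
import random


def output_counts(branch_sizes):
    """Network outputs with action branching vs. a flat joint-action head."""
    return sum(branch_sizes), math.prod(branch_sizes)


def thompson_branch_action(posteriors, rng):
    """Pick one sub-action per branch by Thompson sampling.

    posteriors[d][a] = (mean, std) of an assumed Gaussian posterior over the
    Q-value of sub-action a in branch d.
    """
    action = []
    for branch in posteriors:
        draws = [rng.gauss(mu, sd) for mu, sd in branch]  # one posterior sample each
        action.append(max(range(len(draws)), key=draws.__getitem__))
    return action


def double_q_target(reward, gamma, q_online_next, q_target_next, done=False):
    """Branching double-Q target: the online net selects, the target net
    evaluates, and the per-branch values are averaged."""
    if done:
        return reward
    values = []
    for online, target in zip(q_online_next, q_target_next):
        best = max(range(len(online)), key=online.__getitem__)
        values.append(target[best])
    return reward + gamma * sum(values) / len(values)
```

For example, `output_counts([3, 4, 5])` returns `(12, 60)`. In the orchestration setting the branches could map to decisions such as functional split, hosting location, and routing; that mapping is hypothetical here.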

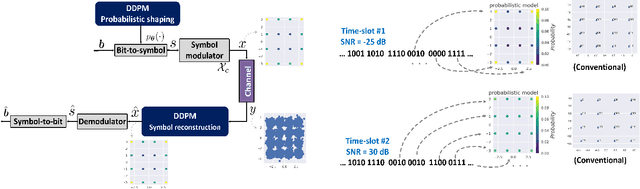

Generative AI-Based Probabilistic Constellation Shaping With Diffusion Models

Nov 15, 2023

Abstract: Diffusion models are at the vanguard of generative AI research, with renowned solutions such as Imagen by Google Brain and DALL-E 3 by OpenAI. Nevertheless, the potential merits of diffusion models for communication engineering applications are not yet fully understood. In this paper, we aim to unleash the power of generative AI for the PHY design of constellation symbols in communication systems. Although the geometry of constellations is predetermined by networking standards, e.g., quadrature amplitude modulation (QAM), probabilistic shaping can design the probability of occurrence (generation) of constellation symbols. This can help improve the information rate and decoding performance of communication systems. We exploit the "denoise-and-generate" characteristics of denoising diffusion probabilistic models (DDPMs) for probabilistic constellation shaping. The key idea is to learn to generate constellation symbols out of noise, "mimicking" the way the receiver performs symbol reconstruction. This way, we make the constellation symbols sent by the transmitter and what is inferred (reconstructed) at the receiver as similar as possible, resulting in as few mismatches as possible. Our results show that the generative AI-based scheme outperforms a deep neural network (DNN)-based benchmark and uniform shaping, while providing network resilience as well as robust out-of-distribution performance under low-SNR regimes and non-Gaussian assumptions. Numerical evaluations highlight a 30% improvement in terms of cosine similarity and a threefold improvement in terms of mutual information compared to the DNN-based approach for 64-QAM geometry.
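The split between a fixed geometry and designed probabilities can be illustrated numerically. The sketch keeps the 64-QAM points fixed and only changes the probability mass function (PMF) over them, then reports entropy (bits per symbol) and average energy. The Maxwell-Boltzmann family used here, p(x) proportional to exp(-lam * |x|^2), is a classical shaping choice picked purely for illustration; it is not the DDPM-based scheme of the paper, and the function names are hypothetical.

```python
import math


def qam_points(m=64):
    """Square M-QAM grid with odd integer levels (unnormalized)."""
    side = math.isqrt(m)
    levels = [2 * i - (side - 1) for i in range(side)]  # e.g. -7, -5, ..., 7
    return [complex(i, q) for i in levels for q in levels]


def maxwell_boltzmann_pmf(points, lam):
    """p(x) proportional to exp(-lam * |x|^2); lam = 0 gives uniform shaping."""
    weights = [math.exp(-lam * (p.real ** 2 + p.imag ** 2)) for p in points]
    total = sum(weights)
    return [w / total for w in weights]


def entropy_bits(pmf):
    """Entropy of the symbol distribution in bits per symbol."""
    return -sum(p * math.log2(p) for p in pmf if p > 0)


def average_energy(points, pmf):
    """Mean symbol energy E[|x|^2] under the given PMF."""
    return sum(p * (x.real ** 2 + x.imag ** 2) for x, p in zip(points, pmf))
```

With `lam = 0` the 64 points are equiprobable (6 bits per symbol, average energy 42 on this unnormalized grid); larger `lam` concentrates probability on the inner points, lowering both entropy and average energy while the geometry stays untouched.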

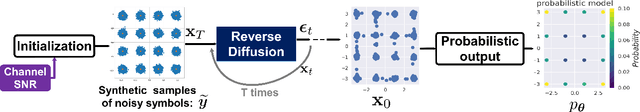

Denoising Diffusion Probabilistic Models for Hardware-Impaired Communication Systems: Towards Wireless Generative AI

Oct 30, 2023

Abstract: Thanks to the outstanding achievements of state-of-the-art generative models like ChatGPT and diffusion models, generative AI has gained substantial attention across various industrial and academic domains. In this paper, denoising diffusion probabilistic models (DDPMs) are proposed for a practical finite-precision wireless communication system with hardware-impaired transceivers. The intuition behind DDPM is to decompose the data generation process over the so-called "denoising" steps. Inspired by this, a DDPM-based receiver is proposed for a practical wireless communication scheme that faces realistic non-idealities, including hardware impairments (HWI), channel distortions, and quantization errors. It is shown that our approach provides network resilience under low-SNR regimes, near-invariant reconstruction performance with respect to different HWI levels and quantization errors, and robust out-of-distribution performance against non-Gaussian noise. Moreover, the reconstruction performance of our scheme is evaluated in terms of cosine similarity and mean-squared error (MSE), showing more than 25 dB improvement compared to conventional deep neural network (DNN)-based receivers.
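The non-idealities listed above can be combined in a simple signal model for experimentation. The sketch assumes a real-valued, flat-fading link with the widely used aggregate hardware-impairment model, in which transmitter and receiver distortions are Gaussian with variance proportional to signal power (levels `kappa_t`, `kappa_r`), followed by a uniform `bits`-bit quantizer; it also computes the cosine similarity and MSE metrics named in the abstract. All parameter names and values are assumptions, and the code stops at the received signal `y`, which is where a DDPM-based receiver would operate.

```python
import math
import random


def quantize(v, bits, amp):
    """Uniform mid-rise quantizer with 2**bits levels over [-amp, amp]."""
    levels = 2 ** bits
    step = 2.0 * amp / levels
    idx = math.floor((min(max(v, -amp), amp) + amp) / step)
    idx = min(idx, levels - 1)  # v == amp falls into the top cell
    return -amp + (idx + 0.5) * step


def impaired_channel(x, h, kappa_t, kappa_r, noise_std, bits, amp, rng):
    """y = Q(h * (x + eta_t) + eta_r + n), applied elementwise (assumed model)."""
    p_sig = sum(v * v for v in x) / len(x)  # average transmit power
    y = []
    for v in x:
        eta_t = rng.gauss(0.0, kappa_t * math.sqrt(p_sig))  # transmitter distortion
        r = h * (v + eta_t)
        eta_r = rng.gauss(0.0, kappa_r * abs(h) * math.sqrt(p_sig))  # receiver distortion
        y.append(quantize(r + eta_r + rng.gauss(0.0, noise_std), bits, amp))
    return y


def cosine_similarity(a, b):
    dot = sum(u * v for u, v in zip(a, b))
    return dot / (math.sqrt(sum(u * u for u in a)) * math.sqrt(sum(v * v for v in b)))


def mse(a, b):
    return sum((u - v) ** 2 for u, v in zip(a, b)) / len(a)
```

With all impairments set to zero and a fine quantizer, only the quantization error (at most half a step per sample) remains; raising `kappa_t`, `kappa_r`, or `noise_std`, or lowering `bits`, degrades both metrics for an unprocessed `y`.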

WiGenAI: The Symphony of Wireless and Generative AI via Diffusion Models

Oct 12, 2023

Abstract: Innovative foundation models, such as GPT-3 and Stable Diffusion, have brought about a paradigm shift in artificial intelligence (AI) towards generative AI-based systems. In unison, from a data communication and networking perspective, AI and machine learning (AI/ML) algorithms are envisioned to be pervasively incorporated into future generations of wireless communication systems, highlighting the need for novel AI-native solutions for emergent communication scenarios. In this article, we outline the applications of generative AI in wireless communication systems to lay the foundations for research in this field. Diffusion-based generative models, as the new state-of-the-art paradigm of generative models, are introduced, and their applications in wireless communication systems are discussed. Two case studies are also presented to showcase how diffusion models can be exploited for the development of resilient AI-native communication systems. Specifically, we propose denoising diffusion probabilistic models (DDPMs) for a wireless communication scheme with non-ideal transceivers, where a 30% improvement is achieved in terms of bit error rate. As the second application, DDPMs are employed at the transmitter to shape the constellation symbols, highlighting robust out-of-distribution performance. Finally, future directions and open issues for the development of generative AI-based wireless systems are discussed to promote future research endeavors towards wireless generative AI (WiGenAI).

Denoising Diffusion Probabilistic Models for Hardware-Impaired Communications

Sep 15, 2023

Abstract: Generative AI has received significant attention across a spectrum of diverse industrial and academic domains, thanks to the remarkable results achieved by deep generative models such as generative pre-trained transformers (GPT) and diffusion models. In this paper, we explore the applications of denoising diffusion probabilistic models (DDPMs) in wireless communication systems under practical assumptions such as hardware impairments (HWI), the low-SNR regime, and quantization error. Diffusion models are a new class of state-of-the-art generative models that have already showcased notable success, with popular examples by OpenAI and Google Brain. The intuition behind DDPM is to decompose the data generation process over small "denoising" steps. Inspired by this, we propose a denoising diffusion model-based receiver for a practical wireless communication scheme that provides network resilience in low-SNR regimes and under non-Gaussian noise, different HWI levels, and quantization error. We evaluate the reconstruction performance of our scheme in terms of bit error rate (BER) and mean-squared error (MSE). Our results show that BER improvements of 30% and 20% can be achieved compared to deep neural network (DNN)-based receivers in AWGN and non-Gaussian scenarios, respectively.
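The decomposition into small denoising steps rests on the DDPM forward process, which has a closed form: x_t = sqrt(abar_t) x_0 + sqrt(1 - abar_t) eps, with abar_t = prod_s (1 - beta_s) and eps ~ N(0, I). The sketch builds the linear beta schedule of the original DDPM paper (1e-4 to 0.02 over 1000 steps), draws a noisy sample together with the noise eps that an epsilon-predicting network is trained to output, and shows that x_0 is recovered exactly when eps is known. Function names are illustrative, and this is not the paper's receiver code.

```python
import math
import random


def linear_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    """Return (betas, alpha_bars) for a linear DDPM noise schedule."""
    betas = [beta_start + (beta_end - beta_start) * t / (T - 1) for t in range(T)]
    alpha_bars, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        alpha_bars.append(prod)
    return betas, alpha_bars


def forward_noise(x0, t, alpha_bars, rng):
    """Sample x_t ~ q(x_t | x_0) in one shot; also return the noise (training target)."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    a = math.sqrt(alpha_bars[t])
    s = math.sqrt(1.0 - alpha_bars[t])
    return [a * u + s * e for u, e in zip(x0, eps)], eps


def recover_x0(xt, eps, t, alpha_bars):
    """Invert the forward step given the noise: x0 = (x_t - sqrt(1 - abar) eps) / sqrt(abar)."""
    a = math.sqrt(alpha_bars[t])
    s = math.sqrt(1.0 - alpha_bars[t])
    return [(v - s * e) / a for v, e in zip(xt, eps)]
```

In practice the true eps is unknown at the receiver, so a network estimates it from the noisy observation; the better that estimate, the closer `recover_x0` gets to the transmitted symbols (here BPSK-like values such as `[1.0, -1.0, 1.0, 1.0]`).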

Probabilistic Constellation Shaping With Denoising Diffusion Probabilistic Models: A Novel Approach

Sep 15, 2023

Abstract: With the remarkable results achieved by generative pre-trained transformers (GPT) and diffusion models, generative AI (GenAI) is envisioned to yield breakthroughs in various industrial and academic domains. In this paper, we utilize denoising diffusion probabilistic models (DDPMs), one of the state-of-the-art classes of generative models, for probabilistic constellation shaping in wireless communications. While the geometry of constellations is predetermined by networking standards, probabilistic constellation shaping can help enhance the information rate and communication performance by designing the probability of occurrence (generation) of constellation symbols. Unlike conventional methods that solve an optimization problem over the discrete distribution of constellations, we take a radically different approach. Exploiting the "denoise-and-generate" characteristic of DDPMs, the key idea is to learn how to generate constellation symbols out of noise, "mimicking" the way the receiver performs symbol reconstruction. By doing so, we make the constellation symbols sent by the transmitter and what is inferred (reconstructed) at the receiver as similar as possible. Our simulations show that the proposed scheme outperforms a deep neural network (DNN)-based benchmark and uniform shaping, while providing network resilience as well as robust out-of-distribution performance under low-SNR regimes and non-Gaussian noise. Notably, a threefold improvement in terms of mutual information is achieved compared to the DNN-based approach for 64-QAM geometry.
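The mutual-information figure of merit for a shaped constellation can be estimated by Monte Carlo over an AWGN channel: I(X;Y) = -E[log2 sum_x' p(x') exp(-(|y - x'|^2 - |y - x|^2) / N0)], with x drawn from the shaping PMF and y = x + n, n ~ CN(0, N0). The sketch is a generic estimator for any finite constellation and PMF, assuming complex AWGN only; it is not the paper's evaluation code, and the sample count, seed, and function name are illustrative.

```python
import math
import random


def mutual_information_awgn(points, pmf, n0, num_samples=5000, seed=0):
    """Monte Carlo estimate of I(X;Y) in bits for y = x + n, n ~ CN(0, n0)."""
    rng = random.Random(seed)
    std = math.sqrt(n0 / 2.0)  # noise standard deviation per real dimension
    total = 0.0
    for _ in range(num_samples):
        x = rng.choices(points, weights=pmf)[0]  # draw a symbol from the shaping PMF
        y = x + complex(rng.gauss(0.0, std), rng.gauss(0.0, std))
        d_true = abs(y - x) ** 2
        # Sum over candidate symbols; the term for x itself equals p(x).
        s = sum(p * math.exp(-(abs(y - xp) ** 2 - d_true) / n0) for xp, p in zip(points, pmf))
        total += -math.log2(s)
    return total / num_samples
```

For example, `mutual_information_awgn([1+1j, 1-1j, -1+1j, -1-1j], [0.25] * 4, n0=0.01)` should come out very close to 2 bits, the entropy of uniform QPSK, because symbol confusions are negligible at that noise level; at low SNR the estimate drops towards zero.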

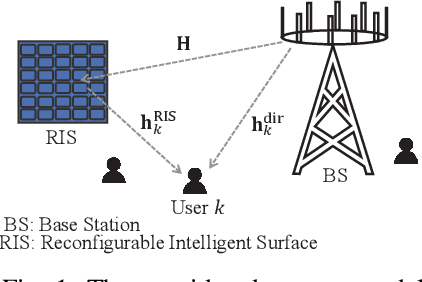

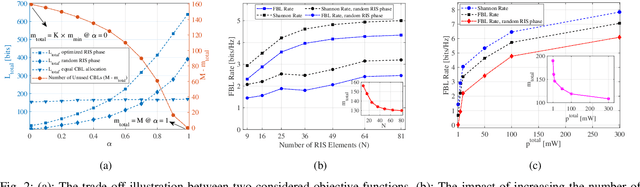

Joint Sum Rate and Blocklength Optimization in RIS-aided Short Packet URLLC Systems

Apr 28, 2022

Abstract: In this paper, a multi-objective optimization problem (MOOP) is proposed for maximizing the achievable finite blocklength (FBL) rate while minimizing the utilized channel blocklengths (CBLs) in a reconfigurable intelligent surface (RIS)-assisted short packet communication system. The formulated MOOP has two objective functions, namely, maximizing the total FBL rate with a target error probability and minimizing the total utilized CBLs, which are directly proportional to the transmission duration. The MOOP variables are the base station (BS) transmit power, the number of CBLs, and the passive beamforming at the RIS. Since the proposed non-convex problem is intractable, the Tchebyshev method is invoked to transform it into a single-objective optimization problem (OP), and the alternating optimization (AO) technique is then employed to iteratively obtain the optimized parameters in three main sub-problems. The numerical results show a fundamental trade-off between maximizing the achievable rate in the FBL regime and reducing the transmission duration. The results also emphasize the applicability of RIS technology in reducing the utilized CBLs while significantly increasing the achievable rate.
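The two objectives can be made concrete with the normal approximation of the finite-blocklength rate, R(n) ≈ log2(1 + gamma) - sqrt(V/n) Q^{-1}(eps) / ln 2, with channel dispersion V = 1 - (1 + gamma)^(-2), and with a weighted Tchebycheff scalarization, which minimizes the largest weighted, normalized distance of the objectives from their ideal values. The sketch is a single-link, grid-search toy: the SNR `gamma`, the error target `eps`, the blocklength grid, and the function names are assumptions, and it does not model the RIS phase shifts, power allocation, or the alternating optimization of the paper.

```python
import math
from statistics import NormalDist


def fbl_rate(n, gamma, eps):
    """Normal-approximation achievable rate (bits/channel use) at blocklength n."""
    v = 1.0 - 1.0 / (1.0 + gamma) ** 2  # channel dispersion
    q_inv = NormalDist().inv_cdf(1.0 - eps)  # Q^{-1}(eps)
    return math.log2(1.0 + gamma) - math.sqrt(v / n) * q_inv / math.log(2)


def tchebycheff_choice(blocklengths, gamma, eps, w_rate, w_len):
    """Pick n minimizing max(w_rate * rate_gap, w_len * length_gap), both gaps in [0, 1]."""
    rates = {n: fbl_rate(n, gamma, eps) for n in blocklengths}
    r_best, r_worst = max(rates.values()), min(rates.values())
    n_best, n_worst = min(blocklengths), max(blocklengths)

    def score(n):
        rate_gap = (r_best - rates[n]) / (r_best - r_worst)  # objective 1: maximize rate
        len_gap = (n - n_best) / (n_worst - n_best)  # objective 2: minimize blocklength
        return max(w_rate * rate_gap, w_len * len_gap)

    return min(blocklengths, key=score)
```

Putting all weight on the rate picks the longest blocklength, all weight on the blocklength picks the shortest, and intermediate weights land in between, which is the trade-off the abstract describes in miniature.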

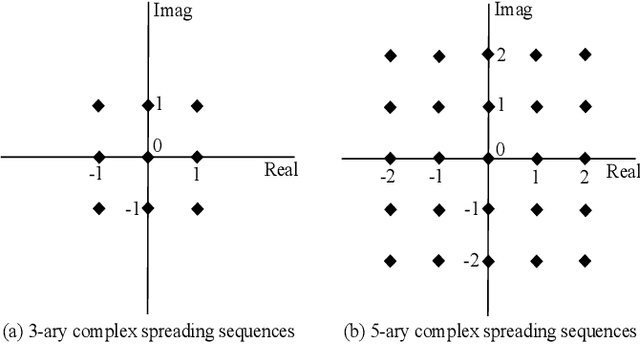

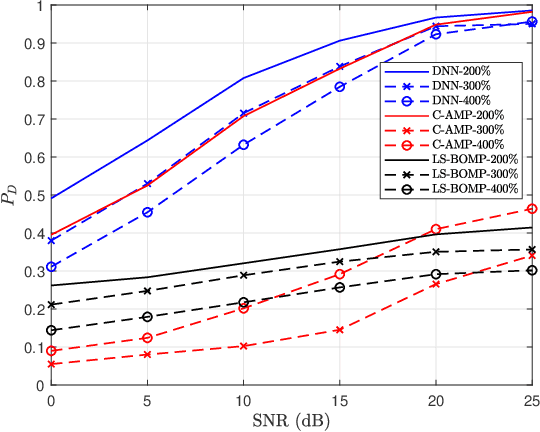

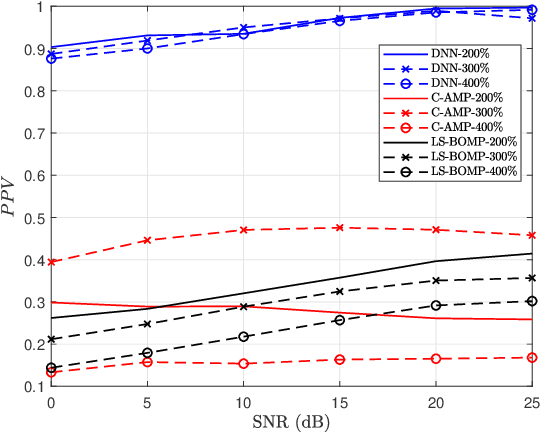

Deep Neural Network-Based Blind Multiple User Detection for Grant-free Multi-User Shared Access

Jun 21, 2021

Abstract: Multi-user shared access (MUSA) has been introduced as an advanced code-domain non-orthogonal scheme based on complex spreading sequences to support a massive number of machine-type communication (MTC) devices. In this paper, we propose a novel deep neural network (DNN)-based multiple user detection (MUD) scheme for grant-free MUSA systems. During the training phase, the DNN-based MUD model learns the structure of the sensing matrix, the randomly distributed noise, and the inter-device interference through several hidden nodes, neuron activation units, and a fitting loss function. The fully trained DNN model is capable of identifying the active devices from the received signal without any a priori knowledge of the device sparsity level or the channel state information. Our numerical evaluation shows that, with a higher percentage of active devices, the DNN-MUD achieves a significantly higher probability of detection than conventional approaches.
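Training such a detector requires labelled pairs of received signals and activity vectors. The sketch generates them for a toy grant-free uplink y = sum_k a_k h_k s_k + n, where a_k in {0, 1} marks active devices, and computes the probability of detection as the fraction of truly active devices that are flagged. Assumptions: spreading-sequence entries drawn from {-1, 0, 1} + j{-1, 0, 1} and normalized to unit energy (used here only as an illustration of MUSA-style sequences), Rayleigh flat fading, and an oracle standing in for the DNN; all function names are hypothetical.

```python
import math
import random


def spreading_sequences(num_devices, length, rng):
    """Assumed MUSA-style sequences: entries from {-1,0,1} + j{-1,0,1}, unit norm."""
    seqs = []
    while len(seqs) < num_devices:
        s = [complex(rng.choice((-1, 0, 1)), rng.choice((-1, 0, 1))) for _ in range(length)]
        norm = math.sqrt(sum(abs(c) ** 2 for c in s))
        if norm > 0:  # skip the all-zero draw
            seqs.append([c / norm for c in s])
    return seqs


def make_sample(seqs, num_active, noise_std, rng):
    """One (received signal, activity label) training pair."""
    k = len(seqs)
    active = set(rng.sample(range(k), num_active))
    label = [1 if i in active else 0 for i in range(k)]
    y = [complex(rng.gauss(0, noise_std), rng.gauss(0, noise_std)) for _ in seqs[0]]
    for i in active:
        h = complex(rng.gauss(0, math.sqrt(0.5)), rng.gauss(0, math.sqrt(0.5)))  # Rayleigh fading
        y = [yl + h * sl for yl, sl in zip(y, seqs[i])]
    return y, label


def probability_of_detection(labels, predictions):
    """Fraction of truly active devices that the detector flags as active."""
    hits = sum(1 for lab, pred in zip(labels, predictions) if lab == 1 and pred == 1)
    return hits / sum(labels)
```

With 12 devices sharing sequences of length 4, the system is 300% overloaded; the sparsity level is used only to generate data, while a trained detector would see `y` alone and output a per-device activity decision.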

Deep Learning-Based Active User Detection for Grant-free SCMA Systems

Jun 21, 2021

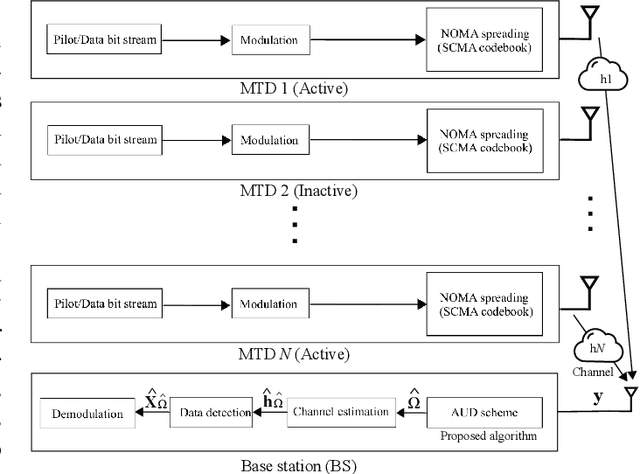

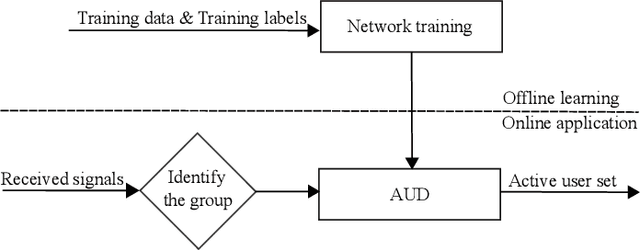

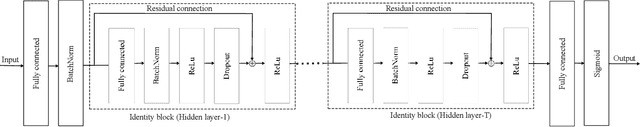

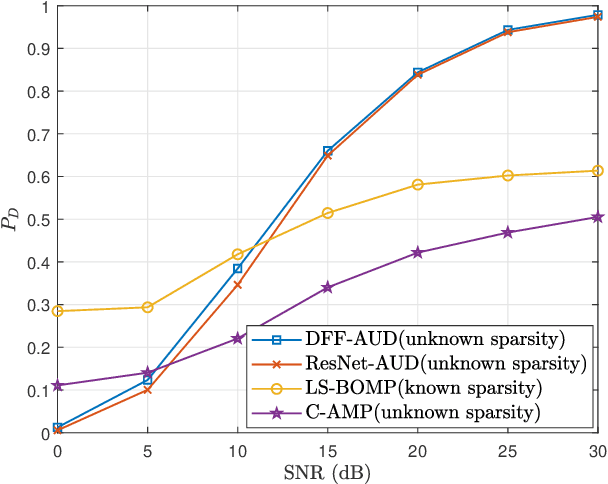

Abstract: Grant-free random access and uplink non-orthogonal multiple access (NOMA) have been introduced to reduce transmission latency and signaling overhead in massive machine-type communication (mMTC). In this paper, we propose two novel group-based deep neural network active user detection (AUD) schemes for the grant-free sparse code multiple access (SCMA) system in the mMTC uplink framework. The proposed AUD schemes learn the nonlinear mapping, i.e., the multi-dimensional codebook structure and the channel characteristics. This is accomplished through the received signal, which incorporates the sparse structure of device activity, together with the training dataset. Moreover, the offline pre-trained model is able to detect the active devices without any channel state information or prior knowledge of the device sparsity level. Simulation results show that, with several active devices, the proposed schemes achieve more than twice the probability of detection of conventional AUD schemes over the signal-to-noise ratio range of interest.
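The sparse structure that these detectors exploit is visible in the SCMA resource mapping. In the frequently used configuration with 4 resource elements and 6 users, each user spreads over 2 resources, which gives C(4, 2) = 6 distinct patterns, so every resource carries 3 users and the system is 150% overloaded. The sketch builds that indicator matrix and a noiseless superposition in which only active users contribute; the per-user symbols are placeholders, not an actual SCMA codebook, and the function names are hypothetical.

```python
from itertools import combinations


def scma_indicator(num_resources=4, per_user=2):
    """Rows are resources; column j marks the resources used by user j."""
    patterns = list(combinations(range(num_resources), per_user))  # one pattern per user
    return [[1 if r in pat else 0 for pat in patterns] for r in range(num_resources)]


def superimpose(indicator, activity, symbols):
    """Received value per resource: sum over active users occupying it."""
    return [
        sum(sym for used, act, sym in zip(row, activity, symbols) if used and act)
        for row in indicator
    ]
```

A detector of the kind described above would be trained on many such superpositions (with fading and noise added) paired with the activity vector as a multi-label target.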
