Hirley Alves

6G Resilience -- White Paper

Sep 10, 2025

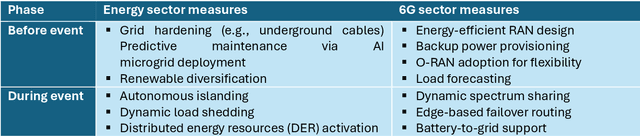

Abstract:6G must be designed to withstand, adapt to, and evolve amid prolonged, complex disruptions. Mobile networks' shift from efficiency-first to sustainability-aware has motivated this white paper to assert that resilience is a primary design goal, alongside sustainability and efficiency, encompassing technology, architecture, and economics. We promote resilience by analysing dependencies between mobile networks and other critical systems, such as energy, transport, and emergency services, and illustrate how cascading failures spread through infrastructures. We formalise resilience using the 3R framework: reliability, robustness, resilience. Subsequently, we translate this into measurable capabilities: graceful degradation, situational awareness, rapid reconfiguration, and learning-driven improvement and recovery. Architecturally, we promote edge-native and locality-aware designs, open interfaces, and programmability to enable islanded operations, fallback modes, and multi-layer diversity (radio, compute, energy, timing). Key enablers include AI-native control loops with verifiable behaviour, zero-trust security rooted in hardware and supply-chain integrity, and networking techniques that prioritise critical traffic, time-sensitive flows, and inter-domain coordination. Resilience also has a techno-economic aspect: open platforms and high-quality complementors generate ecosystem externalities that enhance resilience while opening new markets. We identify nine business-model groups and several patterns aligned with the 3R objectives, and we outline governance and standardisation. This white paper serves as an initial step and catalyst for 6G resilience. It aims to inspire researchers, professionals, government officials, and the public, providing them with the essential components to understand and shape the development of 6G resilience.

Wireless Energy Transfer Beamforming Optimization for Intelligent Transmitting Surface

Jul 09, 2025

Abstract:Radio frequency (RF) wireless energy transfer (WET) is a promising technology for powering the growing ecosystem of Internet of Things (IoT) devices using power beacons (PBs). Recent research focuses on designing efficient PB architectures that can support numerous antennas. In this context, PBs equipped with intelligent surfaces present a promising approach, enabling physically large, reconfigurable arrays. Motivated by these advantages, this work aims to minimize the power consumption of a PB equipped with a passive intelligent transmitting surface (ITS) and a collocated digital beamforming-based feeder to charge multiple single-antenna devices. To model the PB's power consumption accurately, we consider power amplifier nonlinearities, ITS control power, and feeder-to-ITS air interface losses. The resulting optimization problem is highly nonlinear and nonconvex due to the high-power amplifier (HPA), the received power constraints at the devices, and the unit-modulus constraint imposed by the phase shifter configuration of the ITS. To tackle this issue, we apply successive convex approximation (SCA) to iteratively solve convex subproblems that jointly optimize the digital precoder and phase configuration. Given SCA's sensitivity to initialization, we propose an algorithm that ensures initialization feasibility while balancing convergence speed and solution quality. We compare the proposed ITS-equipped PB's power consumption against benchmark architectures featuring digital and hybrid analog-digital beamforming. Results demonstrate that the proposed architecture efficiently scales with the number of RF chains and ITS elements. We also show that nonuniform ITS power distribution influences beamforming and can shift a device between near- and far-field regions, even with a constant aperture.

Resilient UAV Trajectory Planning via Few-Shot Meta-Offline Reinforcement Learning

Feb 03, 2025

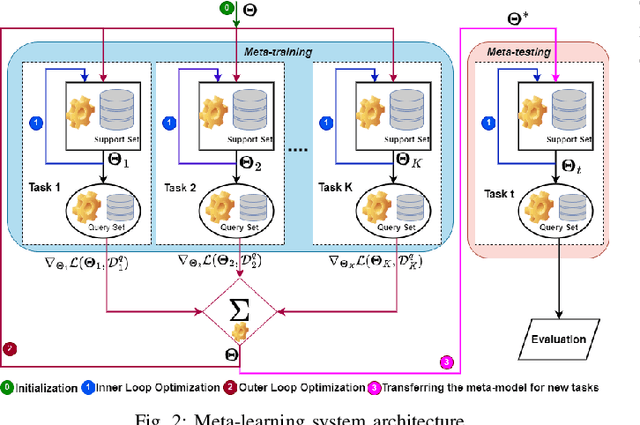

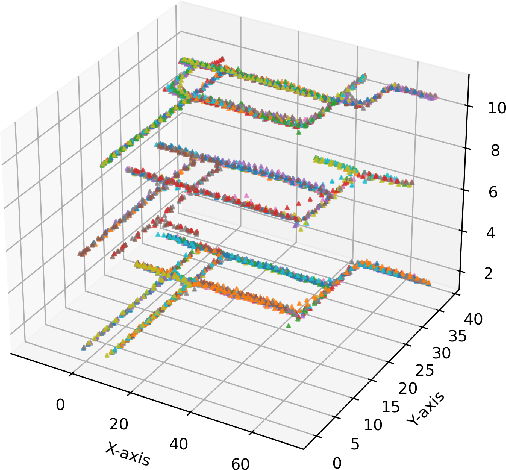

Abstract:Reinforcement learning (RL) is a promising component of future beyond-5G and 6G systems. Its main advantage lies in its robust model-free decision-making in complex, high-dimensional wireless environments. However, most existing RL frameworks rely on online interaction with the environment, which might not be feasible due to safety and cost concerns. Another problem with online RL is that the designed algorithm scales poorly to dynamic or new environments. This work proposes a novel, resilient, few-shot meta-offline RL algorithm combining offline RL using conservative Q-learning (CQL) and meta-learning using model-agnostic meta-learning (MAML). The proposed algorithm can train RL models using static offline datasets without any online interaction with the environments. In addition, with the aid of MAML, the proposed model can be scaled up to new unseen environments. We showcase the proposed algorithm for optimizing an unmanned aerial vehicle (UAV)'s trajectory and scheduling policy to minimize the age-of-information (AoI) and transmission power of limited-power devices. Numerical results show that the proposed few-shot meta-offline RL algorithm converges faster than baseline schemes, such as deep Q-networks and CQL. In addition, it is the only algorithm that can achieve optimal joint AoI and transmission power using an offline dataset with few shots of data points and is resilient to network failures due to unprecedented environmental changes.

Age and Power Minimization via Meta-Deep Reinforcement Learning in UAV Networks

Jan 24, 2025

Abstract:Age-of-information (AoI) and transmission power are crucial performance metrics in low-energy wireless networks, where information freshness is of paramount importance. This study examines a power-limited Internet of Things (IoT) network supported by a flying unmanned aerial vehicle (UAV) that collects data. Our aim is to optimize the UAV flight trajectory and scheduling policy to minimize a varying AoI and transmission power combination. To tackle this variation, this paper proposes a meta-deep reinforcement learning (RL) approach that integrates deep Q-networks (DQNs) with model-agnostic meta-learning (MAML). DQNs determine optimal UAV decisions, while MAML enables scalability across varying objective functions. Numerical results indicate that the proposed algorithm converges faster and adapts to new objectives more effectively than traditional deep RL methods, achieving minimal AoI and transmission power overall.

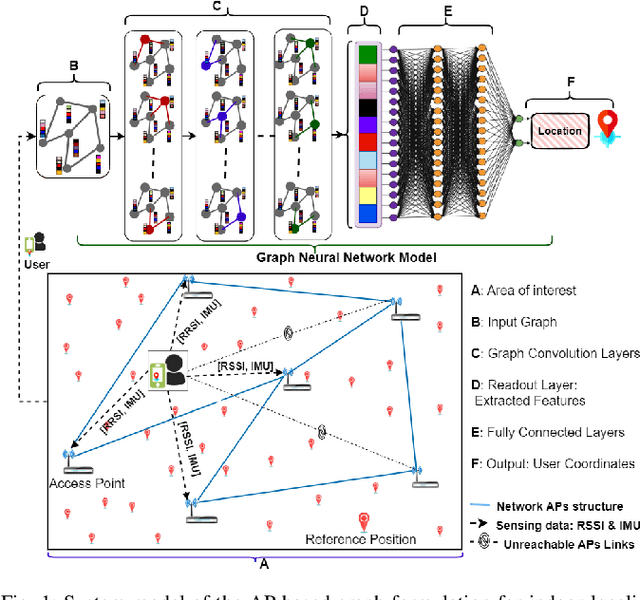

MetaGraphLoc: A Graph-based Meta-learning Scheme for Indoor Localization via Sensor Fusion

Nov 26, 2024

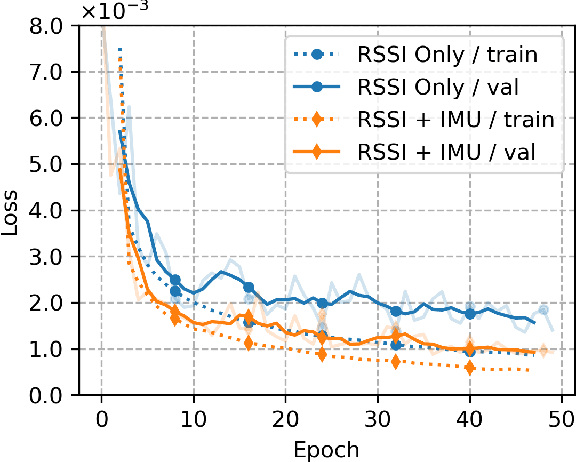

Abstract:Accurate indoor localization remains challenging due to variations in wireless signal environments and limited data availability. This paper introduces MetaGraphLoc, a novel system leveraging sensor fusion, graph neural networks (GNNs), and meta-learning to overcome these limitations. MetaGraphLoc integrates received signal strength indicator measurements with inertial measurement unit data to enhance localization accuracy. Our proposed GNN architecture, featuring dynamic edge construction (DEC), captures the spatial relationships between access points and underlying data patterns. MetaGraphLoc employs a meta-learning framework to adapt the GNN model to new environments with minimal data collection, significantly reducing calibration efforts. Extensive evaluations demonstrate the effectiveness of MetaGraphLoc. Data fusion reduces localization error by 15.92%, underscoring its importance. The GNN with DEC outperforms traditional deep neural networks by up to 30.89% in accuracy. Furthermore, the meta-learning approach enables efficient adaptation to new environments, minimizing data collection requirements. These advancements position MetaGraphLoc as a promising solution for indoor localization, paving the way for improved navigation and location-based services in the ever-evolving Internet of Things networks.

Offline and Distributional Reinforcement Learning for Radio Resource Management

Sep 25, 2024

Abstract:Reinforcement learning (RL) has proved to have a promising role in future intelligent wireless networks. Online RL has been adopted for radio resource management (RRM), taking over traditional schemes. However, due to its reliance on online interaction with the environment, its role becomes limited in practical, real-world problems where online interaction is not feasible. In addition, traditional RL falls short in the face of the uncertainties and risks in real-world stochastic environments. To this end, we propose an offline and distributional RL scheme for the RRM problem, enabling offline training using a static dataset without any interaction with the environment and accounting for the sources of uncertainty through the distribution of the return. Simulation results demonstrate that the proposed scheme outperforms conventional resource management models. In addition, it is the only scheme that surpasses online RL, achieving a 16% gain over it.

Experiment-based Models for Air Time and Current Consumption of LoRaWAN LR-FHSS

Aug 19, 2024

Abstract:Long Range - Frequency Hopping Spread Spectrum (LR-FHSS) is an emerging and promising technology recently introduced into the LoRaWAN protocol specification for both terrestrial and non-terrestrial networks, notably satellites. Its higher capacity, long range, and robustness to the Doppler effect make LR-FHSS a primary candidate for direct-to-satellite (DtS) connectivity for enabling Internet-of-things (IoT) in remote areas. The LR-FHSS devices envisioned for DtS IoT will be primarily battery-powered. Therefore, it is crucial to investigate the current consumption characteristics and Time-on-Air (ToA) of LR-FHSS technology. However, to our knowledge, no prior research has presented accurate ToA and current consumption models for this newly introduced scheme. This paper addresses this shortcoming through extensive field measurements and the development of analytical models. Specifically, we have measured the current consumption and ToA for variable transmit power, message payload, and two new LR-FHSS-based Data Rates (DR8 and DR9). We also develop current consumption and ToA analytical models that correlate strongly with the measurement results, exhibiting a relative error of less than 0.3%, which confirms the validity of our models. In contrast, the existing analytical models exhibit a higher relative error of -9.2% to 3.4% compared to our measurement results. The results presented in this paper can be further used in simulators or analytical studies to accurately model the on-air time and energy consumption of LR-FHSS devices.

On the Spectral Efficiency of Movable and Rotary Access Points under Rician Fading

Aug 15, 2024

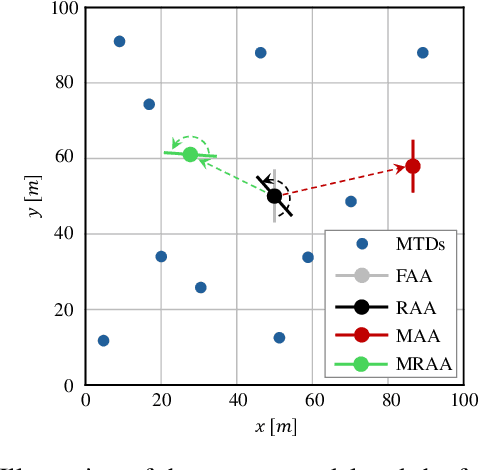

Abstract:Multi-User Multiple-Input Multiple-Output (MU-MIMO) is a pivotal technology in present-day wireless communication systems. In such systems, a base station or Access Point (AP) is equipped with multiple antenna elements and serves multiple active devices simultaneously. Nevertheless, most of the works evaluating the performance of MU-MIMO systems consider APs with static antenna arrays, that is, without any movement capability. Recently, the idea of APs and antenna arrays that are able to move has gained traction in the research community. Many works evaluate the communications performance of antenna systems able to move on the horizontal plane. However, such APs require a very bulky, complex and expensive movement system. In this work, we propose a simpler and cheaper alternative: the utilization of rotary APs, i.e., APs that can rotate. We also analyze the performance of a system in which the AP is able to both move and rotate. The movements and/or rotations of the APs are computed in order to maximize the mean per-user achievable spectral efficiency, based on estimates of the locations of the active devices and using particle swarm optimization. We adopt a spatially correlated Rician fading channel model, and evaluate the resulting optimized performance of the different setups in terms of mean per-user achievable spectral efficiencies. Our numerical results show that both the optimal rotations and movements of the APs can provide substantial performance gains when the line-of-sight components of the channel vectors are strong. Moreover, the simpler rotary APs can outperform the movable APs when their movement area is constrained.

Neural Network-Based Bandit: A Medium Access Control for the IIoT Alarm Scenario

Jul 23, 2024

Abstract:Efficient Random Access (RA) is critical for enabling reliable communication in Industrial Internet of Things (IIoT) networks. Herein, we propose a deep reinforcement learning based distributed RA scheme, entitled Neural Network-Based Bandit (NNBB), for the IIoT alarm scenario. In such a scenario, the devices may detect a common critical event, and the goal is to ensure the alarm information is delivered successfully from at least one device. The proposed NNBB scheme is implemented at each device, where it trains itself online and establishes implicit inter-device coordination to achieve the common goal. Devices can transmit simultaneously on multiple orthogonal channels and each possible transmission pattern constitutes a possible action for the NNBB, which uses a deep neural network to determine the action. Our simulation results show that as the number of devices in the network increases, so does the performance gain of the NNBB compared to the Multi-Armed Bandit (MAB) RA benchmark. For instance, NNBB experiences a 7% success rate drop when there are four channels and the number of devices increases from 10 to 60, while MAB faces a 25% drop.

Distributed MIMO Networks with Rotary ULAs for Indoor Scenarios under Rician Fading

Jun 27, 2024

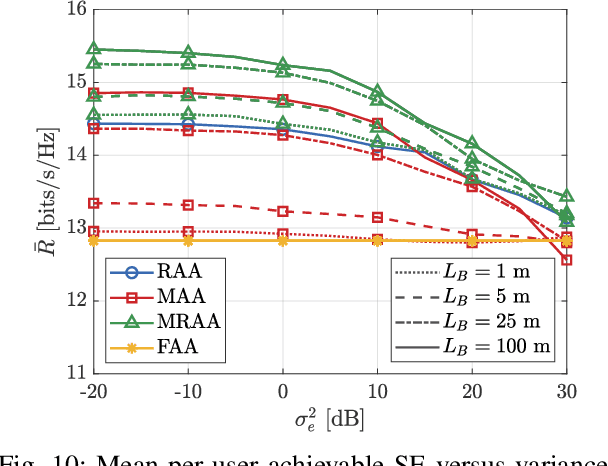

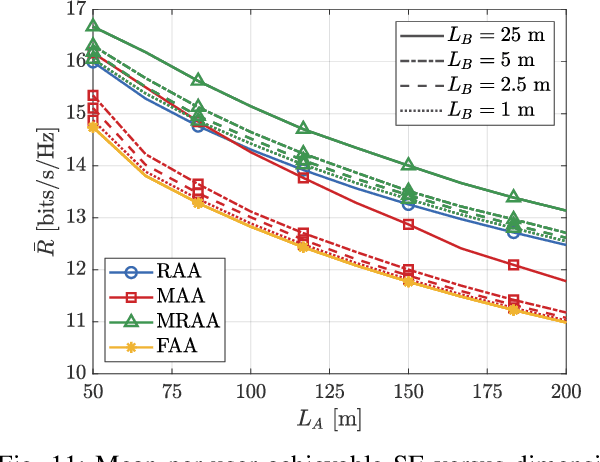

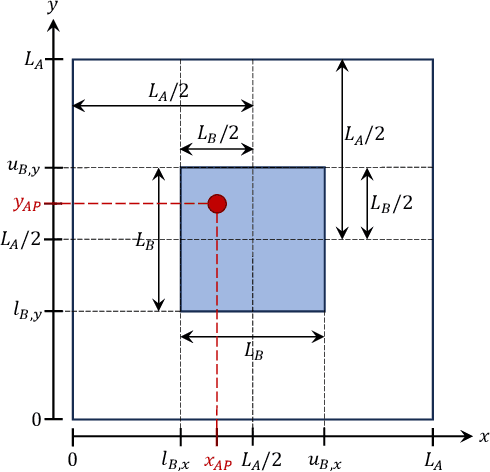

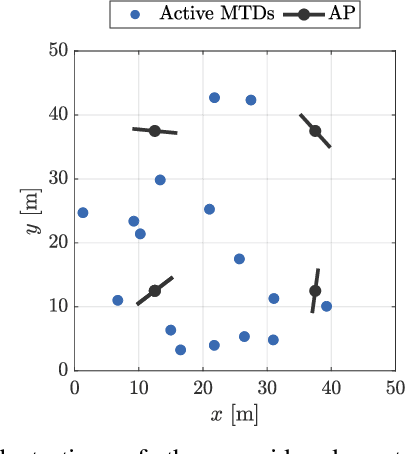

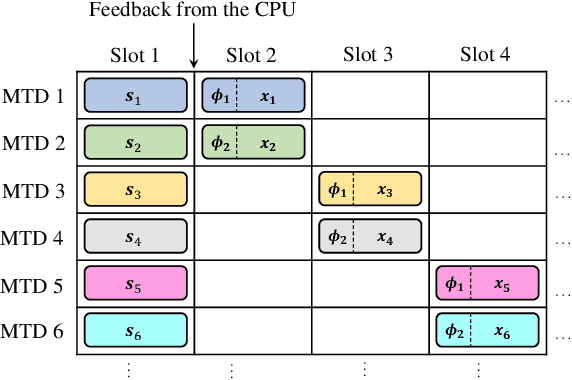

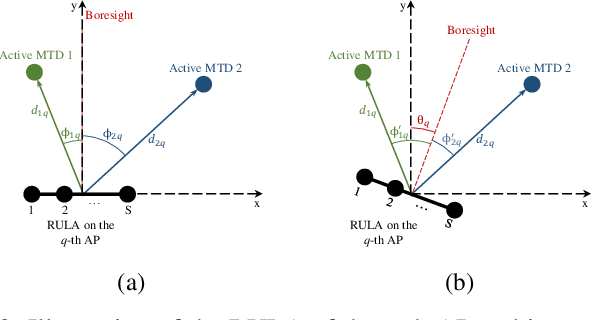

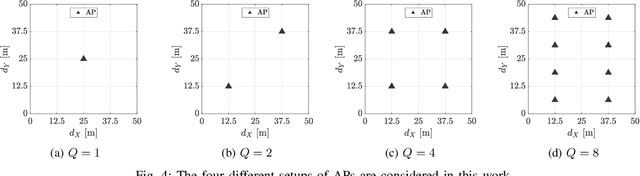

Abstract:The Fifth-Generation (5G) wireless communications networks introduced native support for Machine-Type Communications (MTC) use cases. Nevertheless, current 5G networks cannot fully meet the very stringent requirements regarding latency, reliability, and number of connected devices of most MTC use cases. Industry and academia have been working on the evolution from 5G to Sixth Generation (6G) networks. One of the main novelties is adopting Distributed Multiple-Input Multiple-Output (D-MIMO) networks. However, most works studying D-MIMO consider antenna arrays with no movement capabilities, even though some recent works have shown that such capabilities could bring substantial performance improvements. In this work, we propose the utilization of Access Points (APs) equipped with Rotary Uniform Linear Arrays (RULAs) for this purpose. Considering a spatially correlated Rician fading model, the optimal angular position of the RULAs is jointly computed by the central processing unit using particle swarm optimization as a function of the position of the active devices. Considering the impact of imperfect positioning estimates, our numerical results show that the RULAs' optimal rotation brings substantial performance gains in terms of mean per-user spectral efficiency. The improvement grows with the strength of the line-of-sight components of the channel vectors. Given the total number of antenna elements, we study the trade-off between the number of APs and the number of antenna elements per AP, revealing an optimal number of APs for the cases of APs equipped with static ULAs and RULAs.
