Pedro H. J. Nardelli

Semantic-Functional Communications in Cyber-Physical Systems

May 31, 2023

Abstract:This paper explores the use of semantic knowledge inherent in the cyber-physical system (CPS) under study in order to minimize the use of explicit communication, which refers to the use of physical radio resources to transmit potentially informative data. It is assumed that the acquired data have a function in the system, usually related to its state estimation, which may trigger control actions. We propose that a semantic-functional approach can leverage the semantic-enabled implicit communication while guaranteeing that the system maintains functionality under the required performance. We illustrate the potential of this proposal through simulations of a swarm of drones jointly performing remote sensing in a given area. Our numerical results demonstrate that the proposed method offers the best design option regarding the ability to accomplish a previously established task -- remote sensing in the addressed case -- while minimising the use of radio resources by controlling the trade-offs that jointly determine the CPS performance and its effectiveness in the use of resources. In this sense, we establish a fundamental relationship between energy, communication, and functionality considering a given end application.

Low-Complexity Dynamic Directional Modulation: Vulnerability and Information Leakage

May 31, 2023

Abstract:In this paper, the privacy of wireless transmissions is improved through the use of an efficient technique termed dynamic directional modulation (DDM), and is subsequently assessed in terms of the measure of information leakage. Recently, a variation of DDM termed low-power dynamic directional modulation (LPDDM) has attracted significant attention as a prominent secure transmission method due to its ability to further improve the privacy of wireless communications. Roughly speaking, this modulation operates by randomly selecting the transmitting antenna from an antenna array whose radiation pattern is well known. Thereafter, the modulator adjusts the constellation phase so as to ensure that only the legitimate receiver recovers the information. To begin with, we highlight some privacy boundaries inherent to the underlying system. In addition, we propose features that the antenna array must meet in order to increase the privacy of a wireless communication system. Last, we adopt a uniform circular monopole antenna array with equiprobable transmitting antennas in order to assess the impact of DDM on the information leakage. It is shown that the bit error rate, while being a useful metric in the evaluation of wireless communication systems, does not provide the full information about the vulnerability of the underlying system.
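
The mechanism described in the abstract (random antenna selection followed by a phase adjustment aimed at the legitimate receiver) can be illustrated with a toy model. The sketch below is not the paper's system model: it assumes isotropic elements on a uniform circular array, a noiseless far-field channel, and a hypothetical electrical radius `kr`; the function names are illustrative only.

```python
import cmath
import math
import random

def steering_phase(n, num_antennas, theta, kr=math.pi):
    """Far-field phase of element n on a uniform circular array seen from angle theta.

    Assumption: isotropic elements; kr (wavenumber times radius) is hypothetical.
    """
    return kr * math.cos(theta - 2 * math.pi * n / num_antennas)

def ddm_transmit(symbol, theta_rx, num_antennas, rng):
    """Pick one antenna at random and pre-rotate the symbol for direction theta_rx."""
    n = rng.randrange(num_antennas)
    precompensation = cmath.exp(-1j * steering_phase(n, num_antennas, theta_rx))
    return n, symbol * precompensation

def observe(n, tx, theta, num_antennas):
    """Noiseless symbol seen by a receiver located at angle theta."""
    return tx * cmath.exp(1j * steering_phase(n, num_antennas, theta))

if __name__ == "__main__":
    rng = random.Random(1)
    qpsk_symbol = cmath.exp(1j * math.pi / 4)
    n, tx = ddm_transmit(qpsk_symbol, theta_rx=0.3, num_antennas=8, rng=rng)
    print("legitimate :", observe(n, tx, 0.3, 8))
    print("other angle:", observe(n, tx, 1.5, 8))
```

In this toy setting the receiver at the intended angle always sees the original constellation point, while a receiver at any other angle sees a phase rotation that changes with the randomly chosen antenna.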

RSMA for Dual-Polarized Massive MIMO Networks: A SIC-Free Approach

Dec 09, 2022

Abstract:Aiming at overcoming practical issues of successive interference cancellation (SIC), this paper proposes a dual-polarized rate-splitting multiple access (RSMA) technique for a downlink massive multiple-input multiple-output (MIMO) network. By modeling the effects of polarization interference, an in-depth theoretical analysis is carried out, in which we derive tight closed-form approximations for the outage probabilities and ergodic sum-rates. Simulation results validate the accuracy of the theoretical analysis and confirm the effectiveness of the proposed approach. For instance, under low to moderate cross-polar interference, our results show that the proposed dual-polarized MIMO-RSMA strategy outperforms the single-polarized MIMO-RSMA counterpart for all considered levels of residual SIC error.

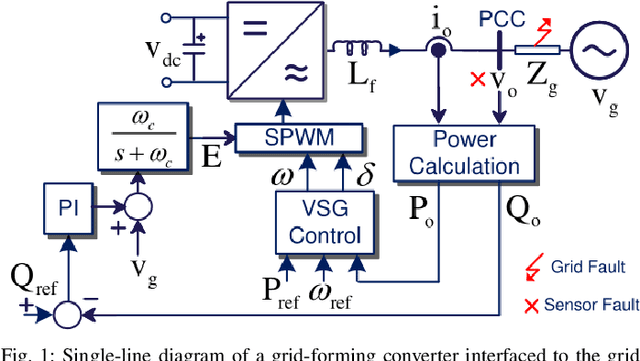

Optimizing a Digital Twin for Fault Diagnosis in Grid Connected Inverters -- A Bayesian Approach

Dec 07, 2022

Abstract:In this paper, a hyperparameter-tuning-based Bayesian optimization of digital twins is carried out to diagnose various faults in grid-connected inverters. As fault detection and diagnosis require very high precision, we channel our efforts towards an online optimization of the digital twins, which, in turn, allows a flexible implementation with a limited amount of data. As a result, the proposed framework not only becomes a practical solution for model versioning and deployment of digital twin designs with limited data, but also allows integration of deep learning tools to improve the hyperparameter tuning capabilities. For classification performance assessment, we consider different fault cases in virtual synchronous generator (VSG) controlled grid-forming converters and demonstrate the efficacy of our approach. Our research outcomes reveal the increased accuracy and fidelity levels achieved by our digital twin design, overcoming the shortcomings of traditional hyperparameter tuning methods.

Indoor Positioning via Gradient Boosting Enhanced with Feature Augmentation using Deep Learning

Nov 16, 2022

Abstract:With the emergence of the Internet of Things (IoT), localization within indoor environments has become essential and has attracted a great deal of attention in recent years. Several efforts have been made to cope with the challenges of accurate positioning systems in the presence of signal interference. In this paper, we propose a novel deep learning approach for indoor localization applications, Gradient Boosting Enhanced with Step-Wise Feature Augmentation using Artificial Neural Network (AugBoost-ANN), which is trained on labeled data. For this purpose, we propose an IoT architecture using a star network topology to collect the Received Signal Strength Indicator (RSSI) of Bluetooth Low Energy (BLE) modules by means of a Raspberry Pi as an Access Point (AP) in an indoor environment. The dataset for the experiments is gathered in the real world in different periods to match real environments. Next, we address the challenges of AugBoost-ANN training, which augments features in each iteration of building a decision tree using a deep neural network and the transfer learning technique. Experimental results show more than 8% improvement in terms of accuracy in comparison with the existing gradient boosting and deep learning methods recently proposed in the literature, and our proposed model achieves a mean location accuracy of 0.77 m.

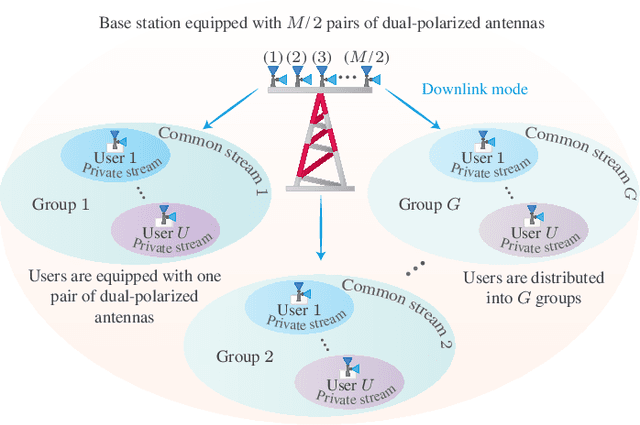

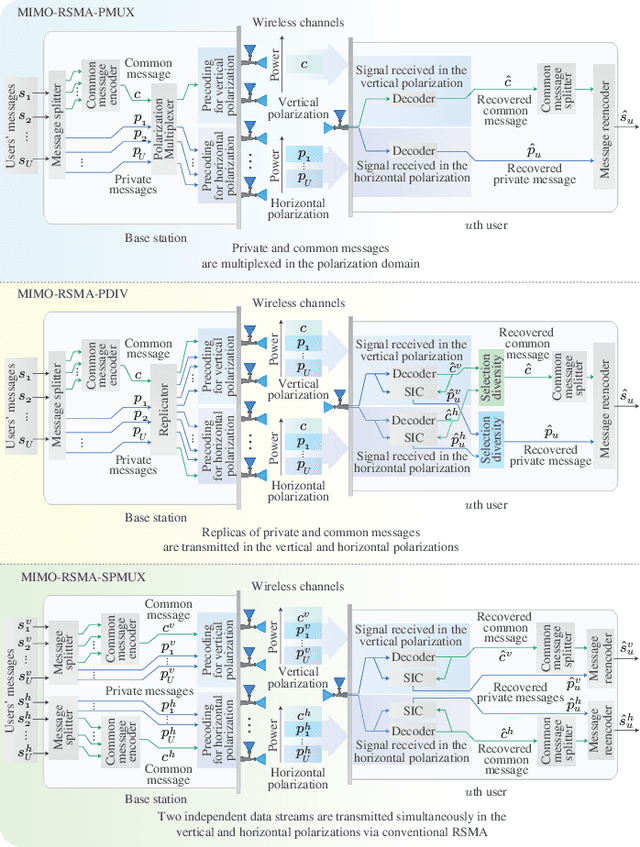

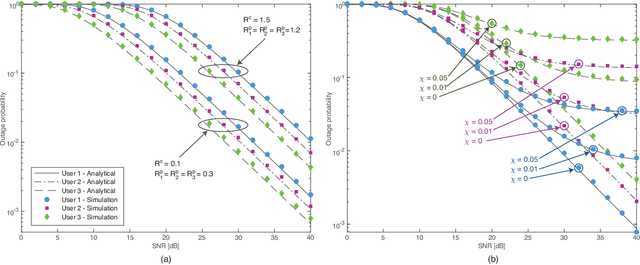

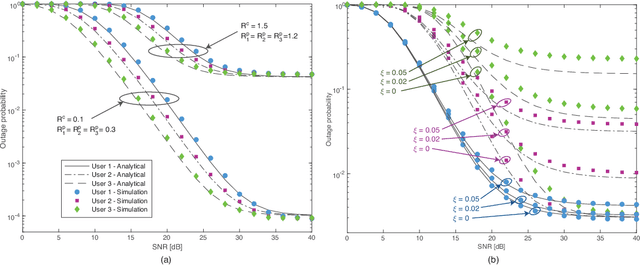

Dual-Polarized Massive MIMO-RSMA Networks: Tackling Imperfect SIC

Nov 02, 2022

Abstract:The polarization domain provides an extra degree of freedom (DoF) for improving the performance of multiple-input multiple-output (MIMO) systems. This paper takes advantage of this additional DoF to alleviate practical issues of successive interference cancellation (SIC) in rate-splitting multiple access (RSMA) schemes. Specifically, we propose three dual-polarized downlink transmission approaches for a massive MIMO-RSMA network under the effects of polarization interference and residual errors of imperfect SIC. The first approach implements polarization multiplexing for transmitting the users' data messages, which removes the need to execute SIC at the receiver. The second approach transmits replicas of users' messages in the two polarizations, which enables users to exploit diversity through the polarization domain. The third approach, in turn, employs the original SIC-based RSMA technique per polarization, which allows the base station (BS) to transmit two independent superimposed data streams simultaneously. An in-depth theoretical analysis is carried out, in which we derive tight closed-form approximations for the outage probabilities of the three proposed approaches. Accurate approximations for the ergodic sum-rates of the first two schemes are also derived. Simulation results validate the theoretical analysis and confirm the effectiveness of the proposed schemes. For instance, under low to moderate cross-polar interference, the results show that, even under high levels of residual SIC error, our dual-polarized MIMO-RSMA strategies outperform the conventional single-polarized MIMO-RSMA counterpart. It is also shown that the performance of all RSMA schemes is substantially higher than that of single- and dual-polarized massive MIMO systems employing non-orthogonal multiple access (NOMA) and orthogonal multiple access (OMA) techniques.

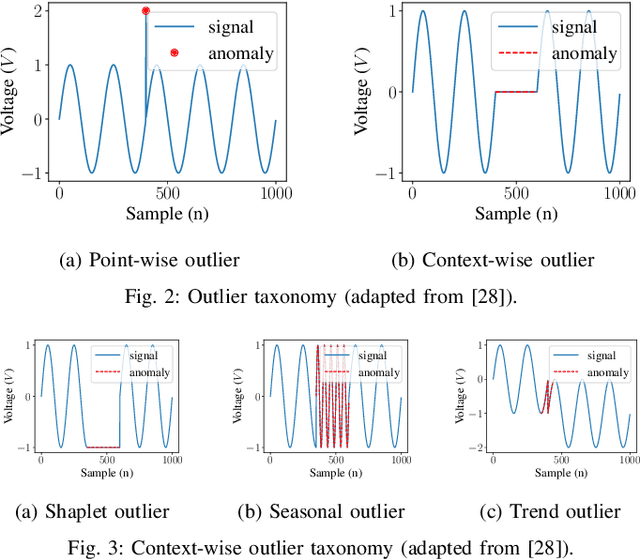

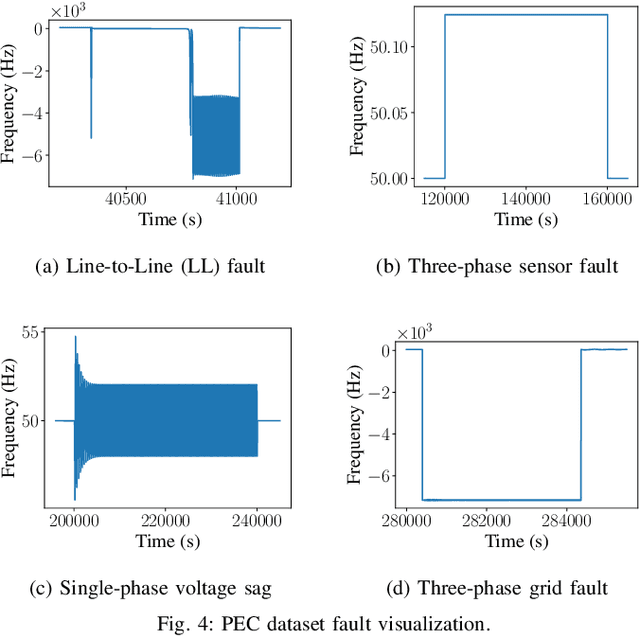

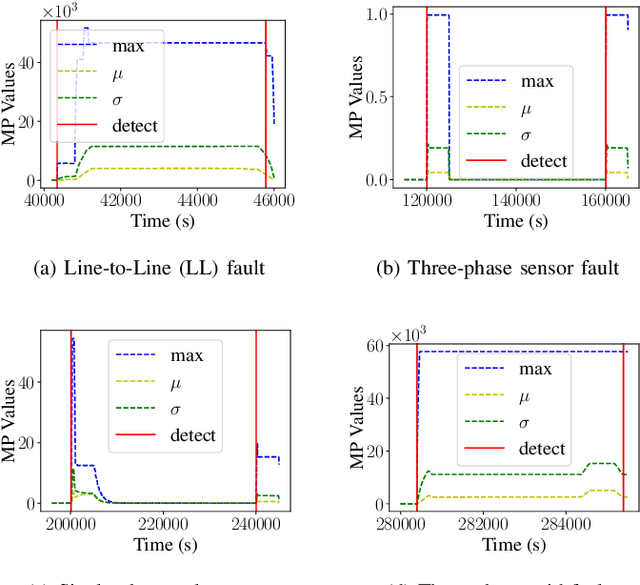

A Robust and Explainable Data-Driven Anomaly Detection Approach For Power Electronics

Sep 23, 2022

Abstract:Timely and accurate detection of anomalies in power electronics is becoming increasingly critical for maintaining complex production systems. Robust and explainable strategies help decrease system downtime and preempt or mitigate infrastructure cyberattacks. This work begins by explaining the types of uncertainty present in current datasets and machine learning algorithm outputs. Three techniques for combating these uncertainties are then introduced and analyzed. We further present two anomaly detection and classification approaches, namely the Matrix Profile algorithm and the anomaly transformer, which are applied in the context of a power electronic converter dataset. Specifically, the Matrix Profile algorithm is shown to be well suited as a generalizable approach for detecting real-time anomalies in streaming time-series data. The STUMPY Python library implementation of the iterative Matrix Profile is used for the creation of the detector. A series of custom filters is created and added to the detector to tune its sensitivity, recall, and detection accuracy. Our numerical results show that, with simple parameter tuning, the detector provides high accuracy and performance in a variety of fault scenarios.
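
To make the Matrix Profile idea concrete, here is a minimal brute-force sketch in plain Python. It is not the STUMPY implementation used in the paper (which is far more efficient) and it omits the custom filters; the exclusion-zone width of `ceil(m / 4)` is an assumed, commonly used choice. For each length-`m` window it records the z-normalized Euclidean distance to its nearest non-overlapping neighbour, so windows unlike anything else in the series get a large value.

```python
import math

def znorm(window):
    """Z-normalize a window; a constant window maps to all zeros."""
    n = len(window)
    mean = sum(window) / n
    std = math.sqrt(sum((x - mean) ** 2 for x in window) / n)
    if std == 0:
        return [0.0] * n
    return [(x - mean) / std for x in window]

def matrix_profile(series, m):
    """Brute-force matrix profile: nearest-neighbour distance of every window."""
    windows = [znorm(series[i:i + m]) for i in range(len(series) - m + 1)]
    exclusion = math.ceil(m / 4)  # assumed width; skips trivial self-matches
    profile = []
    for i, a in enumerate(windows):
        best = math.inf
        for j, b in enumerate(windows):
            if abs(i - j) <= exclusion:
                continue
            best = min(best, math.dist(a, b))
        profile.append(best)
    return profile

if __name__ == "__main__":
    # Synthetic periodic signal with a short flat-line fault (hypothetical data).
    signal = [math.sin(2 * math.pi * t / 20) for t in range(200)]
    signal[100:105] = [0.0] * 5
    profile = matrix_profile(signal, m=20)
    print("most anomalous window starts at", profile.index(max(profile)))
```

A detector in this style would flag windows whose profile value exceeds a tuned threshold; the threshold and any post-filtering are design choices not shown here.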

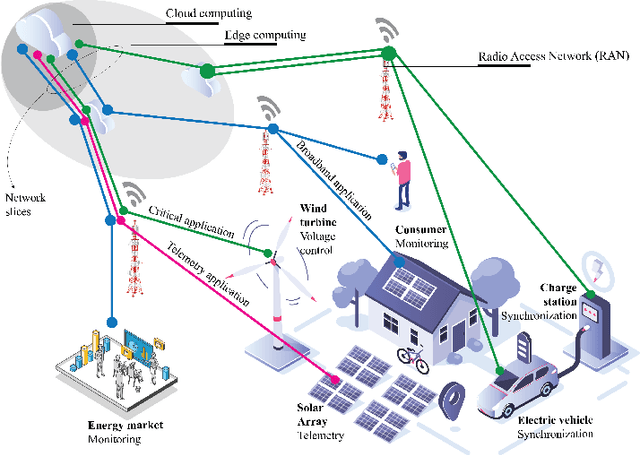

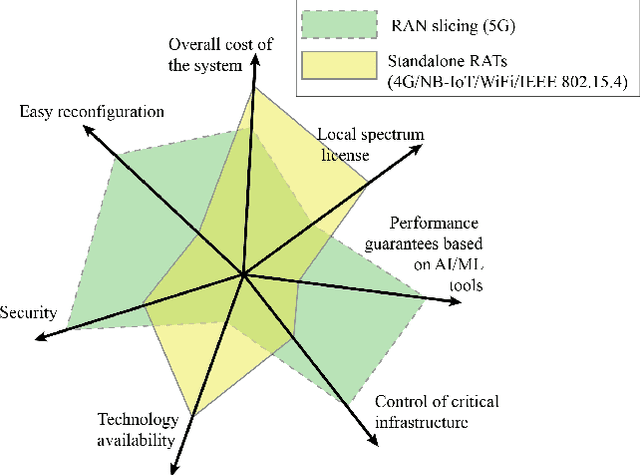

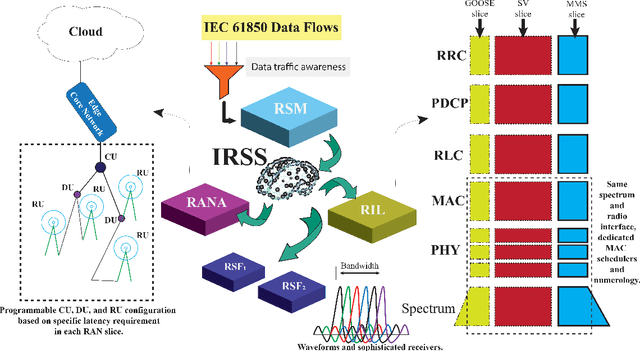

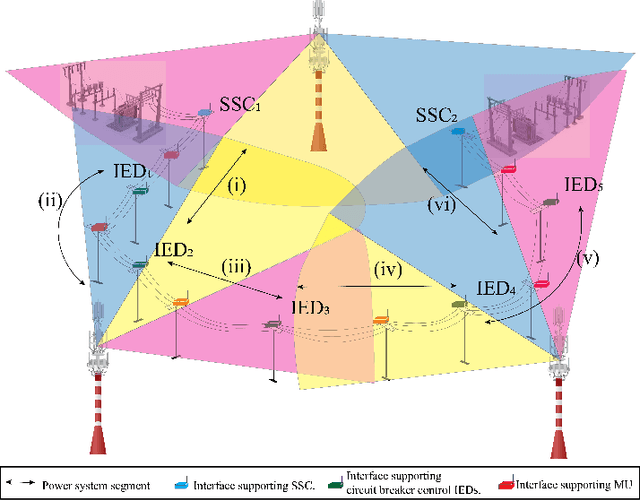

Boosting 5G on Smart Grid Communication: A Smart RAN Slicing Approach

Aug 30, 2022

Abstract:Fifth-generation (5G) and beyond systems are expected to accelerate the ongoing transformation of power systems towards the smart grid. However, the inherent heterogeneity in smart grid services and requirements poses significant challenges towards the definition of a unified network architecture. In this context, radio access network (RAN) slicing emerges as a key 5G enabler to ensure interoperable connectivity and service management in the smart grid. This article introduces a novel RAN slicing framework which leverages the potential of artificial intelligence (AI) to support IEC 61850 smart grid services. With the aid of deep reinforcement learning, efficient radio resource management for RAN slices is attained, while conforming to the stringent performance requirements of a smart grid self-healing use case. Our research outcomes advocate the adoption of emerging AI-native approaches for RAN slicing in beyond-5G systems, and lay the foundations for differentiated service provisioning in the smart grid.

MIX-MAB: Reinforcement Learning-based Resource Allocation Algorithm for LoRaWAN

Jun 07, 2022

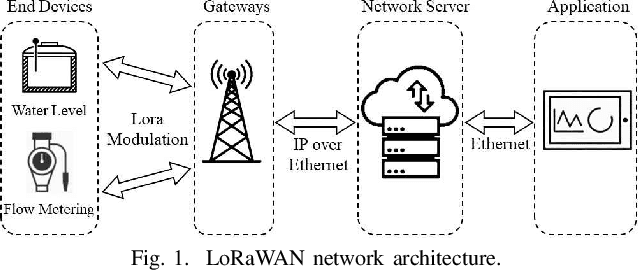

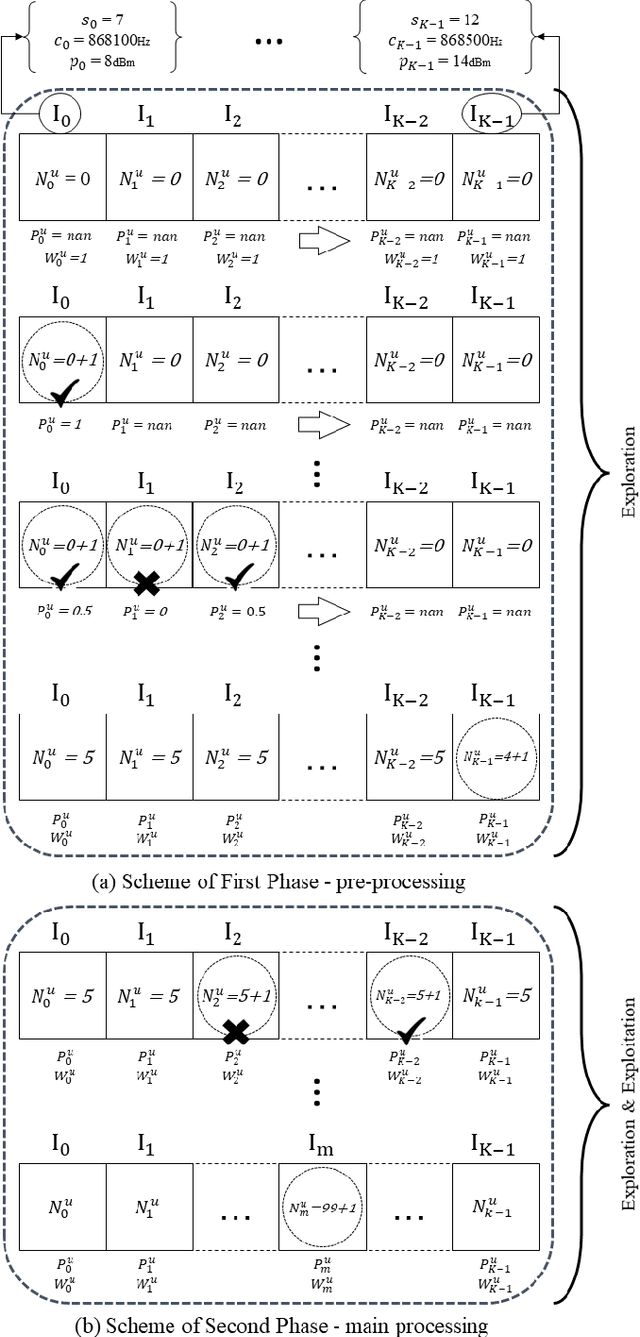

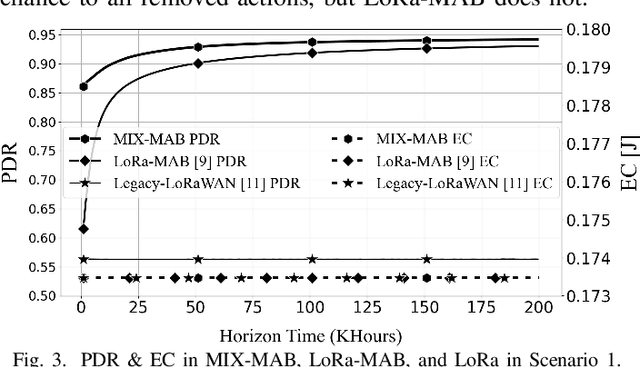

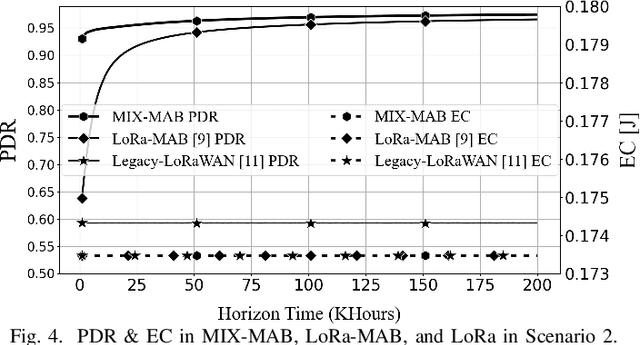

Abstract:This paper focuses on improving the resource allocation algorithm in terms of packet delivery ratio (PDR), i.e., the fraction of packets sent by end devices (EDs) that are successfully received in a long-range wide-area network (LoRaWAN). Setting the transmission parameters significantly affects the PDR. Employing reinforcement learning (RL), we propose a resource allocation algorithm that enables the EDs to configure their transmission parameters in a distributed manner. We model the resource allocation problem as a multi-armed bandit (MAB) and then address it by proposing a two-phase algorithm named MIX-MAB, which consists of the exponential weights for exploration and exploitation (EXP3) and successive elimination (SE) algorithms. We evaluate the MIX-MAB performance through simulation results and compare it with other existing approaches. Numerical results show that the proposed solution performs better than the existing schemes in terms of convergence time and PDR.
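
As a rough illustration of the bandit formulation, the sketch below implements plain EXP3 for a single end device choosing among a few candidate transmission-parameter sets. It is not the paper's MIX-MAB algorithm: the successive elimination phase is omitted, and the acknowledgement probabilities in `ACK_PROB`, the exploration rate `gamma`, and the function names are all hypothetical.

```python
import math
import random

# Hypothetical per-arm ACK probabilities (e.g. three candidate parameter sets).
ACK_PROB = [0.2, 0.5, 0.9]

def ack_reward(arm, rng):
    """Return 1.0 if the simulated uplink is acknowledged, else 0.0."""
    return 1.0 if rng.random() < ACK_PROB[arm] else 0.0

def exp3(num_arms, reward_fn, rounds, gamma=0.1, seed=0):
    """Run EXP3 and return the arm-selection probabilities used in the last round."""
    rng = random.Random(seed)
    weights = [1.0] * num_arms
    probs = [1.0 / num_arms] * num_arms
    for _ in range(rounds):
        total = sum(weights)
        # Mix the weight-proportional distribution with uniform exploration.
        probs = [(1 - gamma) * w / total + gamma / num_arms for w in weights]
        arm = rng.choices(range(num_arms), weights=probs)[0]
        reward = reward_fn(arm, rng)          # reward must lie in [0, 1]
        estimate = reward / probs[arm]        # importance-weighted reward estimate
        weights[arm] *= math.exp(gamma * estimate / num_arms)
        top = max(weights)                    # rescale to avoid overflow
        weights = [w / top for w in weights]
    return probs

if __name__ == "__main__":
    print(exp3(len(ACK_PROB), ack_reward, rounds=5000))
```

Because each device only needs its own acknowledgement feedback, a scheme of this kind can run in a distributed manner, which matches the setting described in the abstract.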

Xavier-Enabled Extreme Reservoir Machine for Millimeter-Wave Beamspace Channel Tracking

Jun 01, 2022

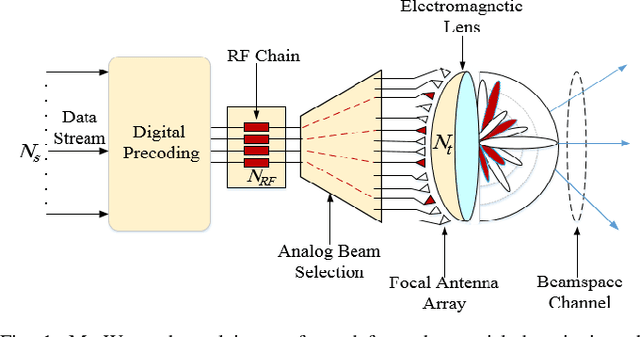

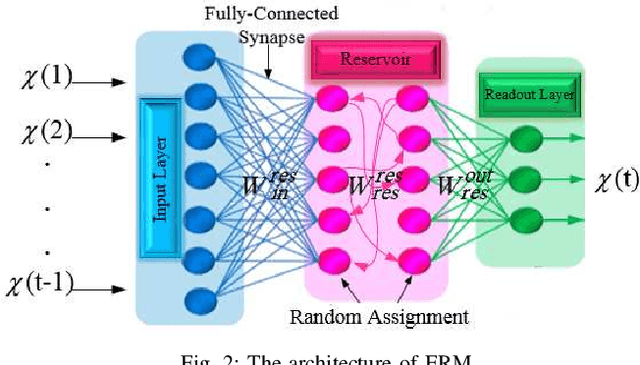

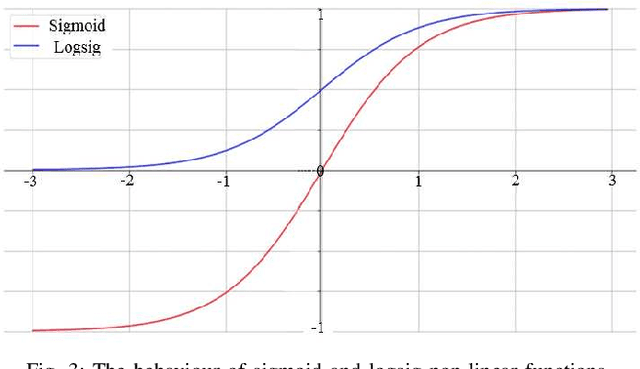

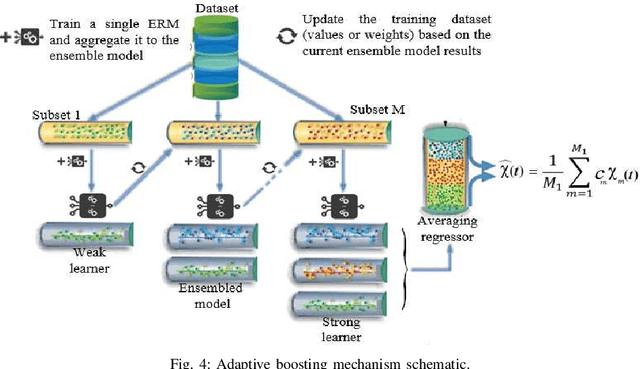

Abstract:In this paper, we propose an accurate two-phase millimeter-wave (mmWave) beamspace channel tracking mechanism. In the first phase, we train an extreme reservoir machine (ERM) to track the historical features of the mmWave beamspace channel and predict them in upcoming time steps. Towards a more accurate prediction, we further fine-tune the ERM by means of the Xavier initializer technique, whereby the input weights of the ERM are initially drawn from a zero-mean, finite-variance Gaussian distribution, leading to a 49% reduction in the prediction variance of the conventional ERM. The proposed method improves the achievable spectral efficiency (SE) of the existing counterparts by 13% when the signal-to-noise ratio (SNR) is 15 dB. In the second phase, we further investigate an ensemble learning technique, namely adaptive boosting (AdaBoost), which sequentially incorporates multiple ERMs to form an ensemble model; this further reduces the prediction variance of the conventional ERM by 56% and yields a 21% enhancement of the achievable SE over the existing schemes at SNR = 15 dB.
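
The Xavier initialization step can be sketched as follows. The abstract only states that the input weights come from a zero-mean, finite-variance Gaussian; the variance `2 / (fan_in + fan_out)` used here is the commonly cited Xavier (Glorot) normal choice and is an assumption, as are the function name and the layer sizes.

```python
import math
import random

def xavier_normal(fan_in, fan_out, seed=0):
    """Draw a fan_out x fan_in weight matrix from N(0, 2 / (fan_in + fan_out)).

    Assumption: the standard Xavier/Glorot normal variance; the exact variance
    used for the ERM input weights is not given in the abstract.
    """
    rng = random.Random(seed)
    std = math.sqrt(2.0 / (fan_in + fan_out))
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]

if __name__ == "__main__":
    # Hypothetical sizes: 128 input features feeding a 64-unit reservoir.
    weights = xavier_normal(fan_in=128, fan_out=64)
    flat = [w for row in weights for w in row]
    mean = sum(flat) / len(flat)
    var = sum((w - mean) ** 2 for w in flat) / len(flat)
    print(f"sample mean={mean:.4f}, sample variance={var:.5f}")
```

Scaling the variance with the layer sizes keeps the magnitude of the reservoir inputs roughly independent of the input dimension, which is the usual motivation for this initializer.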
