Diomidis S. Michalopoulos

Position Paper: Rethinking AI/ML for Air Interface in Wireless Networks

Jun 13, 2025

Abstract: AI/ML research has predominantly been driven by domains such as computer vision, natural language processing, and video analysis. In contrast, the application of AI/ML to wireless networks, particularly at the air interface, remains in its early stages. Although there are emerging efforts to explore this intersection, fully realizing the potential of AI/ML in wireless communications requires a deep interdisciplinary understanding of both fields. We provide an overview of AI/ML-related discussions in 3GPP standardization, highlighting key use cases, architectural considerations, and technical requirements. We also outline open research challenges and opportunities where academic and industrial communities can contribute to shaping the future of AI-enabled wireless systems.

Time-based vs. Fingerprinting-based Positioning Using Artificial Neural Networks

Dec 04, 2023

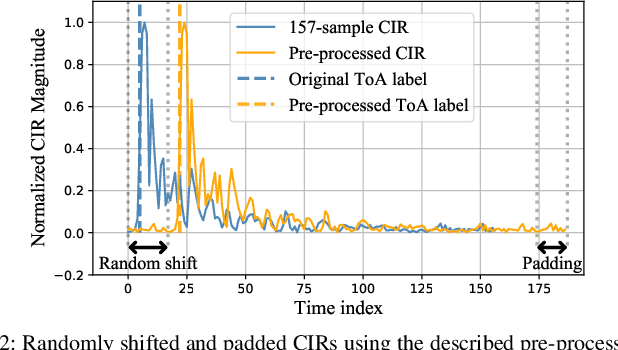

Abstract: High-accuracy positioning has gained significant interest for many use cases across domains such as the industrial internet of things (IIoT), healthcare, and entertainment. Radio frequency (RF) measurements are widely used for user localization. However, challenging radio conditions such as non-line-of-sight (NLOS) and multipath propagation can degrade the positioning accuracy. Machine learning (ML)-based estimators have been proposed to overcome these challenges. RF measurements can be used for positioning in multiple ways, resulting in time-based, angle-based, and fingerprinting-based methods. Different methods, however, impose different implementation requirements on the system and may achieve different accuracy in a given setting. In this paper, we use artificial neural networks (ANNs) to realize time-of-arrival (ToA)-based and channel impulse response (CIR) fingerprinting-based positioning. We compare their performance in different indoor environments based on real-world ultra-wideband (UWB) measurements. We first show that ML techniques improve the estimation accuracy of time-based positioning compared to conventional techniques. When comparing time-based and fingerprinting schemes using ANNs, we show that the more accurate method differs between environments. Accuracy depends not only on the radio propagation conditions but also on the density and distribution of the reference user locations used for fingerprinting.
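To make the contrast between the two families concrete, the Python sketch below shows conventional (non-ML) baselines for each. The first is linearized least-squares multilateration from ToA-derived ranges. The second is a nearest-neighbor lookup over stored fingerprints.

The sketch assumes NumPy, known anchor coordinates, and fingerprints given as plain feature vectors (for example, CIR magnitudes). The function names are illustrative. The ANN estimators studied in the paper are not reproduced here.

```python
import numpy as np


def toa_multilateration(anchors, ranges):
    """Linearized least-squares position from anchor coordinates and ranges.

    Subtracting the range equation of anchor 0 from that of anchor i gives
    2 (a_i - a_0)^T x = r_0^2 - r_i^2 + ||a_i||^2 - ||a_0||^2,
    which is linear in the unknown position x.
    """
    anchors = np.asarray(anchors, dtype=float)
    ranges = np.asarray(ranges, dtype=float)
    a0, r0 = anchors[0], ranges[0]
    A = 2.0 * (anchors[1:] - a0)
    b = (r0**2 - ranges[1:]**2
         + np.sum(anchors[1:]**2, axis=1) - np.sum(a0**2))
    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x


def fingerprint_knn(db_features, db_positions, query, k=1):
    """Average the positions of the k reference points whose stored
    fingerprints (e.g. CIR magnitude vectors) are closest to the query."""
    db_features = np.asarray(db_features, dtype=float)
    db_positions = np.asarray(db_positions, dtype=float)
    dists = np.linalg.norm(db_features - np.asarray(query, dtype=float), axis=1)
    nearest = np.argsort(dists)[:k]
    return db_positions[nearest].mean(axis=0)


# Usage: four anchors at the corners of a 10 m x 10 m room, exact ranges.
anchors = [[0, 0], [10, 0], [0, 10], [10, 10]]
true_pos = np.array([2.0, 3.0])
ranges = np.linalg.norm(np.array(anchors, dtype=float) - true_pos, axis=1)
print(toa_multilateration(anchors, ranges))  # close to [2. 3.]
```

The time-based estimator needs synchronized anchors and accurate ranges; NLOS bias in `ranges` shifts the solution directly. The fingerprinting estimator needs no geometry but can only return positions near the stored reference points. This is why the density and distribution of those points matter.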

Time of Arrival Error Estimation for Positioning Using Convolutional Neural Networks

Jan 11, 2023

Abstract: Wireless high-accuracy positioning has recently attracted growing research interest due to the diverse range of applications, such as industrial asset tracking, autonomous driving, and process automation. However, obtaining highly accurate location information is hampered by challenges in the radio environment. A major source of error for time-based positioning methods is inaccurate time-of-arrival (ToA) or range estimation. Existing machine learning-based solutions to mitigate such errors have three limitations:

- they rely on propagation environment classification with only a small number of classes,
- they use features that represent the channel measurements only to a limited extent, or
- they account only for device-specific, proprietary ToA estimation methods.

In this paper, we propose convolutional neural networks (CNNs) that use channel impulse responses (CIRs) to estimate and mitigate the errors of a variety of ToA estimation methods. Based on real-world measurements from two independent campaigns, the proposed method improves the ranging accuracy of state-of-the-art ToA estimators by up to 37%. It often eliminates the need to optimize the underlying conventional methods.
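As a rough sketch of the error-mitigation idea, the Python code below pairs a conventional ToA estimator with a correction step. The estimator is a simple threshold-based leading-edge detector on a sampled CIR. The correction step subtracts a predicted ToA error before converting to range.

The threshold rule stands in for the conventional estimators and is an assumption, as are the function names. `error_model` is a hypothetical placeholder for a trained CNN, not the network proposed in the paper.

```python
import numpy as np

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def threshold_toa(cir, sample_period_s, rel_threshold=0.5):
    """Conventional leading-edge ToA: index of the first CIR tap whose
    magnitude reaches rel_threshold times the strongest tap, in seconds."""
    mag = np.abs(np.asarray(cir))
    first = int(np.argmax(mag >= rel_threshold * mag.max()))  # first True
    return first * sample_period_s


def corrected_range(cir, sample_period_s, error_model, rel_threshold=0.5):
    """Subtract a learned ToA-error prediction from the conventional estimate
    and convert to a range in metres. error_model is a hypothetical callable
    (e.g. a trained CNN) mapping a CIR to a predicted ToA error in seconds."""
    toa = threshold_toa(cir, sample_period_s, rel_threshold)
    return (toa - error_model(cir)) * SPEED_OF_LIGHT


# Usage: 1 ns sampling, weak first path at tap 8, stronger taps at 10 and 12.
cir = np.zeros(64)
cir[8], cir[10], cir[12] = 0.4, 0.6, 1.0
print(threshold_toa(cir, 1e-9))                      # tap 10 -> about 1e-08 s
print(threshold_toa(cir, 1e-9, rel_threshold=0.3))   # tap 8  -> about 8e-09 s
```

With a 0.5 relative threshold, the weak first path is skipped and the ToA comes out two samples late. The outcome depends on how the conventional estimator is tuned. This estimator-specific error is the kind a learned correction aims to absorb without retuning the threshold.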

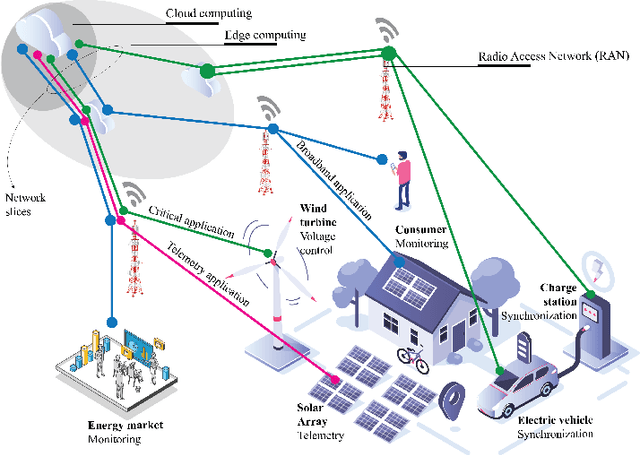

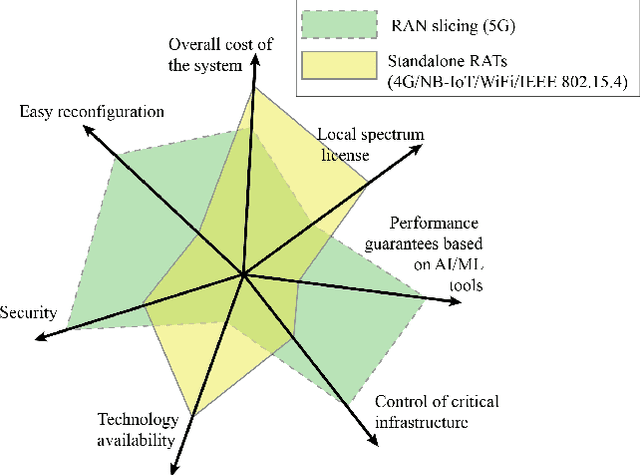

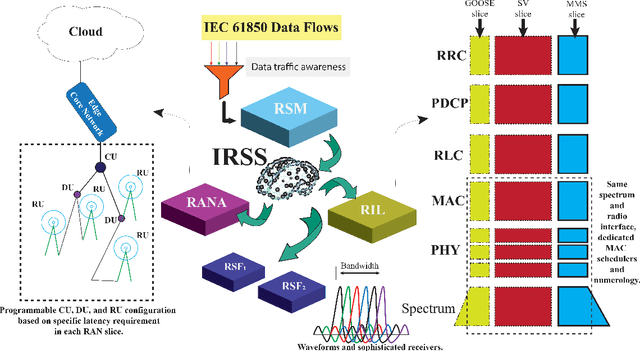

Boosting 5G on Smart Grid Communication: A Smart RAN Slicing Approach

Aug 30, 2022

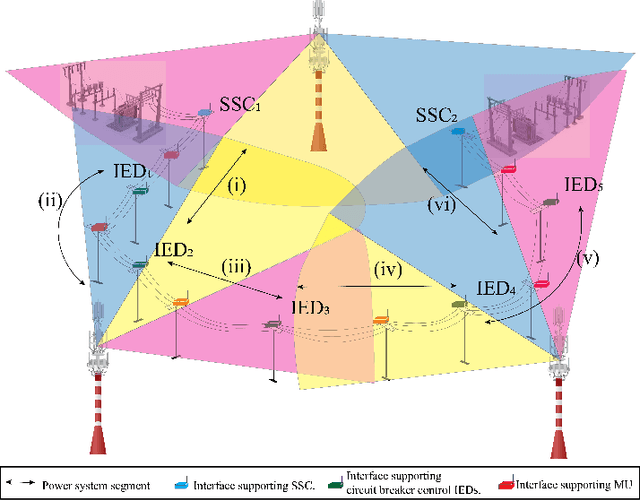

Abstract: Fifth-generation (5G) and beyond systems are expected to accelerate the ongoing transformation of power systems towards the smart grid. However, the inherent heterogeneity of smart grid services and requirements poses significant challenges to the definition of a unified network architecture. In this context, radio access network (RAN) slicing emerges as a key 5G enabler for interoperable connectivity and service management in the smart grid. This article introduces a novel RAN slicing framework that leverages artificial intelligence (AI) to support IEC 61850 smart grid services. Using deep reinforcement learning, the framework achieves efficient radio resource management for RAN slices while meeting the stringent performance requirements of a smart grid self-healing use case. Our results advocate the adoption of emerging AI-native approaches for RAN slicing in beyond-5G systems and lay the foundations for differentiated service provisioning in the smart grid.
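A heavily simplified Python sketch of learning-based slice resource allocation follows. It uses tabular Q-learning instead of deep reinforcement learning. It also assumes a hypothetical two-slice model:

- a critical slice (standing in for, e.g., self-healing traffic) that must receive at least its demanded resource blocks (RBs), and
- a best-effort slice that receives the remainder.

The RB count, demand levels, penalty, and reward are illustrative assumptions. They do not model IEC 61850 services or the framework in the article.

```python
import numpy as np

TOTAL_RBS = 6         # hypothetical number of resource blocks per slot
DEMANDS = (1, 2, 3)   # hypothetical RB demand levels of the critical slice
PENALTY = -10.0       # hypothetical penalty for violating the critical slice


def reward(demand, alloc):
    """The critical slice must get at least its demand; leftover RBs serve
    the best-effort slice and count as its throughput."""
    if alloc < demand:
        return PENALTY
    return float(TOTAL_RBS - alloc)


def train_slicing_agent(episodes=5000, alpha=0.1, epsilon=0.2, seed=0):
    """Tabular Q-learning over (demand level, RBs given to the critical slice).

    The discount factor is 0 because in this toy model the next demand does
    not depend on the action, so the task reduces to a one-step
    (contextual bandit) problem.
    """
    rng = np.random.default_rng(seed)
    q = np.zeros((len(DEMANDS), TOTAL_RBS + 1))
    for _ in range(episodes):
        s = int(rng.integers(len(DEMANDS)))      # observe a demand level
        if rng.random() < epsilon:                # epsilon-greedy exploration
            a = int(rng.integers(TOTAL_RBS + 1))
        else:
            a = int(np.argmax(q[s]))
        r = reward(DEMANDS[s], a)
        q[s, a] += alpha * (r - q[s, a])          # gamma = 0: no bootstrap term
    return q


q = train_slicing_agent()
policy = [int(np.argmax(q[s])) for s in range(len(DEMANDS))]
print(policy)  # RBs allocated to the critical slice per demand level
```

The greedy policy should allocate exactly the demanded RBs. That allocation meets the critical slice's requirement while leaving the most capacity to the best-effort slice. A deep RL agent would replace the table with a neural network, use a richer state, and use a discount factor above zero when actions influence future states.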
