Taylan Şahin

Time of Arrival Error Estimation for Positioning Using Convolutional Neural Networks

Jan 11, 2023

Abstract:Wireless high-accuracy positioning has recently attracted growing research interest due to diversified nature of applications such as industrial asset tracking, autonomous driving, process automation, and many more. However, obtaining a highly accurate location information is hampered by challenges due to the radio environment. A major source of error for time-based positioning methods is inaccurate time-of-arrival (ToA) or range estimation. Existing machine learning-based solutions to mitigate such errors rely on propagation environment classification hindered by a low number of classes, employ a set of features representing channel measurements only to a limited extent, or account for only device-specific proprietary methods of ToA estimation. In this paper, we propose convolutional neural networks (CNNs) to estimate and mitigate the errors of a variety of ToA estimation methods utilizing channel impulse responses (CIRs). Based on real-world measurements from two independent campaigns, the proposed method yields significant improvements in ranging accuracy (up to 37%) of the state-of-the-art ToA estimators, often eliminating the need of optimizing the underlying conventional methods.

Scheduling Out-of-Coverage Vehicular Communications Using Reinforcement Learning

Jul 13, 2022

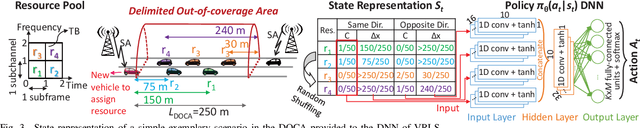

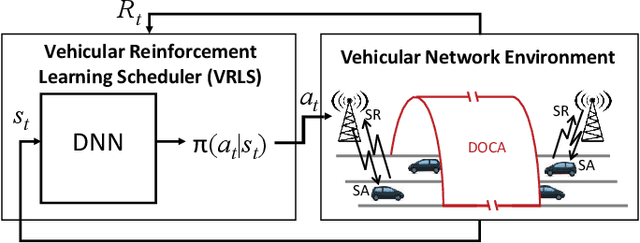

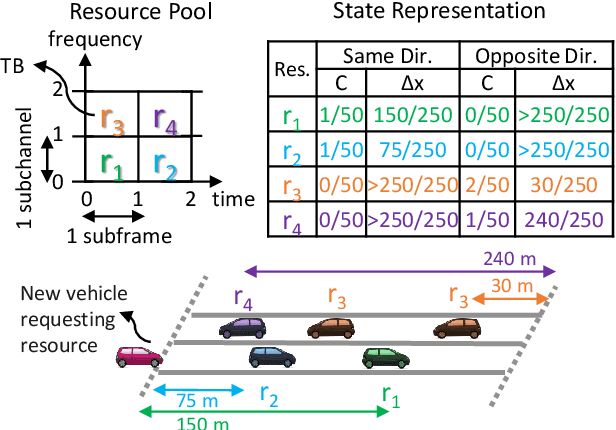

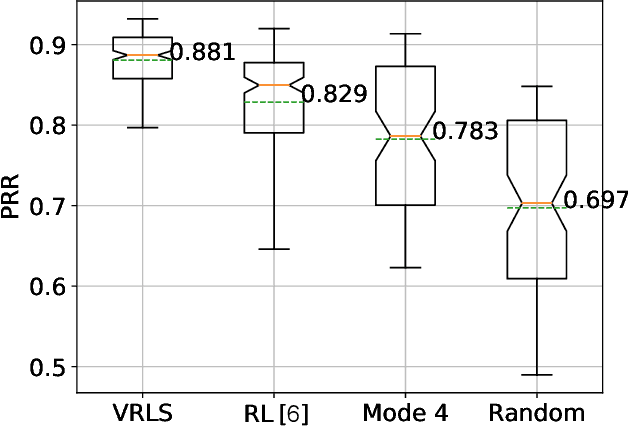

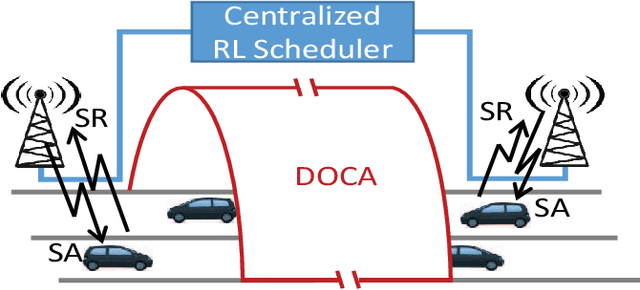

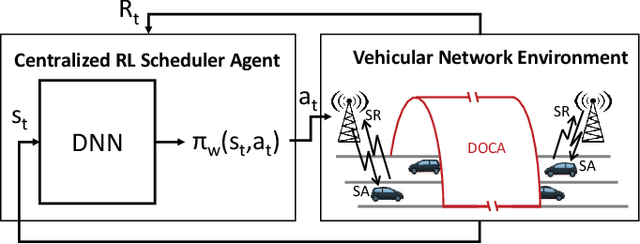

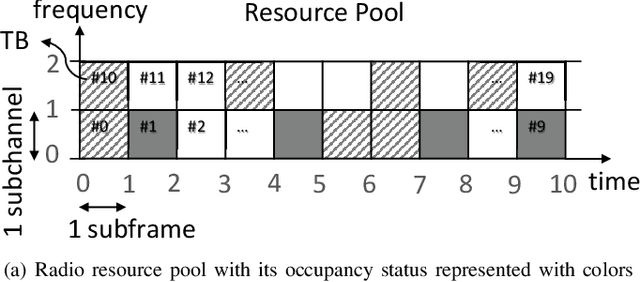

Abstract:Performance of vehicle-to-vehicle (V2V) communications depends highly on the employed scheduling approach. While centralized network schedulers offer high V2V communication reliability, their operation is conventionally restricted to areas with full cellular network coverage. In contrast, in out-of-cellular-coverage areas, comparatively inefficient distributed radio resource management is used. To exploit the benefits of the centralized approach for enhancing the reliability of V2V communications on roads lacking cellular coverage, we propose VRLS (Vehicular Reinforcement Learning Scheduler), a centralized scheduler that proactively assigns resources for out-of-coverage V2V communications \textit{before} vehicles leave the cellular network coverage. By training in simulated vehicular environments, VRLS can learn a scheduling policy that is robust and adaptable to environmental changes, thus eliminating the need for targeted (re-)training in complex real-life environments. We evaluate the performance of VRLS under varying mobility, network load, wireless channel, and resource configurations. VRLS outperforms the state-of-the-art distributed scheduling algorithm in zones without cellular network coverage by reducing the packet error rate by half in highly loaded conditions and achieving near-maximum reliability in low-load scenarios.

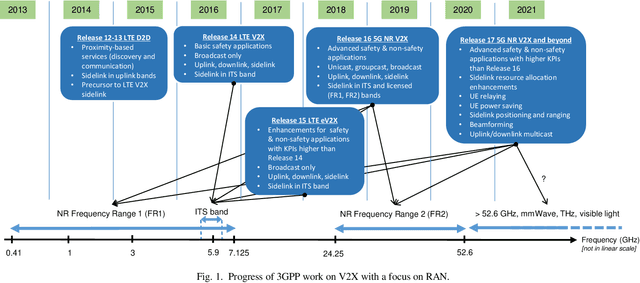

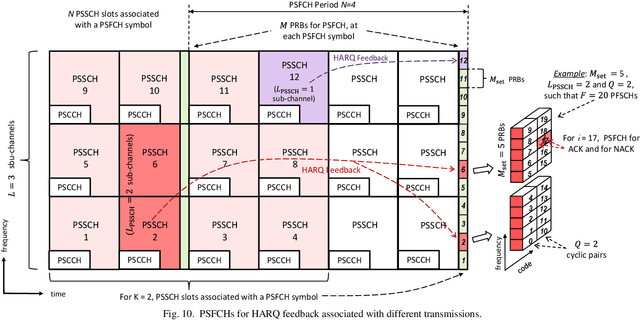

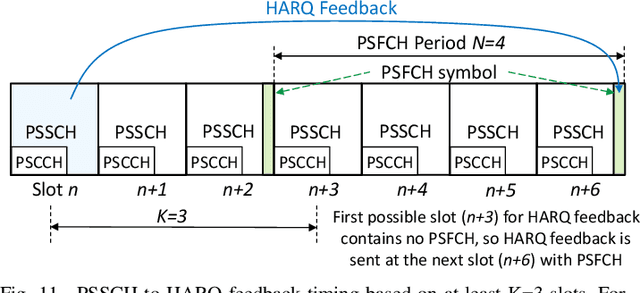

A Tutorial on 5G NR V2X Communications

Feb 08, 2021

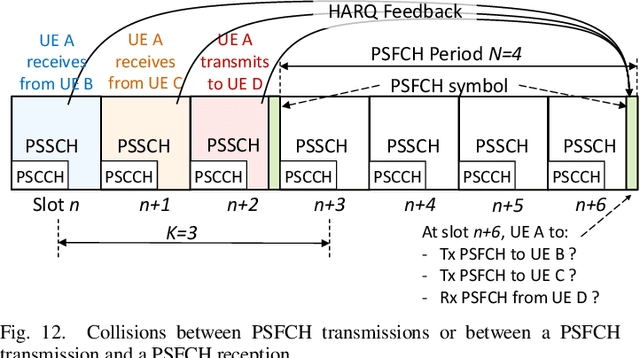

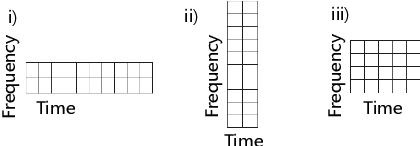

Abstract:The Third Generation Partnership Project (3GPP) has recently published its Release 16 that includes the first Vehicle to-Everything (V2X) standard based on the 5G New Radio (NR) air interface. 5G NR V2X introduces advanced functionalities on top of the 5G NR air interface to support connected and automated driving use cases with stringent requirements. This paper presents an in-depth tutorial of the 3GPP Release 16 5G NR V2X standard for V2X communications, with a particular focus on the sidelink, since it is the most significant part of 5G NR V2X. The main part of the paper is an in-depth treatment of the key aspects of 5G NR V2X: the physical layer, the resource allocation, the quality of service management, the enhancements introduced to the Uu interface and the mobility management for V2N (Vehicle to Network) communications, as well as the co-existence mechanisms between 5G NR V2X and LTE V2X. We also review the use cases, the system architecture, and describe the evaluation methodology and simulation assumptions for 5G NR V2X. Finally, we provide an outlook on possible 5G NR V2X enhancements, including those identified within Release 17.

VRLS: A Unified Reinforcement Learning Scheduler for Vehicle-to-Vehicle Communications

Jul 22, 2019

Abstract:Vehicle-to-vehicle (V2V) communications have distinct challenges that need to be taken into account when scheduling the radio resources. Although centralized schedulers (e.g., located on base stations) could be utilized to deliver high scheduling performance, they cannot be employed in case of coverage gaps. To address the issue of reliable scheduling of V2V transmissions out of coverage, we propose Vehicular Reinforcement Learning Scheduler (VRLS), a centralized scheduler that predictively assigns the resources for V2V communication while the vehicle is still in cellular network coverage. VRLS is a unified reinforcement learning (RL) solution, wherein the learning agent, the state representation, and the reward provided to the agent are applicable to different vehicular environments of interest (in terms of vehicular density, resource configuration, and wireless channel conditions). Such a unified solution eliminates the necessity of redesigning the RL components for a different environment, and facilitates transfer learning from one to another similar environment. We evaluate the performance of VRLS and show its ability to avoid collisions and half-duplex errors, and to reuse the resources better than the state of the art scheduling algorithms. We also show that pre-trained VRLS agent can adapt to different V2V environments with limited retraining, thus enabling real-world deployment in different scenarios.

Reinforcement Learning Scheduler for Vehicle-to-Vehicle Communications Outside Coverage

Apr 29, 2019

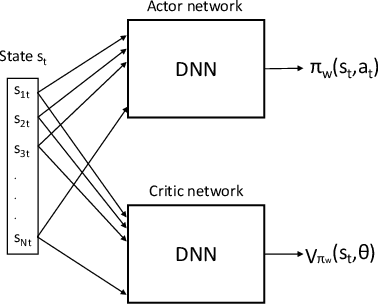

Abstract:Radio resources in vehicle-to-vehicle (V2V) communication can be scheduled either by a centralized scheduler residing in the network (e.g., a base station in case of cellular systems) or a distributed scheduler, where the resources are autonomously selected by the vehicles. The former approach yields a considerably higher resource utilization in case the network coverage is uninterrupted. However, in case of intermittent or out-of-coverage, due to not having input from centralized scheduler, vehicles need to revert to distributed scheduling. Motivated by recent advances in reinforcement learning (RL), we investigate whether a centralized learning scheduler can be taught to efficiently pre-assign the resources to vehicles for out-of-coverage V2V communication. Specifically, we use the actor-critic RL algorithm to train the centralized scheduler to provide non-interfering resources to vehicles before they enter the out-of-coverage area. Our initial results show that a RL-based scheduler can achieve performance as good as or better than the state-of-art distributed scheduler, often outperforming it. Furthermore, the learning process completes within a reasonable time (ranging from a few hundred to a few thousand epochs), thus making the RL-based scheduler a promising solution for V2V communications with intermittent network coverage.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge