Wolfgang Gerstacker

SwinLSTM Autoencoder for Temporal-Spatial-Frequency Domain CSI Compression in Massive MIMO Systems

May 07, 2025

Abstract:This study presents a parameter-light, low-complexity artificial intelligence/machine learning (AI/ML) model that enhances channel state information (CSI) feedback in wireless systems by jointly exploiting temporal, spatial, and frequency (TSF) domain correlations. While traditional frameworks use autoencoders for CSI compression at the user equipment (UE) and reconstruction at the network (NW) side in the spatial-frequency (SF) domain, massive multiple-input multiple-output (mMIMO) systems in low mobility scenarios exhibit strong temporal correlation alongside frequency and spatial correlations. An autoencoder architecture alone is insufficient to exploit the TSF domain correlation in CSI; a recurrent element is also required. To address the vanishing gradients problem, researchers in recent works have proposed state-of-the-art TSF domain CSI compression architectures that combine recurrent networks for temporal correlation exploitation with deep pre-trained autoencoders that handle SF domain CSI compression. However, this approach increases the number of parameters and computational complexity. To jointly utilize correlations across the TSF domain, we propose a novel, parameter-light, low-complexity AI/ML-based recurrent autoencoder architecture to compress CSI at the UE side and reconstruct it on the NW side while minimizing CSI feedback overhead.

Tera-SpaceCom: GNN-based Deep Reinforcement Learning for Joint Resource Allocation and Task Offloading in TeraHertz Band Space Networks

Sep 12, 2024

Abstract:Terahertz (THz) space communications (Tera-SpaceCom) is envisioned as a promising technology to enable various space science and communication applications. Mainly, the realm of Tera-SpaceCom consists of THz sensing for space exploration, data centers in space providing cloud services for space exploration tasks, and a low earth orbit (LEO) mega-constellation relaying these tasks to ground stations (GSs) or data centers via THz links. Moreover, to reduce the computational burden on data centers as well as resource consumption and latency in the relaying process, the LEO mega-constellation provides satellite edge computing (SEC) services to directly compute space exploration tasks without relaying these tasks to data centers. The LEO satellites that receive space exploration tasks offload (i.e., distribute) partial tasks to their neighboring LEO satellites, to further reduce their computational burden. However, efficient joint communication resource allocation and computing task offloading for the Tera-SpaceCom SEC network is an NP-hard mixed-integer nonlinear programming (MINLP) problem, due to the discrete nature of space exploration tasks and sub-arrays as well as the continuous nature of transmit power. To tackle this challenge, a graph neural network (GNN)-deep reinforcement learning (DRL)-based joint resource allocation and task offloading (GRANT) algorithm is proposed with the target of long-term resource efficiency (RE). Particularly, GNNs learn relationships among different satellites from their connectivity information. Furthermore, multi-agent and multi-task mechanisms cooperatively train task offloading and resource allocation. Compared with benchmark solutions, GRANT not only achieves the highest RE with relatively low latency, but also has the fewest trainable parameters and the shortest running time.

Globally Optimal Movable Antenna-Enhanced multi-user Communication: Discrete Antenna Positioning, Motion Power Consumption, and Imperfect CSI

Aug 27, 2024

Abstract:Movable antennas (MAs) represent a promising paradigm to enhance the spatial degrees of freedom of conventional multi-antenna systems by dynamically adapting the positions of antenna elements within a designated transmit area. In particular, by employing electro-mechanical MA drivers, the positions of the MA elements can be adjusted to shape a favorable spatial correlation for improving system performance. Although preliminary research has explored beamforming designs for MA systems, the intricacies of the power consumption and the precise positioning of MA elements are not well understood. Moreover, the assumption of perfect CSI adopted in the literature is impractical due to the significant pilot overhead and the extensive time to acquire perfect CSI. To address these challenges, we model the motion of MA elements through discrete steps and quantify the associated power consumption as a function of these movements. Furthermore, by leveraging the properties of the MA channel model, we introduce a novel CSI error model tailored for MA systems that facilitates robust resource allocation design. In particular, we optimize the beamforming and the MA positions at the BS to minimize the total BS power consumption, encompassing both radiated and MA motion power while guaranteeing a minimum required SINR for each user. To this end, novel algorithms exploiting the branch and bound (BnB) method are developed to obtain the optimal solution for perfect and imperfect CSI. Moreover, to support practical implementation, we propose low-complexity algorithms with guaranteed convergence by leveraging successive convex approximation (SCA). Our numerical results validate the optimality of the proposed BnB-based algorithms. Furthermore, we unveil that both proposed SCA-based algorithms approach the optimal performance within a few iterations, thus highlighting their practical advantages.

Approximate Partially Decentralized Linear EZF Precoding for Massive MU-MIMO Systems

Jul 18, 2024

Abstract:Massive multi-user multiple-input multiple-output (MU-MIMO) systems enable high spatial resolution, high spectral efficiency, and improved link reliability compared to traditional MIMO systems due to the large number of antenna elements deployed at the base station (BS). Nevertheless, conventional massive MU-MIMO BS transceiver designs rely on centralized linear precoding algorithms, which entail high interconnect data rates and a prohibitive complexity at the centralized baseband processing unit. In this paper, we consider an MU-MIMO system, where each user device is served with multiple independent data streams in the downlink. To address the aforementioned challenges, we propose a novel decentralized BS architecture, and develop a novel decentralized precoding algorithm based on eigen-zero-forcing (EZF). Our proposed approach relies on parallelizing the baseband processing tasks across multiple antenna clusters at the BS, while minimizing the interconnection requirements between the clusters, and is shown to closely approach the performance of centralized EZF.

Deep Learning-based Joint Channel Prediction and Multibeam Precoding for LEO Satellite Internet of Things

May 27, 2024

Abstract:Low earth orbit (LEO) satellite internet of things (IoT) is a promising way to achieve the global Internet of Everything, and thus has been widely recognized as an important component of sixth-generation (6G) wireless networks. Yet, due to the high-speed movement of the LEO satellite, it is challenging to acquire timely channel state information (CSI) and design effective multibeam precoding for various IoT applications. To this end, this paper provides a deep learning (DL)-based joint channel prediction and multibeam precoding scheme under adverse environments, e.g., high Doppler shift, long propagation delay, and low satellite payload. Specifically, this paper first designs a DL-based channel prediction scheme by using convolutional neural networks (CNN) and long short-term memory (LSTM), which predicts the CSI of the current time slot according to that of previous time slots. With the predicted CSI, this paper designs a DL-based robust multibeam precoding scheme by using a channel augmentation method based on variational auto-encoder (VAE). Finally, extensive simulation results confirm the effectiveness and robustness of the proposed scheme in LEO satellite IoT.

Detection Schemes with Low-Resolution ADCs and Spatial Oversampling for Transmission with Higher-Order Constellations in the Terahertz Band

Feb 07, 2024

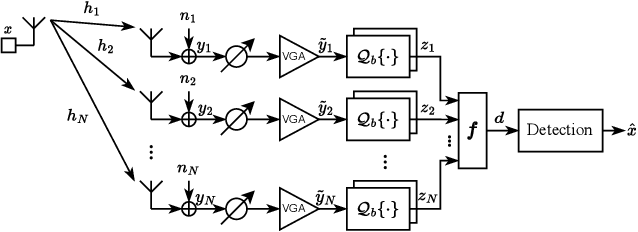

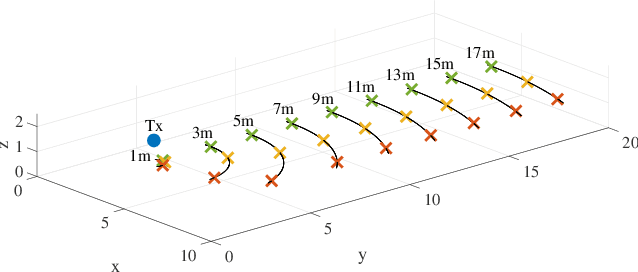

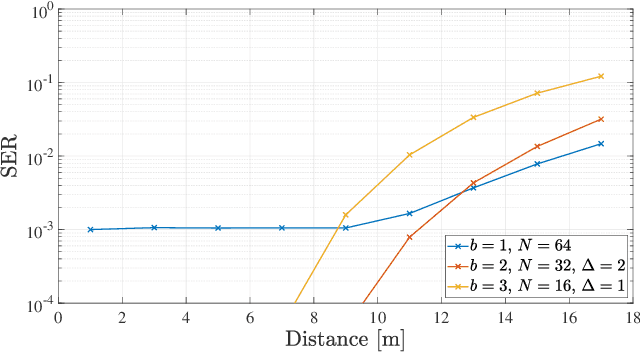

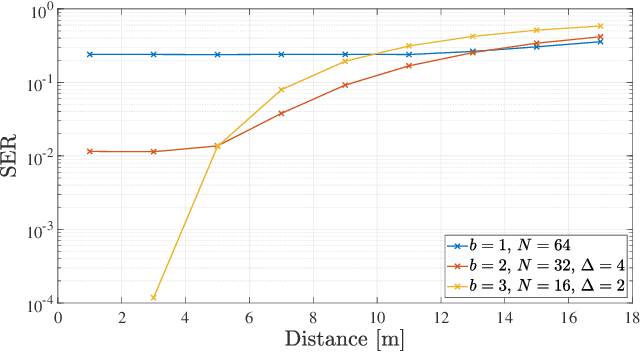

Abstract:In this work, we consider Terahertz (THz) communications with low-resolution uniform quantization and spatial oversampling at the receiver side. We compare different analog-to-digital converter (ADC) parametrizations in a fair manner by keeping the ADC power consumption constant. Here, 1-, 2-, and 3-bit quantization is investigated with different oversampling factors. We analytically compute the statistics of the detection variable, and we propose the optimal as well as several suboptimal detection schemes for arbitrary quantization resolutions. Then, we evaluate the symbol error rate (SER) of the different detectors for a 16- and a 64-ary quadrature amplitude modulation (QAM) constellation. The results indicate that there is a noticeable performance degradation of the suboptimal detection schemes compared to the optimal scheme when the constellation size is larger than the number of quantization levels. Furthermore, at low signal-to-noise ratios (SNRs), 1-bit quantization outperforms both 2- and 3-bit quantization, even when employing higher-order constellations. We confirm our analytical results by Monte Carlo simulations. Both a pure line-of-sight (LoS) and a more realistically modeled indoor THz channel are considered. Then, we optimize the input signal constellation with respect to SER for 1-bit quantization. The results show that the minimum SER can be lowered significantly for 16-QAM by increasing the distance between the inner and outer points of the input constellation. For larger constellations, however, the achievable reduction of the minimum SER is much smaller compared to 16-QAM.
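
To make the notion of low-resolution uniform quantization concrete, the following is a minimal sketch of a mid-rise uniform quantizer applied separately to the in-phase and quadrature components of a received sample. The function names, the clipping level `clip`, and the mid-rise convention are assumptions made for illustration; the abstract does not specify the quantizer parametrization used in the paper.

```python
def uniform_quantize(x, bits, clip=1.0):
    """Mid-rise uniform quantizer with 2**bits levels on [-clip, clip].

    Illustrative only: the clipping level and the mid-rise convention
    are assumptions, not taken from the paper.
    """
    levels = 2 ** bits
    step = 2.0 * clip / levels
    # Index of the quantization cell, clamped so that out-of-range
    # inputs saturate at the outermost cells.
    idx = int((x + clip) // step)
    idx = max(0, min(levels - 1, idx))
    # The reconstruction value is the midpoint of the cell.
    return -clip + (idx + 0.5) * step


def quantize_iq(sample, bits, clip=1.0):
    """Quantize the real and imaginary parts of a complex sample independently."""
    return complex(uniform_quantize(sample.real, bits, clip),
                   uniform_quantize(sample.imag, bits, clip))


if __name__ == "__main__":
    for bits in (1, 2, 3):
        print(bits, quantize_iq(0.3 - 0.8j, bits))
```

With `bits=1` only the sign of each component survives, which is why a 16- or 64-QAM constellation has more points than a single quantized sample can distinguish.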

Time-based vs. Fingerprinting-based Positioning Using Artificial Neural Networks

Dec 04, 2023

Abstract:High-accuracy positioning has gained significant interest for many use-cases across various domains such as industrial internet of things (IIoT), healthcare and entertainment. Radio frequency (RF) measurements are widely utilized for user localization. However, challenging radio conditions such as non-line-of-sight (NLOS) and multipath propagation can deteriorate the positioning accuracy. Machine learning (ML)-based estimators have been proposed to overcome these challenges. RF measurements can be utilized for positioning in multiple ways resulting in time-based, angle-based and fingerprinting-based methods. Different methods, however, impose different implementation requirements on the system, and may perform differently in terms of accuracy for a given setting. In this paper, we use artificial neural networks (ANNs) to realize time-of-arrival (ToA)-based and channel impulse response (CIR) fingerprinting-based positioning. We compare their performance for different indoor environments based on real-world ultra-wideband (UWB) measurements. We first show that using ML techniques helps to improve the estimation accuracy compared to conventional techniques for time-based positioning. When comparing time-based and fingerprinting schemes using ANNs, we show that the favorable method in terms of positioning accuracy differs between environments, where the accuracy is affected not only by the radio propagation conditions but also by the density and distribution of reference user locations used for fingerprinting.
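
As background for the time-based approach, the sketch below shows one common conventional technique: linearized least-squares multilateration from ToA measurements in two dimensions. It is not the ANN-based estimator studied in the paper, and the abstract does not say which conventional techniques were used as a baseline. The function name `toa_position_2d`, the anchor layout, and the assumption of synchronized, error-free ToAs are all illustrative.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def toa_position_2d(anchors, toas):
    """Estimate a 2D position from ToAs to known anchors (hypothetical helper).

    Each ToA gives a range d_i = c * t_i. Subtracting the range equation of
    anchor 0 from that of anchor i removes the quadratic terms and leaves
        2(x_i - x_0) x + 2(y_i - y_0) y
            = d_0^2 - d_i^2 + x_i^2 + y_i^2 - x_0^2 - y_0^2,
    which is solved in the least-squares sense via the normal equations.
    """
    ranges = [SPEED_OF_LIGHT * t for t in toas]
    (x0, y0), d0 = anchors[0], ranges[0]
    rows, rhs = [], []
    for (xi, yi), di in zip(anchors[1:], ranges[1:]):
        rows.append((2.0 * (xi - x0), 2.0 * (yi - y0)))
        rhs.append(d0 ** 2 - di ** 2 + xi ** 2 + yi ** 2 - x0 ** 2 - y0 ** 2)
    # Normal equations (A^T A) p = A^T b for the two unknowns.
    s11 = sum(a * a for a, _ in rows)
    s12 = sum(a * b for a, b in rows)
    s22 = sum(b * b for _, b in rows)
    t1 = sum(a * r for (a, _), r in zip(rows, rhs))
    t2 = sum(b * r for (_, b), r in zip(rows, rhs))
    det = s11 * s22 - s12 * s12
    if abs(det) < 1e-12:
        raise ValueError("anchor geometry is degenerate (e.g. collinear anchors)")
    return ((s22 * t1 - s12 * t2) / det, (s11 * t2 - s12 * t1) / det)


if __name__ == "__main__":
    anchors = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0), (10.0, 10.0)]
    true_pos = (3.0, 4.0)
    toas = [math.hypot(true_pos[0] - ax, true_pos[1] - ay) / SPEED_OF_LIGHT
            for ax, ay in anchors]
    print(toa_position_2d(anchors, toas))
```

Under NLOS or multipath conditions the measured ToAs are biased, so the ranges fed into such a solver are wrong; this is the kind of degradation that learned estimators aim to mitigate.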

Movable Antenna-Enhanced Multiuser Communication: Optimal Discrete Antenna Positioning and Beamforming

Aug 04, 2023

Abstract:Movable antennas (MAs) are a promising paradigm to enhance the spatial degrees of freedom of conventional multi-antenna systems by flexibly adapting the positions of the antenna elements within a given transmit area. In this paper, we model the motion of the MA elements as discrete movements and study the corresponding resource allocation problem for MA-enabled multiuser multiple-input single-output (MISO) communication systems. Specifically, we jointly optimize the beamforming and the MA positions at the base station (BS) for the minimization of the total transmit power while guaranteeing the minimum required signal-to-interference-plus-noise ratio (SINR) of each individual user. To obtain the globally optimal solution to the formulated resource allocation problem, we develop an iterative algorithm capitalizing on the generalized Benders decomposition with guaranteed convergence. Our numerical results demonstrate that the proposed MA-enabled communication system can significantly reduce the BS transmit power and the number of antenna elements needed to achieve a desired performance compared to state-of-the-art techniques, such as antenna selection. Furthermore, we observe that refining the step size of the MA motion driver improves performance at the expense of a higher computational complexity.
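
The constraint and objective named in this abstract can be illustrated with the standard multiuser MISO expressions: the SINR of user $k$ is $|h_k^H w_k|^2 / (\sum_{j \neq k} |h_k^H w_j|^2 + \sigma^2)$, and the transmit power is $\sum_k \|w_k\|^2$. The sketch below only evaluates these quantities for given channels and beamformers; it does not implement the paper's optimization, and the function names and toy values are assumptions.

```python
def sinr_per_user(channels, beamformers, noise_power):
    """Return the SINR of each user in a multiuser MISO downlink.

    channels[k] and beamformers[k] are lists of complex numbers, one per
    BS antenna. Textbook SINR definition, used here for illustration.
    """
    sinrs = []
    for k, h_k in enumerate(channels):
        # Received power at user k from each beam j: |h_k^H w_j|^2.
        powers = [abs(sum(h.conjugate() * w for h, w in zip(h_k, w_j))) ** 2
                  for w_j in beamformers]
        signal = powers[k]
        interference = sum(powers) - signal
        sinrs.append(signal / (interference + noise_power))
    return sinrs


def total_transmit_power(beamformers):
    """Sum of squared beamformer norms, i.e. the radiated BS power."""
    return sum(abs(w) ** 2 for w_j in beamformers for w in w_j)


if __name__ == "__main__":
    # Toy example: two users, two antennas, orthogonal channels.
    channels = [[1 + 0j, 0j], [0j, 1 + 0j]]
    beamformers = [[2 + 0j, 0j], [0j, 1 + 0j]]
    sinrs = sinr_per_user(channels, beamformers, noise_power=0.5)
    print(sinrs, total_transmit_power(beamformers))
    print("meets target 2.0:", all(s >= 2.0 for s in sinrs))
```

In an MA system the entries of `channels` depend on the antenna positions, which is why positions and beamformers are optimized jointly.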

Time of Arrival Error Estimation for Positioning Using Convolutional Neural Networks

Jan 11, 2023

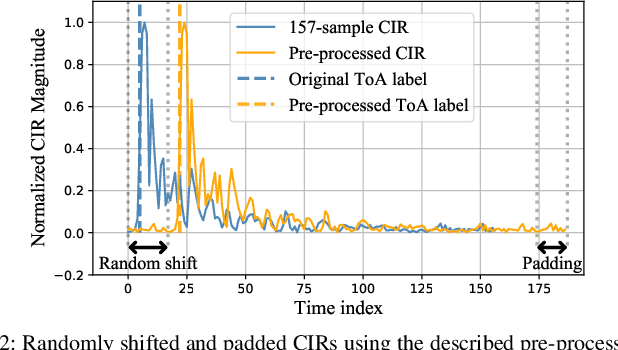

Abstract:Wireless high-accuracy positioning has recently attracted growing research interest due to the diversified nature of applications such as industrial asset tracking, autonomous driving, process automation, and many more. However, obtaining highly accurate location information is hampered by challenges due to the radio environment. A major source of error for time-based positioning methods is inaccurate time-of-arrival (ToA) or range estimation. Existing machine learning-based solutions to mitigate such errors rely on propagation environment classification hindered by a low number of classes, employ a set of features representing channel measurements only to a limited extent, or account for only device-specific proprietary methods of ToA estimation. In this paper, we propose convolutional neural networks (CNNs) to estimate and mitigate the errors of a variety of ToA estimation methods utilizing channel impulse responses (CIRs). Based on real-world measurements from two independent campaigns, the proposed method yields significant improvements in ranging accuracy (up to 37%) of the state-of-the-art ToA estimators, often eliminating the need to optimize the underlying conventional methods.

Matching Pursuit Based Scheduling for Over-the-Air Federated Learning

Jun 14, 2022

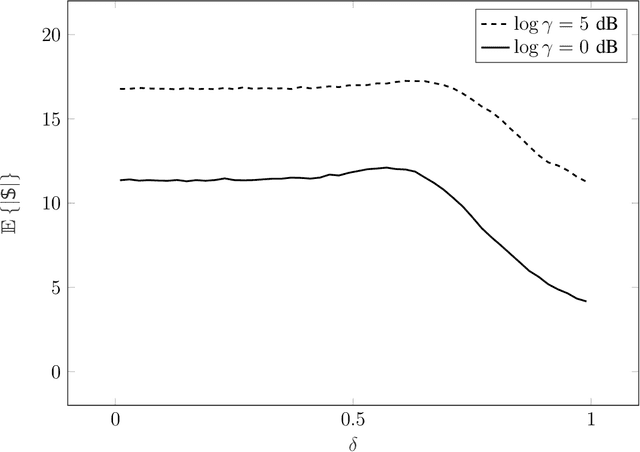

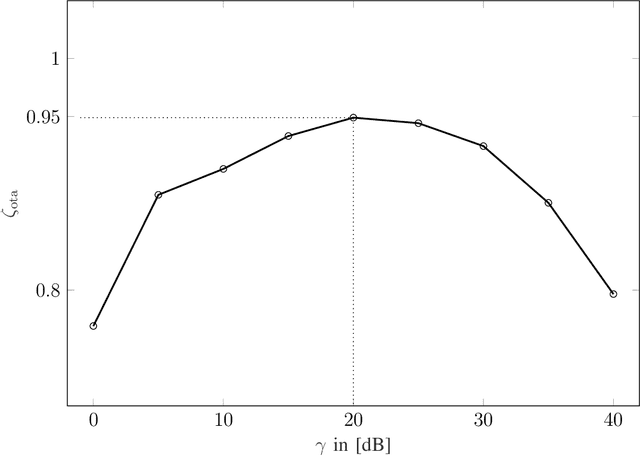

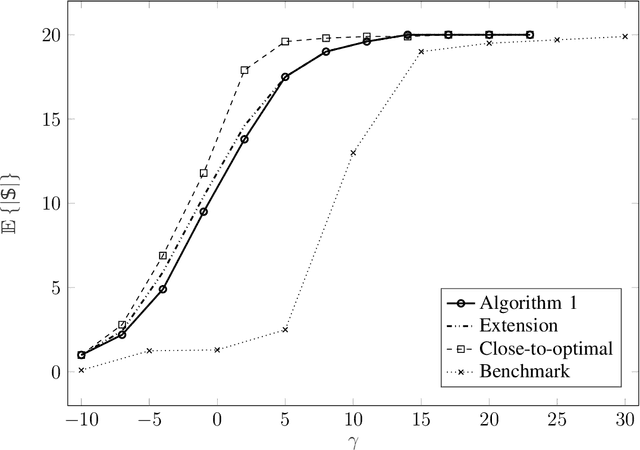

Abstract:This paper develops a class of low-complexity device scheduling algorithms for over-the-air federated learning via the method of matching pursuit. The proposed scheme tracks closely the close-to-optimal performance achieved by difference-of-convex programming, and outperforms significantly the well-known benchmark algorithms based on convex relaxation. Compared to the state-of-the-art, the proposed scheme poses a drastically lower computational load on the system: For $K$ devices and $N$ antennas at the parameter server, the benchmark complexity scales with $\left(N^2+K\right)^3 + N^6$ while the complexity of the proposed scheme scales with $K^p N^q$ for some $0 < p,q \leq 2$. The efficiency of the proposed scheme is confirmed via numerical experiments on the CIFAR-10 dataset.
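
To get a feel for the two scaling laws quoted above, the sketch below evaluates $(N^2+K)^3 + N^6$ against $K^p N^q$ at the largest admissible exponents $p = q = 2$. The function names are hypothetical, and the hidden constants of both scaling laws are ignored, so the ratio is only indicative of the growth trend, not of actual run time.

```python
def benchmark_scaling(K, N):
    """Scaling term of the benchmark complexity: (N^2 + K)^3 + N^6."""
    return (N ** 2 + K) ** 3 + N ** 6


def proposed_scaling(K, N, p=2.0, q=2.0):
    """Scaling term of the proposed scheme: K^p * N^q with 0 < p, q <= 2.

    p = q = 2 is the worst case allowed by the stated bound.
    """
    if not (0 < p <= 2 and 0 < q <= 2):
        raise ValueError("exponents must satisfy 0 < p, q <= 2")
    return K ** p * N ** q


if __name__ == "__main__":
    for K, N in [(20, 10), (50, 20), (100, 50)]:
        b = benchmark_scaling(K, N)
        s = proposed_scaling(K, N)
        print(f"K={K:4d} N={N:3d} benchmark={b:.3e} proposed={s:.3e} ratio={b / s:.1f}")
```

Because the benchmark term grows as $N^6$ while the proposed term grows at most as $N^2$, the gap widens quickly as the number of antennas at the parameter server increases.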
