Eduard A. Jorswieck

Enhancing Energy and Spectral Efficiency in IoT-Cellular Networks via Active SIM-Equipped LEO Satellites

Aug 23, 2025

Abstract: This paper investigates a low Earth orbit (LEO) satellite communication system enhanced by an active stacked intelligent metasurface (ASIM), mounted on the backplate of the satellite solar panels to make efficient use of limited onboard space and to relax the requirements on the main satellite power amplifier. The system serves multiple ground users via rate-splitting multiple access (RSMA) and IoT devices through a symbiotic radio network. Multi-layer sequential processing in the ASIM improves effective channel gains and suppresses inter-user interference, outperforming active RIS and beyond-diagonal RIS designs. Three optimization approaches are evaluated: block coordinate descent with successive convex approximation (BCD-SCA), model-assisted multi-agent constraint soft actor-critic (MA-CSAC), and multi-constraint proximal policy optimization (MCPPO). Simulation results show that BCD-SCA converges quickly and stably in convex scenarios without requiring learning, MCPPO achieves rapid initial convergence with moderate stability, and MA-CSAC attains the highest long-term spectral and energy efficiency in large-scale networks. Energy-spectral efficiency trade-offs are analyzed for different numbers of ASIM elements and satellite antennas and for different transmit powers. Overall, the study demonstrates that integrating a multi-layer ASIM with suitable optimization algorithms offers a scalable, energy-efficient, and high-performance solution for next-generation LEO satellite communications.
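
The abstract names RSMA without spelling out how it shares the channel. As a rough illustration only, here is a minimal sketch of the textbook one-layer RSMA sum rate: each message is split into a common part, carried by one shared stream that every user decodes first, and a private part decoded after successive interference cancellation (SIC). The function and parameter names are hypothetical; perfect SIC and unit noise power are assumed, and the ASIM, the symbiotic radio link, and the paper's optimization algorithms are not modeled.

```python
import math

def inner(h, w):
    """Return h^H w for complex vectors given as Python lists."""
    return sum(hi.conjugate() * wi for hi, wi in zip(h, w))

def rsma_sum_rate(channels, p_common, p_private, noise=1.0):
    """One-layer RSMA sum rate in bits/s/Hz, assuming perfect SIC.

    channels:  list of K channel vectors h_k (lists of complex)
    p_common:  precoder of the common stream (list of complex)
    p_private: list of K private-stream precoders
    """
    common_rates, private_rates = [], []
    for k, h in enumerate(channels):
        private_powers = [abs(inner(h, p)) ** 2 for p in p_private]
        interference = sum(private_powers) + noise
        # Common stream is decoded first, treating all private streams as noise.
        sinr_c = abs(inner(h, p_common)) ** 2 / interference
        common_rates.append(math.log2(1 + sinr_c))
        # After SIC removes the common stream, user k decodes its private stream.
        sinr_p = private_powers[k] / (interference - private_powers[k])
        private_rates.append(math.log2(1 + sinr_p))
    # Every user must decode the common stream, so the weakest user limits it.
    return min(common_rates) + sum(private_rates)
```

Setting `p_common` to all zeros reduces the expression to the ordinary SDMA sum rate, which is one way to see RSMA as a generalization of SDMA.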

RIS-Assisted NOMA with Partial CSI and Mutual Coupling: A Machine Learning Approach

Aug 12, 2025

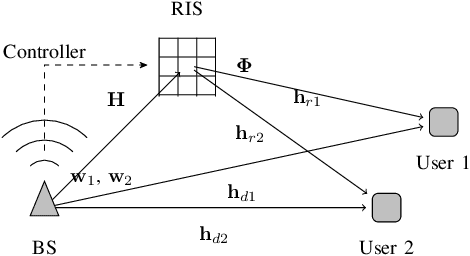

Abstract: Non-orthogonal multiple access (NOMA) is a promising multiple access technique. Its performance depends strongly on the properties of the wireless channel, which can be enhanced by reconfigurable intelligent surfaces (RISs). In this paper, we jointly optimize base station (BS) precoding and RIS configuration with unsupervised machine learning (ML), which searches for the optimal solution autonomously. In particular, we propose RISnet, a dedicated neural network (NN) architecture inspired by domain knowledge in communication. Compared to the state of the art, the proposed approach combines analytically optimal BS precoding with ML-enabled RIS configuration, scales to more than 1000 RIS elements, requires little channel state information (CSI) as input, and accounts for the mutual coupling between RIS elements. Beyond the considered problem, this work is an early contribution to domain-knowledge-enabled ML, which exploits the domain expertise of communication systems to design better approaches than general ML methods.

Power Efficient Cooperative Communication within IIoT Subnetworks: Relay or RIS?

Nov 19, 2024

Abstract: The forthcoming sixth-generation (6G) industrial Internet-of-Things (IIoT) subnetworks are expected to support ultra-fast control communication cycles for numerous IoT devices. However, meeting the stringent requirements for low latency and high reliability poses significant challenges, particularly due to signal fading and physical obstructions. In this paper, we propose novel time division multiple access (TDMA) and frequency division multiple access (FDMA) communication protocols for cooperative transmission in IIoT subnetworks. These protocols leverage secondary access points (sAPs) as Decode-and-Forward (DF) and Amplify-and-Forward (AF) relays, enabling shorter cycle times while minimizing overall transmit power. A classification mechanism determines whether the highest-gain link for each IoT device is a single-hop or two-hop connection, and selects the corresponding sAP. We then formulate the problem of minimizing transmit power for DF/AF relaying while adhering to the delay and maximum power constraints. In the FDMA case, an additional constraint is introduced for bandwidth allocation to IoT devices during the first and second phases of cooperative transmission. To tackle the nonconvex problem, we employ the sequential parametric convex approximation (SPCA) method. We extend our analysis to a system model with reconfigurable intelligent surfaces (RISs), enabling transmission through direct and RIS-assisted channels, and optimizing for a multi-RIS scenario for comparative analysis. Simulation results show that our cooperative communication approach reduces the emitted power by up to 7.5 dB while maintaining an outage probability and a resource overflow rate below $10^{-6}$. While the RIS-based solution achieves greater power savings, the relay-based protocol outperforms RIS in terms of outage probability.
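
The classification step (direct link versus two-hop link through the best sAP) can be illustrated with a minimal sketch that compares end-to-end SNRs. It assumes the standard textbook expressions, min(γ1, γ2) for a two-hop DF path and γ1γ2/(γ1 + γ2 + 1) for a variable-gain AF path, which may differ from the paper's exact model. All names are hypothetical, SNRs are linear, and the two-phase time or bandwidth cost and the SPCA power minimization are not modeled.

```python
def df_snr(snr_hop1, snr_hop2):
    """End-to-end SNR of a two-hop decode-and-forward path (no direct-link combining)."""
    return min(snr_hop1, snr_hop2)

def af_snr(snr_hop1, snr_hop2):
    """End-to-end SNR of a two-hop variable-gain amplify-and-forward path."""
    return snr_hop1 * snr_hop2 / (snr_hop1 + snr_hop2 + 1)

def select_link(snr_direct, snr_ap_to_sap, snr_sap_to_dev, mode="DF"):
    """Classify one IoT device as served directly or via its best sAP.

    snr_ap_to_sap[i] and snr_sap_to_dev[i] are the linear SNRs of the two hops
    through sAP i. Returns ("direct", None) or ("relay", index_of_best_sap).
    """
    combiners = {"DF": df_snr, "AF": af_snr}
    if mode not in combiners:
        raise ValueError("mode must be 'DF' or 'AF'")
    combine = combiners[mode]
    best_idx, best_snr = None, snr_direct
    for i, (s1, s2) in enumerate(zip(snr_ap_to_sap, snr_sap_to_dev)):
        s = combine(s1, s2)
        if s > best_snr:  # keep the highest-gain option seen so far
            best_idx, best_snr = i, s
    return ("direct", None) if best_idx is None else ("relay", best_idx)
```

For example, with a direct SNR of 2 and sAP hop SNRs of (10, 5) and (50, 40), the DF rule picks sAP 1, whose bottleneck hop SNR of 40 beats both alternatives.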

Distributed Signal Processing for Extremely Large-Scale Antenna Array Systems: State-of-the-Art and Future Directions

Jul 23, 2024

Abstract: Extremely large-scale antenna arrays (ELAA) play a critical role in enabling the functionalities of next-generation wireless communication systems. However, as the number of antennas increases, ELAA systems face significant bottlenecks, such as excessive interconnection costs and high computational complexity. Efficient distributed signal processing (SP) algorithms show great promise in overcoming these challenges. In this paper, we provide a comprehensive overview of distributed SP algorithms for ELAA systems, tailored to address these bottlenecks. We start by presenting three representative forms of ELAA systems: single-base station ELAA systems, coordinated distributed antenna systems, and ELAA systems integrated with emerging technologies. For each form, we review the associated distributed SP algorithms in the literature. Additionally, we outline several important future research directions that are essential for improving the performance and practicality of ELAA systems.
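
To make the interconnection bottleneck concrete, here is a minimal sketch of one simple distributed scheme, shown as an illustration rather than as an algorithm taken from the survey: each antenna cluster compresses its data into the local Gram matrix H_c^H H_c and matched-filter output H_c^H y_c, and a central unit sums these and applies LMMSE detection. It assumes an uplink model y = Hx + n with unit-power symbols and a star topology; the function names and the cluster split are hypothetical. Because the sums equal the centralized H^H H and H^H y, the result matches centralized LMMSE while each cluster sends only K x K and K x 1 quantities.

```python
def gram_and_mf(H_rows, y):
    """Local statistics of one antenna cluster.

    H_rows: M_c rows, each a list of K complex channel coefficients.
    y:      M_c received samples for the same antennas.
    Returns (G_c, z_c) with G_c = H_c^H H_c (K x K) and z_c = H_c^H y_c (K).
    """
    K = len(H_rows[0])
    G = [[sum(r[i].conjugate() * r[j] for r in H_rows) for j in range(K)] for i in range(K)]
    z = [sum(r[i].conjugate() * yi for r, yi in zip(H_rows, y)) for i in range(K)]
    return G, z

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting (complex-safe)."""
    n = len(b)
    M = [list(row) + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0j] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

def distributed_lmmse(clusters, noise_var):
    """Fuse per-cluster statistics at a central unit and apply LMMSE detection.

    clusters: list of (H_c, y_c) pairs, one per antenna cluster.
    Computes (sum_c G_c + noise_var * I)^{-1} (sum_c z_c).
    """
    K = len(clusters[0][0][0])
    G = [[0j] * K for _ in range(K)]
    z = [0j] * K
    for H_c, y_c in clusters:
        G_c, z_c = gram_and_mf(H_c, y_c)
        for i in range(K):
            z[i] += z_c[i]
            for j in range(K):
                G[i][j] += G_c[i][j]
    for i in range(K):
        G[i][i] += noise_var
    return solve(G, z)
```

Passing a single cluster containing all antennas gives the centralized detector, which is a convenient sanity check for the distributed version.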

RISnet: A Domain-Knowledge Driven Neural Network Architecture for RIS Optimization with Mutual Coupling and Partial CSI

Mar 06, 2024

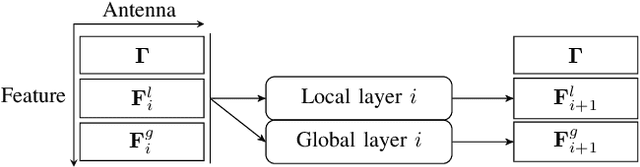

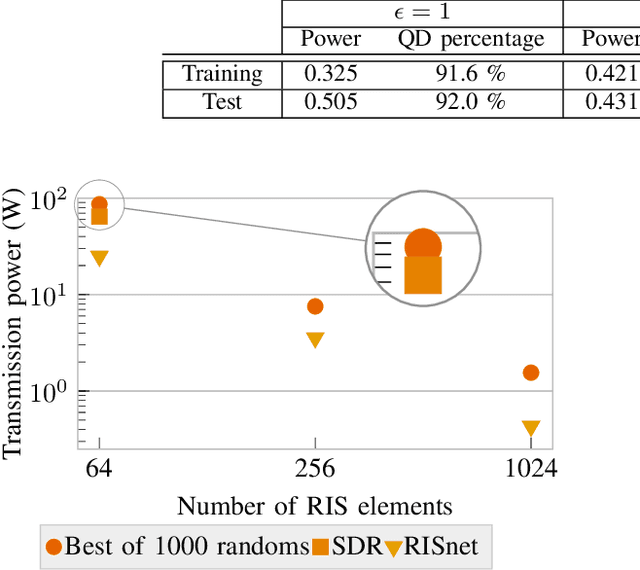

Abstract: Multiple access (MA) techniques are cornerstones of wireless communications. Their performance depends on the channel properties, which can be improved by reconfigurable intelligent surfaces (RISs). In this work, we jointly optimize MA precoding at the base station (BS) and the RIS configuration. We tackle three difficulties: mutual coupling between RIS elements, scalability to more than 1000 RIS elements, and channel estimation. We first derive an RIS-assisted channel model that accounts for mutual coupling, and then propose an unsupervised machine learning (ML) approach to optimize the RIS. In particular, we design a dedicated neural network (NN) architecture, RISnet, with good scalability and the desired symmetry. Moreover, since analytical precoding schemes already exist, we combine ML-enabled RIS configuration with analytical precoding at the BS. Furthermore, we propose another variant of RISnet that requires the channel state information (CSI) of only a small portion of the RIS elements (in this work, 16 out of 1296 elements) if the channel comprises a few specular propagation paths. More generally, this work is an early contribution to combining ML techniques with domain knowledge in communication for NN architecture design. Compared to generic ML, problem-specific ML can achieve higher performance and lower complexity while preserving the desired symmetry.

Energy-Efficient Power Allocation in Cell-Free Massive MIMO via Graph Neural Networks

Feb 01, 2024

Abstract: Cell-free massive multiple-input multiple-output (CF-mMIMO) systems are a promising solution for enhancing performance in 6G wireless networks. Their distributed architecture makes them highly reliable, provides sufficient coverage, and allows higher performance than cellular networks. Energy efficiency (EE) is an important metric: improving it reduces operating costs and is also better for the environment. In this work, we optimize the downlink EE with maximum ratio transmission (MRT) precoding and power allocation. Our aim is a less complex, distributed, and scalable solution. To achieve this, we apply unsupervised machine learning (ML) with a permutation-equivariant architecture and use a non-convex objective function with multiple local optima. We compare the performance with centralized and computationally expensive successive convex approximation (SCA). The results indicate that the proposed approach can outperform this baseline with significantly less computation time.
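
Permutation equivariance means that reordering the input entities (for example, access points or users) reorders the outputs in exactly the same way, which lets one trained model serve networks of different sizes. A minimal sketch of one such layer in the DeepSets style follows: every entity gets the same transform of its own features plus a term computed from the mean over all entities. The layer form, the mean pooling, and all weights are assumptions for illustration, not the architecture used in the paper.

```python
def matvec(W, v):
    """Multiply matrix W (list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def pe_layer(X, W_self, W_mean, bias):
    """Permutation-equivariant layer: out_i = ReLU(W_self x_i + W_mean mean(X) + b).

    X: list of n feature vectors, one per entity.
    The pooled term is identical for every entity and does not depend on the
    input order, so permuting the rows of X permutes the rows of the output.
    """
    n = len(X)
    mean = [sum(col) / n for col in zip(*X)]
    shared = matvec(W_mean, mean)
    out = []
    for x in X:
        own = matvec(W_self, x)
        out.append([max(0.0, a + s + b) for a, s, b in zip(own, shared, bias)])
    return out
```

Because the weights are shared across entities, the number of trainable parameters is independent of the number of entities, which is what makes such layers attractive for scalable power allocation.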

A Survey of Advances in Optimization Methods for Wireless Communication System Design

Jan 22, 2024

Abstract: Mathematical optimization is now widely regarded as an indispensable modeling and solution tool for the design of wireless communications systems. While optimization has played a significant role in the revolutionary progress in wireless communication and networking technologies from 1G to 5G and onto the future 6G, the innovations in wireless technologies have also substantially transformed the nature of the underlying mathematical optimization problems upon which the system designs are based and have sparked significant innovations in the development of methodologies to understand, to analyze, and to solve those problems. In this paper, we provide a comprehensive survey of recent advances in mathematical optimization theory and algorithms for wireless communication system design. We begin by illustrating common features of mathematical optimization problems arising in wireless communication system design. We discuss various scenarios and use cases and their associated mathematical structures from an optimization perspective. We then provide an overview of recent advances in mathematical optimization theory and algorithms, from nonconvex optimization, global optimization, and integer programming, to distributed optimization and learning-based optimization. The key to successful solution of mathematical optimization problems is in carefully choosing and/or developing suitable optimization algorithms (or neural network architectures) that can exploit the underlying problem structure. We conclude the paper by identifying several open research challenges and outlining future research directions.

Non-Orthogonal Multiple Access Assisted by Reconfigurable Intelligent Surface Using Unsupervised Machine Learning

May 01, 2023

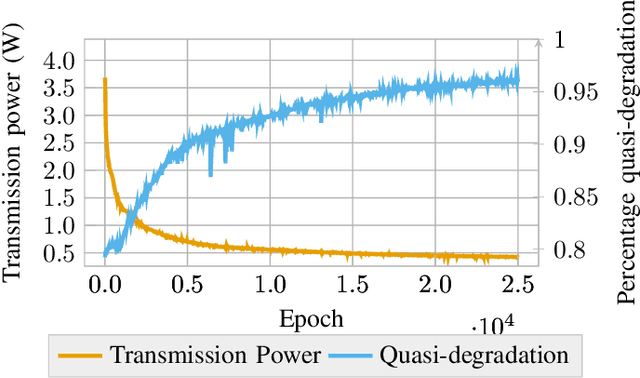

Abstract: Non-orthogonal multiple access (NOMA) with a multi-antenna base station (BS) is a promising technology for next-generation wireless communication, with high potential in terms of performance and user fairness. Since the performance of NOMA depends on the channel conditions, NOMA can be combined with a reconfigurable intelligent surface (RIS), a large passive antenna array that can optimize the wireless channel. However, the high dimensionality makes RIS optimization a complicated problem. In this work, we propose a machine learning approach to the joint optimization of precoding and RIS configuration. We use the RIS to realize quasi-degradation of the channel, which allows optimal precoding in closed form. We use RISnet, a neural network architecture designed specifically for RIS optimization. The proposed solution outperforms existing works in the literature in terms of both performance and computation time.
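
The dependence of NOMA on channel conditions comes from its successive interference cancellation (SIC) decoding order. A minimal sketch for the simplest case, two users and a single-antenna BS (a deliberate simplification of the multi-antenna setting above), computes the achievable rates: the weak user treats the strong user's signal as noise, while the strong user first decodes and removes the weak user's signal. The names and the power-split parameter are hypothetical; perfect SIC is assumed, and neither quasi-degradation nor the RIS is modeled.

```python
import math

def noma_two_user_rates(p_total, alpha_weak, g_strong, g_weak, noise=1.0):
    """Rates (bits/s/Hz) of two-user downlink power-domain NOMA with perfect SIC.

    alpha_weak: fraction of p_total allotted to the weak user (typically > 0.5).
    g_strong, g_weak: channel power gains |h|^2, with g_strong >= g_weak.
    Returns (rate_strong, rate_weak).
    """
    if g_strong < g_weak:
        raise ValueError("the 'strong' user must have the larger channel gain")
    p_w = alpha_weak * p_total
    p_s = (1 - alpha_weak) * p_total
    # Weak user decodes its own signal, treating the strong user's signal as noise.
    r_weak = math.log2(1 + p_w * g_weak / (p_s * g_weak + noise))
    # Strong user decodes and cancels the weak user's signal first (its SINR for
    # that signal is at least the weak user's, since g_strong >= g_weak), then
    # decodes its own signal free of intra-pair interference.
    r_strong = math.log2(1 + p_s * g_strong / noise)
    return r_strong, r_weak
```

For instance, with a total power of 8, a 75 % share for the weak user, and gains 1.5 and 0.5, the strong user gets log2(4) = 2 bits/s/Hz and the weak user log2(2.5) bits/s/Hz.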

RISnet: A Scalable Approach for Reconfigurable Intelligent Surface Optimization with Partial CSI

May 01, 2023

Abstract: The reconfigurable intelligent surface (RIS) is a promising technology that enables wireless communication systems to achieve improved performance by intelligently manipulating wireless channels. In this paper, we consider the sum-rate maximization problem in a downlink multi-user multiple-input single-output (MISO) channel via space-division multiple access (SDMA). Two major challenges of this problem are the high dimensionality due to the large number of RIS elements and the difficulty of obtaining full channel state information (CSI), which many algorithms proposed in the literature assume to be known. Instead, we propose a hybrid machine learning approach that uses the weighted minimum mean squared error (WMMSE) precoder at the base station (BS) and a dedicated neural network (NN) architecture, RISnet, for RIS configuration. RISnet scales well enough to optimize 1296 RIS elements and requires partial CSI of only 16 RIS elements as input. We show that it achieves high performance with low channel-estimation requirements for geometric channel models obtained with ray-tracing simulation. Unsupervised learning lets RISnet find an optimized RIS configuration by itself. Numerical results show that a trained model configures the RIS with low computational effort, considerably outperforms the baselines, and can work with discrete phase shifts.
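
To illustrate what an RIS configuration and discrete phase shifts mean, here is a minimal sketch for a single-antenna BS and a single user, far simpler than the multi-user SDMA setting above and not RISnet itself. The effective channel is h_d + Σ_n c_n e^{jθ_n}, where c_n is the cascaded BS-RIS-user coefficient of element n. Co-phasing every reflected path with the direct path maximizes |h_eff|, and b-bit hardware then rounds each phase to the nearest of 2^b levels. All names are hypothetical.

```python
import cmath
import math

def effective_channel(h_direct, cascade, phases):
    """Return h_d + sum_n c_n * exp(j * theta_n) for cascaded coefficients c_n."""
    return h_direct + sum(c * cmath.exp(1j * t) for c, t in zip(cascade, phases))

def aligned_phases(h_direct, cascade):
    """Continuous phases that co-phase every reflected path with the direct path."""
    return [cmath.phase(h_direct) - cmath.phase(c) for c in cascade]

def quantize_phases(phases, bits):
    """Round each phase to the nearest of 2**bits uniform levels in [0, 2*pi)."""
    levels = 2 ** bits
    step = 2 * math.pi / levels
    return [(round(t / step) % levels) * step for t in phases]
```

With co-phasing, |h_eff| reaches the upper bound |h_d| + Σ|c_n|. After b-bit rounding, each path is off by at most π/2^b, so |h_eff| stays at least |h_d| + cos(π/2^b) Σ|c_n|; already 2 or 3 bits keep most of the array gain.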

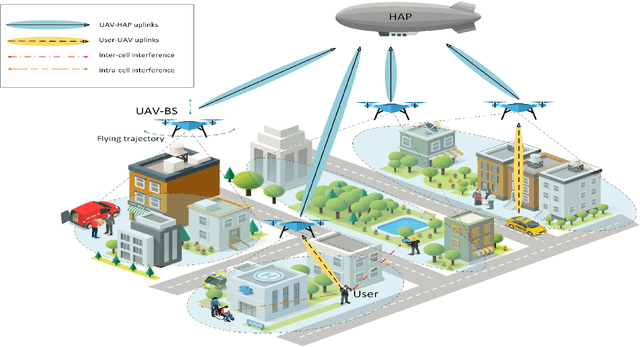

AI-based Radio and Computing Resource Allocation and Path Planning in NOMA NTNs: AoI Minimization under CSI Uncertainty

May 01, 2023

Abstract: In this paper, we develop a hierarchical aerial computing framework composed of a high altitude platform (HAP) and unmanned aerial vehicles (UAVs) to compute the fully offloaded tasks of terrestrial mobile users, which are connected through uplink non-orthogonal multiple access (UL-NOMA). In particular, we formulate a problem that minimizes the age of information (AoI) of all users with elastic tasks by adjusting the UAV trajectories and the resource allocation on both the UAVs and the HAP, subject to channel state information (CSI) uncertainty and multiple resource constraints of the UAVs and the HAP. To solve this non-convex optimization problem, two methods, multi-agent deep deterministic policy gradient (MADDPG) and federated reinforcement learning (FRL), are proposed to design the UAV trajectories and obtain the channel, power, and CPU allocations. It is shown that task scheduling significantly reduces the average AoI, and that this improvement is more pronounced for larger task sizes. Power allocation, in contrast, has only a marginal effect on the average AoI compared to using full transmission power for all users. Finally, simulation results show that our scheduling scheme achieves a lower average AoI than traditional transmission (the fixed method).
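
The objective above is the average AoI. A minimal sketch of the standard time-average AoI metric for one user follows: at time t the age is t − u(t), where u(t) is the generation time of the freshest update delivered by t, so the age grows with slope 1 and drops at each delivery, and the average is the area under this sawtooth divided by the horizon. The function name, the event format, and the initial condition (an update generated at time 0) are assumptions; the paper's elastic-task and offloading model is not reproduced.

```python
def average_aoi(deliveries, horizon, initial_gen=0.0):
    """Time-average age of information over [0, horizon].

    deliveries: iterable of (generation_time, delivery_time) pairs.
    At t = 0 the receiver holds an update generated at initial_gen (<= 0).
    Age at time t is t - u(t), with u(t) the generation time of the freshest
    update delivered by t; stale (out-of-order) updates do not reduce the age.
    """
    area = 0.0
    t_prev, latest_gen = 0.0, initial_gen
    for gen, dlv in sorted(deliveries, key=lambda p: p[1]):
        if dlv > horizon:
            break
        # Between events the age is t - latest_gen; integrate it exactly.
        area += ((dlv - latest_gen) ** 2 - (t_prev - latest_gen) ** 2) / 2
        t_prev, latest_gen = dlv, max(latest_gen, gen)
    area += ((horizon - latest_gen) ** 2 - (t_prev - latest_gen) ** 2) / 2
    return area / horizon
```

For example, updates generated and delivered instantly once per time unit give a sawtooth between 0 and 1, so `average_aoi([(1.0, 1.0), (2.0, 2.0)], 2.0)` evaluates to 0.5; queuing or computing delay raises this value, which is what scheduling tries to counter.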
