Ramoni Adeogun

XR Capacity Enhancement through Multi-Connected XR Tethering Groups

Dec 13, 2025

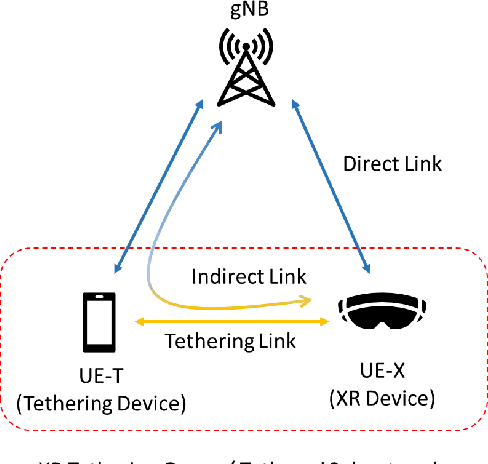

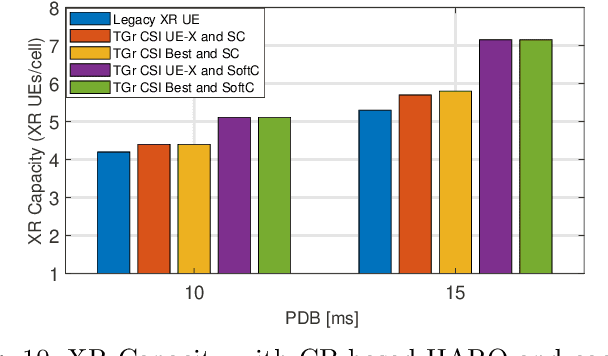

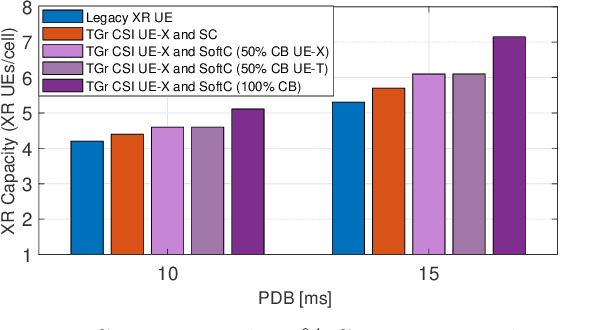

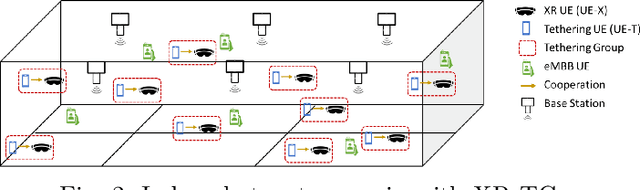

Abstract: Extended Reality (XR) applications have limited capacity in 5th generation-advanced (5G-A) cellular networks due to high throughput requirements coupled with strict latency and high reliability constraints. To enhance XR capacity in the downlink (DL), this paper investigates multi-connected XR tethering groups (TGrs), each comprising an XR device and a cooperating 5G-A device. This paper investigates two types of cooperation within an XR TGr, i.e., selection combining (SC) and soft combining, and their impact on the XR capacity of the network. These investigations consider a joint hybrid automatic repeat request (HARQ) feedback processing algorithm, and the paper also proposes an enhanced joint Outer Loop Link Adaptation (OLLA) algorithm to leverage the benefits of multi-connectivity. These enhancements aim to improve the spectral efficiency of the network by limiting HARQ retransmissions and enabling the use of higher modulation and coding scheme (MCS) indices for a given signal-to-interference-plus-noise ratio (SINR), all while maintaining or staying below the target block error rate (BLER). Dynamic system-level simulations demonstrate that XR TGrs with soft combining achieve XR capacity improvements of 23-42% with only XR users and 38-173% in coexistence scenarios consisting of XR users and enhanced mobile broadband (eMBB) users. Furthermore, the enhanced joint OLLA algorithm enables similar performance gains even when only one device per XR TGr provides channel state information (CSI) reports, compared to scenarios where both devices report CSI. Notably, XR TGrs with soft combining also enhance eMBB throughput in coexistence scenarios.
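The two combining modes and the OLLA loop can be illustrated with a minimal Python sketch. It assumes textbook OLLA (an SINR offset lowered by a fixed step on a NACK and raised by a proportionally smaller step on an ACK, so that the long-run BLER settles at the target), models soft combining as adding the linear SINRs of the two TGr devices (an idealized chase-combining approximation), and treats the TGr as ACKed whenever the combined decoding succeeds. The names `combined_sinr` and `OllaState` and the step values are hypothetical; this is not the paper's enhanced joint OLLA algorithm.

```python
import math
from dataclasses import dataclass


def db_to_lin(db: float) -> float:
    return 10.0 ** (db / 10.0)


def lin_to_db(lin: float) -> float:
    return 10.0 * math.log10(lin)


def combined_sinr(sinr_xr_db: float, sinr_helper_db: float, mode: str) -> float:
    """Effective DL SINR (dB) of a two-device TGr under an idealized combining model."""
    if mode == "selection":
        # Selection combining: keep the better of the two receptions.
        return max(sinr_xr_db, sinr_helper_db)
    if mode == "soft":
        # Soft (chase-style) combining approximated as summing linear SINRs.
        return lin_to_db(db_to_lin(sinr_xr_db) + db_to_lin(sinr_helper_db))
    raise ValueError(f"unknown mode: {mode}")


@dataclass
class OllaState:
    offset_db: float = 0.0
    step_down_db: float = 0.5  # applied on NACK (hypothetical value)
    bler_target: float = 0.1

    @property
    def step_up_db(self) -> float:
        # Balances ACK/NACK steps so the equilibrium BLER equals the target.
        return self.step_down_db * self.bler_target / (1.0 - self.bler_target)

    def update(self, joint_ack: bool) -> None:
        # joint_ack: True if the TGr decoded the transport block after combining.
        if joint_ack:
            self.offset_db += self.step_up_db
        else:
            self.offset_db -= self.step_down_db

    def mcs_sinr_db(self, reported_sinr_db: float) -> float:
        # SINR used for MCS selection = reported CSI SINR + OLLA offset.
        return reported_sinr_db + self.offset_db
```

In this model the soft-combined SINR is never below the selection-combined one, so the link adapter can map it to a higher MCS index, while a single offset per TGr absorbs the jointly processed HARQ feedback.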

Learning Power Control Protocol for In-Factory 6G Subnetworks

May 09, 2025

Abstract: In-X Subnetworks are envisioned to meet the stringent demands of short-range communication in diverse 6G use cases. In the context of In-Factory scenarios, effective power control is critical to mitigating the impact of interference resulting from potentially high subnetwork density. Existing approaches to power control in this domain have predominantly emphasized the data plane, often overlooking the impact of signaling overhead. Furthermore, prior work has typically adopted a network-centric perspective, relying on the assumption of complete and up-to-date channel state information (CSI) being readily available at the central controller. This paper introduces a novel multi-agent reinforcement learning (MARL) framework designed to enable access points to autonomously learn both signaling and power control protocols in an In-Factory Subnetwork environment. By formulating the problem as a partially observable Markov decision process (POMDP) and leveraging multi-agent proximal policy optimization (MAPPO), the proposed approach achieves significant advantages. The simulation results demonstrate that the learning-based method reduces signaling overhead by a factor of 8 while maintaining a buffer flush rate that lags the ideal "Genie" approach by only 5%.
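MAPPO trains each agent's policy with the PPO clipped surrogate objective, typically alongside a centrally trained value function. A minimal NumPy sketch of that per-agent objective follows; the function name, the default clip value of 0.2, and the array layout are illustrative assumptions, not details taken from the paper.

```python
import numpy as np


def ppo_clipped_objective(logp_new, logp_old, advantages, clip_eps=0.2):
    """Mean PPO clipped surrogate objective (to be maximized).

    logp_new, logp_old: log-probabilities of the taken actions under the
    current and the behaviour policy; advantages: advantage estimates.
    """
    logp_new = np.asarray(logp_new, dtype=float)
    logp_old = np.asarray(logp_old, dtype=float)
    advantages = np.asarray(advantages, dtype=float)
    ratio = np.exp(logp_new - logp_old)  # pi_new(a|o) / pi_old(a|o)
    unclipped = ratio * advantages
    clipped = np.clip(ratio, 1.0 - clip_eps, 1.0 + clip_eps) * advantages
    # Element-wise minimum gives a pessimistic bound that discourages large policy steps.
    return float(np.mean(np.minimum(unclipped, clipped)))
```

In a multi-agent setting, each access point would evaluate this objective on its own partial observations and actions (for example, a power level and a signaling decision), while the advantages would come from the shared critic.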

Multi-User Beamforming with Deep Reinforcement Learning in Sensing-Aided Communication

May 09, 2025

Abstract: Mobile users are prone to beam failure caused by beam drifting in millimeter wave (mmWave) communications. Sensing can help alleviate beam drifting through timely beam changes with low overhead, since it does not need user feedback. This work studies the problem of optimizing sensing-aided communication by dynamically managing the beams allocated to mobile users. A multi-beam scheme is introduced, which allocates multiple beams to users that need an update of their angle of departure (AoD) estimates and a single beam to users whose AoD estimates already meet the required precision. A deep reinforcement learning (DRL) assisted method is developed to optimize the beam allocation policy, relying only on the sensing echoes. For comparison, a heuristic AoD-based method that uses an approximated Cramér-Rao lower bound (CRLB) for allocation is also presented. Neither method requires user feedback or prior information on the state evolution. Results show that the DRL-assisted method achieves considerably higher throughput than both the conventional beam sweeping method and the AoD-based method, and that it is robust to different user speeds.
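The heuristic AoD-based allocation can be sketched as follows. The sketch assumes a half-wavelength uniform linear array with N elements and a single snapshot, for which the standard CRLB on the spatial frequency ω = π sin θ of a complex exponential in white Gaussian noise is 6 / (SNR · N(N² − 1)); dividing by (π cos θ)² converts it into a bound on θ. The precision threshold, the number of beams given to imprecise users, and the function names are hypothetical, and the paper's approximated CRLB may take a different form.

```python
import math


def aod_crlb(theta_rad: float, snr_lin: float, n_antennas: int) -> float:
    """Approximate CRLB (rad^2) on the AoD for a half-wavelength ULA, single snapshot."""
    n = n_antennas
    crlb_omega = 6.0 / (snr_lin * n * (n * n - 1))  # bound on the spatial frequency
    return crlb_omega / (math.pi * math.cos(theta_rad)) ** 2


def allocate_beams(users, n_antennas, std_threshold_rad, multi_beams=3):
    """Give `multi_beams` beams to users whose AoD precision is insufficient.

    users: list of (estimated AoD in rad, echo SNR in linear scale).
    Returns the number of beams allocated to each user.
    """
    allocation = []
    for theta, snr in users:
        std = math.sqrt(aod_crlb(theta, snr, n_antennas))
        allocation.append(multi_beams if std > std_threshold_rad else 1)
    return allocation
```

A user with a strong echo meets the precision threshold and keeps a single beam, whereas a weak echo triggers a multi-beam allocation to refresh its AoD estimate.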

Power Efficient Cooperative Communication within IIoT Subnetworks: Relay or RIS?

Nov 19, 2024

Abstract: The forthcoming sixth-generation (6G) industrial Internet-of-Things (IIoT) subnetworks are expected to support ultra-fast control communication cycles for numerous IoT devices. However, meeting the stringent requirements for low latency and high reliability poses significant challenges, particularly due to signal fading and physical obstructions. In this paper, we propose novel time division multiple access (TDMA) and frequency division multiple access (FDMA) communication protocols for cooperative transmission in IIoT subnetworks. These protocols leverage secondary access points (sAPs) as Decode-and-Forward (DF) and Amplify-and-Forward (AF) relays, enabling shorter cycle times while minimizing overall transmit power. A classification mechanism determines whether the highest-gain link for each IoT device is a single-hop or two-hop connection, and selects the corresponding sAP. We then formulate the problem of minimizing transmit power for DF/AF relaying while adhering to the delay and maximum power constraints. In the FDMA case, an additional constraint is introduced for bandwidth allocation to IoT devices during the first and second phases of cooperative transmission. To tackle the nonconvex problem, we employ the sequential parametric convex approximation (SPCA) method. We extend our analysis to a system model with reconfigurable intelligent surfaces (RISs), enabling transmission through direct and RIS-assisted channels, and optimize a multi-RIS scenario for comparison. Simulation results show that our cooperative communication approach reduces the emitted power by up to 7.5 dB while maintaining an outage probability and a resource overflow rate below $10^{-6}$. While the RIS-based solution achieves greater power savings, the relay-based protocol outperforms RIS in terms of outage probability.
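The single-hop versus two-hop classification can be sketched with the textbook end-to-end SNR expressions for two-hop relaying: with DF the weaker hop limits the link, min(γ₁, γ₂), and with CSI-assisted AF the relay also amplifies its own noise, giving γ₁γ₂ / (γ₁ + γ₂ + 1). Link qualities are assumed to be linear SNRs at a common reference power, and the function names are hypothetical; the paper's SPCA-based power minimization is not reproduced here.

```python
def df_snr(snr_first_hop: float, snr_second_hop: float) -> float:
    # Decode-and-forward: the relay must decode, so the weaker hop dominates.
    return min(snr_first_hop, snr_second_hop)


def af_snr(snr_first_hop: float, snr_second_hop: float) -> float:
    # CSI-assisted amplify-and-forward: relay noise is amplified along with the signal.
    return snr_first_hop * snr_second_hop / (snr_first_hop + snr_second_hop + 1.0)


def classify_link(direct_snr, relay_snrs, mode="DF"):
    """Return ("single-hop", None) or ("two-hop", index of the best sAP).

    direct_snr: AP -> device SNR.
    relay_snrs: list of (AP -> sAP SNR, sAP -> device SNR) pairs.
    """
    combine = df_snr if mode == "DF" else af_snr
    best_idx, best_snr = None, direct_snr
    for idx, (s1, s2) in enumerate(relay_snrs):
        snr = combine(s1, s2)
        if snr > best_snr:
            best_idx, best_snr = idx, snr
    return ("single-hop", None) if best_idx is None else ("two-hop", best_idx)
```

The comparison ignores the rate penalty of two-phase transmission, which a fuller model would capture through the time (TDMA) or bandwidth (FDMA) split between the two phases.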

Phase Optimization and Relay Selection for Joint Relay and IRS-Assisted Communication

Aug 29, 2024

Abstract: The use of Intelligent Reflecting Surfaces (IRSs) is considered a potential enabling technology for enhancing the spectral and energy efficiency of beyond-5G communication systems. In this paper, joint relay and IRS-assisted communication is considered to investigate the gains of optimizing both the IRS phase angles and the selection of relays. A combination of successive refinement and reinforcement learning is proposed: a successive refinement algorithm is used for phase optimization, and reinforcement learning is used for relay selection. Experimental results indicate that the proposed approach offers improved achievable rate performance and scales better with the number of relays than the considered benchmark approaches.
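Successive refinement for IRS phases is commonly implemented as a coordinate-wise search: each element's phase is set in turn to the discrete value that maximizes the received power while the other elements stay fixed, and the sweep repeats until no element changes. A NumPy sketch for a single-antenna link follows; the 2-bit phase codebook, the channel representation, and the function name are assumptions, and the reinforcement-learning relay selection is not shown.

```python
import numpy as np


def successive_refinement(h_direct, h_cascade, n_bits=2, max_sweeps=20):
    """Coordinate-wise discrete phase optimization for one IRS.

    h_direct: complex direct channel; h_cascade: complex cascaded channels
    (source -> element -> destination), one per IRS element.
    Returns the phase vector and the resulting received power gain.
    """
    h_cascade = np.asarray(h_cascade, dtype=complex)
    codebook = 2 * np.pi * np.arange(2 ** n_bits) / 2 ** n_bits
    phases = np.zeros(h_cascade.size)  # start from an all-zero configuration
    for _ in range(max_sweeps):
        changed = False
        for n in range(h_cascade.size):
            total = h_direct + np.sum(h_cascade * np.exp(1j * phases))
            rest = total - h_cascade[n] * np.exp(1j * phases[n])  # all but element n
            gains = np.abs(rest + h_cascade[n] * np.exp(1j * codebook)) ** 2
            best = codebook[np.argmax(gains)]
            if best != phases[n]:
                phases[n], changed = best, True
        if not changed:
            break
    gain = np.abs(h_direct + np.sum(h_cascade * np.exp(1j * phases))) ** 2
    return phases, float(gain)
```

Each update can only keep or raise the received power, so the result is never worse than the starting configuration and never better than the continuous co-phasing bound (|h_d| + Σ|h_n|)².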

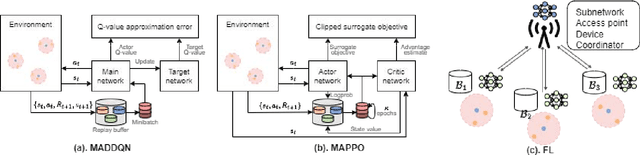

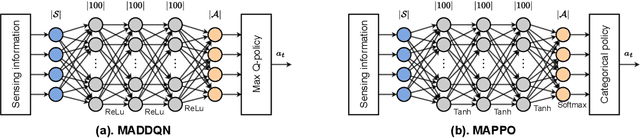

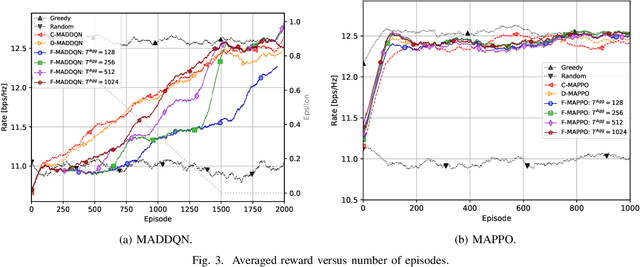

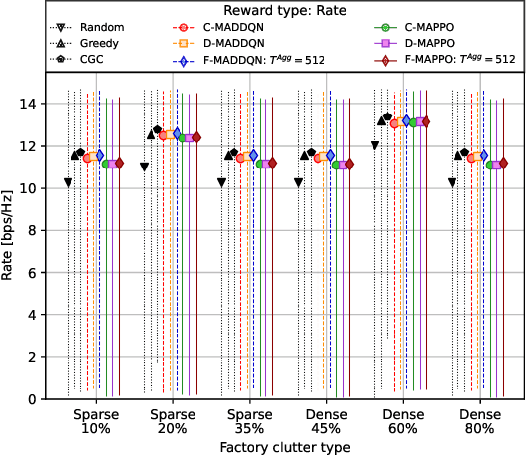

Federated Multi-Agent DRL for Radio Resource Management in Industrial 6G in-X subnetworks

Jun 11, 2024

Abstract: Recently, 6G in-X subnetworks have been proposed as low-power short-range radio cells to support localized extreme wireless connectivity inside entities such as industrial robots, vehicles, and the human body. Deployment of in-X subnetworks within these entities may result in rapid changes in interference levels and, thus, varying link quality. This paper investigates distributed dynamic channel allocation to mitigate inter-subnetwork interference in dense in-factory deployments of 6G in-X subnetworks. This paper introduces two new techniques, Federated Multi-Agent Double Deep Q-Network (F-MADDQN) and Federated Multi-Agent Deep Proximal Policy Optimization (F-MADPPO), for channel allocation in 6G in-X subnetworks. These techniques are based on a client-to-server horizontal federated reinforcement learning framework. The methods require sharing only local model weights with a centralized gNB for federated aggregation, thereby preserving local data privacy and security. Simulations were conducted using a practical indoor factory environment proposed by 5G-ACIA and 3GPP models for in-factory environments. The results showed that the proposed methods achieved slightly better performance than the baseline schemes with significantly reduced signaling overhead. The schemes also showed better robustness and generalization to changes in deployment density and propagation parameters.
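The server-side step of such a client-to-server federated scheme can be sketched as federated averaging (FedAvg): the gNB forms a sample-weighted mean of the local model parameters and broadcasts it back to the subnetworks. The paper's exact aggregation rule is not given in this abstract, so FedAvg and the weighting by local sample counts are assumptions; parameters are represented as lists of NumPy arrays, one per layer.

```python
import numpy as np


def federated_average(client_weights, client_samples):
    """Sample-weighted average of per-layer parameter arrays.

    client_weights: list (clients) of lists (layers) of np.ndarray.
    client_samples: number of local training samples per client.
    """
    total = float(sum(client_samples))
    n_layers = len(client_weights[0])
    averaged = []
    for layer in range(n_layers):
        acc = np.zeros_like(client_weights[0][layer], dtype=float)
        for weights, n in zip(client_weights, client_samples):
            acc += (n / total) * weights[layer]
        averaged.append(acc)
    return averaged
```

Only these arrays are exchanged with the gNB; each agent's local observations and training data stay on its subnetwork.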

Unsupervised Graph-based Learning Method for Sub-band Allocation in 6G Subnetworks

Dec 13, 2023

Abstract: In this paper, we present an unsupervised approach for frequency sub-band allocation in wireless networks using graph-based learning. We consider a dense deployment of subnetworks in a factory environment with a limited number of sub-bands, which must be optimally allocated to coordinate inter-subnetwork interference. We model the subnetwork deployment as a conflict graph and propose an unsupervised learning approach, inspired by the graph colouring heuristic and the Potts model, that optimizes the sub-band allocation using graph neural networks. The numerical evaluation shows that the proposed method achieves performance close to that of the centralized greedy colouring sub-band allocation heuristic with lower computational time complexity. In addition, it incurs less signalling overhead than iterative optimization heuristics that require information on all mutually interfering channels. We further demonstrate that the method is robust to different network settings.
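A Potts-model-inspired unsupervised loss for sub-band allocation can be written as the expected interference-weighted number of conflicts, L(P) = ½ Σᵢ Σⱼ wᵢⱼ pᵢᵀpⱼ, where pᵢ is subnetwork i's softmax distribution over the K sub-bands and wᵢⱼ is the conflict-graph edge weight. The NumPy sketch below minimizes this loss by gradient descent on free per-node logits rather than on GNN outputs, which is a deliberate simplification; the learning rate, step count, and function names are assumptions.

```python
import numpy as np


def softmax(z):
    e = np.exp(z - z.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)


def potts_loss(P, W):
    # W: symmetric conflict-graph weights with zero diagonal; P: N x K probabilities.
    return 0.5 * float(np.sum(W * (P @ P.T)))


def allocate_subbands(W, n_bands, lr=0.1, steps=500, seed=0):
    """Relaxed (soft) sub-band assignment; returns hard bands and the loss history."""
    rng = np.random.default_rng(seed)
    logits = rng.normal(scale=0.1, size=(W.shape[0], n_bands))
    history = []
    for _ in range(steps):
        P = softmax(logits)
        history.append(potts_loss(P, W))
        grad_P = W @ P  # dL/dP for symmetric W
        # Chain rule through the row-wise softmax.
        grad_logits = P * (grad_P - np.sum(P * grad_P, axis=1, keepdims=True))
        logits -= lr * grad_logits
    return np.argmax(softmax(logits), axis=1), history
```

The loss is zero exactly when no two conflicting subnetworks share a sub-band with non-zero probability, which is the relaxed counterpart of a proper graph colouring.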

Proximal Policy Optimization for Integrated Sensing and Communication in mmWave Systems

Jun 27, 2023

Abstract: In wireless communication systems, mmWave beam tracking is a critical task that affects both sensing and communications, as it is related to knowledge of the wireless channel. We consider a setup in which a Base Station (BS) needs to dynamically choose which of three operations a resource is allocated to: sensing (beam tracking), downlink transmission, or uplink transmission. We devise an approach based on the Proximal Policy Optimization (PPO) algorithm for choosing the resource allocation and beam tracking at each time slot. The proposed framework takes into account the variable quality of the wireless channel and optimizes the decisions in a coordinated manner. Simulation results demonstrate that the proposed method achieves significant improvements in average packet error rate (PER) compared to the baseline methods while substantially reducing beam tracking overhead. We also show that our proposed PPO-based framework provides an effective solution to the resource allocation problem in beam tracking and communication, exhibiting good generalization regardless of the stochastic behavior of the system.
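PPO needs an advantage estimate for the action taken in each time slot (sensing, downlink, or uplink). A common choice, assumed here rather than taken from the paper, is generalized advantage estimation (GAE); the discount γ, the smoothing factor λ, and the reward definition (for example, a penalty per packet error) are illustrative, and the function name is hypothetical.

```python
def gae_advantages(rewards, values, gamma=0.99, lam=0.95):
    """Generalized advantage estimation over one trajectory.

    rewards: r_0..r_{T-1}; values: V(s_0)..V(s_T) (one extra bootstrap value).
    Returns A_0..A_{T-1}. Terminal-state masking is omitted for brevity.
    """
    if len(values) != len(rewards) + 1:
        raise ValueError("values must contain one bootstrap entry more than rewards")
    advantages = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        delta = rewards[t] + gamma * values[t + 1] - values[t]  # TD residual
        running = delta + gamma * lam * running
        advantages[t] = running
    return advantages
```

With λ = 1 this reduces to discounted returns minus the value baseline, and with λ = 0 to one-step TD residuals; intermediate values trade bias against variance.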

Unsupervised Deep Unfolded PGD for Transmit Power Allocation in Wireless Systems

Jun 20, 2023

Abstract: Transmit power control (TPC) is a key mechanism for managing interference, energy utilization, and connectivity in wireless systems. In this paper, we propose a simple, low-complexity TPC algorithm based on deep unfolding of the iterative projected gradient descent (PGD) algorithm into the layers of a deep neural network (DNN) with learned step-size parameters. An unsupervised learning method, with either online learning or offline pretraining, is applied to optimize the weights of the DNN. Performance evaluation in dense device-to-device (D2D) communication scenarios showed that the proposed method can outperform the iterative algorithm while requiring less than half as many iterations.
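Deep unfolding turns each PGD iteration into a layer p ← Π₍₀,Pmax₎(p + μₖ ∇R(p)) with its own step size μₖ, so a network with K layers runs exactly K projected gradient steps. The NumPy sketch below shows only the forward pass, assuming a sum-rate objective R(p) = Σᵢ log₂(1 + SINRᵢ) and box constraints 0 ≤ pᵢ ≤ Pmax; the step sizes are fixed placeholders where the paper learns them, and the gain-matrix convention and function names are assumptions.

```python
import numpy as np


def sum_rate_grad(p, G, noise):
    """Gradient of sum_i log2(1 + SINR_i) with respect to transmit powers p.

    G[i, k]: channel gain from transmitter k to receiver i; noise > 0.
    """
    total = noise + G @ p                 # signal + interference + noise at each receiver
    interf = total - np.diag(G) * p       # interference + noise only
    G_off = G - np.diag(np.diag(G))       # cross-link gains only
    return (G.T @ (1.0 / total) - G_off.T @ (1.0 / interf)) / np.log(2.0)


def unfolded_pgd(p0, G, noise, p_max, step_sizes):
    """Forward pass of an unfolded PGD network: one layer per step size."""
    p = np.asarray(p0, dtype=float)
    for mu in step_sizes:  # in the paper these per-layer steps are learned
        p = np.clip(p + mu * sum_rate_grad(p, G, noise), 0.0, p_max)
    return p
```

Training would treat `step_sizes` as parameters and maximize the sum rate of the final layer's output over sampled channel realizations, which needs no labelled optimal power vectors.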

On the required radio resources for ultra-reliable communication in highly interfered scenarios

Jun 10, 2023

Abstract: Future wireless systems are expected to support mission-critical services demanding ever-higher reliability. In this letter, we dimension the radio resources needed to achieve a given failure probability target for ultra-reliable wireless systems under high interference, assuming a protocol with frequency hopping combined with packet repetitions. We resort to packet erasure channel models and derive the minimum number of resource units for receivers with and without collision resolution capability, as well as the number of packet repetitions needed to achieve the failure probability target. The analytical results are validated numerically and can be used as a benchmark for realistic system simulations.
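A back-of-the-envelope version of this dimensioning can be sketched under simplifying assumptions that are ours rather than the letter's: each of M interferers hops uniformly at random over N resource units, the receiver has no collision resolution (any collision erases a copy), and the K repetitions are erased independently. A copy is then erased with probability q = 1 − (1 − 1/N)^M and the packet fails with probability q^K, so the smallest K satisfying q^K ≤ target meets the goal. The function names are hypothetical.

```python
def erasure_prob(n_units: int, n_interferers: int) -> float:
    # Probability that at least one interferer hops onto the same resource unit.
    return 1.0 - (1.0 - 1.0 / n_units) ** n_interferers


def min_repetitions(q: float, target: float, k_max: int = 1000) -> int:
    """Smallest K with q**K <= target, assuming independent erasures."""
    if q <= 0.0:
        return 1
    for k in range(1, k_max + 1):
        if q ** k <= target:
            return k
    raise ValueError("target not reachable within k_max repetitions")


def min_resource_units(n_interferers, repetitions, target, n_max=10**6):
    """Smallest N meeting the failure target for a fixed number of repetitions."""
    for n in range(1, n_max + 1):
        if erasure_prob(n, n_interferers) ** repetitions <= target:
            return n
    raise ValueError("target not reachable within n_max resource units")
```

The two searches expose the trade-off the letter dimensions: more repetitions lower the failure probability per resource unit but also add interference, which a more complete model would feed back into M.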
