Federated Multi-Agent DRL for Radio Resource Management in Industrial 6G in-X subnetworks

Jun 11, 2024

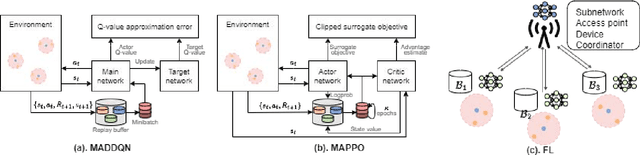

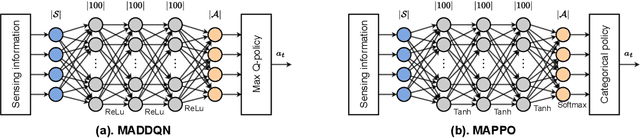

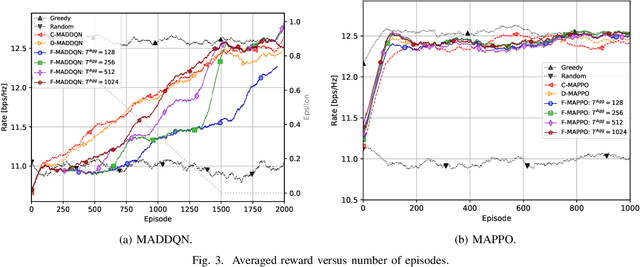

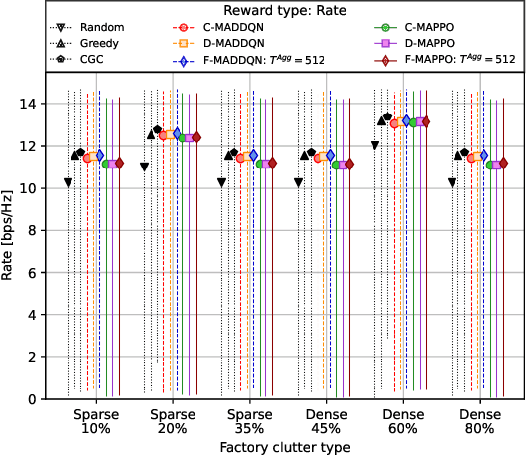

6G in-X subnetworks have recently been proposed as low-power, short-range radio cells that support localized extreme wireless connectivity inside entities such as industrial robots, vehicles, and the human body. Because these entities may move, deploying in-X subnetworks within them can cause rapid changes in interference levels and thus varying link quality. This paper investigates distributed dynamic channel allocation to mitigate inter-subnetwork interference in dense in-factory deployments of 6G in-X subnetworks. It introduces two new channel allocation techniques, Federated Multi-Agent Double Deep Q-Network (F-MADDQN) and Federated Multi-Agent Deep Proximal Policy Optimization (F-MADPPO), both based on a client-to-server horizontal federated reinforcement learning framework. The methods require sharing only local model weights with a centralized gNB for federated aggregation, thereby preserving local data privacy and security. Simulations were conducted using a practical indoor factory environment proposed by 5G-ACIA and 3GPP models for in-factory environments. The results show that the proposed methods achieve slightly better performance than the baseline schemes while significantly reducing signaling overhead. The proposed schemes are also more robust and generalize better to changes in deployment densities and propagation parameters.
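The federated aggregation step described above can be illustrated with a minimal sketch. The abstract does not state which aggregation rule the gNB applies, so the example assumes plain unweighted averaging of the agents' weights (as in standard federated averaging). The function name `federated_average`, the layer names, and the toy weight values are hypothetical and are not taken from the paper.

```python
from typing import Dict, List

# A model is represented here as a mapping from layer name to a flat list
# of weights. This representation is an assumption made for illustration.
Weights = Dict[str, List[float]]


def federated_average(local_weights: List[Weights]) -> Weights:
    """Return the element-wise mean of the agents' weights.

    Assumes unweighted averaging and that every agent shares the same
    model architecture (same layer names and layer sizes).
    """
    if not local_weights:
        raise ValueError("need at least one local model")
    n = len(local_weights)
    global_weights: Weights = {}
    for name in local_weights[0]:
        # Collect this layer from every agent, then average position by position.
        layers = [w[name] for w in local_weights]
        global_weights[name] = [sum(vals) / n for vals in zip(*layers)]
    return global_weights


# Three hypothetical subnetwork agents upload only their model weights;
# no local observations or rewards leave the subnetwork.
agents = [
    {"layer0": [1.0, 2.0], "layer1": [0.0]},
    {"layer0": [3.0, 4.0], "layer1": [3.0]},
    {"layer0": [5.0, 6.0], "layer1": [6.0]},
]
global_model = federated_average(agents)
print(global_model)
```

In a full training loop under this assumption, the gNB would send `global_model` back to every subnetwork, and each agent would continue local training from it before the next aggregation round.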
