Hamid Saeedi

AI-enabled Priority and Auction-Based Spectrum Management for 6G

Jan 12, 2024

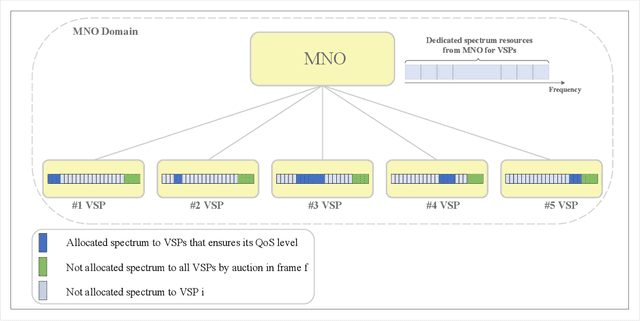

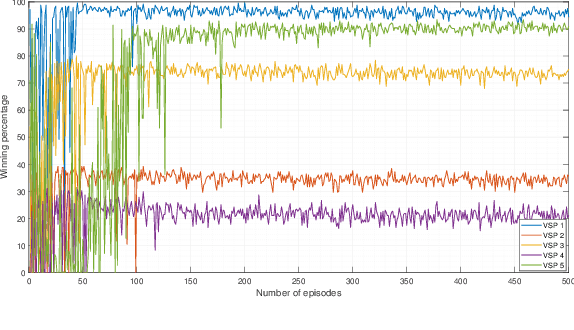

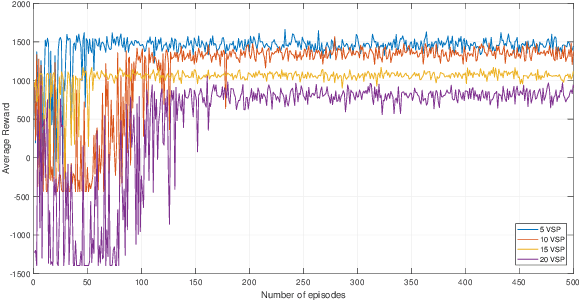

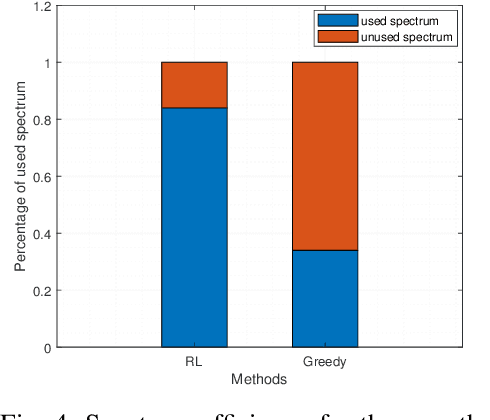

Abstract: In this paper, we present a quality of service (QoS)-aware, priority-based spectrum management scheme that guarantees the minimum required bit rate of vertical sector players (VSPs) in 5G and beyond, including the sixth generation (6G). VSPs are considered as spectrum leasers, and the overall spectrum efficiency of the network is optimized from the perspective of the mobile network operator (MNO) as the spectrum licensee and auctioneer. We exploit a modified Vickrey-Clarke-Groves (VCG) auction mechanism to allocate the spectrum to the VSPs, where QoS and the truthfulness of bidders are considered as two important parameters for prioritizing VSPs. The simulation uses deep deterministic policy gradient (DDPG), a deep reinforcement learning (DRL)-based algorithm. Simulation results demonstrate that deploying the DDPG algorithm yields significant advantages. In particular, the efficiency of the proposed spectrum management scheme is about 85%, compared to 35% for traditional auction methods.
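For readers unfamiliar with VCG pricing, the following is a minimal sketch of the standard (unmodified) mechanism, not the modified, priority-aware variant the abstract describes. It assumes identical spectrum blocks and bidders that each want exactly one block; the function names and the bidder labels are hypothetical and chosen only for illustration.

```python
def welfare(bids, num_blocks):
    """Total reported value when the highest bidders each receive one block."""
    return sum(sorted(bids.values(), reverse=True)[:num_blocks])


def vcg_payments(bids, num_blocks):
    """Plain VCG for identical blocks with unit demand (illustrative assumption).

    bids maps a bidder name to its reported value for one block.
    Returns a dict mapping each winner to its payment.
    """
    ranked = sorted(bids, key=bids.get, reverse=True)
    winners = ranked[:num_blocks]
    total = welfare(bids, num_blocks)
    payments = {}
    for w in winners:
        others = {b: v for b, v in bids.items() if b != w}
        # Externality: what the others could have obtained without w,
        # minus what the others actually obtain with w present.
        payments[w] = welfare(others, num_blocks) - (total - bids[w])
    return payments


# Hypothetical bids from four VSPs competing for two blocks.
example = vcg_payments({"vsp_a": 10, "vsp_b": 7, "vsp_c": 4, "vsp_d": 2}, 2)
```

In this setting each winner pays the highest losing bid, which is why reporting the true value is a dominant strategy under plain VCG.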

AI-based Radio and Computing Resource Allocation and Path Planning in NOMA NTNs: AoI Minimization under CSI Uncertainty

May 01, 2023

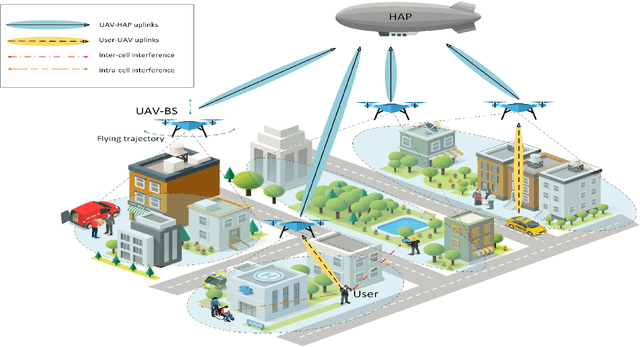

Abstract: In this paper, we develop a hierarchical aerial computing framework composed of a high altitude platform (HAP) and unmanned aerial vehicles (UAVs) to compute the fully offloaded tasks of terrestrial mobile users, which are connected through uplink non-orthogonal multiple access (UL-NOMA). In particular, the problem is formulated to minimize the age of information (AoI) of all users with elastic tasks by adjusting the UAV trajectories and the resource allocation on both the UAVs and the HAP, subject to channel state information (CSI) uncertainty and multiple resource constraints of the UAVs and the HAP. To solve this non-convex optimization problem, two methods, multi-agent deep deterministic policy gradient (MADDPG) and federated reinforcement learning (FRL), are proposed to design the UAV trajectories and obtain the channel, power, and CPU allocations. It is shown that task scheduling significantly reduces the average AoI, and that this improvement is more pronounced for larger task sizes. Power allocation, by contrast, has only a marginal effect on the average AoI compared to using full transmission power for all users. Finally, simulation results show that our scheduling scheme achieves a lower average AoI than traditional transmission (the fixed method).
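As a rough illustration of the metric being minimized, the sketch below computes a discrete-time age of information trace: at each slot the age is the time elapsed since the generation of the freshest update received so far. The slotted time model, the function name `aoi_trace`, and the sample delivery schedule are assumptions made for this example and are not taken from the paper.

```python
def aoi_trace(deliveries, horizon, initial_age=0):
    """Per-slot age of information at the receiver (slotted-time assumption).

    deliveries maps a delivery slot to the generation slot of the update
    that arrives in that slot. Before any delivery the age simply grows
    from initial_age.
    """
    latest_gen = None  # generation slot of the freshest update received
    trace = []
    for t in range(horizon):
        gen = deliveries.get(t)
        if gen is not None and (latest_gen is None or gen > latest_gen):
            latest_gen = gen
        if latest_gen is None:
            trace.append(initial_age + t)
        else:
            trace.append(t - latest_gen)
    return trace


# Hypothetical schedule: an update generated in slot 1 arrives in slot 2,
# and one generated in slot 3 arrives in slot 5.
trace = aoi_trace({2: 1, 5: 3}, horizon=7)
average_aoi = sum(trace) / len(trace)
```

A scheduler that delivers updates sooner after they are generated lowers each reset value and therefore the average of the trace.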

Two-Hop Age of Information Scheduling for Multi-UAV Assisted Mobile Edge Computing: FRL vs MADDPG

Jun 19, 2022

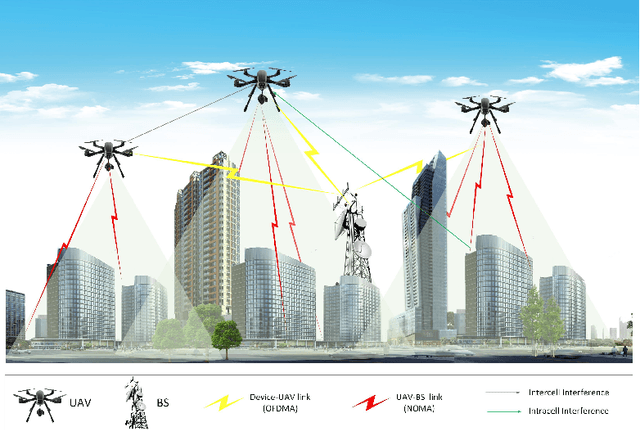

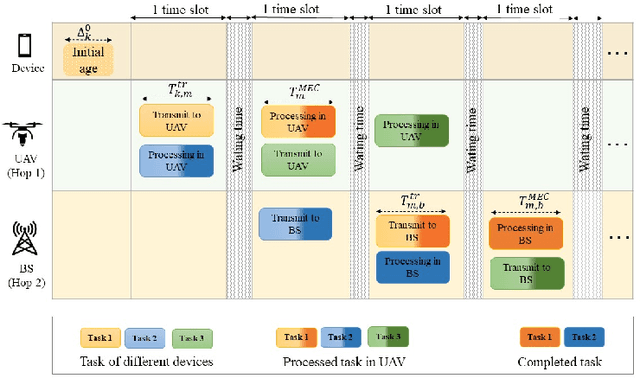

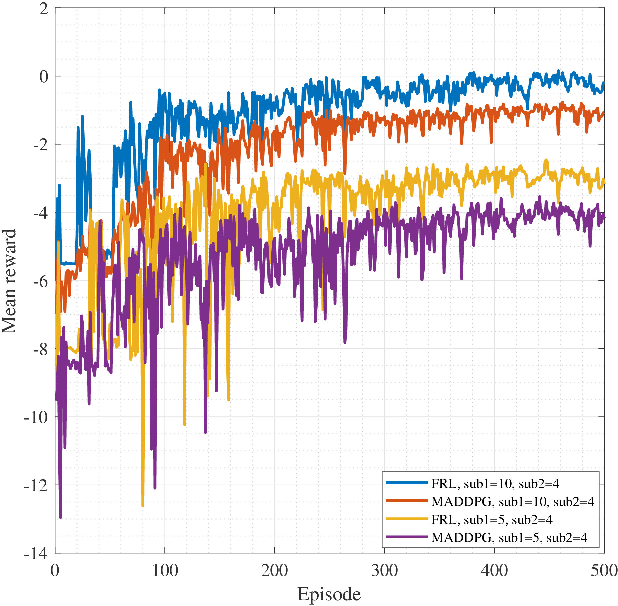

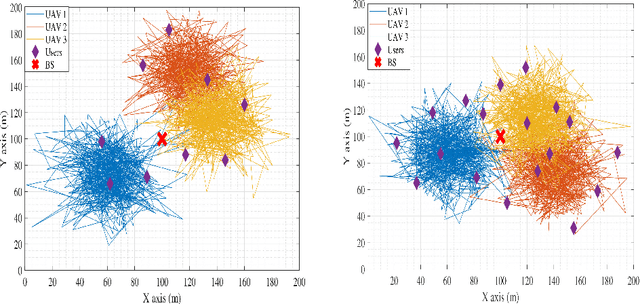

Abstract: In this work, we adopt the emerging technology of mobile edge computing (MEC) in unmanned aerial vehicle (UAV) communication-computing systems to optimize the age of information (AoI) in the network. We assume that tasks are processed jointly on the UAVs and the base station (BS) to enhance edge performance under limited connectivity and computing. Using the UAVs and the BS jointly with MEC can reduce AoI in the network. To maintain the freshness of the tasks, we formulate the AoI minimization in a two-hop communication framework, with the first hop at the UAVs and the second hop at the BS. To address this challenge, we solve the problem using a deep reinforcement learning (DRL) framework called federated reinforcement learning (FRL). Our network has two types of agents with different states and actions but the same policy. Our FRL enables us to handle the two-step AoI minimization and UAV trajectory problems. In addition, we compare our proposed algorithm, which has a centralized processing unit to update the weights, with the fully decentralized multi-agent deep deterministic policy gradient (MADDPG), which enhances the agent's performance. As a result, the suggested algorithm outperforms MADDPG by about 38%.
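The abstract does not state how the centralized unit combines the agents' weights. One common choice in federated learning is an unweighted element-wise average, sketched below purely as an assumption; the function name `federated_average` and the flat-list representation of policy weights are hypothetical.

```python
def federated_average(agent_weights):
    """Element-wise mean of the agents' weight vectors (assumed aggregation rule).

    agent_weights is a list of equal-length lists of floats, one per agent.
    """
    if not agent_weights:
        raise ValueError("at least one agent is required")
    n = len(agent_weights)
    # zip(*...) groups the i-th weight of every agent together.
    return [sum(ws) / n for ws in zip(*agent_weights)]


# Two hypothetical agents sharing one policy shape.
shared = federated_average([[1.0, 2.0], [3.0, 4.0]])
```

After aggregation, every agent would continue training from the shared weights, which is consistent with the abstract's statement that both agent types use the same policy.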
