Mikita Sazanovich

Optimizing Memory Mapping Using Deep Reinforcement Learning

May 11, 2023

Abstract:Resource scheduling and allocation is a critical component of many high impact systems ranging from congestion control to cloud computing. Finding more optimal solutions to these problems often has significant impact on resource and time savings, reducing device wear-and-tear, and even potentially improving carbon emissions. In this paper, we focus on a specific instance of a scheduling problem, namely the memory mapping problem that occurs during compilation of machine learning programs: That is, mapping tensors to different memory layers to optimize execution time. We introduce an approach for solving the memory mapping problem using Reinforcement Learning. RL is a solution paradigm well-suited for sequential decision making problems that are amenable to planning, and combinatorial search spaces with high-dimensional data inputs. We formulate the problem as a single-player game, which we call the mallocGame, such that high-reward trajectories of the game correspond to efficient memory mappings on the target hardware. We also introduce a Reinforcement Learning agent, mallocMuZero, and show that it is capable of playing this game to discover new and improved memory mapping solutions that lead to faster execution times on real ML workloads on ML accelerators. We compare the performance of mallocMuZero to the default solver used by the Accelerated Linear Algebra (XLA) compiler on a benchmark of realistic ML workloads. In addition, we show that mallocMuZero is capable of improving the execution time of the recently published AlphaTensor matrix multiplication model.

Solving Black-Box Optimization Challenge via Learning Search Space Partition for Local Bayesian Optimization

Dec 18, 2020

Abstract:This paper describes our approach to solving the black-box optimization challenge through learning search space partition for local Bayesian optimization. We develop an algorithm for low budget optimization. We further optimize the hyper-parameters of our algorithm using Bayesian optimization. Our approach has been ranked 3rd in the competition.

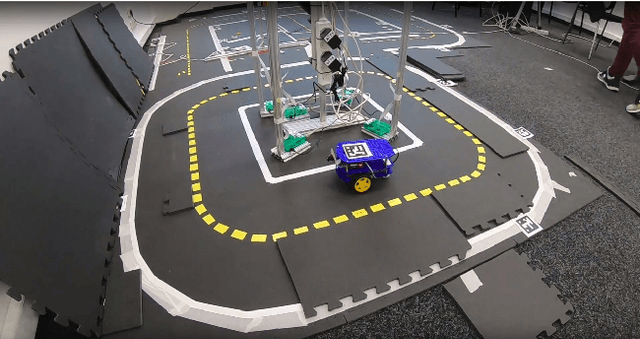

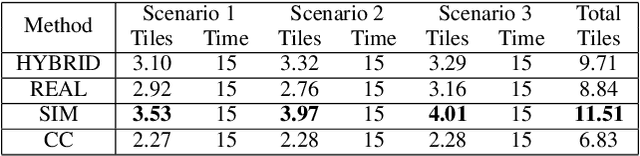

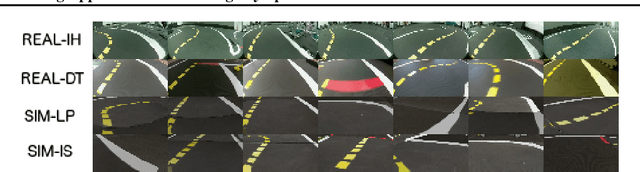

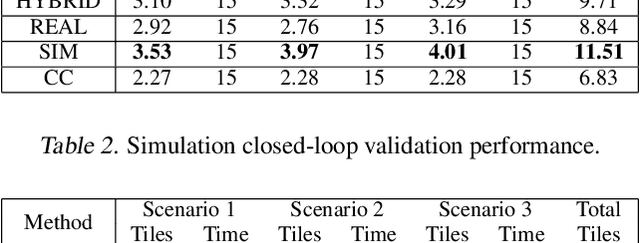

Imitation Learning Approach for AI Driving Olympics Trained on Real-world and Simulation Data Simultaneously

Jul 07, 2020

Abstract:In this paper, we describe our winning approach to solving the Lane Following Challenge at the AI Driving Olympics Competition through imitation learning on a mixed set of simulation and real-world data. AI Driving Olympics is a two-stage competition: at stage one, algorithms compete in a simulated environment with the best ones advancing to a real-world final. One of the main problems that participants encounter during the competition is that algorithms trained for the best performance in simulated environments do not hold up in a real-world environment and vice versa. Classic control algorithms also do not translate well between tasks since most of them have to be tuned to specific driving conditions such as lighting, road type, camera position, etc. To overcome this problem, we employed the imitation learning algorithm and trained it on a dataset collected from sources both from simulation and real-world, forcing our model to perform equally well in all environments.

LiDARsim: Realistic LiDAR Simulation by Leveraging the Real World

Jun 16, 2020

Abstract:We tackle the problem of producing realistic simulations of LiDAR point clouds, the sensor of preference for most self-driving vehicles. We argue that, by leveraging real data, we can simulate the complex world more realistically compared to employing virtual worlds built from CAD/procedural models. Towards this goal, we first build a large catalog of 3D static maps and 3D dynamic objects by driving around several cities with our self-driving fleet. We can then generate scenarios by selecting a scene from our catalog and "virtually" placing the self-driving vehicle (SDV) and a set of dynamic objects from the catalog in plausible locations in the scene. To produce realistic simulations, we develop a novel simulator that captures both the power of physics-based and learning-based simulation. We first utilize ray casting over the 3D scene and then use a deep neural network to produce deviations from the physics-based simulation, producing realistic LiDAR point clouds. We showcase LiDARsim's usefulness for perception algorithms-testing on long-tail events and end-to-end closed-loop evaluation on safety-critical scenarios.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge