Mengmeng Liu

4DSTR: Advancing Generative 4D Gaussians with Spatial-Temporal Rectification for High-Quality and Consistent 4D Generation

Nov 10, 2025

Abstract:Remarkable recent advances in 2D image and 3D shape generation have drawn significant attention to dynamic 4D content generation. However, previous 4D generation methods commonly struggle to maintain spatial-temporal consistency and adapt poorly to rapid temporal variations, due to the lack of effective spatial-temporal modeling. To address these problems, we propose a novel 4D generation network called 4DSTR, which modulates generative 4D Gaussian Splatting with spatial-temporal rectification. Specifically, temporal correlation across generated 4D sequences is used to rectify deformable scales and rotations and to guarantee temporal consistency. Furthermore, an adaptive spatial densification and pruning strategy is proposed to address significant temporal variations by dynamically adding or deleting Gaussian points with the awareness of their pre-frame movements. Extensive experiments demonstrate that our 4DSTR achieves state-of-the-art performance in video-to-4D generation, excelling in reconstruction quality, spatial-temporal consistency, and adaptation to rapid temporal movements.

TopoLiDM: Topology-Aware LiDAR Diffusion Models for Interpretable and Realistic LiDAR Point Cloud Generation

Jul 30, 2025

Abstract:LiDAR scene generation is critical for mitigating real-world LiDAR data collection costs and enhancing the robustness of downstream perception tasks in autonomous driving. However, existing methods commonly struggle to capture geometric realism and global topological consistency. Recent LiDAR Diffusion Models (LiDMs) predominantly embed LiDAR points into the latent space for improved generation efficiency, which limits their interpretability and their ability to model detailed geometric structures and preserve global topological consistency. To address these challenges, we propose TopoLiDM, a novel framework that integrates graph neural networks (GNNs) with diffusion models under topological regularization for high-fidelity LiDAR generation. Our approach first trains a topology-preserving VAE to extract latent graph representations through graph construction and multiple graph convolutional layers. We then freeze the VAE and generate novel latent topological graphs through latent diffusion models. We also introduce 0-dimensional persistent homology (PH) constraints, ensuring that the generated LiDAR scenes adhere to real-world global topological structures. Extensive experiments on the KITTI-360 dataset demonstrate TopoLiDM's superiority over state-of-the-art methods, achieving a 22.6% lower Fréchet Range Image Distance (FRID) and a 9.2% lower Minimum Matching Distance (MMD). Notably, our model also generates quickly, averaging 1.68 samples/s at inference, showcasing its scalability for real-world applications. We will release the related code at https://github.com/IRMVLab/TopoLiDM.
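As a rough illustration of what 0-dimensional persistent homology measures (this is not the TopoLiDM implementation): under a Vietoris-Rips filtration of a point cloud, every point is born as its own connected component at scale 0, and a component dies when it merges with another. The death scales coincide with the edge lengths of a minimum spanning tree, so a union-find pass over sorted pairwise distances recovers them. The function name `zero_dim_persistence` and the toy points are assumptions made for this sketch.

```python
import math
from itertools import combinations


def zero_dim_persistence(points):
    """Return the death scales of 0-dim features in merge order (births are all 0).

    Hypothetical helper for illustration; the one component that never
    dies (infinite persistence) is omitted from the result.
    """
    parent = list(range(len(points)))

    def find(i):
        # Union-find lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    # All pairwise edges, sorted by Euclidean length (Kruskal's order).
    edges = sorted(
        (math.dist(points[i], points[j]), i, j)
        for i, j in combinations(range(len(points)), 2)
    )

    deaths = []
    for length, i, j in edges:
        root_i, root_j = find(i), find(j)
        if root_i != root_j:
            # Two components merge at this scale, so one of them dies.
            parent[root_i] = root_j
            deaths.append(length)
    return deaths


# Toy example: three collinear points.
deaths = zero_dim_persistence([(0.0, 0.0), (1.0, 0.0), (3.0, 0.0)])
```

A topological regularizer could then compare such death scales between generated and real scenes; how the paper formulates that constraint is not stated in the abstract.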

Factual Serialization Enhancement: A Key Innovation for Chest X-ray Report Generation

May 15, 2024

Abstract:Automating the writing of imaging reports is a valuable way to alleviate the workload of radiologists. Crucial steps in this process involve the cross-modal alignment between medical images and reports, as well as the retrieval of similar historical cases. However, the presence of presentation-style vocabulary (e.g., sentence structure and grammar) in reports poses challenges for cross-modal alignment. Additionally, existing methods for retrieving similar historical cases perform suboptimally owing to the modal gap. In response, this paper introduces a novel method, named Factual Serialization Enhancement (FSE), for chest X-ray report generation. FSE begins with the structural entities approach to eliminate presentation-style vocabulary in reports, providing specific input for our model. Then, uni-modal features are learned through cross-modal alignment between images and factual serialization in reports. Subsequently, we present a novel approach to retrieve similar historical cases from the training set, leveraging aligned image features. These features implicitly preserve semantic similarity with their corresponding reference reports, enabling us to calculate similarity solely among aligned features. This effectively eliminates the modal gap for knowledge retrieval without requiring disease labels. Finally, a cross-modal fusion network is employed to query valuable information from these cases, enriching image features and aiding the text decoder in generating high-quality reports. Experiments on the MIMIC-CXR and IU X-ray datasets in both specific and general scenarios demonstrate the superiority of FSE over state-of-the-art approaches in both natural language generation and clinical efficacy metrics.

Tracing the Influence of Predecessors on Trajectory Prediction

Aug 10, 2023

Abstract:In real-world traffic scenarios, agents such as pedestrians and car drivers often observe neighboring agents who exhibit similar behavior as examples and then mimic their actions to some extent in their own behavior. This information can serve as prior knowledge for trajectory prediction, which is unfortunately largely overlooked in current trajectory prediction models. This paper introduces a novel Predecessor-and-Successor (PnS) method that incorporates a predecessor tracing module to model the influence of predecessors (identified from concurrent neighboring agents) on the successor (target agent) within the same scene. The method utilizes the moving patterns of these predecessors to guide the predictor in trajectory prediction. PnS effectively aligns the motion encodings of the successor with multiple potential predecessors in a probabilistic manner, facilitating the decoding process. We demonstrate the effectiveness of PnS by integrating it into a graph-based predictor for pedestrian trajectory prediction on the ETH/UCY datasets, resulting in a new state-of-the-art performance. Furthermore, we replace the HD map-based scene-context module with our PnS method in a transformer-based predictor for vehicle trajectory prediction on the nuScenes dataset, showing that the predictor maintains good prediction performance even without relying on any map information.

Reduced-Complexity Cross-Domain Iterative Detection for OTFS Modulation via Delay-Doppler Decoupling

Jul 03, 2023

Abstract:In this paper, a reduced-complexity cross-domain iterative detection scheme for orthogonal time frequency space (OTFS) modulation is proposed, which exploits channel properties in both the time and delay-Doppler domains. Specifically, we first show that in the time-domain effective channel, the path delay only introduces interference among samples in adjacent time slots, while the Doppler becomes a phase term that does not affect the channel sparsity. This "band-limited" matrix structure motivates us to apply a reduced-size linear minimum mean square error (LMMSE) filter to eliminate the effect of delay in the time domain, while exploiting the cross-domain iteration to minimize the effect of Doppler, noting that time and Doppler form a Fourier dual pair. The state (MSE) evolution is derived and compared with bounds to verify the effectiveness of the proposed scheme. Simulation results demonstrate that the proposed scheme achieves almost the same error performance as the optimal detection at a reduced complexity.

LAformer: Trajectory Prediction for Autonomous Driving with Lane-Aware Scene Constraints

Feb 27, 2023

Abstract:Trajectory prediction for autonomous driving must continuously reason about the motion stochasticity of road agents and comply with scene constraints. Existing methods typically rely on one-stage trajectory prediction models, which condition future trajectories on observed trajectories combined with fused scene information. However, they often struggle with complex scene constraints, such as those encountered at intersections. To this end, we present a novel method, called LAformer. It uses a temporally dense lane-aware estimation module to select only the highest-potential lane segments in an HD map, which effectively and continuously aligns motion dynamics with scene information and, by filtering out irrelevant lane segments, reduces the representation requirements for the subsequent attention-based decoder. Additionally, unlike one-stage prediction models, LAformer utilizes predictions from the first stage as anchor trajectories and adds a second-stage motion refinement module to further explore temporal consistency across the complete time horizon. Extensive experiments on Argoverse 1 and nuScenes demonstrate that LAformer achieves excellent performance for multimodal trajectory prediction.

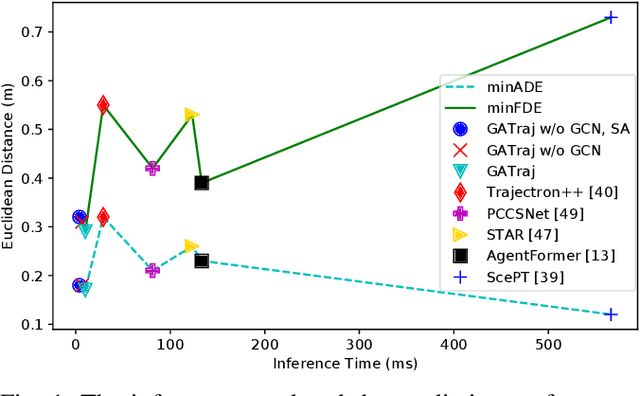

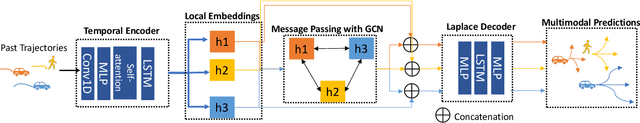

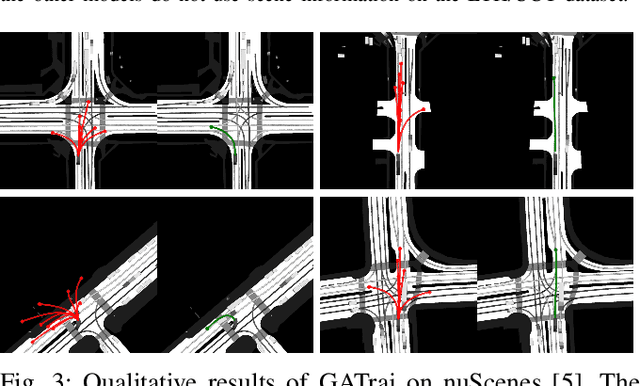

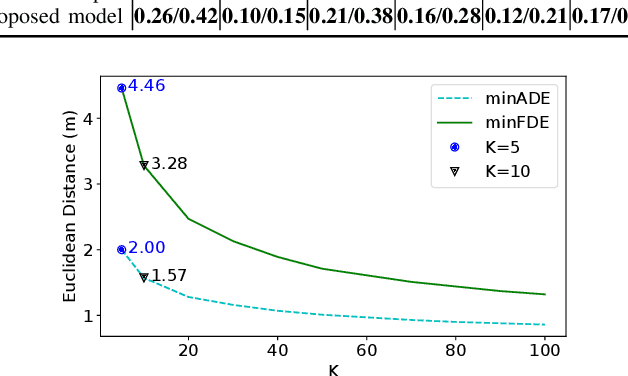

GATraj: A Graph- and Attention-based Multi-Agent Trajectory Prediction Model

Sep 16, 2022

Abstract:Trajectory prediction has been a long-standing problem in intelligent systems such as autonomous driving and robot navigation. Recent state-of-the-art models trained on large-scale benchmarks have been rapidly pushing the limit of performance, mainly focusing on improving prediction accuracy. However, those models put less emphasis on efficiency, which is critical for real-time applications. This paper proposes an attention-based graph model named GATraj with a much higher prediction speed. Spatial-temporal dynamics of agents, e.g., pedestrians or vehicles, are modeled by attention mechanisms. Interactions among agents are modeled by a graph convolutional network. We also implement a Laplacian mixture decoder to mitigate mode collapse and generate diverse multimodal predictions for each agent. Tested on multiple open datasets, our model achieves performance on par with state-of-the-art models at a much higher prediction speed.
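To make the idea of a Laplacian mixture decoder more concrete, the sketch below computes the negative log-likelihood of one target point under a mixture of independent Laplace components, which is the kind of loss such a decoder is commonly trained with. It is an assumption-laden illustration, not GATraj's code: the function name `laplace_mixture_nll`, the argument layout, and the requirement that all weights be strictly positive are choices made here.

```python
import math


def laplace_mixture_nll(target, weights, means, scales):
    """Negative log-likelihood of `target` under a Laplace mixture.

    target:  sequence of D coordinates
    weights: K strictly positive mixture weights summing to 1
    means:   K sequences of D location parameters
    scales:  K sequences of D positive scale parameters
    (Hypothetical signature chosen for this sketch.)
    """
    log_terms = []
    for pi_k, mu_k, b_k in zip(weights, means, scales):
        # log of prod_d (1 / (2 b_d)) * exp(-|y_d - mu_d| / b_d)
        log_pdf = sum(
            -math.log(2.0 * b) - abs(y - mu) / b
            for y, mu, b in zip(target, mu_k, b_k)
        )
        log_terms.append(math.log(pi_k) + log_pdf)

    # Log-sum-exp over components for numerical stability.
    m = max(log_terms)
    return -(m + math.log(sum(math.exp(t - m) for t in log_terms)))


# Two modes for a 2D position; the target lies on the first mode.
nll = laplace_mixture_nll(
    target=[1.0, 2.0],
    weights=[0.5, 0.5],
    means=[[1.0, 2.0], [4.0, 6.0]],
    scales=[[1.0, 1.0], [1.0, 1.0]],
)
```

Because each component keeps its own location and scale, the loss rewards placing at least one mode near the observed future rather than averaging all modes together, which is one plausible reading of how a mixture decoder helps against mode collapse.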

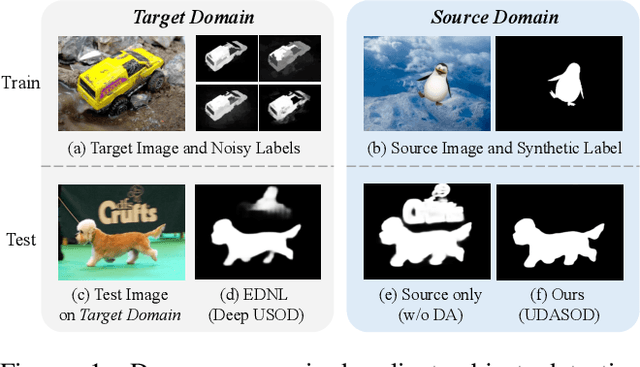

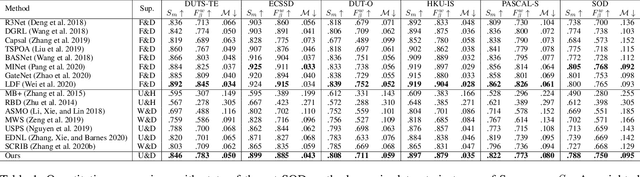

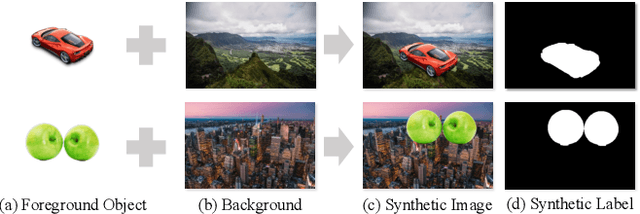

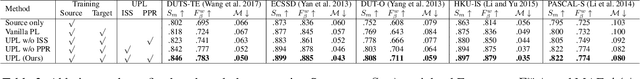

Unsupervised Domain Adaptive Salient Object Detection Through Uncertainty-Aware Pseudo-Label Learning

Feb 26, 2022

Abstract:Recent advances in deep learning have significantly boosted the performance of salient object detection (SOD) at the expense of large-scale per-pixel annotation. To relieve the burden of labor-intensive labeling, deep unsupervised SOD methods have been proposed to exploit noisy labels generated by handcrafted saliency methods. However, it is still difficult to learn accurate saliency details from rough noisy labels. In this paper, we propose to learn saliency from synthetic but clean labels, which naturally have higher pixel-labeling quality without the effort of manual annotation. Specifically, we first construct a novel synthetic SOD dataset by a simple copy-paste strategy. Because of the large appearance differences between synthetic and real-world scenarios, training directly on synthetic data leads to performance degradation on real-world scenarios. To mitigate this problem, we propose a novel unsupervised domain adaptive SOD method to adapt between these two domains by uncertainty-aware self-training. Experimental results show that our proposed method outperforms the existing state-of-the-art deep unsupervised SOD methods on several benchmark datasets, and is even comparable to fully-supervised ones.
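The copy-paste idea can be sketched in a few lines: pasting a cut-out object onto a background yields both a synthetic image and, for free, an exact saliency mask. The helper below is a minimal illustration on nested lists of grayscale values; the name `paste_object` and its arguments are hypothetical, and the paper's actual pipeline (object sources, blending, augmentation) is not described in the abstract.

```python
def paste_object(background, patch, patch_mask, top, left):
    """Paste `patch` onto a copy of `background`.

    Returns (image, saliency_mask). Only pixels where `patch_mask` is
    truthy are copied, and those same pixels are marked 1 in the mask,
    so the label is clean by construction. Hypothetical helper.
    """
    image = [row[:] for row in background]
    mask = [[0] * len(row) for row in background]

    for r, (patch_row, mask_row) in enumerate(zip(patch, patch_mask)):
        for c, (pixel, is_object) in enumerate(zip(patch_row, mask_row)):
            y, x = top + r, left + c
            # Skip pixels that would fall outside the background.
            inside = 0 <= y < len(image) and 0 <= x < len(image[y])
            if is_object and inside:
                image[y][x] = pixel
                mask[y][x] = 1
    return image, mask


# 3x3 black background, 1x2 patch whose left pixel belongs to the object.
background = [[0, 0, 0], [0, 0, 0], [0, 0, 0]]
image, mask = paste_object(background, [[9, 9]], [[1, 0]], top=1, left=1)
```

The resulting pairs are pixel-accurate but look unlike real photographs, which is the domain gap the abstract's uncertainty-aware self-training is meant to close.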

Road Network Guided Fine-Grained Urban Traffic Flow Inference

Sep 29, 2021

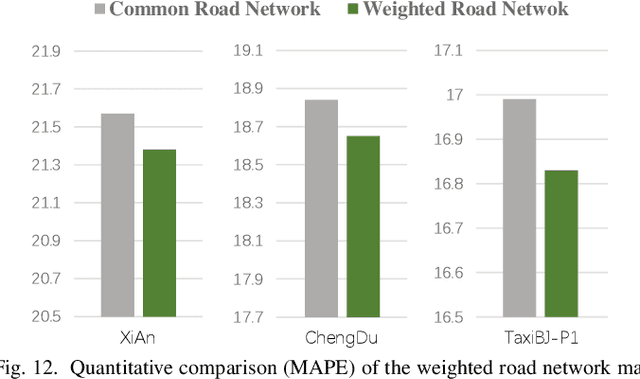

Abstract:Accurately inferring fine-grained traffic flow from coarse-grained flow is an emerging yet crucial problem, which can help greatly reduce the number of traffic monitoring sensors and thus save costs. In this work, we observe that traffic flow is highly correlated with the road network, which was either completely ignored or simply treated as an external factor in previous works. To address this problem, we propose a novel Road-Aware Traffic Flow Magnifier (RATFM) that explicitly exploits the prior knowledge of road networks to fully learn the road-aware spatial distribution of fine-grained traffic flow. Specifically, a multi-directional 1D convolutional layer is first introduced to extract the semantic feature of the road network. Subsequently, we incorporate the road network feature and coarse-grained flow feature to regularize the short-range spatial distribution modeling of road-relative traffic flow. Furthermore, we take the road network feature as a query to capture the long-range spatial distribution of traffic flow with a transformer architecture. Benefiting from the road-aware inference mechanism, our method can generate high-quality fine-grained traffic flow maps. Extensive experiments on three real-world datasets show that the proposed RATFM outperforms state-of-the-art models under various scenarios.
