Mehdi Astaraki

Leveraging Imperfection with MEDLEY: A Multi-Model Approach Harnessing Bias in Medical AI

Aug 29, 2025

Abstract: Bias in medical artificial intelligence is conventionally viewed as a defect requiring elimination. However, human reasoning inherently incorporates biases shaped by education, culture, and experience, suggesting their presence may be inevitable and potentially valuable. We propose MEDLEY (Medical Ensemble Diagnostic system with Leveraged diversitY), a conceptual framework that orchestrates multiple AI models while preserving their diverse outputs rather than collapsing them into a consensus. Unlike traditional approaches that suppress disagreement, MEDLEY documents model-specific biases as potential strengths and treats hallucinations as provisional hypotheses for clinician verification. A proof-of-concept demonstrator was developed using over 30 large language models, creating a minimum viable product that preserved both consensus and minority views in synthetic cases, making diagnostic uncertainty and latent biases transparent for clinical oversight. While not yet a validated clinical tool, the demonstration illustrates how structured diversity can enhance medical reasoning under clinician supervision. By reframing AI imperfection as a resource, MEDLEY offers a paradigm shift that opens new regulatory, ethical, and innovation pathways for developing trustworthy medical AI systems.
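
The idea of keeping minority views next to the consensus, rather than collapsing everything into one answer, can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `summarize_opinions`, the model names, and the synthetic diagnoses are all hypothetical, and the sketch assumes each model returns a single diagnosis string.

```python
from collections import defaultdict


def summarize_opinions(outputs: dict[str, str]) -> dict:
    """Group model outputs by diagnosis, keeping majority and minority views."""
    if not outputs:
        raise ValueError("at least one model output is required")

    # Map each distinct diagnosis to the models that proposed it.
    supporters = defaultdict(list)
    for model, diagnosis in outputs.items():
        supporters[diagnosis].append(model)

    # Rank by number of supporting models; ties are broken alphabetically.
    ranked = sorted(supporters.items(), key=lambda kv: (-len(kv[1]), kv[0]))

    views = [{"diagnosis": d, "models": sorted(m)} for d, m in ranked]
    # Nothing is discarded: the minority views stay in the report.
    return {"consensus": views[0], "minority": views[1:]}


# Hypothetical model names and synthetic outputs, for illustration only.
report = summarize_opinions({
    "model_a": "pneumonia",
    "model_b": "pneumonia",
    "model_c": "pulmonary embolism",
})
```

In this sketch the less common diagnosis is kept together with the model that proposed it, so a clinician could review it as a provisional hypothesis instead of never seeing it.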

Lesion Segmentation in Whole-Body Multi-Tracer PET-CT Images; a Contribution to AutoPET 2024 Challenge

Sep 22, 2024

Abstract: The automatic segmentation of pathological regions within whole-body PET-CT volumes has the potential to streamline various clinical applications such as diagnosis, prognosis, and treatment planning. This study aims to address this challenge by contributing to the AutoPET MICCAI 2024 challenge through a proposed workflow that incorporates image preprocessing, tracer classification, and lesion segmentation steps. The implementation of this pipeline led to a significant enhancement in the segmentation accuracy of the models. This improvement is evidenced by an average overall Dice score of 0.548 across 1611 training subjects, 0.631 and 0.559 for classified FDG and PSMA subjects of the training set, and 0.792 on the preliminary testing phase dataset.
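
For readers unfamiliar with the metric, the Dice score reported above measures the overlap between a predicted mask and a reference mask as 2|A ∩ B| / (|A| + |B|). The following is a minimal sketch on flattened binary masks, not the challenge's evaluation code. The function name `dice_score` is hypothetical, and returning 1.0 when both masks are empty is an assumed convention that individual challenges may handle differently.

```python
from typing import Sequence


def dice_score(pred: Sequence[int], target: Sequence[int]) -> float:
    """Dice similarity coefficient between two flattened binary masks."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of voxels")

    # Voxels labeled as lesion in both masks.
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    # Total lesion voxels in each mask, added together.
    total = sum(1 for p in pred if p) + sum(1 for t in target if t)

    if total == 0:
        # Assumed convention: two empty masks count as perfect agreement.
        return 1.0
    return 2.0 * intersection / total


# One overlapping voxel, three lesion voxels in total: 2 * 1 / 3.
score = dice_score([1, 1, 0, 0], [1, 0, 0, 0])
```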

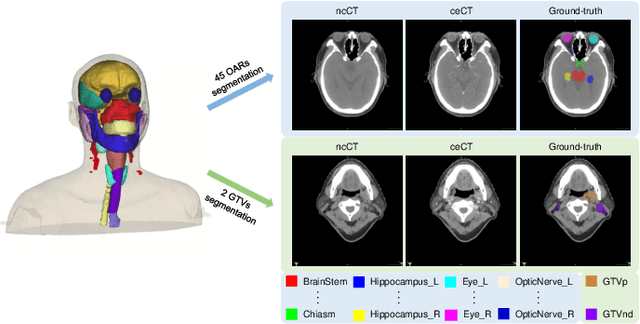

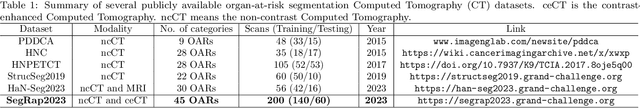

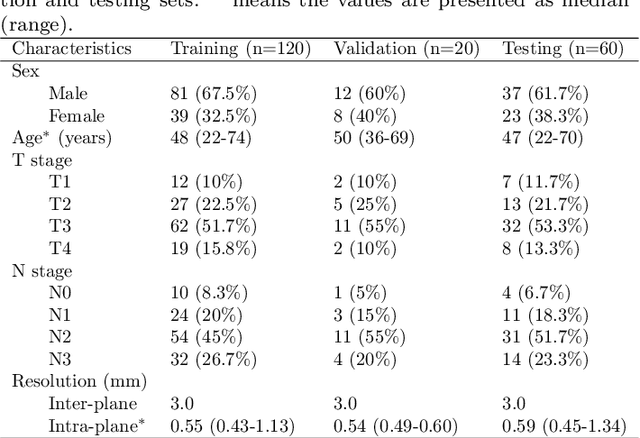

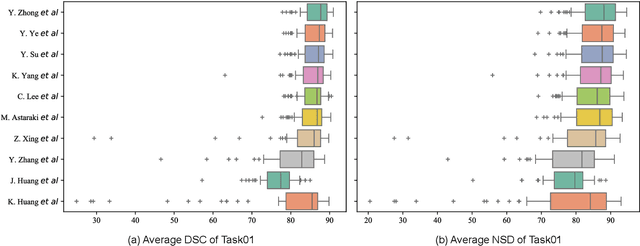

SegRap2023: A Benchmark of Organs-at-Risk and Gross Tumor Volume Segmentation for Radiotherapy Planning of Nasopharyngeal Carcinoma

Dec 15, 2023

Abstract: Radiation therapy is a primary and effective NasoPharyngeal Carcinoma (NPC) treatment strategy. The precise delineation of Gross Tumor Volumes (GTVs) and Organs-At-Risk (OARs) is crucial in radiation treatment, directly impacting patient prognosis. Previously, the delineation of GTVs and OARs was performed by experienced radiation oncologists. Recently, deep learning has achieved promising results in many medical image segmentation tasks. However, for NPC OARs and GTVs segmentation, few public datasets are available for model development and evaluation. To alleviate this problem, the SegRap2023 challenge was organized in conjunction with MICCAI 2023 and presented a large-scale benchmark for OAR and GTV segmentation with 400 Computed Tomography (CT) scans from 200 NPC patients, each with a pair of pre-aligned non-contrast and contrast-enhanced CT scans. The challenge's goal was to segment 45 OARs and 2 GTVs from the paired CT scans. In this paper, we detail the challenge and analyze the solutions of all participants. The average Dice similarity coefficient scores for all submissions ranged from 76.68% to 86.70%, and 70.42% to 73.44% for OARs and GTVs, respectively. We conclude that the segmentation of large-size OARs is well-addressed, and more efforts are needed for GTVs and small-size or thin-structure OARs. The benchmark will remain publicly available here: https://segrap2023.grand-challenge.org

Fully Automatic Segmentation of Gross Target Volume and Organs-at-Risk for Radiotherapy Planning of Nasopharyngeal Carcinoma

Oct 04, 2023

Abstract: Target segmentation in CT images of the Head and Neck (H&N) region is challenging due to low contrast between adjacent soft tissues. The SegRap 2023 challenge focused on benchmarking segmentation algorithms for Nasopharyngeal Carcinoma (NPC), which would be employed as auto-contouring tools for radiation treatment planning. We propose a fully automatic framework and develop two models for a) segmentation of 45 Organs at Risk (OARs) and b) two Gross Tumor Volumes (GTVs). To this end, we preprocess the image volumes by harmonizing the intensity distributions and then automatically cropping the volumes around the target regions. The preprocessed volumes were employed to train a standard 3D U-Net model for each task, separately. Our method took second place for each of the tasks in the validation phase of the challenge. The proposed framework is available at https://github.com/Astarakee/segrap2023

AutoPaint: A Self-Inpainting Method for Unsupervised Anomaly Detection

May 21, 2023

Abstract: Robust and accurate detection and segmentation of heterogeneous tumors appearing in different anatomical organs with supervised methods require large-scale labeled datasets covering all possible types of diseases. Due to the unavailability of such rich datasets and the high cost of annotations, unsupervised anomaly detection (UAD) methods have been developed, aiming to detect pathologies as deviations from normality by utilizing unlabeled healthy image data. However, developed UAD models are often trained with an incomplete distribution of healthy anatomies and have difficulties in preserving anatomical constraints. This work intends to, first, propose a robust inpainting model to learn the details of healthy anatomies and reconstruct high-resolution images by preserving anatomical constraints. Second, we propose an autoinpainting pipeline to automatically detect tumors, replace their appearance with the learned healthy anatomies, and based on that segment the tumoral volumes in a purely unsupervised fashion. Three imaging datasets, including PET, CT, and PET-CT scans of lung tumors and head and neck tumors, are studied as benchmarks for evaluation. Experimental results demonstrate the significant superiority of the proposed method over a wide range of state-of-the-art UAD methods. Moreover, the unsupervised method we propose produces comparable results to a robust supervised segmentation method when applied to multimodal images.

PriorNet: lesion segmentation in PET-CT including prior tumor appearance information

Oct 05, 2022

Abstract: Tumor segmentation in PET-CT images is challenging due to the dual nature of the acquired information: low metabolic information in CT and low spatial resolution in PET. The U-Net architecture is the most common and widely recognized approach when developing a fully automatic image segmentation method in the medical field. We propose a two-step approach, aiming to refine and improve the segmentation performance for tumoral lesions in PET-CT. The first step generates a prior tumor appearance map from the PET-CT volumes, regarded as prior tumor information. The second step, consisting of a standard U-Net, receives the prior tumor appearance map and PET-CT images to generate the lesion mask. We evaluated the method on the 1014 cases available for the AutoPET 2022 challenge, and the results showed an average Dice score of 0.701 on the positive cases.

Development and evaluation of a 3D annotation software for interactive COVID-19 lesion segmentation in chest CT

Jan 13, 2021

Abstract: Segmentation of COVID-19 lesions from chest CT scans is of great importance for better diagnosing the disease and investigating its extent. However, manual segmentation can be very time-consuming and subjective, given the lesions' large variation in shape, size and position. On the other hand, we still lack large manually segmented datasets that could be used for training machine learning-based models for fully automatic segmentation. In this work, we propose a new interactive and user-friendly tool for COVID-19 lesion segmentation, which works by alternating automatic steps (based on level-set segmentation and statistical shape modeling) with manual correction steps. The present software was tested by two different expertise groups: one group of three radiologists and one of three users with an engineering background. Promising segmentation results were obtained by both groups, which achieved satisfactory agreement both between and within groups. Moreover, our interactive tool was shown to significantly speed up the lesion segmentation process when compared to fully manual segmentation. Finally, we investigated inter-observer variability and how it is strongly influenced by several subjective factors, showing the importance for AI researchers and clinical doctors to be aware of the uncertainty in lesion segmentation results.
