Matthew Malencia

Online Multi-Robot Coordination and Cooperation with Task Precedence Relationships

Sep 18, 2025

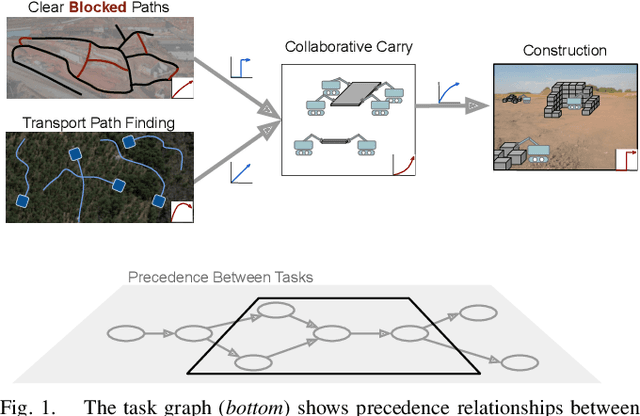

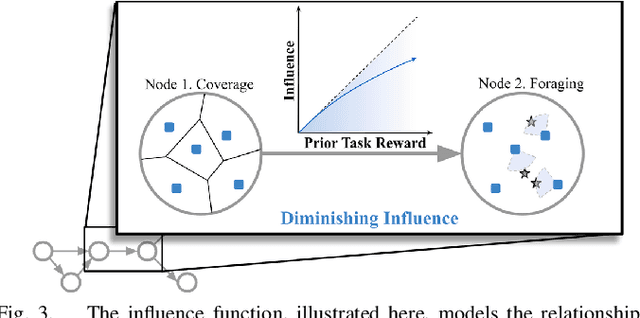

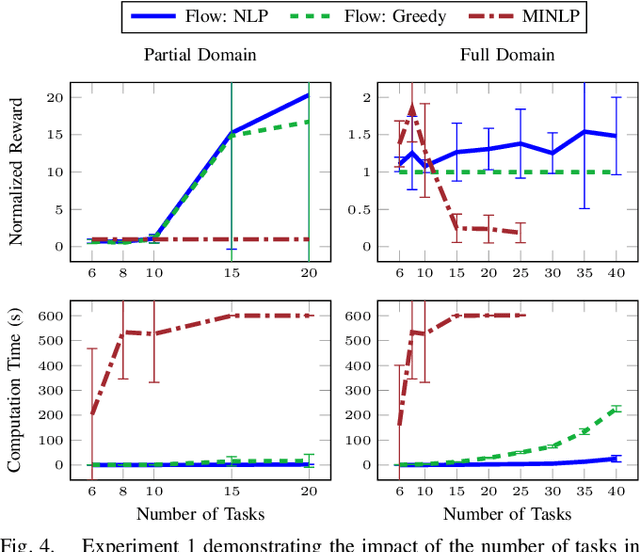

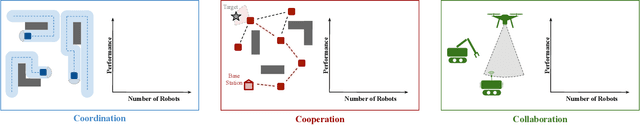

Abstract: We propose a new formulation for the multi-robot task allocation problem that incorporates (a) complex precedence relationships between tasks, (b) efficient intra-task coordination, and (c) cooperation through the formation of robot coalitions. A task graph specifies the tasks and their relationships, and a set of reward functions models the effects of coalition size and preceding task performance. Maximizing task rewards is NP-hard; hence, we propose network flow-based algorithms to approximate solutions efficiently. A novel online algorithm performs iterative re-allocation, providing robustness to task failures and model inaccuracies to achieve higher performance than offline approaches. We comprehensively evaluate the algorithms in a testbed with random missions and reward functions and compare them to a mixed-integer solver and a greedy heuristic. Additionally, we validate the overall approach in an advanced simulator, modeling reward functions based on realistic physical phenomena and executing the tasks with realistic robot dynamics. Results establish efficacy in modeling complex missions and efficiency in generating high-fidelity task plans while leveraging task relationships.

Multi-Robot Coordination and Cooperation with Task Precedence Relationships

Sep 28, 2022

Abstract: We propose a new formulation for the multi-robot task planning and allocation problem that incorporates (a) precedence relationships between tasks; (b) coordination for tasks allowing multiple robots to achieve increased efficiency; and (c) cooperation through the formation of robot coalitions for tasks that cannot be performed by individual robots alone. In our formulation, the tasks and the relationships between the tasks are specified by a task graph. We define a set of reward functions over the task graph's nodes and edges. These functions model the effect of robot coalition size on the task performance, and incorporate the influence of one task's performance on a dependent task. Solving this problem optimally is NP-hard. However, using the task graph formulation allows us to leverage min-cost network flow approaches to obtain approximate solutions efficiently. Additionally, we explore a mixed-integer programming approach, which gives optimal solutions for small instances of the problem but is computationally expensive. We also develop a greedy heuristic algorithm as a baseline. Our modeling and solution approaches result in task plans that leverage task precedence relationships and robot coordination and cooperation to achieve high mission performance, even in large missions with many agents.
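To make the min-cost network flow idea above more concrete, here is a minimal Python sketch under stated assumptions; it is not the authors' algorithm. We assume each robot is one unit of flow routed from a source through a directed acyclic task graph to a sink, that a task's reward depends only on its coalition size and has diminishing returns, and that this reward is encoded as parallel unit-capacity arcs whose costs are the negated marginal rewards. The influence of a preceding task's performance on a dependent task's reward is ignored here. `MinCostFlow`, `build_example`, and the reward numbers are hypothetical.

```python
class MinCostFlow:
    """Successive-shortest-path min-cost flow with Bellman-Ford (handles negative arc costs)."""

    def __init__(self, n):
        self.n = n
        self.adj = [[] for _ in range(n)]  # edge indices leaving each node
        self.to, self.cap, self.cost = [], [], []

    def add_edge(self, u, v, cap, cost):
        # Forward arc at index e, residual reverse arc at index e ^ 1.
        for a, b, c, w in ((u, v, cap, cost), (v, u, 0, -cost)):
            self.adj[a].append(len(self.to))
            self.to.append(b)
            self.cap.append(c)
            self.cost.append(w)

    def solve(self, s, t, max_flow):
        flow, total = 0, 0
        while flow < max_flow:
            dist = [float("inf")] * self.n
            prev = [-1] * self.n
            dist[s] = 0
            for _ in range(self.n - 1):  # Bellman-Ford relaxation rounds
                changed = False
                for u in range(self.n):
                    if dist[u] == float("inf"):
                        continue
                    for e in self.adj[u]:
                        v = self.to[e]
                        if self.cap[e] > 0 and dist[u] + self.cost[e] < dist[v]:
                            dist[v] = dist[u] + self.cost[e]
                            prev[v] = e
                            changed = True
                if not changed:
                    break
            if dist[t] == float("inf"):
                break  # no augmenting path left
            push, v = max_flow - flow, t
            while v != s:  # bottleneck capacity along the shortest path
                push = min(push, self.cap[prev[v]])
                v = self.to[prev[v] ^ 1]
            v = t
            while v != s:  # augment and update residual capacities
                self.cap[prev[v]] -= push
                self.cap[prev[v] ^ 1] += push
                v = self.to[prev[v] ^ 1]
            flow += push
            total += push * dist[t]
        return flow, total


def build_example(num_robots):
    """Hypothetical two-task mission in which task A precedes task B."""
    S, A_IN, A_OUT, B_IN, B_OUT, T = range(6)
    g = MinCostFlow(6)
    g.add_edge(S, A_IN, num_robots, 0)
    g.add_edge(S, B_IN, num_robots, 0)
    g.add_edge(S, T, num_robots, 0)          # idle robots
    for marginal in (5, 3, 1):               # r_A(1)=5, r_A(2)=8, r_A(3)=9
        g.add_edge(A_IN, A_OUT, 1, -marginal)
    for marginal in (4, 2):                  # r_B(1)=4, r_B(2)=6
        g.add_edge(B_IN, B_OUT, 1, -marginal)
    g.add_edge(A_OUT, B_IN, num_robots, 0)   # precedence edge A -> B
    g.add_edge(A_OUT, T, num_robots, 0)
    g.add_edge(B_OUT, T, num_robots, 0)
    return g, S, T


g, s, t = build_example(2)
print(g.solve(s, t, 2))  # (2, -14): both robots do A, then B
```

Because the marginal rewards are non-increasing, the solver always fills a task's most rewarding arc first, so the k-th robot through a task earns exactly the k-th marginal reward. This is the usual way to express a concave reward with linear arc costs. With three robots, the extra robot only adds A's last marginal reward of 1.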

Adaptive Sampling of Latent Phenomena using Heterogeneous Robot Teams (ASLaP-HR)

Aug 11, 2022

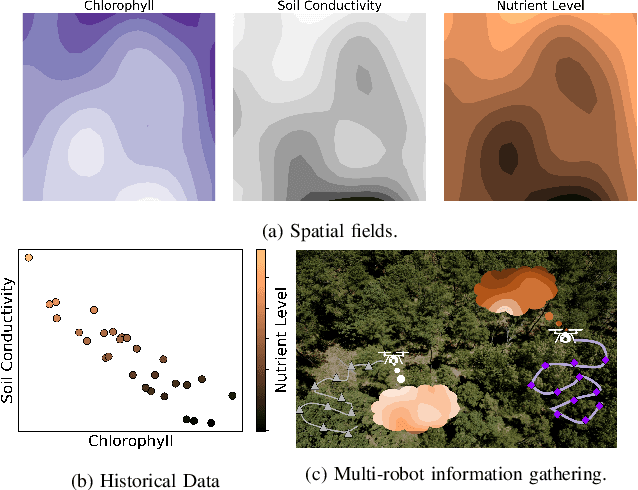

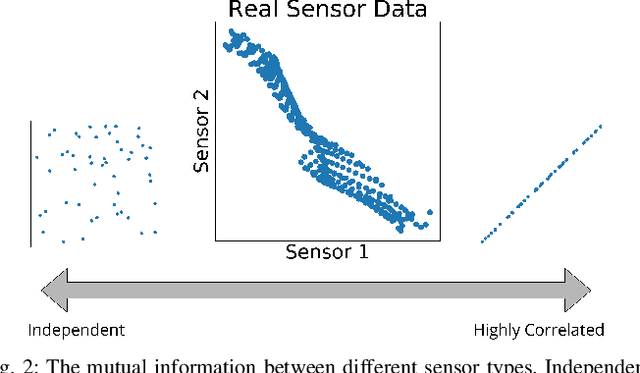

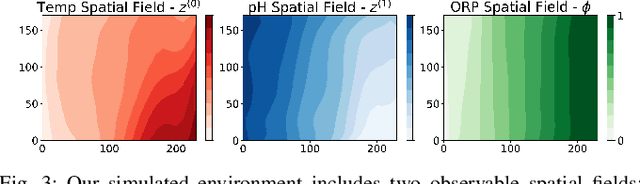

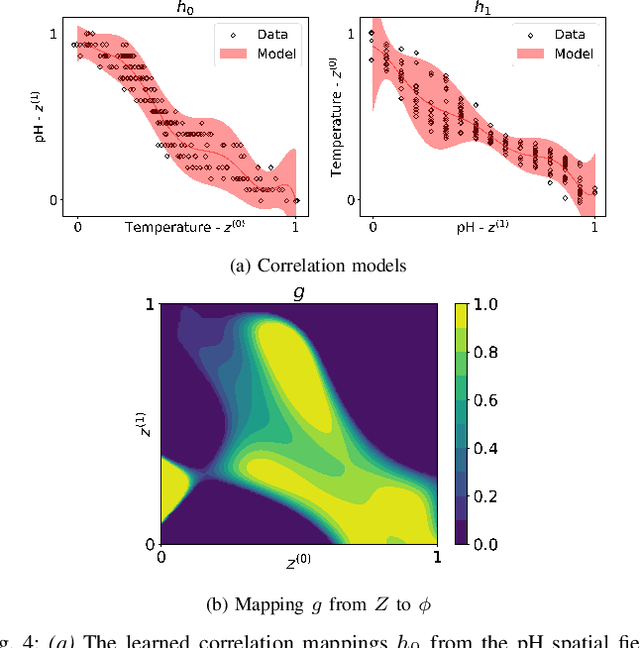

Abstract: In this paper, we present an online adaptive planning strategy for a team of robots with heterogeneous sensors to sample from a latent spatial field using a learned model for decision making. Current robotic sampling methods seek to gather information about an observable spatial field. However, many applications, such as environmental monitoring and precision agriculture, involve phenomena that are not directly observable or are costly to measure, called latent phenomena. In our approach, we seek to reason about the latent phenomenon in real time by effectively sampling the observable spatial fields using a team of robots with heterogeneous sensors, where each robot has a distinct sensor to measure a different observable field. The information gain is estimated using a learned model that maps from the observable spatial fields to the latent phenomenon. This model captures aleatoric uncertainty in the relationship to allow for information-theoretic measures. Additionally, we explicitly consider the correlations among the observable spatial fields, capturing the relationship between sensor types whose observations are not independent. We show it is possible to learn these correlations, and investigate the impact of the learned correlation models on the performance of our sampling approach. Through our qualitative and quantitative results, we illustrate that empirically learned correlations improve the overall sampling efficiency of the team. We simulate our approach using a data set of sensor measurements collected on Lac Hertel in Quebec, which we make publicly available.
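A small worked example can show why correlations between sensor types matter when estimating information gain. Purely as an assumption for illustration (the paper uses a learned model, not this one), suppose the latent value $z$ and two sensor readings $y_1, y_2$ are jointly Gaussian with unit variances and correlations $\rho_1 = \mathrm{corr}(z, y_1)$, $\rho_2 = \mathrm{corr}(z, y_2)$, $\rho_{12} = \mathrm{corr}(y_1, y_2)$. Then $I(z; Y) = \tfrac{1}{2}\ln\left(\det\Sigma_{zz}\det\Sigma_{YY} / \det\Sigma\right)$, and the extra information from $y_2$ after observing $y_1$ is $I(z; y_1, y_2) - I(z; y_1)$. The function names are hypothetical.

```python
import math


def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def info_gain(rho1, rho2, rho12):
    """Return (I(z; y1), I(z; y1, y2)) in nats for the unit-variance Gaussian model."""
    sigma = [[1.0, rho1, rho2],   # covariance of (z, y1, y2)
             [rho1, 1.0, rho12],
             [rho2, rho12, 1.0]]
    i_single = -0.5 * math.log(1.0 - rho1 ** 2)
    det_yy = 1.0 - rho12 ** 2     # det of the 2x2 covariance of (y1, y2)
    i_pair = 0.5 * math.log(det_yy / det3(sigma))  # det(Sigma_zz) = 1
    return i_single, i_pair


for rho12 in (0.0, 0.9):
    single, pair = info_gain(0.6, 0.6, rho12)
    print(f"rho12={rho12}: extra gain from y2 = {pair - single:.4f} nats")
```

With $\rho_1 = \rho_2 = 0.6$, the second sensor adds roughly 0.41 nats when the sensors are uncorrelated but only about 0.015 nats when $\rho_{12} = 0.9$. Treating correlated sensors as independent would therefore overstate the value of sampling both.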

Graph Neural Network Guided Local Search for the Traveling Salesperson Problem

Oct 12, 2021

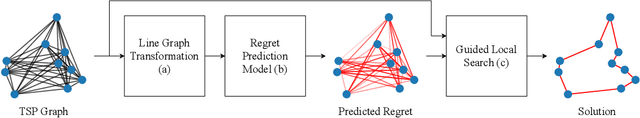

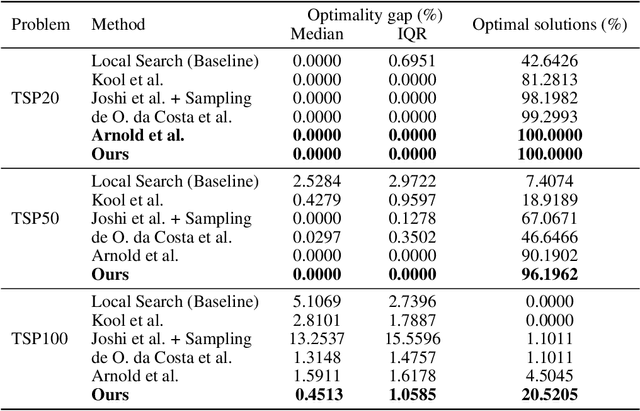

Abstract: Solutions to the Traveling Salesperson Problem (TSP) have practical applications to processes in transportation, logistics, and automation, yet must be computed with minimal delay to satisfy the real-time nature of the underlying tasks. However, solving large TSP instances quickly without sacrificing solution quality remains challenging for current approximate algorithms. To close this gap, we present a hybrid data-driven approach for solving the TSP based on Graph Neural Networks (GNNs) and Guided Local Search (GLS). Our model predicts the regret of including each edge of the problem graph in the solution; GLS uses these predictions in conjunction with the original problem graph to find solutions. Our experiments demonstrate that this approach converges to optimal solutions at a faster rate than state-of-the-art learning-based approaches and non-learning GLS algorithms for the TSP, notably finding optimal solutions to 96% of the 50-node problem set, 7% more than the next best benchmark, and to 20% of the 100-node problem set, 4.5x more than the next best benchmark. When generalizing from 20-node problems to the 100-node problem set, our approach finds solutions with an average optimality gap of 2.5%, a 10x improvement over the next best learning-based benchmark.
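The way GLS can consume per-edge regret predictions can be sketched in plain Python. This is a minimal sketch, not the paper's implementation: the GNN is replaced by a caller-supplied `regret` matrix, the local search is a first-improvement 2-opt, and we assume the predicted regret takes the place of edge cost in the GLS utility, $\mathrm{util}(e) = \mathrm{regret}(e) / (1 + p(e))$, where $p(e)$ is the edge's penalty count. The penalty weight `lam` and its scaling are hypothetical choices.

```python
import math
import random


def tour_length(tour, cost):
    """Length of a closed tour under the given cost matrix."""
    return sum(cost[tour[k]][tour[(k + 1) % len(tour)]] for k in range(len(tour)))


def two_opt(tour, cost):
    """First-improvement 2-opt; modifies `tour` in place and returns it."""
    n = len(tour)
    improved = True
    while improved:
        improved = False
        for i in range(n - 1):
            for j in range(i + 2, n):
                a, b = tour[i], tour[i + 1]
                c, d = tour[j], tour[(j + 1) % n]
                if a == d:
                    continue  # edges (a, b) and (c, d) are adjacent
                delta = cost[a][c] + cost[b][d] - cost[a][b] - cost[c][d]
                if delta < -1e-12:
                    tour[i + 1:j + 1] = reversed(tour[i + 1:j + 1])
                    improved = True
    return tour


def guided_local_search(dist, regret, iterations=50, lam=0.1, seed=0):
    """GLS for the symmetric TSP, using `regret` (e.g. a model's prediction) in the utility."""
    n = len(dist)
    penalty = [[0] * n for _ in range(n)]
    tour = list(range(n))
    random.Random(seed).shuffle(tour)
    best, best_len = tour[:], tour_length(tour, dist)
    scale = lam * best_len / n  # heuristic weight: lam times the initial average edge length
    for _ in range(iterations):
        # Local search on the penalty-augmented cost...
        aug = [[dist[i][j] + scale * penalty[i][j] for j in range(n)] for i in range(n)]
        two_opt(tour, aug)
        # ...but keep the best tour measured by the true cost.
        length = tour_length(tour, dist)
        if length < best_len:
            best, best_len = tour[:], length
        # Penalize the tour edge with maximum utility regret / (1 + penalty).
        edges = [(tour[k], tour[(k + 1) % n]) for k in range(n)]
        u, v = max(edges, key=lambda e: regret[e[0]][e[1]] / (1 + penalty[e[0]][e[1]]))
        penalty[u][v] += 1
        penalty[v][u] += 1
    return best, best_len


points = [(math.cos(2 * math.pi * k / 8), math.sin(2 * math.pi * k / 8)) for k in range(8)]
dist = [[math.dist(p, q) for q in points] for p in points]
tour, length = guided_local_search(dist, regret=dist, iterations=20)
print(tour, round(length, 4))
```

Passing `regret=dist` reduces this to classic cost-based GLS. For eight points on a unit circle the returned length is the octagon perimeter, $16\sin(\pi/8) \approx 6.1229$. A learned model would supply a different `regret` matrix so that penalties land on edges predicted to be absent from the optimal tour.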

Beyond Robustness: A Taxonomy of Approaches towards Resilient Multi-Robot Systems

Sep 25, 2021

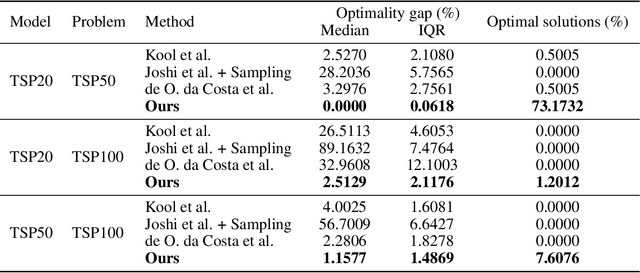

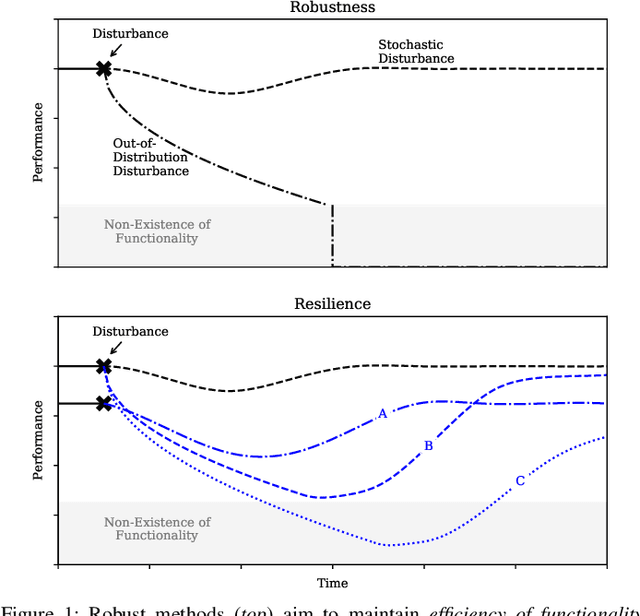

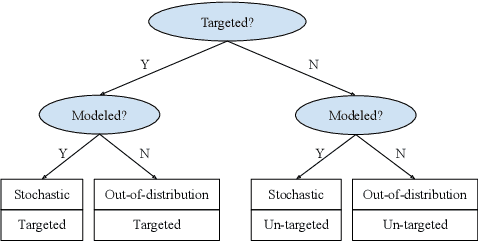

Abstract: Robustness is key to engineering, automation, and science as a whole. However, the property of robustness is often underpinned by costly requirements such as over-provisioning, known uncertainty and predictive models, and known adversaries. These conditions are idealistic and often not satisfiable. Resilience, on the other hand, is the capability to endure unexpected disruptions, to recover swiftly from negative events, and to bounce back to normality. In this survey article, we analyze how resilience is achieved in networks of agents and multi-robot systems that are able to overcome adversity by leveraging system-wide complementarity, diversity, and redundancy, often involving a reconfiguration of robotic capabilities to provide some key ability that was not present in the system a priori. As society increasingly depends on connected automated systems to provide key infrastructure services (e.g., logistics, transport, and precision agriculture), providing the means to achieve resilient multi-robot systems is paramount. By enumerating the consequences of a system that is not resilient (fragile), we argue that resilience must become a central engineering design consideration. Towards this goal, the community needs to gain clarity on how resilience is defined, measured, and maintained. We address these questions across foundational robotics domains, spanning perception, control, planning, and learning. One of our key contributions is a formal taxonomy of approaches, which also helps us discuss the defining factors and stressors for a resilient system. Finally, this survey article gives insight into how resilience may be achieved. Importantly, we highlight open problems that remain to be tackled in order to reap the benefits of resilient robotic systems.

Agree to Disagree: Subjective Fairness in Privacy-Restricted Decentralised Conflict Resolution

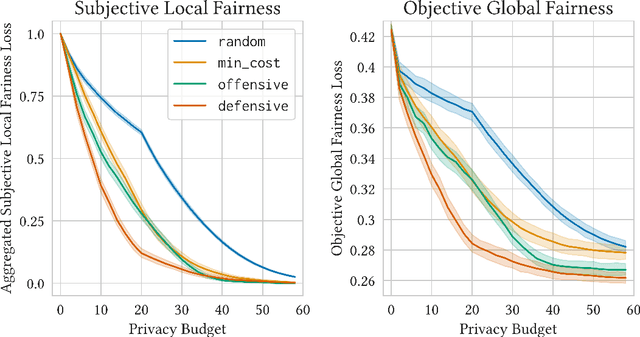

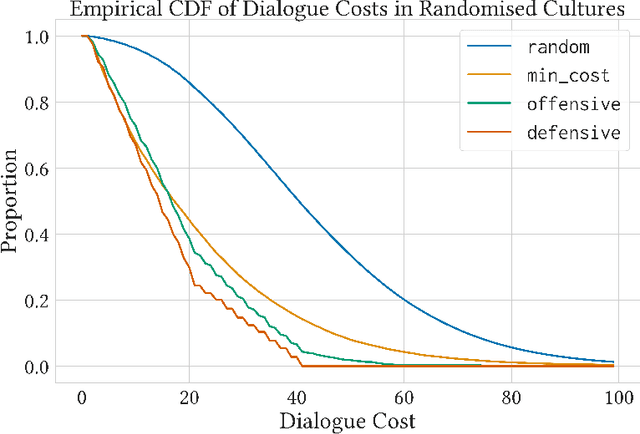

Jun 30, 2021

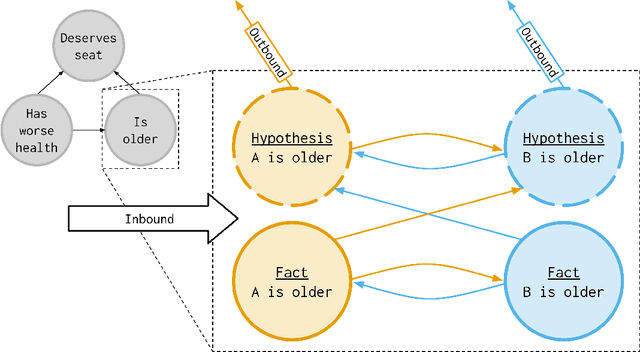

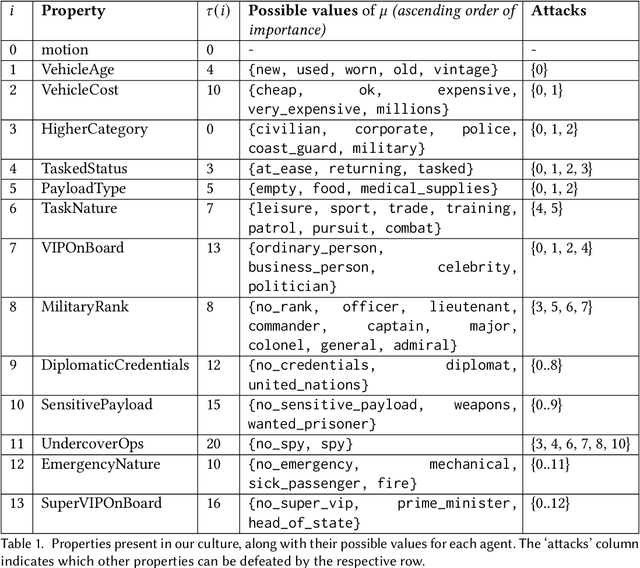

Abstract: Fairness is commonly seen as a property of the global outcome of a system and assumes centralisation and complete knowledge. However, in real decentralised applications, agents only have partial observation capabilities. Under limited information, agents rely on communication to divulge some of their private (and unobservable) information to others. When an agent deliberates to resolve conflicts, limited knowledge may cause its perspective of a correct outcome to differ from the actual outcome of the conflict resolution. This is subjective unfairness. To enable decentralised, fairness-aware conflict resolution under privacy constraints, we make two contributions: (1) a novel interaction approach and (2) a formalism of the relationship between privacy and fairness. Our proposed interaction approach is an architecture for privacy-aware explainable conflict resolution where agents engage in a dialogue of hypotheses and facts. To measure the privacy-fairness relationship, we define subjective and objective fairness on both the local and global scope and formalise the impact of partial observability due to privacy in these different notions of fairness. We first study our proposed architecture and the privacy-fairness relationship in the abstract, testing different argumentation strategies on a large number of randomised cultures. We empirically demonstrate the trade-off between privacy, objective fairness, and subjective fairness and show that better strategies can mitigate the effects of privacy in distributed systems. In addition to this analysis across a broad set of randomised abstract cultures, we analyse a case study for a specific scenario: we instantiate our architecture in a multi-agent simulation of prioritised rule-aware collision avoidance with limited information disclosure.

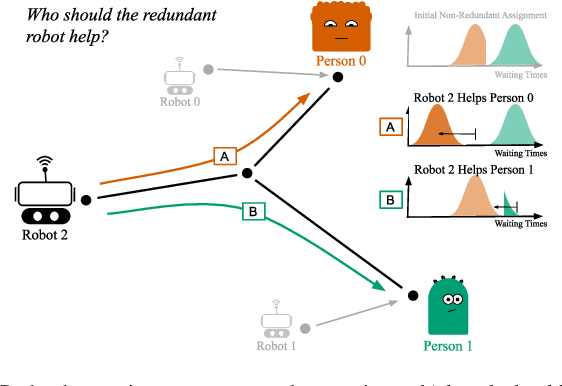

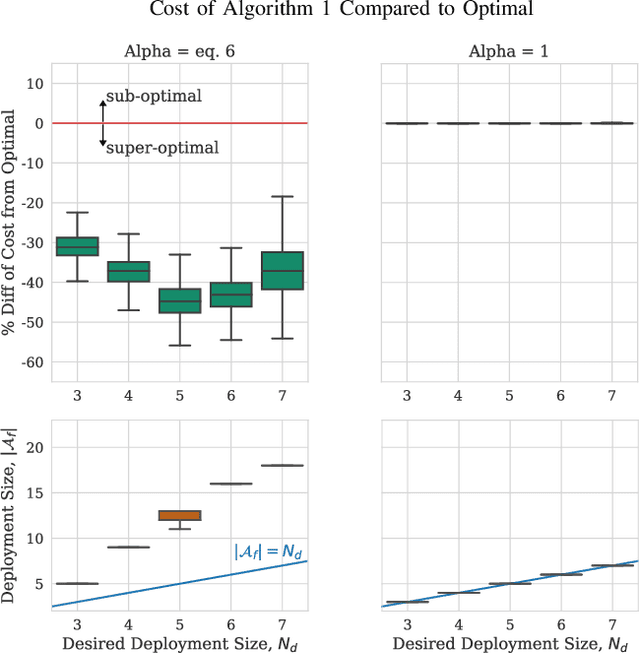

Fair Robust Assignment using Redundancy

Mar 05, 2021

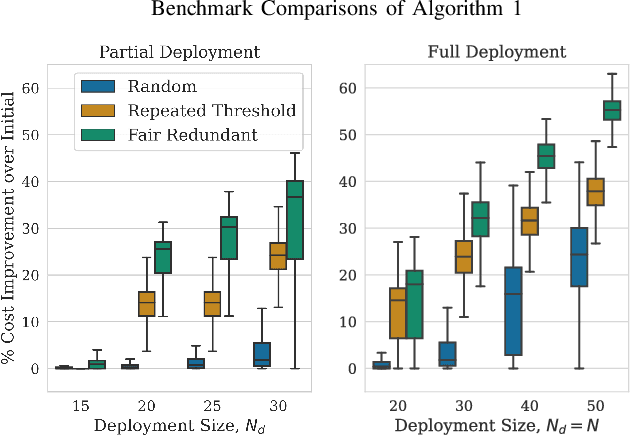

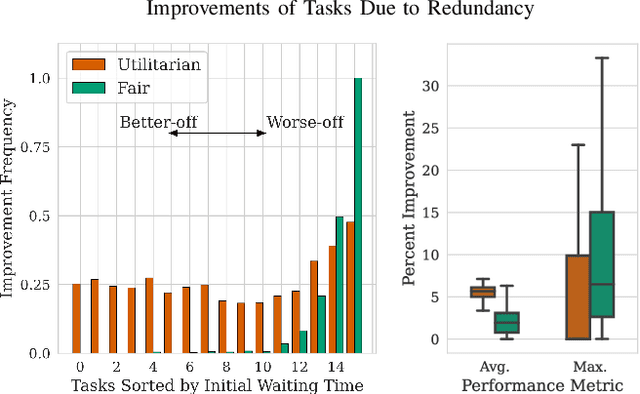

Abstract: We study fairness in redundant assignment for multi-agent task allocation. It has recently been shown that redundant assignment of agents to tasks provides robustness to uncertainty in task performance. However, the question of how to fairly assign these redundant resources across tasks remains unaddressed. In this paper, we present a novel problem formulation for fair redundant task allocation, which we cast as the optimization of worst-case task costs under a cardinality constraint. Solving this problem optimally is NP-hard. We exploit properties of supermodularity to propose a polynomial-time, near-optimal solution. In supermodular redundant assignment, the use of additional agents always improves task costs. Therefore, we provide a solution set that is $\alpha$ times larger than the cardinality constraint. This constraint relaxation enables our approach to achieve a super-optimal cost by using a sub-optimal assignment size. We derive the sub-optimality bound on this cardinality relaxation, $\alpha$. Additionally, we demonstrate that our algorithm performs near-optimally without the cardinality relaxation. We show simulations of redundant assignments of robots to goal nodes on transport networks with uncertain travel times. Empirically, our algorithm outperforms benchmarks, scales to large problems, and provides improvements in both fairness and average utility.
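Why redundancy lowers task cost, and why a worst-case objective can pick different assignments than a total-cost objective, can be illustrated in a few lines. This sketch is not the paper's algorithm: we assume a task's cost is the expected arrival time of the first of its assigned agents, with independent discrete travel-time distributions, and we use a naive hypothetical greedy rule that gives each spare agent to the currently worst task. The names `expected_min`, `task_cost`, `greedy_worst_case`, and all travel times are made up for illustration.

```python
def expected_min(dists):
    """E[min_i T_i] for independent discrete travel times; each dist maps time -> probability."""
    support = sorted({t for d in dists for t in d})
    expected, prev_surv = 0.0, 1.0
    for t in support:
        surv = 1.0  # P(min > t) = product over agents of P(T_i > t)
        for d in dists:
            surv *= sum(p for v, p in d.items() if v > t)
        expected += t * (prev_surv - surv)  # prev_surv - surv = P(min == t)
        prev_surv = surv
    return expected


def task_cost(assignment, travel, task):
    """Expected time until the first agent assigned to `task` arrives."""
    return expected_min([travel[a][task] for a in assignment[task]])


def greedy_worst_case(assignment, travel, spare_agents, budget):
    """Hypothetical greedy: give each spare agent to the currently worst task."""
    assignment = {task: list(agents) for task, agents in assignment.items()}
    spare = list(spare_agents)
    for _ in range(min(budget, len(spare))):
        worst = max(assignment, key=lambda task: task_cost(assignment, travel, task))
        pick = min(spare, key=lambda a: expected_min(
            [travel[b][worst] for b in assignment[worst] + [a]]))
        assignment[worst].append(pick)
        spare.remove(pick)
    return assignment


travel = {  # hypothetical travel-time distributions, travel[agent][task]
    "r1": {"g1": {10: 0.5, 30: 0.5}, "g2": {40: 1.0}},
    "r2": {"g1": {40: 1.0}, "g2": {12: 1.0}},
    "r3": {"g1": {10: 0.5, 30: 0.5}, "g2": {5: 1.0}},
}
result = greedy_worst_case({"g1": ["r1"], "g2": ["r2"]}, travel, ["r3"], budget=1)
print(result)  # r3 joins g1: worst-case cost drops from 20 to 15
```

Adding `r3` to `g1` lowers that task's expected cost from 20 to 15, an instance of additional agents always improving task cost. A rule that minimized total cost would instead send `r3` to `g2` (a saving of 7 versus 5) and leave the worst task at 20, which is the kind of imbalance a fairness objective is meant to avoid.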

Reactive Temporal Logic Planning for Multiple Robots in Unknown Occupancy Grid Maps

Dec 14, 2020

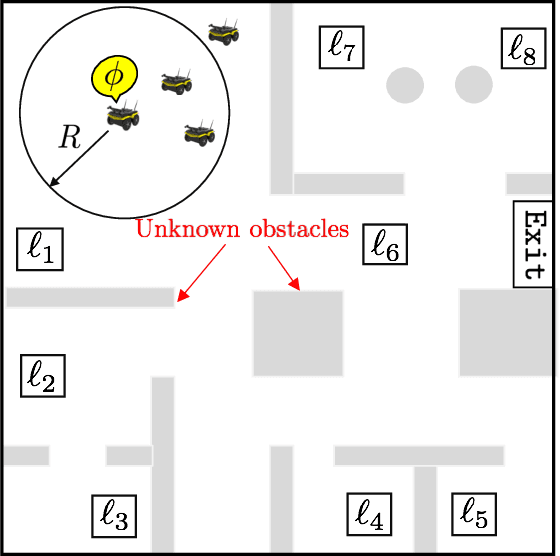

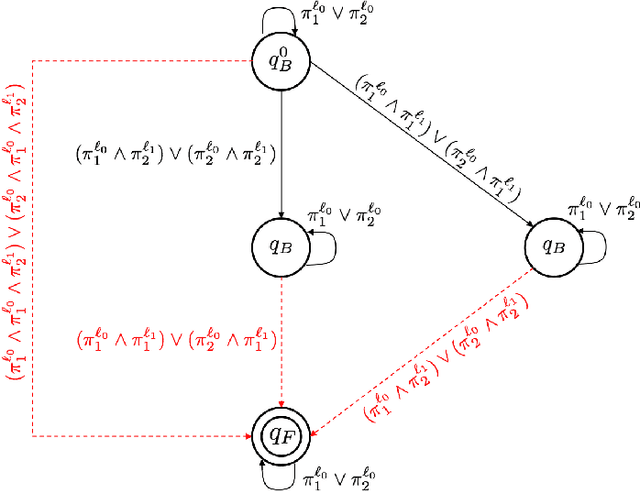

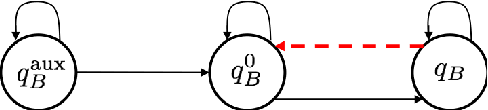

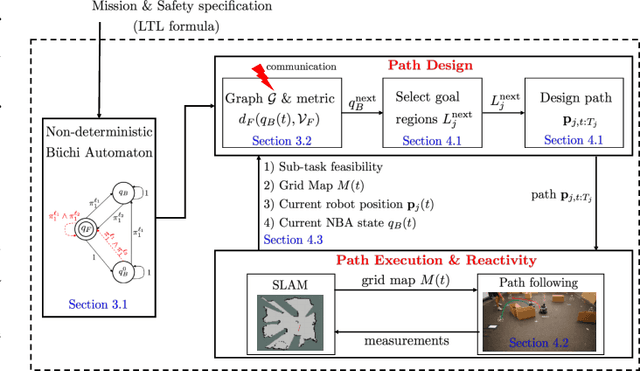

Abstract: This paper proposes a new reactive temporal logic planning algorithm for multiple robots that operate in environments with unknown geometry modeled using occupancy grid maps. The robots are equipped with individual sensors that allow them to continuously learn a grid map of the unknown environment using existing Simultaneous Localization and Mapping (SLAM) methods. The goal of the robots is to accomplish complex collaborative tasks, captured by global Linear Temporal Logic (LTL) formulas. The majority of existing LTL planning approaches rely on discrete abstractions of the robot dynamics operating in known environments and, as a result, they cannot be applied to the more realistic scenarios where the environment is initially unknown. In this paper, we address this novel challenge by proposing the first reactive, abstraction-free, and distributed LTL planning algorithm that can be applied for complex mission planning of multiple robots operating in unknown environments. The proposed algorithm is reactive, i.e., planning adapts to the updated environmental map, and abstraction-free, as it does not rely on designing abstractions of the robot dynamics. Also, our algorithm is distributed in the sense that the global LTL task is decomposed into single-agent reachability problems constructed online based on the continuously learned map. The proposed algorithm is complete under mild assumptions on the structure of the environment and the sensor models. We provide extensive numerical simulations and hardware experiments that illustrate the theoretical analysis and show that the proposed algorithm can address complex planning tasks for large-scale multi-robot systems in unknown environments.
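The reactive part of this idea, re-solving a single-robot reachability problem whenever the learned map changes, can be illustrated with a deliberately simple stand-in. This is not the paper's planner: we swap in breadth-first search on a 4-connected grid, and the cell encoding (`FREE`, `OCCUPIED`, `UNKNOWN`), the optimistic choice of treating unknown cells as traversable, and the function name `plan` are all assumptions made for illustration.

```python
from collections import deque

FREE, OCCUPIED, UNKNOWN = 0, 1, -1  # hypothetical occupancy-grid encoding


def plan(grid, start, goal, unknown_is_free=True):
    """BFS shortest path on a 4-connected occupancy grid; None if the goal is unreachable."""
    rows, cols = len(grid), len(grid[0])

    def passable(r, c):
        return grid[r][c] == FREE or (unknown_is_free and grid[r][c] == UNKNOWN)

    parent = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk parents back to the start
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and nxt not in parent and passable(nr, nc):
                parent[nxt] = cell
                queue.append(nxt)
    return None


grid = [[FREE, FREE, FREE],
        [FREE, UNKNOWN, FREE],
        [FREE, FREE, FREE]]
first = plan(grid, (1, 0), (1, 2))   # optimistic plan straight through the unknown cell
grid[1][1] = OCCUPIED                # a map update reveals an obstacle
second = plan(grid, (1, 0), (1, 2))  # replan on the updated map
print(first, second)
```

The first plan crosses the unknown cell in two moves; once that cell is observed as occupied, replanning detours around it in four moves. In the setting described above, each such reachability target would come from the decomposed LTL task rather than being fixed by hand.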
