Guilherme Paulino-Passos

Preference-Based Abstract Argumentation for Case-Based Reasoning (with Appendix)

Aug 03, 2024

Abstract: In the pursuit of enhancing the efficacy and flexibility of interpretable, data-driven classification models, this work introduces a novel incorporation of user-defined preferences into Abstract Argumentation and Case-Based Reasoning (CBR). Specifically, we introduce Preference-Based Abstract Argumentation for Case-Based Reasoning (which we call AA-CBR-P). It allows users to define multiple approaches to comparing cases, together with an ordering that specifies their preference over these comparison approaches. We prove that the model inherently follows these preferences when making predictions. We also show that previous approaches to abstract argumentation for case-based reasoning are insufficient for expressing preferences over the constituents of an argument. We then demonstrate how this can be applied to a real-world medical dataset sourced from a clinical trial evaluating different methods of assessing patients with a primary brain tumour. We show empirically that our approach outperforms other interpretable machine learning models on this dataset.

Contestable AI needs Computational Argumentation

May 17, 2024

Abstract: AI has become pervasive in recent years, but state-of-the-art approaches predominantly neglect the need for AI systems to be contestable. In contrast, contestability is advocated by AI guidelines (e.g. by the OECD) and by regulation of automated decision-making (e.g. GDPR). In this position paper we explore how contestability can be achieved computationally in and for AI. We argue that contestable AI requires dynamic (human-machine and/or machine-machine) explainability and decision-making processes. Under such processes, machines can (i) interact with humans and/or other machines to progressively explain their outputs and/or their reasoning, as well as assess grounds for contestation provided by these humans and/or other machines, and (ii) revise their decision-making processes to redress any issues successfully raised during contestation. Much of the current AI landscape is tailored to static AIs. Accommodating contestability will therefore require a radical rethinking that, we argue, computational argumentation is ideally suited to support.

Technical Report on the Learning of Case Relevance in Case-Based Reasoning with Abstract Argumentation

Oct 30, 2023

Abstract: Case-based reasoning is known to play an important role in several legal settings. In this paper we focus on a recent approach to case-based reasoning, supported by an instantiation of abstract argumentation. In this approach, arguments represent cases, and attacks between arguments result from outcome disagreement between cases and a notion of relevance. In this context, relevance is connected to a form of specificity among cases. We explore how relevance can be learnt automatically in practice with the help of decision trees. We also explore the combination of case-based reasoning with abstract argumentation (AA-CBR) and learning of case relevance for prediction in legal settings. Specifically, we show that, for two legal datasets, AA-CBR and decision-tree-based learning of case relevance perform competitively in comparison with decision trees. We also show that AA-CBR with decision-tree-based learning of case relevance results in a more compact representation than its decision tree counterparts, which could be beneficial for obtaining cognitively tractable explanations.
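To make the mechanism described above concrete, here is a minimal Python sketch of AA-CBR prediction for binary outcomes. It rests on stated assumptions: cases are sets of features, specificity is plain set inclusion (the papers generalise this to a partial order $\succeq$), and the default case has the empty characterisation. The decision-tree learning of relevance from the technical report is not shown. The names `attacks`, `grounded` and `predict` are hypothetical and chosen for illustration only.

```python
from typing import FrozenSet, Hashable, Iterable, Set, Tuple

# A past case: (set of features, binary outcome 0 or 1).
Case = Tuple[FrozenSet[str], int]


def attacks(x: Case, y: Case, cases: Iterable[Case]) -> bool:
    """True if past case x attacks past case y (assumed: specificity = strict superset)."""
    (xs, xo), (ys, yo) = x, y
    # x must disagree with y and be strictly more specific than y ...
    if xo == yo or not ys < xs:
        return False
    # ... and be minimal: no case with x's outcome lies strictly between y and x.
    return not any(zo == xo and ys < zs < xs for zs, zo in cases)


def grounded(args: Set[Hashable], att: Set[Tuple[Hashable, Hashable]]) -> Set[Hashable]:
    """Grounded extension: least fixed point of the characteristic function."""
    ext: Set[Hashable] = set()
    while True:
        # a is acceptable if every attacker b of a is attacked by some d in ext
        nxt = {
            a for a in args
            if all(any((d, b) in att for d in ext) for b in args if (b, a) in att)
        }
        if nxt == ext:
            return ext
        ext = nxt


def predict(casebase: Iterable[Case], new_case: Iterable[str], default_outcome: int = 0) -> int:
    """Default outcome iff the default case is in the grounded extension, else the other one."""
    default: Case = (frozenset(), default_outcome)
    cases = set(casebase) | {default}
    n = frozenset(new_case)
    focus = ("new", n)  # the new case as an argument; it carries no outcome
    att = {(x, y) for x in cases for y in cases if attacks(x, y, cases)}
    # the new case attacks every past case irrelevant to it (not a subset of n)
    att |= {(focus, y) for y in cases if not y[0] <= n}
    ext = grounded(cases | {focus}, att)
    return default_outcome if default in ext else 1 - default_outcome


casebase = {(frozenset({"a"}), 1), (frozenset({"a", "b"}), 0)}
print(predict(casebase, {"a"}))       # 1
print(predict(casebase, {"a", "b"}))  # 0
print(predict(casebase, {"b"}))       # 0
```

For the new case {a}, the irrelevant case ({a, b}, 0) is attacked by the new case. This leaves ({a}, 1) to defeat the default, so the prediction is 1. For {a, b}, both past cases are relevant: ({a, b}, 0) defeats ({a}, 1) and the default is reinstated. The grounded extension is computed by iterating the characteristic function from the empty set. This is simple and adequate for small casebases.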

On Interactive Explanations as Non-Monotonic Reasoning

Jul 30, 2022

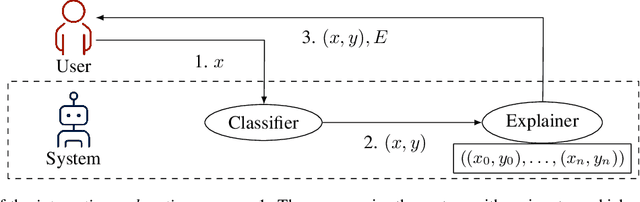

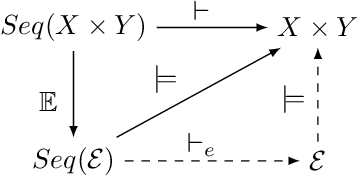

Abstract: Recent work shows issues of consistency with explanations, with methods generating local explanations that seem reasonable instance-wise but are inconsistent across instances. This suggests not only that instance-wise explanations can be unreliable, but mainly that, when interacting with a system via multiple inputs, a user may actually lose confidence in the system. To better analyse this issue, in this work we treat explanations as objects that can be subject to reasoning. We present a formal model of the interactive scenario between user and system, via sequences of inputs, outputs, and explanations. We argue that explanations can be thought of as committing to some model behaviour (even if only prima facie), suggesting a form of entailment, which, we argue, should be thought of as non-monotonic. This allows us: 1) to resolve some of the inconsistencies in explanation that we consider, such as via a specificity relation; 2) to consider properties from the non-monotonic reasoning literature and discuss their desirability, gaining more insight into the interactive explanation scenario.

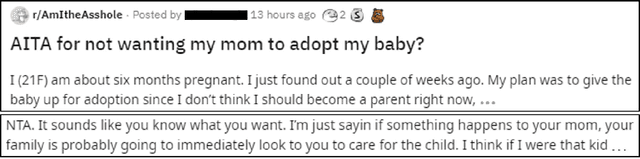

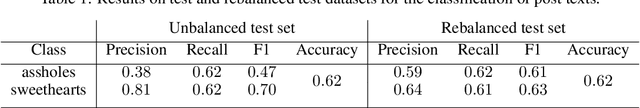

Explainable Patterns for Distinction and Prediction of Moral Judgement on Reddit

Jan 26, 2022

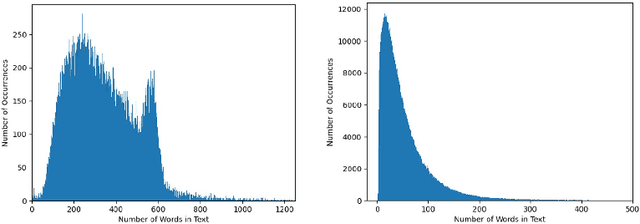

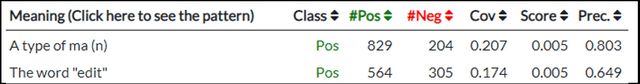

Abstract: The forum r/AmITheAsshole on Reddit hosts discussions of moral issues based on concrete narratives presented by users. Existing analysis of the forum focuses on its comments and does not make the underlying data publicly available. In this paper we build a new dataset of comments and also investigate the classification of the posts in the forum. Further, we identify textual patterns associated with the provocation of moral judgement by posts, with the expression of moral stance in comments, and with the decisions of trained classifiers of posts and comments.

Monotonicity and Noise-Tolerance in Case-Based Reasoning with Abstract Argumentation (with Appendix)

Jul 13, 2021

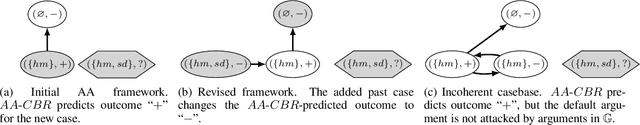

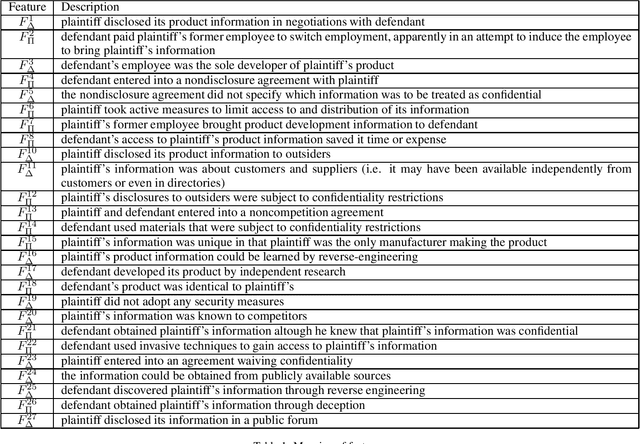

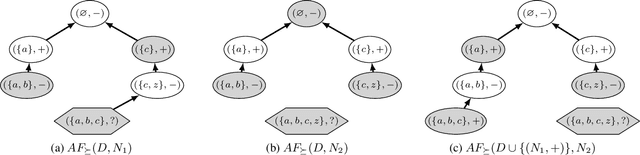

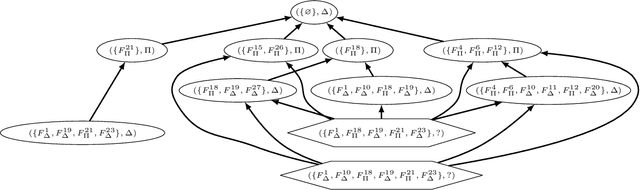

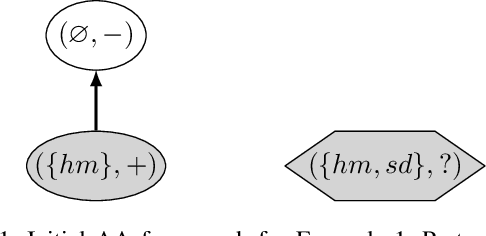

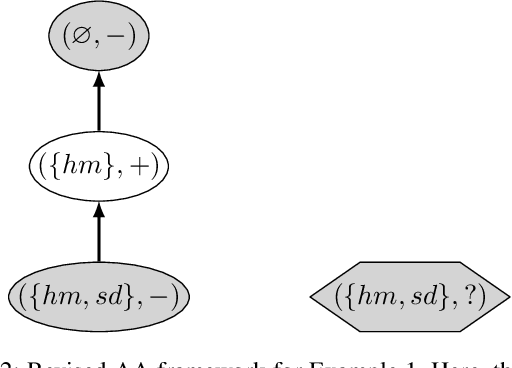

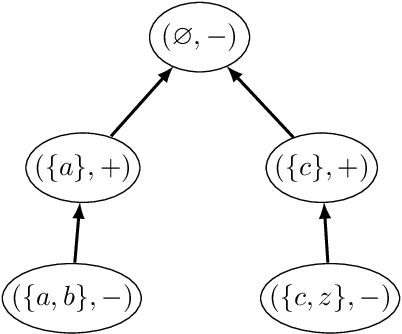

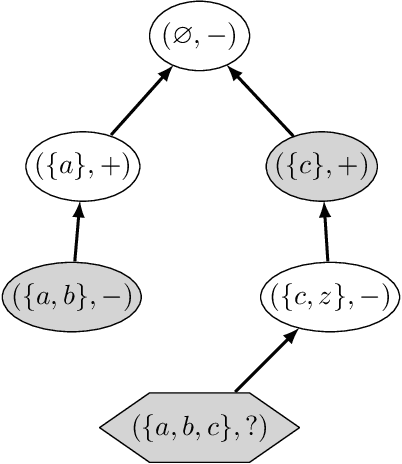

Abstract: Recently, abstract argumentation-based models of case-based reasoning ($AA{\text -}CBR$ in short) have been proposed, originally inspired by the legal domain but also applicable as classifiers in different scenarios. However, the formal properties of $AA{\text -}CBR$ as a reasoning system remain largely unexplored. In this paper, we focus on analysing the non-monotonicity properties of a regular version of $AA{\text -}CBR$ (that we call $AA{\text -}CBR_{\succeq}$). Specifically, we prove that $AA{\text -}CBR_{\succeq}$ is not cautiously monotonic, a property frequently considered desirable in the literature. We then define a variation of $AA{\text -}CBR_{\succeq}$ which is cautiously monotonic. Further, we prove that this variation is equivalent to using $AA{\text -}CBR_{\succeq}$ with a restricted casebase consisting of all "surprising" and "sufficient" cases in the original casebase. As a by-product, we prove that this variation of $AA{\text -}CBR_{\succeq}$ is cumulative and rationally monotonic, and enables a principled treatment of noise in "incoherent" casebases. Finally, we illustrate $AA{\text -}CBR$ and cautious monotonicity questions with a case study in the U.S. Trade Secrets domain, a legal casebase.
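For reference, cautious monotonicity can be stated for AA-CBR roughly as follows. This is a sketch in our own notation, which is an assumption and not necessarily the papers' exact formulation. Here $D$ is a casebase, $N_1, N_2$ are new cases, and $AA{\text -}CBR_{\succeq}(D, N)$ denotes the outcome predicted for $N$ from $D$:

$$
AA{\text -}CBR_{\succeq}(D, N_1) = o_1
\;\Longrightarrow\;
\forall N_2 :\; AA{\text -}CBR_{\succeq}\big(D \cup \{(N_1, o_1)\}, N_2\big) = AA{\text -}CBR_{\succeq}(D, N_2)
$$

In words, adding to the casebase a case labelled with the outcome the model already predicts for it should not change any other prediction. This mirrors the general non-monotonic reasoning principle that if $\Delta \mathrel{|\!\sim} \alpha$ and $\Delta \mathrel{|\!\sim} \beta$ then $\Delta \cup \{\alpha\} \mathrel{|\!\sim} \beta$.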

Agree to Disagree: Subjective Fairness in Privacy-Restricted Decentralised Conflict Resolution

Jun 30, 2021

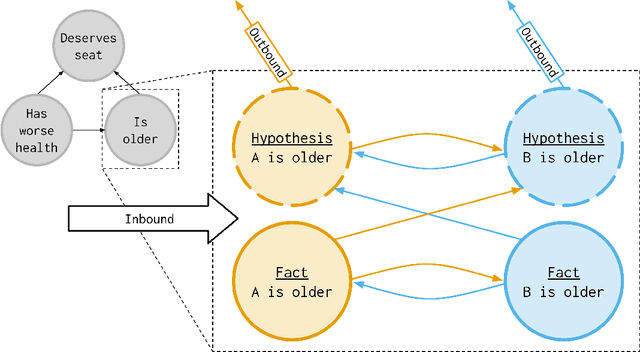

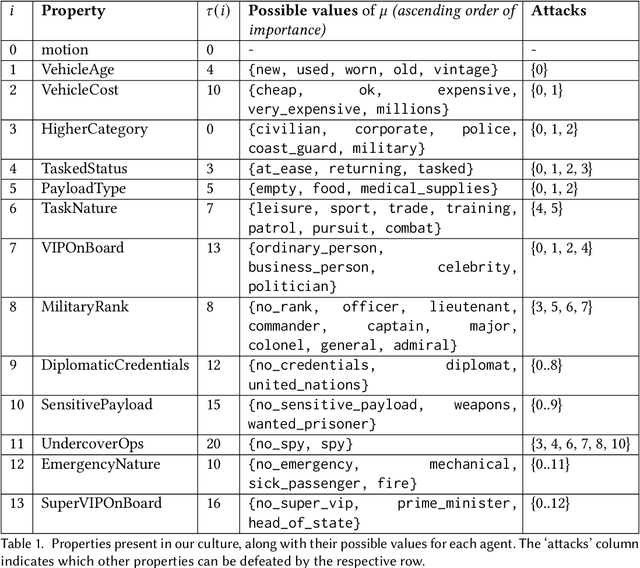

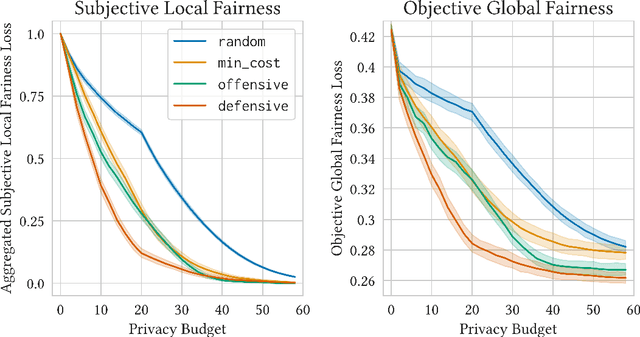

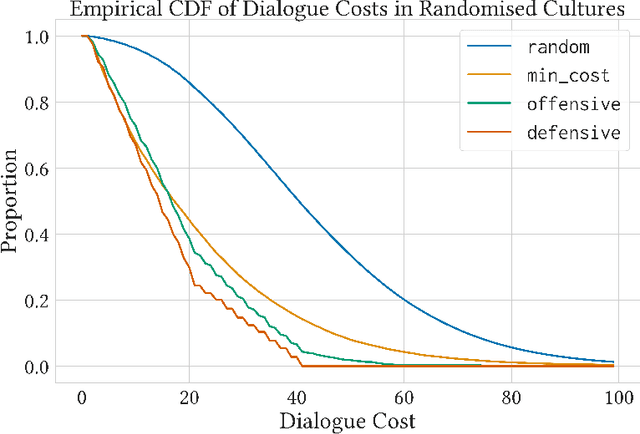

Abstract: Fairness is commonly seen as a property of the global outcome of a system and assumes centralisation and complete knowledge. However, in real decentralised applications, agents only have partial observation capabilities. Under limited information, agents rely on communication to divulge some of their private (and unobservable) information to others. When an agent deliberates to resolve conflicts, limited knowledge may cause its perspective of a correct outcome to differ from the actual outcome of the conflict resolution. This is subjective unfairness. To enable decentralised, fairness-aware conflict resolution under privacy constraints, we make two contributions: (1) a novel interaction approach and (2) a formalisation of the relationship between privacy and fairness. Our proposed interaction approach is an architecture for privacy-aware explainable conflict resolution in which agents engage in a dialogue of hypotheses and facts. To measure the privacy-fairness relationship, we define subjective and objective fairness at both the local and global scope. We then formalise the impact of partial observability due to privacy on these different notions of fairness. We first study our proposed architecture and the privacy-fairness relationship in the abstract, testing different argumentation strategies on a large number of randomised cultures. We empirically demonstrate the trade-off between privacy, objective fairness, and subjective fairness, and show that better strategies can mitigate the effects of privacy in distributed systems. In addition to this analysis across a broad set of randomised abstract cultures, we analyse a case study for a specific scenario. There, we instantiate our architecture in a multi-agent simulation of prioritised rule-aware collision avoidance with limited information disclosure.

Cautious Monotonicity in Case-Based Reasoning with Abstract Argumentation

Jul 13, 2020

Abstract: Recently, abstract argumentation-based models of case-based reasoning ($AA{\text -}CBR$ in short) have been proposed, originally inspired by the legal domain but also applicable as classifiers in different scenarios, including image classification, sentiment analysis of text, and predicting the passage of bills in the UK Parliament. However, the formal properties of $AA{\text -}CBR$ as a reasoning system remain largely unexplored. In this paper, we focus on analysing the non-monotonicity properties of a regular version of $AA{\text -}CBR$ (that we call $AA{\text -}CBR_{\succeq}$). Specifically, we prove that $AA{\text -}CBR_{\succeq}$ is not cautiously monotonic, a property frequently considered desirable in the literature of non-monotonic reasoning. We then define a variation of $AA{\text -}CBR_{\succeq}$ which is cautiously monotonic, and provide an algorithm for obtaining it. Further, we prove that this variation is equivalent to using $AA{\text -}CBR_{\succeq}$ with a restricted casebase consisting of all "surprising" cases in the original casebase.