Alex Raymond

Explanation-Aware Experience Replay in Rule-Dense Environments

Sep 29, 2021

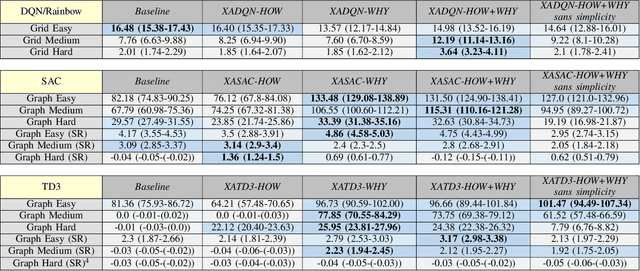

Abstract: Human environments are often regulated by explicit and complex rulesets. Integrating Reinforcement Learning (RL) agents into such environments motivates the development of learning mechanisms that perform well in rule-dense and exception-ridden environments such as autonomous driving on regulated roads. In this paper, we propose a method for organising experience by means of partitioning the experience buffer into clusters labelled on a per-explanation basis. We present discrete and continuous navigation environments compatible with modular rulesets and 9 learning tasks. For environments with explainable rulesets, we convert rule-based explanations into case-based explanations by allocating state-transitions into clusters labelled with explanations. This allows us to sample experiences in a curricular and task-oriented manner, focusing on the rarity, importance, and meaning of events. We label this concept Explanation-Awareness (XA). We perform XA experience replay (XAER) with intra- and inter-cluster prioritisation, and introduce XA-compatible versions of DQN, TD3, and SAC. Performance is consistently superior with XA versions of those algorithms, compared to traditional Prioritised Experience Replay baselines, indicating that explanation engineering can be used in lieu of reward engineering for environments with explainable features.
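
To make the buffer-partitioning idea in the abstract above concrete, here is a minimal sketch of a replay buffer whose transitions are grouped into clusters keyed by an explanation label. It is not the paper's implementation: the class name `ClusteredReplayBuffer`, the label strings, the uniform choice between clusters, and the priority-proportional choice within a cluster are all assumptions chosen for illustration.

```python
import random
from collections import defaultdict, deque


class ClusteredReplayBuffer:
    """Toy replay buffer partitioned by explanation label (hypothetical design)."""

    def __init__(self, capacity_per_cluster=1000, seed=None):
        # One bounded FIFO queue per explanation label.
        self.clusters = defaultdict(lambda: deque(maxlen=capacity_per_cluster))
        self.rng = random.Random(seed)

    def add(self, transition, explanation, priority=1.0):
        # Store the transition in the cluster named by its explanation.
        # Priorities are clamped so that every item keeps a non-zero weight.
        self.clusters[explanation].append((max(priority, 1e-6), transition))

    def sample(self, batch_size):
        labels = list(self.clusters)
        if not labels:
            raise ValueError("cannot sample from an empty buffer")
        batch = []
        for _ in range(batch_size):
            # Inter-cluster step (assumption): pick a cluster uniformly, so
            # rarely seen explanations are not drowned out by common ones.
            label = self.rng.choice(labels)
            items = list(self.clusters[label])
            weights = [priority for priority, _ in items]
            # Intra-cluster step (assumption): priority-proportional choice.
            _, transition = self.rng.choices(items, weights=weights, k=1)[0]
            batch.append((label, transition))
        return batch


# Usage: 100 common transitions and 2 rare ones (labels are made up).
buffer = ClusteredReplayBuffer(seed=0)
for i in range(100):
    buffer.add(("common", i), explanation="no_rule_triggered")
for i in range(2):
    buffer.add(("rare", i), explanation="ran_red_light", priority=2.0)

batch = buffer.sample(1000)
rare_count = sum(1 for label, _ in batch if label == "ran_red_light")
```

In this sketch the rare cluster holds about 2% of the stored transitions but supplies roughly half of each batch, which is one simple way to focus sampling on the rarity of events. A flat prioritised buffer would weight those two transitions only by their individual priorities.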

Agree to Disagree: Subjective Fairness in Privacy-Restricted Decentralised Conflict Resolution

Jun 30, 2021

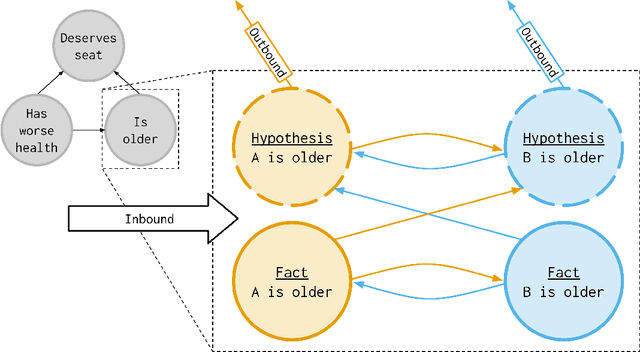

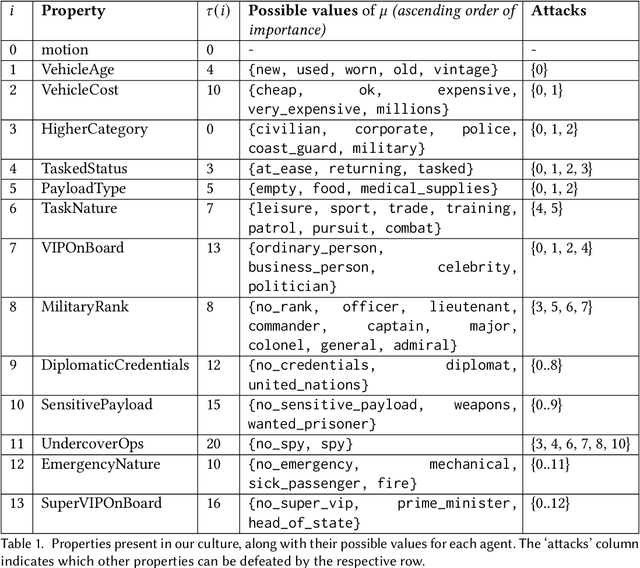

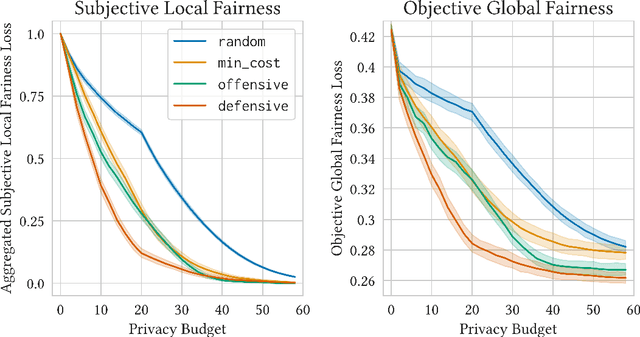

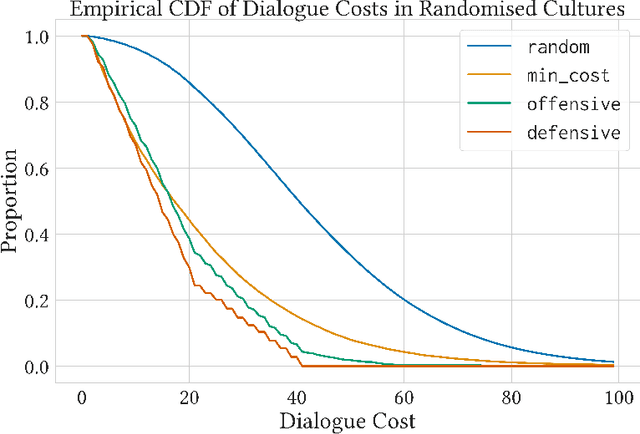

Abstract: Fairness is commonly seen as a property of the global outcome of a system and assumes centralisation and complete knowledge. However, in real decentralised applications, agents only have partial observation capabilities. Under limited information, agents rely on communication to divulge some of their private (and unobservable) information to others. When an agent deliberates to resolve conflicts, limited knowledge may cause its perspective of a correct outcome to differ from the actual outcome of the conflict resolution. This is subjective unfairness. To enable decentralised, fairness-aware conflict resolution under privacy constraints, we have two contributions: (1) a novel interaction approach and (2) a formalism of the relationship between privacy and fairness. Our proposed interaction approach is an architecture for privacy-aware explainable conflict resolution where agents engage in a dialogue of hypotheses and facts. To measure the privacy-fairness relationship, we define subjective and objective fairness on both the local and global scope and formalise the impact of partial observability due to privacy in these different notions of fairness. We first study our proposed architecture and the privacy-fairness relationship in the abstract, testing different argumentation strategies on a large number of randomised cultures. We empirically demonstrate the trade-off between privacy, objective fairness, and subjective fairness and show that better strategies can mitigate the effects of privacy in distributed systems. In addition to this analysis across a broad set of randomised abstract cultures, we analyse a case study for a specific scenario: we instantiate our architecture in a multi-agent simulation of prioritised rule-aware collision avoidance with limited information disclosure.

Toward Affective XAI: Facial Affect Analysis for Understanding Explainable Human-AI Interactions

Jun 16, 2021

Abstract: As machine learning approaches are increasingly used to augment human decision-making, eXplainable Artificial Intelligence (XAI) research has explored methods for communicating system behavior to humans. However, these approaches often fail to account for the emotional responses of humans as they interact with explanations. Facial affect analysis, which examines human facial expressions of emotions, is one promising lens for understanding how users engage with explanations. Therefore, in this work, we aim to (1) identify which facial affect features are pronounced when people interact with XAI interfaces, and (2) develop a multitask feature embedding for linking facial affect signals with participants' use of explanations. Our analyses and results show that the occurrence and values of facial AU1 and AU4, and Arousal are heightened when participants fail to use explanations effectively. This suggests that facial affect analysis should be incorporated into XAI to personalize explanations to individuals' interaction styles and to adapt explanations based on the difficulty of the task performed.

Culture-Based Explainable Human-Agent Deconfliction

Nov 22, 2019

Abstract: Law codes and regulations have helped organise societies for centuries, and as AI systems gain more autonomy, we question how human-agent systems can operate as peers under the same norms, especially when resources are contended. We posit that agents must be accountable and explainable by referring to which rules justify their decisions. The need for explanations is associated with user acceptance and trust. This paper's contribution is twofold: i) we propose an argumentation-based human-agent architecture to map human regulations into a culture for artificial agents with explainable behaviour. Our architecture leans on the notion of argumentative dialogues and generates explanations from the history of such dialogues; and ii) we validate our architecture with a user study in the context of human-agent path deconfliction. Our results show that explanations provide a significantly higher improvement in human performance when systems are more complex. Consequently, we argue that the criteria defining the need for explanations should also consider the complexity of a system. Qualitative findings show that when rules are more complex, explanations significantly reduce the perception of challenge for humans.
