Marynel Vázquez

Yale University

Few-Shot Inference of Human Perceptions of Robot Performance in Social Navigation Scenarios

Dec 17, 2025

Abstract:Understanding how humans evaluate robot behavior during human-robot interactions is crucial for developing socially aware robots that behave according to human expectations. While the traditional approach to capturing these evaluations is to conduct a user study, recent work has proposed utilizing machine learning instead. However, existing data-driven methods require large amounts of labeled data, which limits their use in practice. To address this gap, we propose leveraging the few-shot learning capabilities of Large Language Models (LLMs) to improve how well a robot can predict a user's perception of its performance, and study this idea experimentally in social navigation tasks. To this end, we extend the SEAN TOGETHER dataset with additional real-world human-robot navigation episodes and participant feedback. Using this augmented dataset, we evaluate the ability of several LLMs to predict human perceptions of robot performance from a small number of in-context examples, based on observed spatio-temporal cues of the robot and surrounding human motion. Our results demonstrate that LLMs can match or exceed the performance of traditional supervised learning models while requiring an order of magnitude fewer labeled instances. We further show that prediction performance can improve with more in-context examples, confirming the scalability of our approach. Additionally, we investigate what kind of sensor-based information an LLM relies on to make these inferences by conducting an ablation study on the input features considered for performance prediction. Finally, we explore the novel application of personalized examples for in-context learning, i.e., drawn from the same user being evaluated, finding that they further enhance prediction accuracy. This work paves the path to improving robot behavior in a scalable manner through user-centered feedback.

Let's move on: Topic Change in Robot-Facilitated Group Discussions

Apr 02, 2025

Abstract:Robot-moderated group discussions have the potential to facilitate engaging and productive interactions among human participants. Previous work on topic management in conversational agents has predominantly focused on human engagement and topic personalization, with the agent having an active role in the discussion. Also, studies have shown the usefulness of including robots in groups, yet further exploration is still needed for robots to learn when to change the topic while facilitating discussions. Accordingly, our work investigates the suitability of machine-learning models and audiovisual non-verbal features in predicting appropriate topic changes. We utilized interactions between a robot moderator and human participants, which we annotated and used for extracting acoustic and body language-related features. We provide a detailed analysis of the performance of machine learning approaches using sequential and non-sequential data with different sets of features. The results indicate promising performance in classifying inappropriate topic changes, outperforming rule-based approaches. Additionally, acoustic features exhibited comparable performance and robustness compared to the complete set of multimodal features. Our annotated data is publicly available at https://github.com/ghadj/topic-change-robot-discussions-data-2024.

* 33rd IEEE International Conference on Robot and Human Interactive Communication (ROMAN)

Dynamic Fairness Perceptions in Human-Robot Interaction

Sep 11, 2024

Abstract:People deeply care about how fairly they are treated by robots. The established paradigm for probing fairness in Human-Robot Interaction (HRI) involves measuring the perception of the fairness of a robot at the conclusion of an interaction. However, such an approach is limited as interactions vary over time, potentially causing changes in fairness perceptions as well. To validate this idea, we conducted a 2x2 user study with a mixed design (N=40) where we investigated two factors: the timing of unfair robot actions (early or late in an interaction) and the beneficiary of those actions (either another robot or the participant). Our results show that fairness judgments are not static. They can shift based on the timing of unfair robot actions. Further, we explored using perceptions of three key factors (reduced welfare, conduct, and moral transgression) proposed by a Fairness Theory from Organizational Justice to predict momentary perceptions of fairness in our study. Interestingly, we found that the reduced welfare and moral transgression factors were better predictors than all factors together. Our findings reinforce the idea that unfair robot behavior can shape perceptions of group dynamics and trust towards a robot and pave the path to future research directions on moment-to-moment fairness perceptions.

Aligning Multiclass Neural Network Classifier Criterion with Task Performance via $F_\beta$-Score

May 31, 2024

Abstract:Multiclass neural network classifiers are typically trained using cross-entropy loss. Following training, the performance of this same neural network is evaluated using an application-specific metric based on the multiclass confusion matrix, such as the Macro $F_\beta$-Score. It is questionable whether the use of cross-entropy will yield a classifier that aligns with the intended application-specific performance criteria, particularly in scenarios where there is a need to emphasize one aspect of classifier performance. For example, if greater precision is preferred over recall, the $\beta$ value in the $F_\beta$ evaluation metric can be adjusted accordingly, but the cross-entropy objective remains unaware of this preference during training. We propose a method that addresses this training-evaluation gap for multiclass neural network classifiers such that users can train these models informed by the desired final $F_\beta$-Score. Following prior work in binary classification, we utilize the concepts of the soft-set confusion matrices and a piecewise-linear approximation of the Heaviside step function. Our method extends the $2 \times 2$ binary soft-set confusion matrix to a multiclass $d \times d$ confusion matrix and proposes dynamic adaptation of the threshold value $\tau$, which parameterizes the piecewise-linear Heaviside approximation during run-time. We present a theoretical analysis that shows that our method can be used to optimize for a soft-set based approximation of Macro-$F_\beta$ that is a consistent estimator of Macro-$F_\beta$, and our extensive experiments show the practical effectiveness of our approach.
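For readers unfamiliar with the evaluation metric named in this abstract, the following is a minimal sketch of the standard (hard-count) Macro $F_\beta$-Score computed from a multiclass $d \times d$ confusion matrix. It is not the paper's soft-set approximation or its piecewise-linear Heaviside construction. The function name `macro_f_beta`, the example matrix, and the convention that rows are true classes and columns are predicted classes are all assumptions made for illustration.

```python
def macro_f_beta(confusion, beta=1.0):
    """Macro F_beta from a d x d confusion matrix.

    Assumed layout (illustrative): confusion[i][j] counts samples whose
    true class is i and whose predicted class is j.
    """
    d = len(confusion)
    b2 = beta * beta
    scores = []
    for k in range(d):
        tp = confusion[k][k]                              # true positives for class k
        fn = sum(confusion[k]) - tp                       # class k samples predicted as other classes
        fp = sum(confusion[i][k] for i in range(d)) - tp  # other classes predicted as k
        denom = (1 + b2) * tp + b2 * fn + fp
        # Convention chosen here: a class with no support and no predictions scores 0.
        scores.append((1 + b2) * tp / denom if denom > 0 else 0.0)
    return sum(scores) / d


# Hypothetical 3-class confusion matrix, used only to exercise the function.
confusion = [
    [8, 2, 0],
    [1, 6, 3],
    [0, 1, 9],
]
f1 = macro_f_beta(confusion, beta=1.0)      # precision and recall weighted equally
f_half = macro_f_beta(confusion, beta=0.5)  # beta < 1 weights precision more heavily
```

Setting `beta` below 1 puts more weight on precision and above 1 puts more weight on recall, which is the preference the abstract says a cross-entropy objective does not see during training.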

Learning Human Preferences Over Robot Behavior as Soft Planning Constraints

Mar 28, 2024

Abstract:Preference learning has long been studied in Human-Robot Interaction (HRI) in order to adapt robot behavior to specific user needs and desires. Typically, human preferences are modeled as a scalar function; however, such a formulation confounds critical considerations on how the robot should behave for a given task, with desired -- but not required -- robot behavior. In this work, we distinguish between such required and desired robot behavior by leveraging a planning framework. Specifically, we propose a novel problem formulation for preference learning in HRI where various types of human preferences are encoded as soft planning constraints. Then, we explore a data-driven method to enable a robot to infer preferences by querying users, which we instantiate in rearrangement tasks in the Habitat 2.0 simulator. We show that the proposed approach is promising at inferring three types of preferences even under varying levels of noise in simulated user choices between potential robot behaviors. Our contributions open up doors to adaptable planning-based robot behavior in the future.

REACT: Two Datasets for Analyzing Both Human Reactions and Evaluative Feedback to Robots Over Time

Jan 31, 2024

Abstract:Recent work in Human-Robot Interaction (HRI) has shown that robots can leverage implicit communicative signals from users to understand how they are being perceived during interactions. For example, these signals can be gaze patterns, facial expressions, or body motions that reflect internal human states. To facilitate future research in this direction, we contribute the REACT database, a collection of two datasets of human-robot interactions that display users' natural reactions to robots during a collaborative game and a photography scenario. Further, we analyze the datasets to show that interaction history is an important factor that can influence human reactions to robots. As a result, we believe that future models for interpreting implicit feedback in HRI should explicitly account for this history. REACT opens up doors to this possibility in the future.

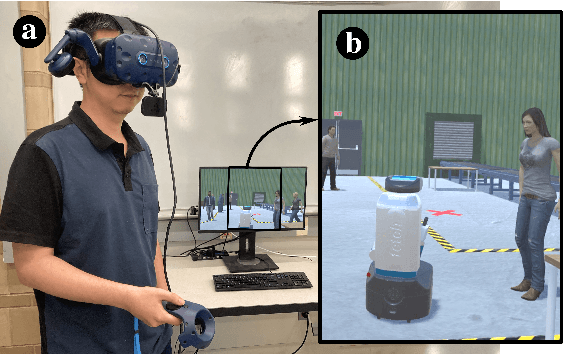

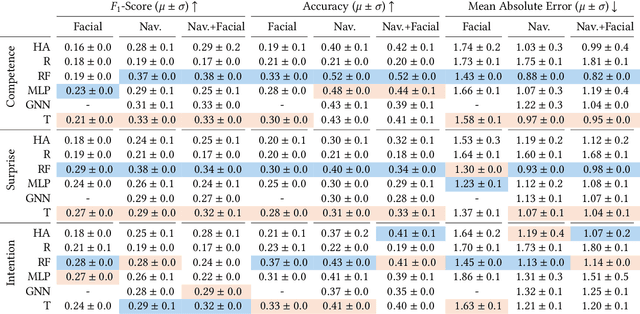

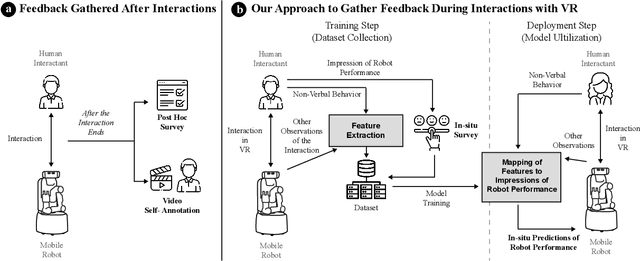

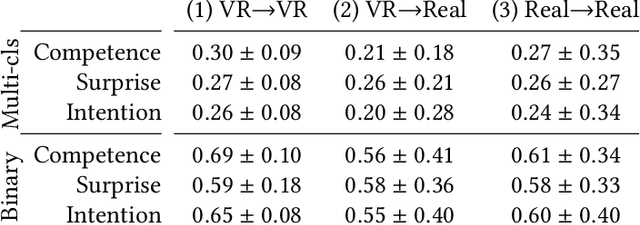

Towards Inferring Users' Impressions of Robot Performance in Navigation Scenarios

Oct 17, 2023

Abstract:Human impressions of robot performance are often measured through surveys. As a more scalable and cost-effective alternative, we study the possibility of predicting people's impressions of robot behavior using non-verbal behavioral cues and machine learning techniques. To this end, we first contribute the SEAN TOGETHER Dataset consisting of observations of an interaction between a person and a mobile robot in a Virtual Reality simulation, together with impressions of robot performance provided by users on a 5-point scale. Second, we contribute analyses of how well humans and supervised learning techniques can predict perceived robot performance based on different combinations of observation types (e.g., facial, spatial, and map features). Our results show that facial expressions alone provide useful information about human impressions of robot performance; but in the navigation scenarios we tested, spatial features are the most critical piece of information for this inference task. Also, when evaluating results as binary classification (rather than multiclass classification), the F1-Score of human predictions and machine learning models more than doubles, showing that both are better at telling the directionality of robot performance than predicting exact performance ratings. Based on our findings, we provide guidelines for implementing these prediction models in real-world navigation scenarios.

Shutter, the Robot Photographer: Leveraging Behavior Trees for Public, In-the-Wild Human-Robot Interactions

Feb 01, 2023

Abstract:Deploying interactive systems in-the-wild requires adaptability to situations not encountered in lab environments. Our work details our experience with the impact of architecture choice on behavior reusability and reactivity while deploying a public interactive system. In particular, we introduce Shutter, a robot photographer and a platform for public interaction. In designing Shutter's architecture, we focused on adaptability for in-the-wild deployment, while developing a reusable platform to facilitate future research in public human-robot interaction. We find that behavior trees allow reactivity, especially in group settings, and encourage designing reusable behaviors.

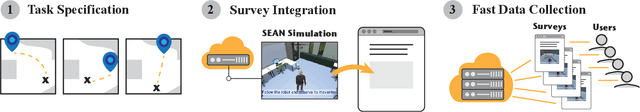

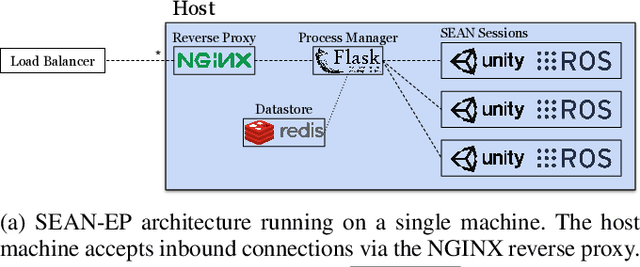

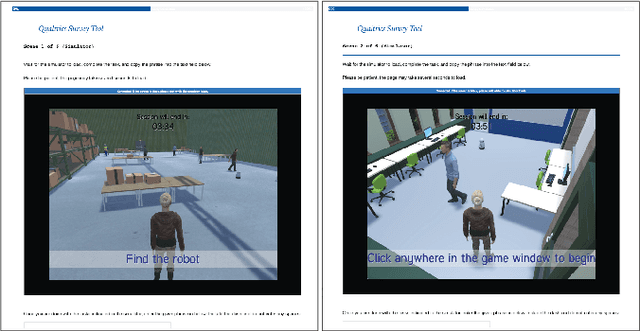

SEAN-EP: A Platform for Collecting Human Feedback for Social Robot Navigation at Scale

Dec 22, 2020

Abstract:We introduce the SEAN Experimental Platform (SEAN-EP), an open-source system that allows roboticists to gather human feedback for social robot navigation at scale using online interactive simulations. Through SEAN-EP, remote users can control the motion of a human avatar via their web browser and interact with a virtual robot controlled through the Robot Operating System. Heavy computation in SEAN-EP is delegated to cloud servers such that users do not need specialized hardware to take part in the simulations. We validated SEAN-EP and its usability through an online survey, and compared the data collected from this survey with a similar video survey. Our results suggest that human perceptions of robots may differ based on whether they interact with the robots in simulation or observe them in videos. Also, our study suggests that people may perceive the surveys with interactive simulations as less mentally demanding than video surveys.

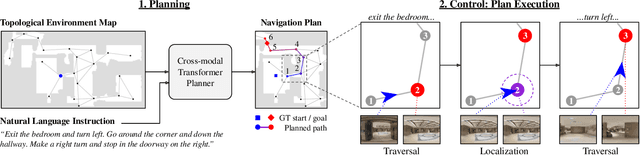

Topological Planning with Transformers for Vision-and-Language Navigation

Dec 09, 2020

Abstract:Conventional approaches to vision-and-language navigation (VLN) are trained end-to-end but struggle to perform well in freely traversable environments. Inspired by the robotics community, we propose a modular approach to VLN using topological maps. Given a natural language instruction and topological map, our approach leverages attention mechanisms to predict a navigation plan in the map. The plan is then executed with low-level actions (e.g. forward, rotate) using a robust controller. Experiments show that our method outperforms previous end-to-end approaches, generates interpretable navigation plans, and exhibits intelligent behaviors such as backtracking.
