Fethiye Irmak Dogan

Investigating Associational Biases in Inter-Model Communication of Large Generative Models

Jan 29, 2026

Abstract: Social bias in generative AI can manifest not only as performance disparities but also as associational bias, whereby models learn and reproduce stereotypical associations between concepts and demographic groups, even in the absence of explicit demographic information (e.g., associating doctors with men). These associations can persist, propagate, and potentially amplify across repeated exchanges in inter-model communication pipelines, where one generative model's output becomes another's input. This is especially salient for human-centred perception tasks, such as human activity recognition and affect prediction, where inferences about behaviour and internal states can lead to errors or stereotypical associations that propagate into unequal treatment. In this work, focusing on human activity and affective expression, we study how such associations evolve within an inter-model communication pipeline that alternates between image generation and image description. Using the RAF-DB and PHASE datasets, we quantify demographic distribution drift induced by model-to-model information exchange and assess whether these drifts are systematic using an explainability pipeline. Our results reveal demographic drifts toward younger representations for both actions and emotions, as well as toward more female-presenting representations, primarily for emotions. We further find evidence that some predictions are supported by spurious visual regions (e.g., background or hair) rather than concept-relevant cues (e.g., body or face). We also examine whether these demographic drifts translate into measurable differences in downstream behaviour, i.e., while predicting activity and emotion labels. Finally, we outline mitigation strategies spanning data-centric, training and deployment interventions, and emphasise the need for careful safeguards when deploying interconnected models in human-centred AI systems.

Expectations, Explanations, and Embodiment: Attempts at Robot Failure Recovery

Apr 09, 2025

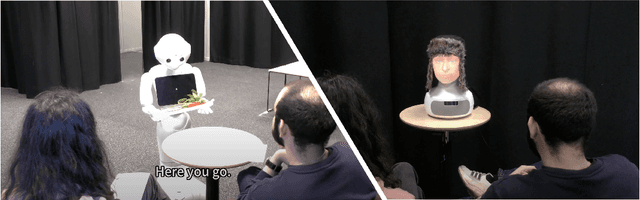

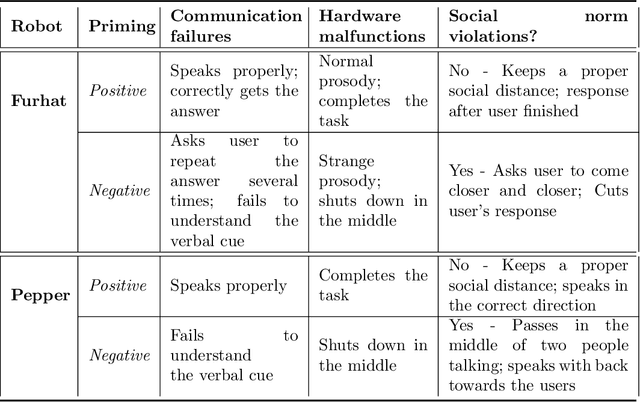

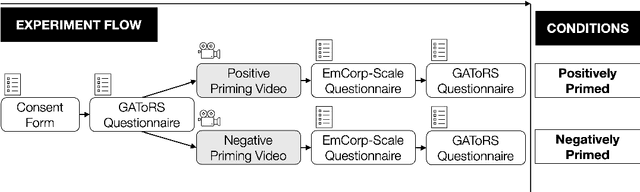

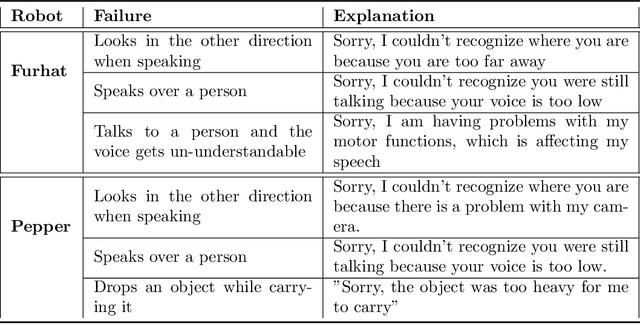

Abstract: Expectations critically shape how people form judgments about robots, influencing whether they view failures as minor technical glitches or deal-breaking flaws. This work explores how high and low expectations, induced through brief video priming, affect user perceptions of robot failures and the utility of explanations in HRI. We conducted two online studies ($N=600$ total participants), each replicated across two robots with different embodiments, Furhat and Pepper. In our first study, grounded in expectation theory, participants were divided into two groups, one primed with positive and the other with negative expectations regarding the robot's performance, establishing distinct expectation frameworks. This validation study aimed to verify whether the videos could reliably establish low- and high-expectation profiles. In the second study, participants were primed using the validated videos and then viewed a new scenario in which the robot failed at a task. Half viewed a version where the robot explained its failure, while the other half received no explanation. We found that explanations significantly improved user perceptions of Furhat, especially when participants were primed to have lower expectations. Explanations boosted satisfaction and enhanced the robot's perceived expressiveness, indicating that effectively communicating the cause of errors can help repair user trust. By contrast, Pepper's explanations produced minimal impact on user attitudes, suggesting that a robot's embodiment and style of interaction could determine whether explanations can successfully offset negative impressions. Together, these findings underscore the need to consider users' expectations when tailoring explanation strategies in HRI. When expectations are initially low, a cogent explanation can make the difference between dismissing a failure and appreciating the robot's transparency and effort to communicate.

AsyReC: A Multimodal Graph-based Framework for Spatio-Temporal Asymmetric Dyadic Relationship Classification

Apr 07, 2025

Abstract: Dyadic social relationships, which refer to relationships between two individuals who know each other through repeated interactions (or not), are shaped by shared spatial and temporal experiences. Current computational methods for modeling these relationships face three major challenges: (1) the failure to model asymmetric relationships, e.g., one individual may perceive the other as a friend while the other perceives them as an acquaintance, (2) the disruption of continuous interactions by discrete frame sampling, which segments the temporal continuity of interaction in real-world scenarios, and (3) the inability to capture periodic behavioral cues, such as rhythmic vocalizations or recurrent gestures, which are crucial for inferring the evolution of dyadic relationships. To address these challenges, we propose AsyReC, a multimodal graph-based framework for asymmetric dyadic relationship classification, with three core innovations: (i) a triplet graph neural network with node-edge dual attention that dynamically weights multimodal cues to capture interaction asymmetries (addressing challenge 1); (ii) a clip-level relationship learning architecture that preserves temporal continuity, enabling fine-grained modeling of real-world interaction dynamics (addressing challenge 2); and (iii) a periodic temporal encoder that projects time indices onto sine/cosine waveforms to model recurrent behavioral patterns (addressing challenge 3). Extensive experiments on two public datasets demonstrate state-of-the-art performance, while ablation studies validate the critical role of asymmetric interaction modeling and periodic temporal encoding in improving the robustness of dyadic relationship classification in real-world scenarios. Our code is publicly available at: https://github.com/tw-repository/AsyReC.
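The idea of projecting time indices onto sine/cosine waveforms can be illustrated with a minimal sketch. This is not the AsyReC implementation: the function name `periodic_time_encoding`, the feature size `dim`, and the geometric frequency schedule controlled by `base_period` are all assumptions chosen for illustration (the schedule mirrors the common sinusoidal positional encoding).

```python
import math


def periodic_time_encoding(t, dim=8, base_period=10000.0):
    """Map a time index t to `dim` features of interleaved sine/cosine values.

    Hypothetical helper: the frequency schedule below is an assumption,
    not something taken from the AsyReC paper or code.
    """
    if dim % 2 != 0:
        raise ValueError("dim must be even (one sine and one cosine per frequency)")
    features = []
    for i in range(dim // 2):
        # Frequencies decay geometrically, so early pairs capture fast rhythms
        # and later pairs capture slow ones.
        freq = 1.0 / (base_period ** (2 * i / dim))
        features.append(math.sin(t * freq))
        features.append(math.cos(t * freq))
    return features


# Example: encode the first three clip indices of an interaction.
for clip_index in range(3):
    print(clip_index, [round(x, 3) for x in periodic_time_encoding(clip_index, dim=4)])
```

Because each sine/cosine pair lies on the unit circle, time indices that are a full period apart at a given frequency map to the same pair of values, which is what lets a downstream model pick up recurrent patterns.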

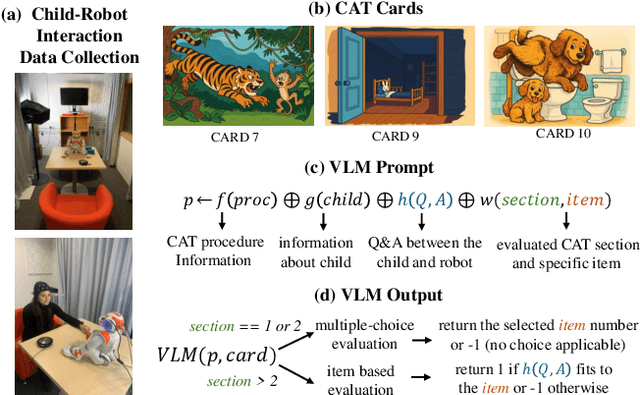

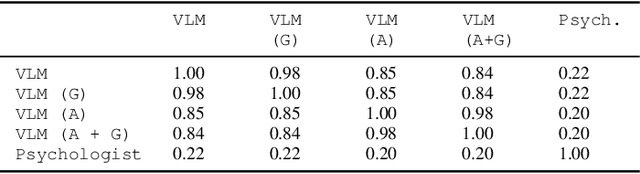

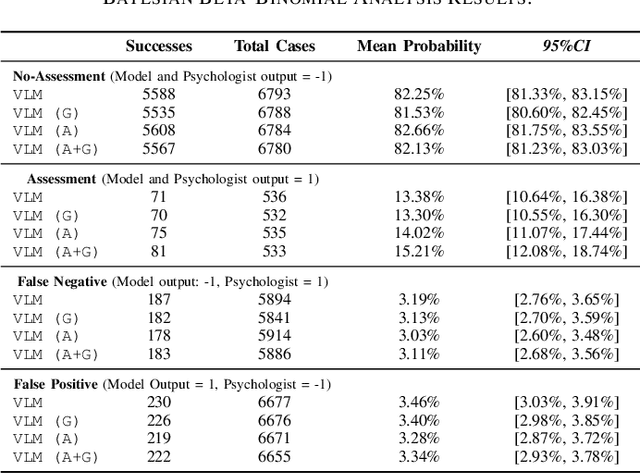

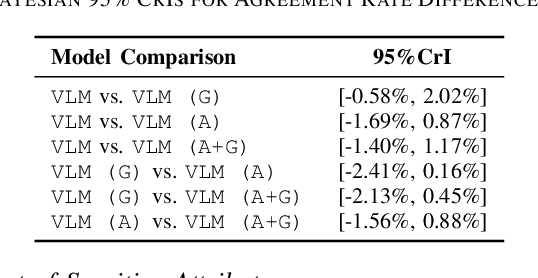

Robot-Led Vision Language Model Wellbeing Assessment of Children

Apr 03, 2025

Abstract: This study presents a novel robot-led approach to assessing children's mental wellbeing using a Vision Language Model (VLM). Inspired by the Child Apperception Test (CAT), the social robot NAO presented children with pictorial stimuli to elicit their verbal narratives of the images, which were then evaluated by a VLM in accordance with CAT assessment guidelines. The VLM's assessments were systematically compared to those provided by a trained psychologist. The results reveal that while the VLM demonstrates moderate reliability in identifying cases with no wellbeing concerns, its ability to accurately classify assessments with clinical concern remains limited. Moreover, although the model's performance was generally consistent when prompted with varying demographic factors such as age and gender, a significantly higher false positive rate was observed for girls, indicating potential sensitivity to the gender attribute. These findings highlight both the promise and the challenges of integrating VLMs into robot-led assessments of children's wellbeing.

BT-ACTION: A Test-Driven Approach for Modular Understanding of User Instruction Leveraging Behaviour Trees and LLMs

Apr 03, 2025

Abstract: Natural language instructions are often abstract and complex, requiring robots to execute multiple subtasks even for seemingly simple queries. For example, when a user asks a robot to prepare avocado toast, the task involves several sequential steps. Moreover, such instructions can be ambiguous or infeasible for the robot or may exceed the robot's existing knowledge. While Large Language Models (LLMs) offer strong language reasoning capabilities to handle these challenges, effectively integrating them into robotic systems remains a key challenge. To address this, we propose BT-ACTION, a test-driven approach that combines the modular structure of Behavior Trees (BT) with LLMs to generate coherent sequences of robot actions for following complex user instructions, specifically in the context of preparing recipes in a kitchen-assistance setting. We evaluated BT-ACTION in a comprehensive user study with 45 participants, comparing its performance to direct LLM prompting. Results demonstrate that the modular design of BT-ACTION helped the robot make fewer mistakes and increased user trust, and participants showed a significant preference for the robot leveraging BT-ACTION. The code is publicly available at https://github.com/1Eggbert7/BT_LLM.

Let's move on: Topic Change in Robot-Facilitated Group Discussions

Apr 02, 2025

Abstract: Robot-moderated group discussions have the potential to facilitate engaging and productive interactions among human participants. Previous work on topic management in conversational agents has predominantly focused on human engagement and topic personalization, with the agent having an active role in the discussion. Also, studies have shown the usefulness of including robots in groups, yet further exploration is still needed for robots to learn when to change the topic while facilitating discussions. Accordingly, our work investigates the suitability of machine-learning models and audiovisual non-verbal features in predicting appropriate topic changes. We utilized interactions between a robot moderator and human participants, which we annotated and used for extracting acoustic and body language-related features. We provide a detailed analysis of the performance of machine learning approaches using sequential and non-sequential data with different sets of features. The results indicate promising performance in classifying inappropriate topic changes, outperforming rule-based approaches. Additionally, acoustic features alone exhibited performance and robustness comparable to the complete set of multimodal features. Our annotated data is publicly available at https://github.com/ghadj/topic-change-robot-discussions-data-2024.

* 33rd IEEE International Conference on Robot and Human Interactive Communication (ROMAN)

Streaming Network for Continual Learning of Object Relocations under Household Context Drifts

Nov 08, 2024

Abstract: In most applications, robots need to adapt to new environments and be multi-functional without forgetting previous information. This requirement gains further importance in real-world scenarios where robots operate in coexistence with humans. In these complex environments, human actions inevitably lead to changes, requiring robots to adapt accordingly. To effectively address these dynamics, the concept of continual learning proves essential. It not only enables learning models to integrate new knowledge while preserving existing information but also facilitates the acquisition of insights from diverse contexts. This aspect is particularly relevant to the issue of context-switching, where robots must navigate and adapt to changing situational dynamics. We introduce a novel approach that tackles the problem of context drifts by designing a Streaming Graph Neural Network that incorporates both regularization and rehearsal techniques. Our Continual\_GTM model enables us to retain previous knowledge from different contexts, and it is more effective than traditional fine-tuning approaches. We evaluated the efficacy of Continual\_GTM in predicting human routines within household environments, leveraging spatio-temporal object dynamics across diverse scenarios.

Semantically-Driven Disambiguation for Human-Robot Interaction

Sep 25, 2024

Abstract: Ambiguities are common in human-robot interaction, especially when a robot follows user instructions in a large collocated space. For instance, when the user asks the robot to find an object in a home environment, the object might be in several places depending on its varying semantic properties (e.g., a bowl can be in the kitchen cabinet or on the dining room table, depending on whether it is clean/dirty, full/empty and the other objects around it). Previous works on object semantics have predicted such relationships using one-shot inferences, which are likely to fail for ambiguous or partially understood instructions. This paper focuses on this gap and suggests a semantically-driven disambiguation approach by utilizing follow-up clarifications to handle such uncertainties. To achieve this, we first obtain semantic knowledge embeddings, and then these embeddings are used to generate clarifying questions by following an iterative process. The evaluation of our method shows that our approach is model agnostic, i.e., applicable to different semantic embedding models, and follow-up clarifications improve the performance regardless of the embedding model. Additionally, our ablation studies show the significance of informative clarifications and iterative predictions in enhancing system accuracy.

GRACE: Generating Socially Appropriate Robot Actions Leveraging LLMs and Human Explanations

Sep 25, 2024

Abstract: When operating in human environments, robots need to handle complex tasks while both adhering to social norms and accommodating individual preferences. For instance, based on common-sense knowledge, a household robot can predict that it should avoid vacuuming during a social gathering, but it may still be uncertain whether it should vacuum before or after having guests. In such cases, integrating common-sense knowledge with human preferences, often conveyed through human explanations, is fundamental yet a challenge for existing systems. In this paper, we introduce GRACE, a novel approach addressing this while generating socially appropriate robot actions. GRACE leverages common-sense knowledge from Large Language Models (LLMs), and it integrates this knowledge with human explanations through a generative network architecture. The bidirectional structure of GRACE enables robots to refine and enhance LLM predictions by utilizing human explanations and makes robots capable of generating such explanations for human-specified actions. Our experimental evaluations show that integrating human explanations boosts GRACE's performance, where it outperforms several baselines and provides sensible explanations.

Leveraging Explainability for Comprehending Referring Expressions in the Real World

Jul 12, 2021

Abstract: For effective human-robot collaboration, it is crucial for robots to understand requests from users and ask reasonable follow-up questions when there are ambiguities. While comprehending the users' object descriptions in the requests, existing studies have focused on this challenge for limited object categories that can be detected or localized with existing object detection and localization modules. On the other hand, in the wild, it is impossible to limit the object categories that can be encountered during the interaction. To understand described objects and resolve ambiguities in the wild, for the first time, we propose a method that leverages explainability. Our method focuses on the active regions of a scene to find the described objects without putting the previous constraints on object categories and natural language instructions. We evaluate our method in varied real-world images and observe that the regions suggested by our method can help resolve ambiguities. When we compare our method with a state-of-the-art baseline, we show that our method performs better in scenes with ambiguous objects which cannot be recognized by existing object detectors.
