Mohamed Hussein

Speak the Art: A Direct Speech to Image Generation Framework

Dec 24, 2025

Abstract:Direct speech-to-image generation has recently shown promising results. However, compared to text-to-image generation, there is still a large gap to close. Current approaches use two stages to tackle this task: a speech encoding network and an image generative adversarial network (GAN). The speech encoding networks in these approaches produce embeddings that do not capture sufficient linguistic information to semantically represent the input speech. GANs suffer from issues such as non-convergence, mode collapse, and diminished gradients, which result in unstable model parameters, limited sample diversity, and ineffective generator learning, respectively. To address these weaknesses, we introduce a framework called Speak the Art (STA), which consists of a speech encoding network and a VQ-Diffusion network conditioned on speech embeddings. To improve speech embeddings, the speech encoding network is supervised by a large pre-trained image-text model during training. Replacing GANs with diffusion leads to more stable training and the generation of diverse images. Additionally, we investigate the feasibility of extending our framework to be multilingual. As a proof of concept, we trained our framework with two languages: English and Arabic. Finally, we show that our results surpass state-of-the-art models by a large margin.

Attack-Agnostic Adversarial Detection

Jun 01, 2022

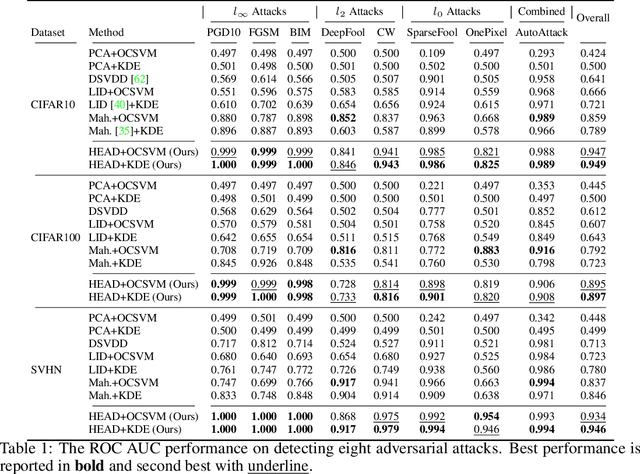

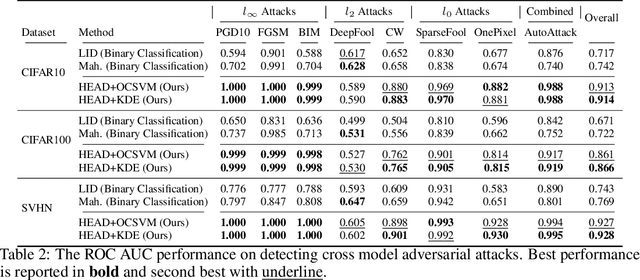

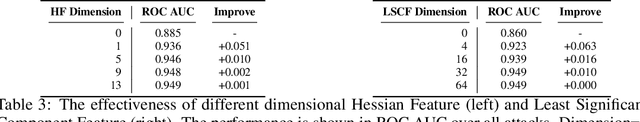

Abstract:The growing number of adversarial attacks in recent years gives attackers an advantage over defenders, as defenders must train detectors after knowing the types of attacks, and many models need to be maintained to ensure good performance in detecting any upcoming attacks. We propose a way to end the tug-of-war between attackers and defenders by treating adversarial attack detection as an anomaly detection problem so that the detector is agnostic to the attack. We quantify the statistical deviation caused by adversarial perturbations in two aspects. The Least Significant Component Feature (LSCF) quantifies the deviation of adversarial examples from the statistics of benign samples and Hessian Feature (HF) reflects how adversarial examples distort the landscape of the model's optima by measuring the local loss curvature. Empirical results show that our method can achieve an overall ROC AUC of 94.9%, 89.7%, and 94.6% on CIFAR10, CIFAR100, and SVHN, respectively, and has comparable performance to adversarial detectors trained with adversarial examples on most of the attacks.
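The abstract reports detector quality as ROC AUC. As a minimal sketch of how that metric can be computed from anomaly scores, the function below uses the pairwise-ranking definition of AUC. The function name `roc_auc` and the example scores are illustrative assumptions, not the paper's code or data, and the scores stand in for whatever a detector built on LSCF and HF would output.

```python
def roc_auc(benign_scores, adversarial_scores):
    """ROC AUC as the probability that a randomly chosen adversarial input
    receives a higher anomaly score than a randomly chosen benign input.
    Ties count as half a win."""
    if not benign_scores or not adversarial_scores:
        raise ValueError("both score lists must be non-empty")
    wins = 0.0
    for a in adversarial_scores:
        for b in benign_scores:
            if a > b:
                wins += 1.0
            elif a == b:
                wins += 0.5
    return wins / (len(adversarial_scores) * len(benign_scores))


# Hypothetical anomaly scores; higher means "more likely adversarial".
benign = [0.1, 0.3, 0.2, 0.4]
adversarial = [0.35, 0.8, 0.9]
print(f"ROC AUC: {roc_auc(benign, adversarial):.3f}")
```

Because the metric depends only on how the scores rank inputs, it needs no decision threshold, which suits a detector that is not tuned to any particular attack.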

Detection and Continual Learning of Novel Face Presentation Attacks

Aug 27, 2021

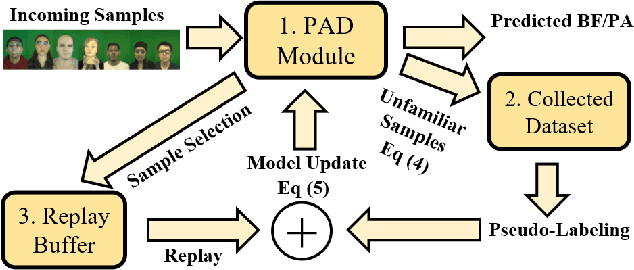

Abstract:Advances in deep learning, combined with the availability of large datasets, have led to impressive improvements in face presentation attack detection research. However, state-of-the-art face antispoofing systems are still vulnerable to novel types of attacks that are never seen during training. Moreover, even if such attacks are correctly detected, these systems lack the ability to adapt to newly encountered attacks. The post-training ability to continually detect new types of attacks and to self-adapt to identify these attack types, after the initial detection phase, is highly appealing. In this paper, we enable a deep neural network to detect anomalies in the observed input data points as potential new types of attacks by suppressing the confidence level of the network outside the training samples' distribution. We then use experience replay to update the model to incorporate knowledge about new types of attacks without forgetting the previously learned attack types. Experimental results are provided to demonstrate the effectiveness of the proposed method on two benchmark datasets as well as a newly introduced dataset which exhibits a large variety of attack types.
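The abstract does not say how the replay memory is maintained, so the following is only a sketch of the general experience-replay idea: keep a bounded sample of previously seen data and mix it into each update on a new attack type. The class name `ReplayBuffer`, the reservoir-sampling policy, and the sample labels are assumptions made for illustration.

```python
import random


class ReplayBuffer:
    """Fixed-size memory of past samples, filled by reservoir sampling so that
    every sample seen so far has the same chance of being retained."""

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.items = []
        self.seen = 0
        self.rng = random.Random(seed)

    def add(self, sample):
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(sample)
        else:
            # Replace a stored item with probability capacity / seen.
            j = self.rng.randrange(self.seen)
            if j < self.capacity:
                self.items[j] = sample

    def mixed_batch(self, new_samples, replay_count):
        """Return the new samples followed by up to replay_count stored ones."""
        k = min(replay_count, len(self.items))
        return list(new_samples) + self.rng.sample(self.items, k)


# Hypothetical usage: remember old attack samples, then build an update batch.
buffer = ReplayBuffer(capacity=100)
for i in range(1000):
    buffer.add(("known_attack", i))
batch = buffer.mixed_batch([("novel_attack", 0), ("novel_attack", 1)], replay_count=6)
print(len(batch))
```

Training on such mixed batches is one common way to limit forgetting of earlier attack types while the model adapts to a new one.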

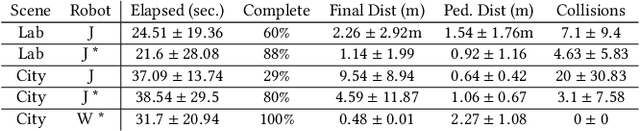

SEAN-EP: A Platform for Collecting Human Feedback for Social Robot Navigation at Scale

Dec 22, 2020

Abstract:We introduce the SEAN Experimental Platform (SEAN-EP), an open-source system that allows roboticists to gather human feedback for social robot navigation at scale using online interactive simulations. Through SEAN-EP, remote users can control the motion of a human avatar via their web browser and interact with a virtual robot controlled through the Robot Operating System. Heavy computation in SEAN-EP is delegated to cloud servers such that users do not need specialized hardware to take part in the simulations. We validated SEAN-EP and its usability through an online survey, and compared the data collected from this survey with a similar video survey. Our results suggest that human perceptions of robots may differ based on whether they interact with the robots in simulation or observe them in videos. Also, our study suggests that people may perceive the surveys with interactive simulations as less mentally demanding than video surveys.

SEAN: Social Environment for Autonomous Navigation

Sep 09, 2020

Abstract:Social navigation research is performed on a variety of robotic platforms, scenarios, and environments. Making comparisons between navigation algorithms is challenging because of the effort involved in building these systems and the diversity of platforms used by the community; nonetheless, evaluation is critical to understanding progress in the field. In a step towards reproducible evaluation of social navigation algorithms, we propose the Social Environment for Autonomous Navigation (SEAN). SEAN is a high visual fidelity, open source, and extensible social navigation simulation platform which includes a toolkit for evaluation of navigation algorithms. We demonstrate SEAN and its evaluation toolkit in two environments with dynamic pedestrians and using two different robots.

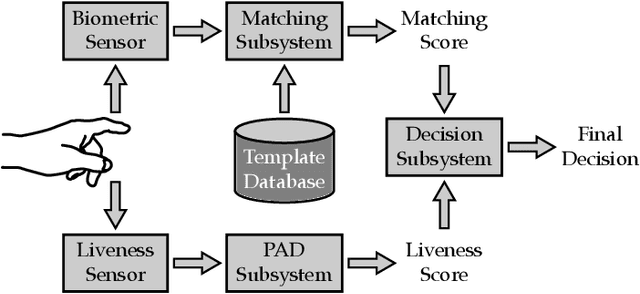

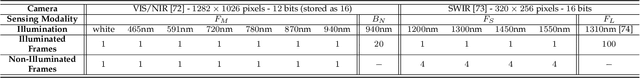

Multi-Modal Fingerprint Presentation Attack Detection: Evaluation On A New Dataset

Jun 16, 2020

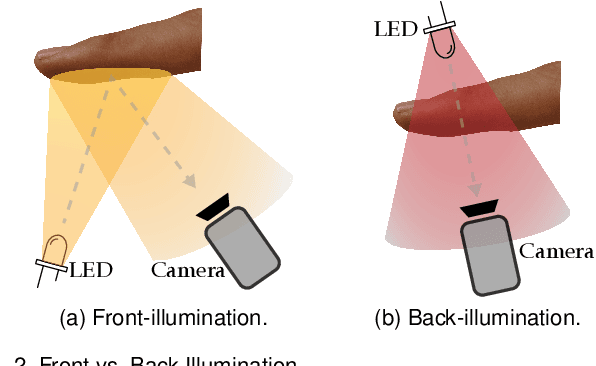

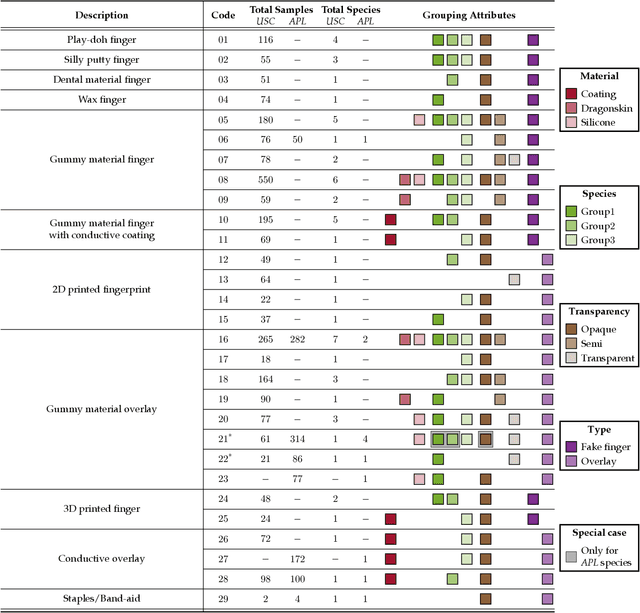

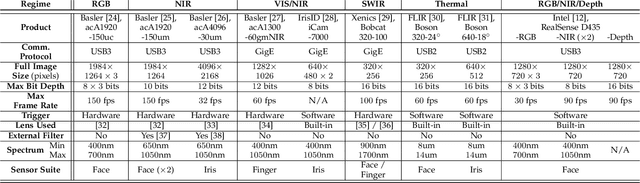

Abstract:Fingerprint presentation attack detection is becoming an increasingly challenging problem due to the continuous advancement of attack preparation techniques, which generate realistic-looking fake fingerprint presentations. In this work, rather than relying on legacy fingerprint images, which are widely used in the community, we study the usefulness of multiple recently introduced sensing modalities. Our study covers front-illumination imaging using short-wave-infrared, near-infrared, and laser illumination; and back-illumination imaging using near-infrared light. Toward studying the effectiveness of each of these unconventional sensing modalities and their fusion for liveness detection, we conducted a comprehensive analysis using a fully convolutional deep neural network framework. Our evaluation compares different combinations of the new sensing modalities to legacy data from one of our collections as well as the public LivDet2015 dataset, showing the superiority of the new sensing modalities in most cases. It also covers the cases of known and unknown attacks and the cases of intra-dataset and inter-dataset evaluations. Our results indicate that the power of our approach stems from the nature of the captured data rather than the employed classification framework, which justifies the extra cost for hardware-based (or hybrid) solutions. We plan to publicly release one of our dataset collections.

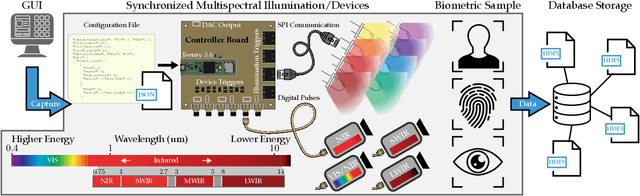

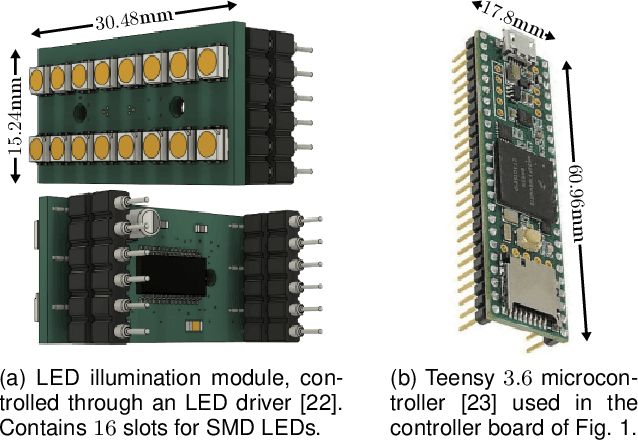

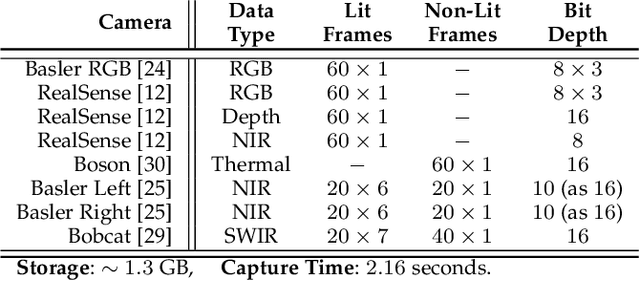

Multispectral Biometrics System Framework: Application to Presentation Attack Detection

Jun 12, 2020

Abstract:In this work, we present a general framework for building a biometrics system capable of capturing multispectral data from a series of sensors synchronized with active illumination sources. The framework unifies the system design for different biometric modalities and its realization on face, finger and iris data is described in detail. To the best of our knowledge, the presented design is the first to employ such a diverse set of electromagnetic spectrum bands, ranging from visible to long-wave-infrared wavelengths, and is capable of acquiring large volumes of data in seconds. Having performed a series of data collections, we run a comprehensive analysis on the captured data using a deep-learning classifier for presentation attack detection. Our study follows a data-centric approach attempting to highlight the strengths and weaknesses of each spectral band at distinguishing live from fake samples.

On the Effectiveness of Laser Speckle Contrast Imaging and Deep Neural Networks for Detecting Known and Unknown Fingerprint Presentation Attacks

Jun 06, 2019

Abstract:Fingerprint presentation attack detection (FPAD) is becoming an increasingly challenging problem due to the continuous advancement of attack techniques, which generate 'realistic-looking' fake fingerprint presentations. Recently, laser speckle contrast imaging (LSCI) has been introduced as a new sensing modality for FPAD. LSCI has the interesting characteristic of capturing the blood flow under the skin surface. Toward studying the importance and effectiveness of LSCI for FPAD, we conduct a comprehensive study using different patch-based deep neural network architectures. Our studied architectures include 2D and 3D convolutional networks as well as a recurrent network using long short-term memory (LSTM) units. The study demonstrates that strong FPAD performance can be achieved using LSCI. We evaluate the different models over a new large dataset. The dataset consists of 3743 bona fide samples, collected from 335 unique subjects, and 218 presentation attack samples, including six different types of attacks. To examine the effect of changing the training and testing sets, we conduct a 3-fold cross-validation evaluation. To examine the effect of the presence of an unseen attack, we apply a leave-one-attack-out strategy. The FPAD classification results of the networks, which are separately optimized and tuned for the temporal and spatial patch sizes, indicate that the best performance is achieved by LSTM.
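A leave-one-attack-out protocol can be sketched as follows: for each attack type, train without any sample of that type and test on it. The abstract does not describe how bona fide samples are divided, so the fixed "every fifth sample goes to test" rule, the function name, and the attack-type labels below are all assumptions for illustration.

```python
def leave_one_attack_out(samples, bona_fide_label="bona_fide", test_every=5):
    """Yield (held_out_type, train, test) for each attack type.

    samples is a list of (label, sample_id) pairs. The held-out attack type
    appears only in the test set; bona fide samples are divided by a fixed
    rule (an assumption) so that both sets contain live presentations.
    """
    bona_fide = [s for s in samples if s[0] == bona_fide_label]
    attacks = [s for s in samples if s[0] != bona_fide_label]
    test_bf = bona_fide[::test_every]
    train_bf = [s for i, s in enumerate(bona_fide) if i % test_every != 0]
    for held_out in sorted({label for label, _ in attacks}):
        train = train_bf + [s for s in attacks if s[0] != held_out]
        test = test_bf + [s for s in attacks if s[0] == held_out]
        yield held_out, train, test


# Hypothetical toy dataset with three made-up attack types.
data = [("bona_fide", i) for i in range(10)]
data += [("silicone", 0), ("silicone", 1), ("gelatin", 0), ("paper", 0)]
for held_out, train, test in leave_one_attack_out(data):
    print(held_out, len(train), len(test))
```

Each split measures how a model behaves on an attack type it has never seen, which is the "unknown attack" condition the abstract refers to.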
