Maryam Bandari

A System for Imitation Learning of Contact-Rich Bimanual Manipulation Policies

Aug 01, 2022

Abstract: In this paper, we discuss a framework for teaching bimanual manipulation tasks by imitation. To this end, we present a system and algorithms for learning compliant and contact-rich robot behavior from human demonstrations. The presented system combines insights from admittance control and machine learning to extract control policies that can (a) recover from and adapt to a variety of disturbances in time and space, while also (b) effectively leverage physical contact with the environment. We demonstrate the effectiveness of our approach using a real-world insertion task involving multiple simultaneous contacts between a manipulated object and insertion pegs. We also investigate efficient means of collecting training data for such bimanual settings. For this purpose, we conduct a human-subject study and analyze the effort and mental demand reported by the users. Our experiments show that, although teleoperated demonstrations are harder to provide, the additional force/torque information they contain is crucial for phase estimation and task success. Ultimately, force/torque data substantially improves manipulation robustness, resulting in a 90% success rate in a multipoint insertion task. Code and videos can be found at https://bimanualmanipulation.com/
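Admittance control, as referenced above, maps a measured external force to motion through virtual mass-spring-damper dynamics, M·a + D·v + K·(x − x_ref) = f_ext. The following is a minimal 1-D sketch of that general control law, assuming hypothetical gains (M = 1, D = 20, K = 100) and a semi-implicit Euler integrator; it is not the authors' system, which combines such a controller with learned policies.

```python
from dataclasses import dataclass


@dataclass
class Admittance1D:
    """Hypothetical 1-D admittance law: M*a + D*v + K*(x - x_ref) = f_ext."""
    mass: float = 1.0         # virtual inertia M (assumed value)
    damping: float = 20.0     # virtual damping D (assumed; critical for M=1, K=100)
    stiffness: float = 100.0  # virtual stiffness K (assumed value)
    x: float = 0.0            # commanded position
    v: float = 0.0            # commanded velocity

    def step(self, x_ref: float, f_ext: float, dt: float) -> float:
        # Solve the virtual dynamics for the commanded acceleration.
        a = (f_ext - self.damping * self.v
             - self.stiffness * (self.x - x_ref)) / self.mass
        # Semi-implicit Euler: update velocity first, then position.
        self.v += a * dt
        self.x += self.v * dt
        return self.x


def simulate(force: float, steps: int = 5000, dt: float = 1e-3) -> float:
    """Apply a constant external force for steps*dt seconds; return final position."""
    ctrl = Admittance1D()
    x = ctrl.x
    for _ in range(steps):
        x = ctrl.step(x_ref=0.0, f_ext=force, dt=dt)
    return x


if __name__ == "__main__":
    print(f"{simulate(5.0):.4f}")
```

With these gains the system is critically damped, so a constant push of 5 force units settles at the offset f/K = 0.05 instead of being rejected stiffly; this yielding behavior is what makes contact-rich interaction tolerable.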

Detection and Physical Interaction with Deformable Linear Objects

May 17, 2022

Abstract: Deformable linear objects (e.g., cables, ropes, and threads) commonly appear in our everyday lives. However, the perception of these objects and the study of physical interaction with them are still growing areas. There are already successful methods to model and track deformable linear objects. However, few methods can automatically extract the initial conditions these trackers require in non-trivial situations, and such methods have been introduced to the community only recently. On the other hand, while physical interaction with these objects has been studied with ground manipulators, there have not been any studies on physical interaction with and manipulation of deformable linear objects using aerial robots. This workshop contribution describes our recent work on detecting deformable linear objects, which uses the segmentation output of existing methods to automatically provide the initialization required by the tracking methods. It handles crossings, fills gaps and occlusions in the segmentation, and outputs a model suitable for physical interaction and simulation. We then present our work on using the method for tasks such as routing and manipulation with ground and aerial robots. Finally, we discuss our feasibility analysis on extending physical interaction with these objects to aerial manipulation applications.

Efficient Spatial Representation and Routing of Deformable One-Dimensional Objects for Manipulation

Feb 13, 2022

Abstract: With the field of rigid-body robotics having matured over the last fifty years, routing, planning, and manipulation of deformable objects have recently emerged as a relatively unexplored research area in fields ranging from surgical robotics to industrial assembly and construction. Routing approaches for deformable objects that rely on learned implicit spatial representations (e.g., Learning-from-Demonstration methods) are vulnerable to changes in the environment and the specific setup. On the other hand, algorithms that entirely separate the spatial representation of the deformable object from routing and manipulation, often using a representation independent of planning, result in slow planning in high-dimensional spaces. This paper proposes a novel approach to spatial representation combined with route planning that allows efficient routing of deformable one-dimensional objects (e.g., wires, cables, ropes, threads). The spatial representation is based on the geometrical decomposition of the space into convex subspaces, which allows an efficient encoding of the configuration. Given such an encoding, the routing problem can be solved using a dynamic programming matching method with quadratic time and space complexity. The proposed method couples routing with this efficient configuration encoding to improve planning time. Our tests and experiments show that the method correctly computes the next manipulation action in sub-millisecond time and accomplishes various routing and manipulation tasks.
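The abstract does not specify the matching procedure, so the following uses a generic stand-in: if a configuration is encoded as the ordered sequence of convex regions the object passes through, a classic edit-distance alignment between the current and target sequences runs in O(n·m) time and space, matching the stated complexity. The region labels ("R1", "R2", …) and the edit-to-action interpretation are hypothetical illustrations, not the paper's method.

```python
from typing import List, Sequence, Tuple


def align(current: Sequence[str], target: Sequence[str]) -> Tuple[int, List[str]]:
    """Edit-distance alignment of two region sequences (hypothetical encoding).

    Returns the minimum number of edits and one optimal edit script.
    """
    n, m = len(current), len(target)
    # cost[i][j] = min edits turning current[:i] into target[:j]; O(n*m) space.
    cost = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(n + 1):
        cost[i][0] = i
    for j in range(m + 1):
        cost[0][j] = j
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            sub = 0 if current[i - 1] == target[j - 1] else 1
            cost[i][j] = min(cost[i - 1][j] + 1,        # drop a region from the route
                             cost[i][j - 1] + 1,        # add a region to the route
                             cost[i - 1][j - 1] + sub)  # keep or swap a region

    # Backtrack from the bottom-right cell to recover one optimal edit script.
    ops: List[str] = []
    i, j = n, m
    while i > 0 or j > 0:
        if i > 0 and j > 0 and cost[i][j] == cost[i - 1][j - 1] + (current[i - 1] != target[j - 1]):
            if current[i - 1] != target[j - 1]:
                ops.append(f"replace {current[i - 1]} -> {target[j - 1]}")
            i, j = i - 1, j - 1
        elif i > 0 and cost[i][j] == cost[i - 1][j] + 1:
            ops.append(f"remove {current[i - 1]}")
            i -= 1
        else:
            ops.append(f"insert {target[j - 1]}")
            j -= 1
    ops.reverse()
    return cost[n][m], ops


if __name__ == "__main__":
    print(align(["R1", "R2", "R4"], ["R1", "R3", "R4"]))
```

In a planner built this way, the first entry of the edit script could be read as the next manipulation action; the two nested loops over the table are what give the quadratic cost.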

Deformable One-Dimensional Object Detection for Routing and Manipulation

Jan 18, 2022

Abstract: Many methods exist to model and track deformable one-dimensional objects (e.g., cables, ropes, and threads) across a stream of video frames. However, these methods depend on the existence of some initial conditions. To the best of our knowledge, detection methods that can extract those initial conditions in non-trivial situations have hardly been addressed. The lack of detection methods limits the use of tracking methods in real-world applications and is a bottleneck for fully autonomous applications that work with these objects. This paper proposes an approach for detecting deformable one-dimensional objects that can handle crossings and occlusions. It can be used for tasks such as routing and manipulation and automatically provides the initialization required by the tracking methods. Our algorithm takes an image containing a deformable object and outputs a chain of fixed-length cylindrical segments connected by passive spherical joints. The chain follows the natural behavior of the deformable object and fills the gaps and occlusions in the original image. Our tests and experiments have shown that the method can correctly detect deformable one-dimensional objects in various complex conditions.
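To make the output model concrete, the following sketch fits joints of a chain of fixed-length links to an already ordered 2-D centerline, assuming such a polyline is available (the paper's handling of crossings, gaps, and occlusions is not reproduced). Each new joint is the first point further along the polyline at exactly one link length from the previous joint, found by a circle/segment intersection; `fit_chain` and its inputs are hypothetical names.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def fit_chain(polyline: List[Point], link_length: float) -> List[Point]:
    """Place joints so consecutive joints are exactly link_length apart.

    Walks the (assumed ordered) polyline; any tail shorter than one link is dropped.
    """
    joints = [polyline[0]]
    p = polyline[0]
    seg, t_start = 0, 0.0  # current polyline segment and search start on it
    while seg < len(polyline) - 1:
        a, b = polyline[seg], polyline[seg + 1]
        dx, dy = b[0] - a[0], b[1] - a[1]
        fx, fy = a[0] - p[0], a[1] - p[1]
        # Solve |a + t*(b - a) - p|^2 = L^2, a quadratic in t.
        qa = dx * dx + dy * dy
        qb = 2.0 * (fx * dx + fy * dy)
        qc = fx * fx + fy * fy - link_length * link_length
        disc = qb * qb - 4.0 * qa * qc
        if qa > 0.0 and disc >= 0.0:
            # Larger root = where the path leaves the circle around p, moving forward.
            t = (-qb + math.sqrt(disc)) / (2.0 * qa)
            if t_start <= t <= 1.0:
                p = (a[0] + t * dx, a[1] + t * dy)
                joints.append(p)
                t_start = t
                continue
        seg, t_start = seg + 1, 0.0  # exit point lies on a later segment
    return joints


if __name__ == "__main__":
    print(fit_chain([(0.0, 0.0), (3.0, 0.0), (3.0, 3.0)], 1.0))
```

Using the chord (circle) intersection rather than arc length keeps every link the same rigid length, which is what a chain of cylinders joined by spherical joints requires.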

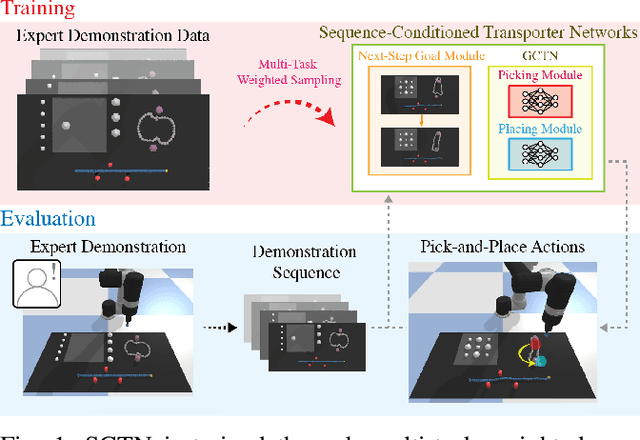

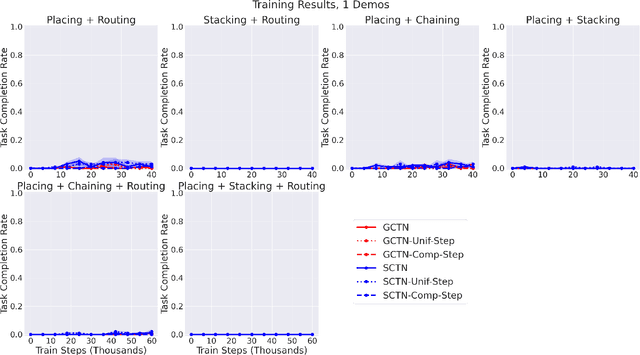

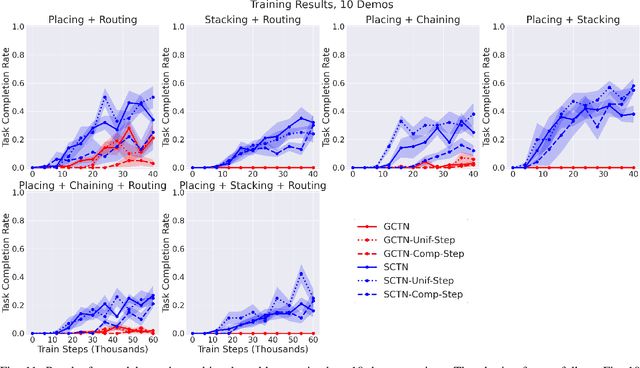

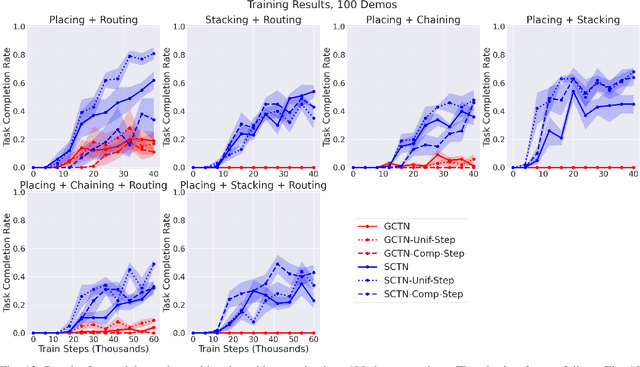

Multi-Task Learning with Sequence-Conditioned Transporter Networks

Sep 15, 2021

Abstract: Enabling robots to solve multiple manipulation tasks has a wide range of industrial applications. While learning-based approaches enjoy flexibility and generalizability, scaling these approaches to solve such compositional tasks remains a challenge. In this work, we aim to solve multi-task learning through the lens of sequence-conditioning and weighted sampling. First, we propose a new benchmark suite specifically aimed at compositional tasks, MultiRavens, which allows defining custom task combinations through task modules that are inspired by industrial tasks and exemplify the difficulties faced by vision-based learning and planning methods. Second, we propose a vision-based end-to-end system architecture, Sequence-Conditioned Transporter Networks, which augments Goal-Conditioned Transporter Networks with sequence-conditioning and weighted sampling and can efficiently learn to solve multi-task long-horizon problems. Our analysis suggests not only that the new framework significantly improves pick-and-place performance on 10 novel multi-task benchmark problems, but also that multi-task learning with weighted sampling can vastly improve learning and agent performance on individual tasks.

Neural Collision Clearance Estimator for Fast Robot Motion Planning

Oct 14, 2019

Abstract: Collision checking is a well-known bottleneck in sampling-based motion planning due to its computational expense and the large number of checks required. To alleviate this bottleneck, we present a fast neural network collision checking heuristic, ClearanceNet, and incorporate it within a planning algorithm, ClearanceNet-RRT (CN-RRT). ClearanceNet takes as input a robot pose and the locations of all obstacles in the workspace and learns to predict the clearance, i.e., the distance to the nearest obstacle. CN-RRT then efficiently computes a motion plan by leveraging three key features of ClearanceNet. First, as neural network inference is massively parallel, CN-RRT explores the space via a parallel RRT, which expands nodes in parallel, allowing for thousands of collision checks at once. Second, CN-RRT adaptively relaxes its clearance threshold for more difficult problems. Third, to repair errors, CN-RRT shifts states towards higher clearance through a gradient-based approach that uses the analytic gradient of ClearanceNet. Once a path is found, any errors are repaired via RRT over the misclassified sections, thus maintaining the theoretical guarantees of sampling-based motion planning. We evaluate the collision checking speed, planning speed, and motion plan efficiency in configuration spaces with up to 30 degrees of freedom. The collision checking achieves speedups of more than two orders of magnitude over traditional collision detection methods. Sampling-based planning over multiple robotic arms in new environment configurations achieves speedups of up to 51% over a baseline, with paths up to 25% more efficient. Experiments on a physical Fetch robot reaching into shelves in a cluttered environment confirm the feasibility of this method on real robots.
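The gradient-based repair step described above can be illustrated without a neural network. The sketch below swaps ClearanceNet for an exact analytic clearance of a 2-D point among hypothetical circular obstacles, whose gradient is the unit vector pointing away from the nearest obstacle; the state is then nudged along that gradient until it clears a threshold. The obstacle model, step size, and function names are assumptions for illustration only.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Circle = Tuple[float, float, float]  # hypothetical obstacle model: (cx, cy, radius)


def clearance(q: Point, obstacles: List[Circle]) -> Tuple[float, Point]:
    """Distance from q to the nearest obstacle surface, and its gradient w.r.t. q.

    Stand-in for a learned clearance predictor; gradient of the active (nearest) term.
    """
    best, grad = math.inf, (0.0, 0.0)
    for cx, cy, r in obstacles:
        dx, dy = q[0] - cx, q[1] - cy
        dist = math.hypot(dx, dy)
        if dist - r < best:
            best = dist - r
            grad = (dx / dist, dy / dist) if dist > 0.0 else (1.0, 0.0)
    return best, grad


def repair(q: Point, obstacles: List[Circle], threshold: float,
           step: float = 0.05, max_iters: int = 200) -> Point:
    """Gradient ascent on clearance until it meets the threshold (or iterations run out)."""
    for _ in range(max_iters):
        c, (gx, gy) = clearance(q, obstacles)
        if c >= threshold:
            break
        q = (q[0] + step * gx, q[1] + step * gy)
    return q


if __name__ == "__main__":
    fixed = repair((1.1, 0.0), [(0.0, 0.0, 1.0)], threshold=0.5)
    print(fixed, clearance(fixed, [(0.0, 0.0, 1.0)])[0])
```

In CN-RRT the clearance and its gradient would come from the network (the abstract notes the gradient is analytic), and any states that remain misclassified are handled by the RRT-based repair over those path sections.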
