M. Khalid Jawed

Py-DiSMech: A Scalable and Efficient Framework for Discrete Differential Geometry-Based Modeling and Control of Soft Robots

Dec 20, 2025

Abstract: High-fidelity simulation has become essential to the design and control of soft robots, where large geometric deformations and complex contact interactions challenge conventional modeling tools. Recent advances in the field demand simulation frameworks that combine physical accuracy, computational scalability, and seamless integration with modern control and optimization pipelines. In this work, we present Py-DiSMech, a Python-based, open-source simulation framework for modeling and control of soft robotic structures grounded in the principles of Discrete Differential Geometry (DDG). By discretizing geometric quantities such as curvature and strain directly on meshes, Py-DiSMech captures the nonlinear deformation of rods, shells, and hybrid structures with high fidelity and reduced computational cost. The framework introduces (i) a fully vectorized NumPy implementation achieving order-of-magnitude speed-ups over existing geometry-based simulators; (ii) a penalty-energy-based fully implicit contact model that supports rod-rod, rod-shell, and shell-shell interactions; (iii) a natural-strain-based feedback-control module featuring a proportional-integral (PI) controller for shape regulation and trajectory tracking; and (iv) a modular, object-oriented software design enabling user-defined elastic energies, actuation schemes, and integration with machine-learning libraries. Benchmark comparisons demonstrate that Py-DiSMech substantially outperforms the state-of-the-art simulator Elastica in computational efficiency while maintaining physical accuracy. Together, these features establish Py-DiSMech as a scalable, extensible platform for simulation-driven design, control validation, and sim-to-real research in soft robotics.
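As a rough illustration of the natural-strain PI feedback idea named in item (iii), the sketch below runs a discrete-time PI loop that commands a vector of natural curvatures so that the measured curvatures reach a target. It is not Py-DiSMech's API: the `PIController` class, the gains, the disturbance `offset`, and the first-order "plant" standing in for the elastic structure are all assumptions made for this example.

```python
import numpy as np


class PIController:
    """Discrete-time PI controller acting elementwise on a vector of strains.

    Hypothetical helper for illustration; not part of Py-DiSMech.
    """

    def __init__(self, kp: float, ki: float, dt: float, n: int):
        self.kp = kp
        self.ki = ki
        self.dt = dt
        self.integral = np.zeros(n)  # accumulated error per element

    def update(self, target: np.ndarray, measured: np.ndarray) -> np.ndarray:
        error = target - measured
        self.integral += error * self.dt
        return self.kp * error + self.ki * self.integral


def simulate(steps: int = 2000) -> np.ndarray:
    """Toy plant: measured curvature relaxes toward (natural curvature - offset)."""
    dt = 0.01
    tau = 0.1  # assumed relaxation time of the toy plant
    target = np.array([0.5, 1.0, 1.5])
    offset = np.array([0.2, 0.1, -0.3])  # made-up steady disturbance
    measured = np.zeros(3)
    ctrl = PIController(kp=2.0, ki=5.0, dt=dt, n=3)
    for _ in range(steps):
        natural = ctrl.update(target, measured)  # commanded natural curvature
        measured += dt / tau * (natural - offset - measured)
    return measured


if __name__ == "__main__":
    print(simulate())
```

The integral term is what removes the steady-state error caused by the constant disturbance; a purely proportional loop would settle short of the target in this toy setup.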

DePT3R: Joint Dense Point Tracking and 3D Reconstruction of Dynamic Scenes in a Single Forward Pass

Dec 15, 2025

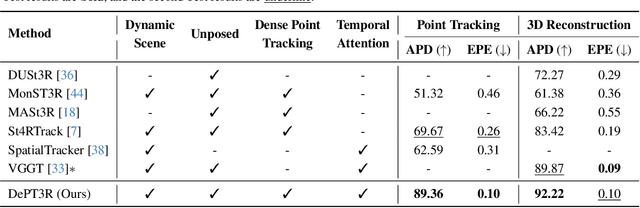

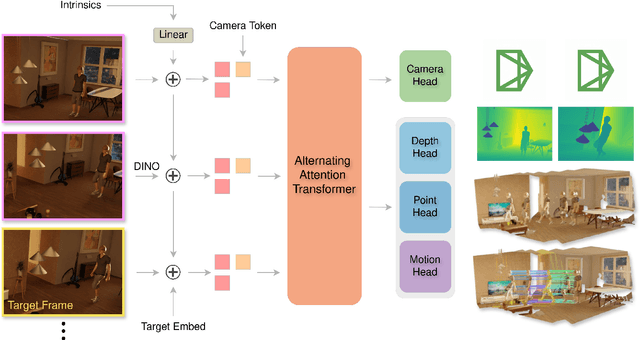

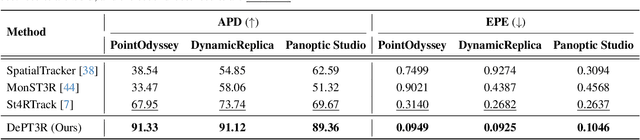

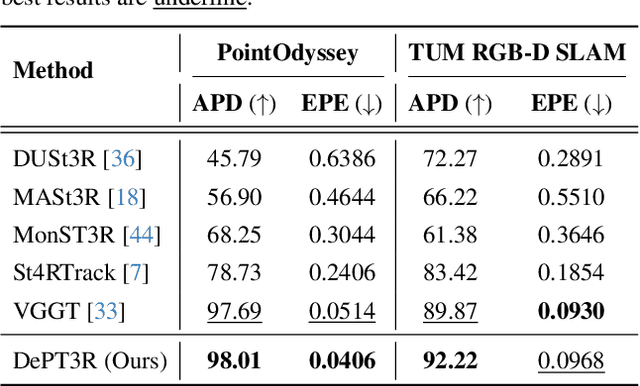

Abstract: Current methods for dense 3D point tracking in dynamic scenes typically rely on pairwise processing, require known camera poses, or assume a temporal ordering of the input frames, constraining their flexibility and applicability. Additionally, recent advances have successfully enabled efficient 3D reconstruction from large-scale, unposed image collections, underscoring opportunities for unified approaches to dynamic scene understanding. Motivated by this, we propose DePT3R, a novel framework that simultaneously performs dense point tracking and 3D reconstruction of dynamic scenes from multiple images in a single forward pass. This multi-task learning is achieved by extracting deep spatio-temporal features with a powerful backbone and regressing pixel-wise maps with dense prediction heads. Crucially, DePT3R operates without requiring camera poses, substantially enhancing its adaptability and efficiency, which is especially important in dynamic environments with rapid changes. We validate DePT3R on several challenging benchmarks involving dynamic scenes, demonstrating strong performance and significant improvements in memory efficiency over existing state-of-the-art methods. Data and code are available via the open repository: https://github.com/StructuresComp/DePT3R

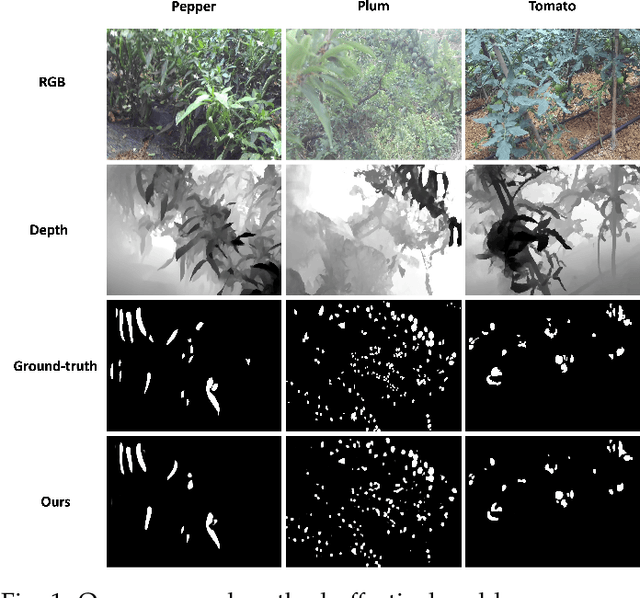

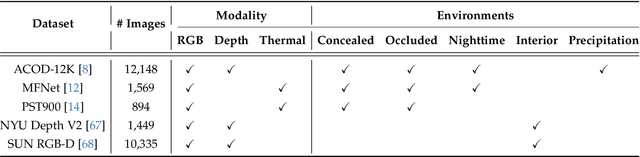

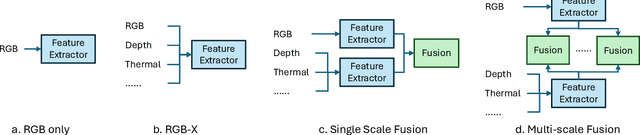

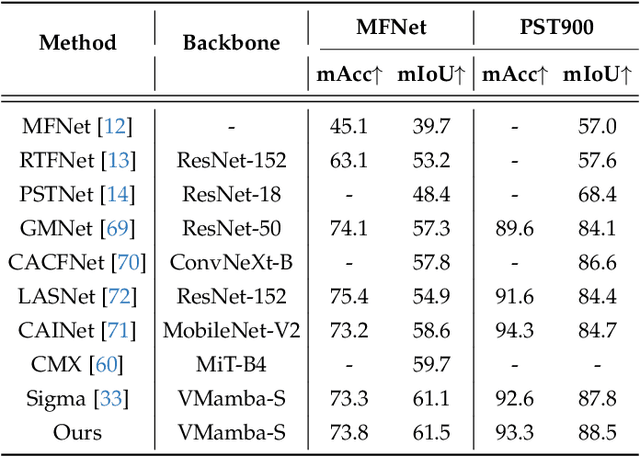

HiddenObject: Modality-Agnostic Fusion for Multimodal Hidden Object Detection

Aug 28, 2025

Abstract: Detecting hidden or partially concealed objects remains a fundamental challenge in multimodal environments, where factors like occlusion, camouflage, and lighting variations significantly hinder performance. Traditional RGB-based detection methods often fail under such adverse conditions, motivating the need for more robust, modality-agnostic approaches. In this work, we present HiddenObject, a fusion framework that integrates RGB, thermal, and depth data using a Mamba-based fusion mechanism. Our method captures complementary signals across modalities, enabling enhanced detection of obscured or camouflaged targets. Specifically, the proposed approach identifies modality-specific features and fuses them in a unified representation that generalizes well across challenging scenarios. We validate HiddenObject across multiple benchmark datasets, demonstrating state-of-the-art or competitive performance compared to existing methods. These results highlight the efficacy of our fusion design and expose key limitations in current unimodal and naïve fusion strategies. More broadly, our findings suggest that Mamba-based fusion architectures can significantly advance the field of multimodal object detection, especially under visually degraded or complex conditions.

AgriChrono: A Multi-modal Dataset Capturing Crop Growth and Lighting Variability with a Field Robot

Aug 26, 2025

Abstract: Existing datasets for precision agriculture have primarily been collected in static or controlled environments such as indoor labs or greenhouses, often with limited sensor diversity and restricted temporal span. These conditions fail to reflect the dynamic nature of real farmland, including illumination changes, crop growth variation, and natural disturbances. As a result, models trained on such data often lack robustness and generalization when applied to real-world field scenarios. In this paper, we present AgriChrono, a novel robotic data collection platform and multi-modal dataset designed to capture the dynamic conditions of real-world agricultural environments. Our platform integrates multiple sensors and enables remote, time-synchronized acquisition of RGB, Depth, LiDAR, and IMU data, supporting efficient and repeatable long-term data collection across varying illumination and crop growth stages. We benchmark a range of state-of-the-art 3D reconstruction models on the AgriChrono dataset, highlighting the difficulty of reconstruction in real-world field environments and demonstrating its value as a research asset for advancing model generalization under dynamic conditions. The code and dataset are publicly available at: https://github.com/StructuresComp/agri-chrono

Reconstruction Using the Invisible: Intuition from NIR and Metadata for Enhanced 3D Gaussian Splatting

Aug 20, 2025

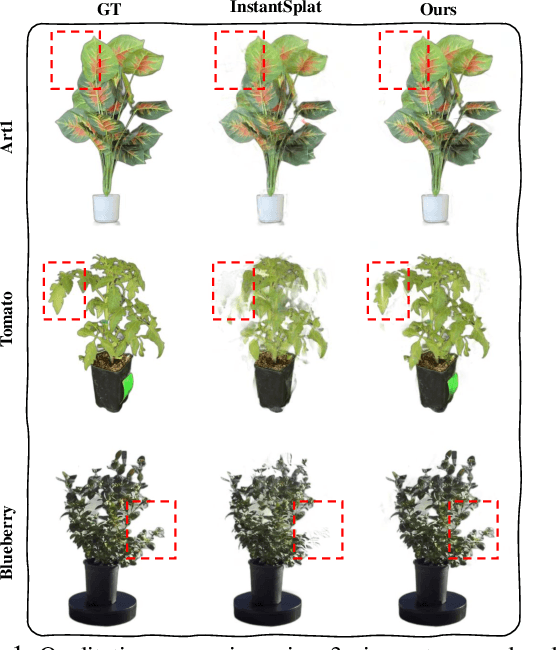

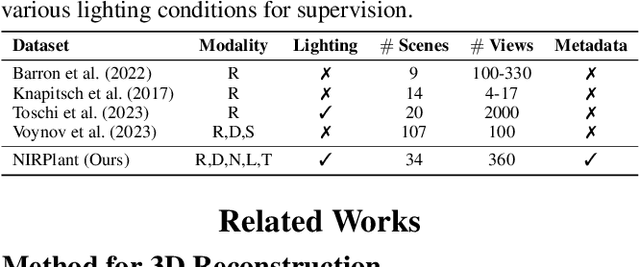

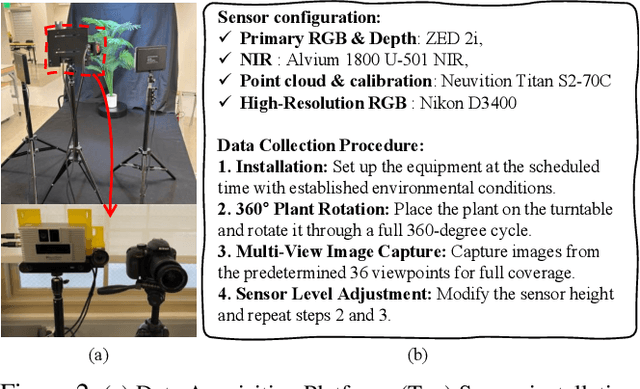

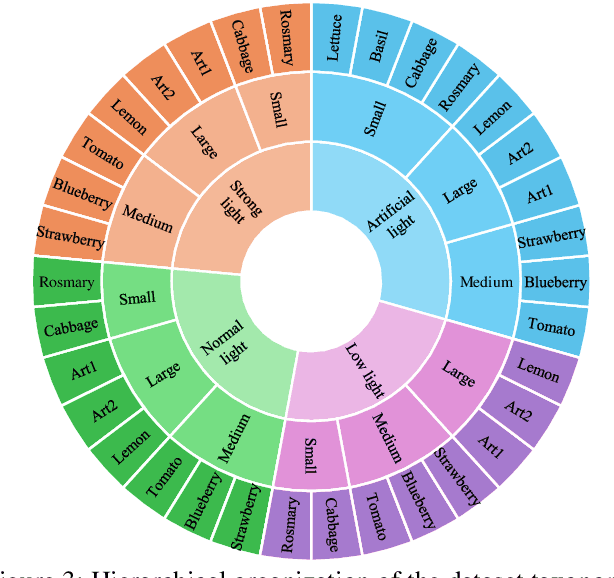

Abstract: While 3D Gaussian Splatting (3DGS) has rapidly advanced, its application in agriculture remains underexplored. Agricultural scenes present unique challenges for 3D reconstruction methods, particularly due to uneven illumination, occlusions, and a limited field of view. To address these limitations, we introduce NIRPlant, a novel multimodal dataset encompassing Near-Infrared (NIR) imagery, RGB imagery, textual metadata, Depth, and LiDAR data collected under varied indoor and outdoor lighting conditions. By integrating NIR data, our approach enhances robustness and provides crucial botanical insights that extend beyond the visible spectrum. Additionally, we leverage text-based metadata derived from vegetation indices, such as NDVI, NDWI, and the chlorophyll index, which significantly enriches the contextual understanding of complex agricultural environments. To fully exploit these modalities, we propose NIRSplat, an effective multimodal Gaussian splatting architecture employing a cross-attention mechanism combined with 3D point-based positional encoding, providing robust geometric priors. Comprehensive experiments demonstrate that NIRSplat outperforms existing landmark methods, including 3DGS, CoR-GS, and InstantSplat, highlighting its effectiveness in challenging agricultural scenarios. The code and dataset are publicly available at: https://github.com/StructuresComp/3D-Reconstruction-NIR
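For readers unfamiliar with the vegetation indices mentioned above, the sketch below computes NDVI with its standard definition, (NIR - Red) / (NIR + Red), from per-pixel reflectance arrays. The NDWI variant shown is the green/NIR form, (Green - NIR) / (Green + NIR); the abstract does not say which NDWI definition the authors use, so that choice, along with the function names and the convention of returning 0 where the denominator is 0, is an assumption of this example.

```python
import numpy as np


def normalized_difference(a, b):
    """Return (a - b) / (a + b) elementwise, with 0 where a + b == 0."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    denom = a + b
    out = np.zeros_like(denom)
    # Divide only where the denominator is non-zero; other entries stay 0.
    np.divide(a - b, denom, out=out, where=denom != 0)
    return out


def ndvi(nir, red):
    """Normalized Difference Vegetation Index."""
    return normalized_difference(nir, red)


def ndwi_green_nir(green, nir):
    """NDWI in its green/NIR form (assumed variant for this sketch)."""
    return normalized_difference(green, nir)


if __name__ == "__main__":
    nir = np.array([0.8, 0.5, 0.0])
    red = np.array([0.2, 0.5, 0.0])
    print(ndvi(nir, red))
```

Both indices lie in [-1, 1] for non-negative reflectances; dense, healthy vegetation tends toward high NDVI because it reflects strongly in NIR and absorbs red light.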

Emergent morphogenesis via planar fabrication enabled by a reduced model of composites

Aug 11, 2025

Abstract: The ability to engineer complex three-dimensional shapes from planar sheets with precise, programmable control underpins emerging technologies in soft robotics, reconfigurable devices, and functional materials. Here, we present a reduced-order numerical and experimental framework for a bilayer system consisting of a stimuli-responsive thermoplastic sheet (Shrinky Dink) bonded to a kirigami-patterned, inert plastic layer. Upon uniform heating, the active layer contracts while the patterned layer constrains in-plane stretch but allows out-of-plane bending, yielding programmable 3D morphologies from simple planar precursors. Our approach enables efficient computational design and scalable manufacturing of 3D forms with a single-layer reduced model that captures the coupled mechanics of stretching and bending. Unlike traditional bilayer modeling, our framework collapses the multilayer composite into a single layer of nodes and elements, reducing the degrees of freedom and enabling simulation on a 2D geometry. This is achieved by introducing a novel energy formulation that captures the coupling between in-plane stretch mismatch and out-of-plane bending, extending beyond simple isotropic linear elastic models. Experimentally, we establish a fully planar, repeatable fabrication protocol using a stimuli-responsive thermoplastic and a laser-cut inert plastic layer. The programmed strain mismatch drives an array of 3D morphologies, such as bowls, canoes, and flower petals, all verified by both simulation and physical prototypes.

MAT-DiSMech: A Discrete Differential Geometry-based Computational Tool for Simulation of Rods, Shells, and Soft Robots

Apr 24, 2025

Abstract: Accurate and efficient simulation tools are essential in robotics, enabling the visualization of system dynamics and the validation of control laws before committing resources to physical experimentation. Developing physically accurate simulation tools is particularly challenging in soft robotics, largely due to the prevalence of geometrically nonlinear deformation. A variety of robot simulators tackle this challenge by using simplified modeling techniques, such as lumped mass models, which lead to physical inaccuracies in real-world applications. On the other hand, high-fidelity simulation methods for soft structures, like finite element analysis, offer increased accuracy but lead to higher computational costs. In light of this, we present a Discrete Differential Geometry-based simulator that provides a balance between physical accuracy and computational speed. Building on an extensive body of research on rod and shell-based representations of soft robots, our tool provides a pathway to accurately model soft robots in a computationally tractable manner. Our open-source MATLAB-based framework is capable of simulating the deformations of rods, shells, and their combinations, primarily utilizing implicit integration techniques. The software design is modular, allowing the user to customize the code, for example by adding new external forces and imposing custom boundary conditions. Implementations of prevalent forces encountered in robotics, including gravity, contact, kinetic and viscous friction, and aerodynamic drag, are provided. We provide several illustrative examples that showcase the capabilities and validate the physical accuracy of the simulator. The open-source code is available at https://github.com/StructuresComp/dismech-matlab. We anticipate that the proposed simulator can serve as an effective digital twin tool, enhancing the Sim2Real pathway in soft robotics research.
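To give a sense of why implicit integration matters for stiff elastic systems, the sketch below applies backward (implicit) Euler to a single stiff mass-spring-damper. It is written in Python for consistency with the other examples on this page and is not taken from MAT-DiSMech; the function names and parameter values are assumptions. The system here is linear, so each step is one linear solve, whereas a nonlinear rod or shell model would generally need an iterative solver such as Newton's method at every step.

```python
import numpy as np


def backward_euler_step(y, dt, m, k, c):
    """Advance y = [x, v] by one step for m*x'' = -k*x - c*x' (backward Euler)."""
    A = np.array([[0.0, 1.0],
                  [-k / m, -c / m]])
    # Implicit update: solve (I - dt*A) y_next = y for y_next.
    return np.linalg.solve(np.eye(2) - dt * A, y)


def simulate_spring(steps=1000, dt=1e-2, m=1.0, k=1e6, c=0.0):
    """Integrate a stiff spring from x = 1, v = 0 with a large time step."""
    y = np.array([1.0, 0.0])
    for _ in range(steps):
        y = backward_euler_step(y, dt, m, k, c)
    return y


if __name__ == "__main__":
    print(simulate_spring())
```

With these values the natural frequency times the step size is 10, so an explicit Euler step would diverge, while the implicit step stays bounded. The price is numerical damping: backward Euler dissipates energy even when `c = 0`.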

Modeling, Characterization, and Control of Bacteria-inspired Bi-flagellated Mechanism with Tumbling

Jun 30, 2023

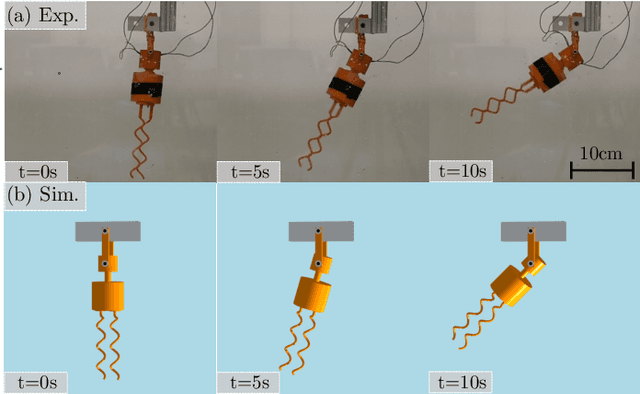

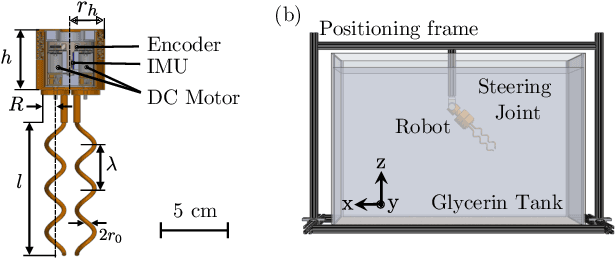

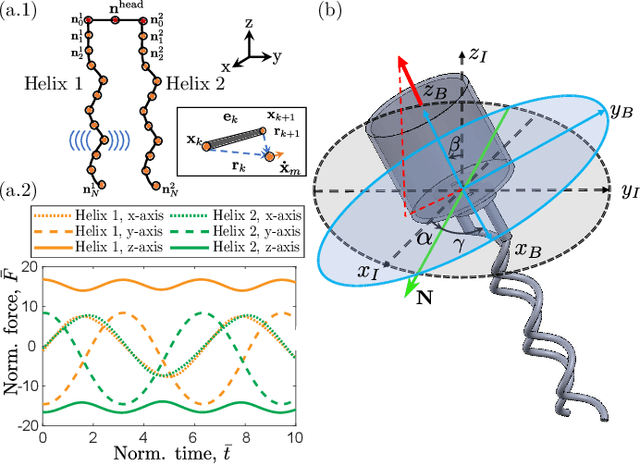

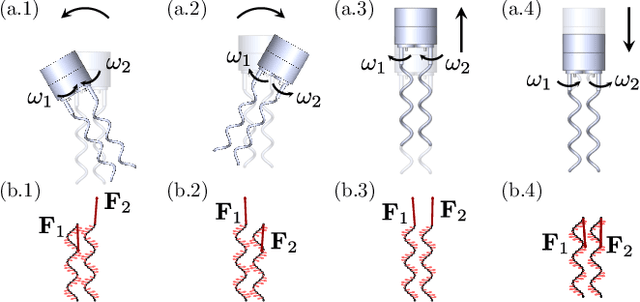

Abstract: Multi-flagellated bacteria utilize the hydrodynamic interaction between their filamentary tails, known as flagella, to swim and change their swimming direction in low Reynolds number flow. This interaction, referred to as bundling and tumbling, is often overlooked in simplified hydrodynamic models such as Resistive Force Theories (RFT). However, for the development of efficient and steerable robots inspired by bacteria, it becomes crucial to exploit this interaction. In this paper, we present the construction of a macroscopic bio-inspired robot featuring two rigid flagella arranged as right-handed helices, along with a cylindrical head. By rotating the flagella in opposite directions, the robot's body can reorient itself through repeatable and controllable tumbling. To accurately model this bi-flagellated mechanism in low Reynolds number flow, we employ a coupling of rigid body dynamics and the method of Regularized Stokeslet Segments (RSS). Unlike RFT, RSS takes into account the hydrodynamic interaction between distant filamentary structures. Furthermore, we explore the parameter space to optimize the propulsion and torque of the system. To achieve the desired reorientation of the robot, we propose a tumble control scheme that involves modulating the rotation direction and speed of the two flagella. By implementing this scheme, the robot can effectively reorient itself to attain the desired attitude. Notably, the overall scheme boasts a simplified design and control as it only requires two control inputs. With our macroscopic framework serving as a foundation, we envision the eventual miniaturization of this technology to construct mobile and controllable micro-scale bacterial robots.

Agronav: Autonomous Navigation Framework for Agricultural Robots and Vehicles using Semantic Segmentation and Semantic Line Detection

Apr 10, 2023

Abstract: The successful implementation of vision-based navigation in agricultural fields hinges upon two critical components: 1) the accurate identification of key components within the scene, and 2) the identification of lanes through the detection of boundary lines that separate the crops from the traversable ground. We propose Agronav, an end-to-end vision-based autonomous navigation framework, which outputs the centerline from the input image by sequentially processing it through semantic segmentation and semantic line detection models. We also present Agroscapes, a pixel-level annotated dataset collected across six different crops, captured from varying heights and angles. This ensures that the framework trained on Agroscapes is generalizable across both ground and aerial robotic platforms. Code, models, and dataset will be released at https://github.com/shivamkumarpanda/agronav.
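The last stage of such a pipeline, turning two detected boundary lines into a centerline, can be sketched as follows. The abstract does not describe how Agronav performs this step, so the approach here (averaging the x-positions of the left and right boundaries at two image rows) and the names `x_at_row` and `centerline` are assumptions made for illustration only. Lines are given as pairs of `(x, y)` pixel endpoints.

```python
def x_at_row(line, y):
    """Return the x-coordinate where a line through two (x, y) points crosses row y."""
    (x0, y0), (x1, y1) = line
    if y1 == y0:
        raise ValueError("a horizontal line does not cross a single row at one x")
    t = (y - y0) / (y1 - y0)
    return x0 + t * (x1 - x0)


def centerline(left, right, y_top, y_bottom):
    """Return two (x, y) endpoints midway between the left and right boundary lines."""
    return tuple(
        ((x_at_row(left, y) + x_at_row(right, y)) / 2, y)
        for y in (y_top, y_bottom)
    )


if __name__ == "__main__":
    # Hypothetical boundary lines in a 640x480 image, converging toward the horizon.
    left = ((100, 480), (300, 0))
    right = ((540, 480), (340, 0))
    print(centerline(left, right, y_top=0, y_bottom=480))
```

A navigation controller could then steer on the offset and angle of this centerline relative to the image's vertical midline.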

Sim2Real Physically Informed Neural Controllers for Robotic Deployment of Deformable Linear Objects

Mar 05, 2023

Abstract: Deformable linear objects (DLOs), such as rods, cables, and ropes, play important roles in daily life. However, manipulation of DLOs is challenging as large geometrically nonlinear deformations may occur during the manipulation process. This problem is made even more difficult as the different deformation modes (e.g., stretching, bending, and twisting) may result in elastic instabilities during manipulation. In this paper, we formulate a physics-guided data-driven method to solve a challenging manipulation task: accurately deploying a DLO (an elastic rod) onto a rigid substrate along various prescribed patterns. Our framework combines machine learning, scaling analysis, and physics-based simulations to develop a physically informed neural controller for deployment. We explore the complex interplay between the gravitational and elastic energies of the manipulated DLO and obtain a control method for DLO deployment that is robust against friction and material properties. Out of the numerous geometrical and material properties of the rod and substrate, we show through physical analysis that only three non-dimensional parameters are needed to describe the deployment process. Therefore, the essence of the control law for the manipulation task can be constructed with a low-dimensional model, drastically increasing the computation speed. The effectiveness of our optimal control scheme is shown through a comprehensive robotic case study comparing against a heuristic control method for deploying rods for a wide variety of patterns. In addition, we showcase the practicality of our control scheme by having a robot accomplish challenging high-level tasks such as mimicking human handwriting and tying knots.
