Luca Calatroni

On the Sample Complexity of Learning for Blind Inverse Problems

Dec 29, 2025

Abstract: Blind inverse problems arise in many experimental settings where the forward operator is partially or entirely unknown. In this context, methods developed for the non-blind case cannot be adapted in a straightforward manner. Recently, data-driven approaches have been proposed to address blind inverse problems, demonstrating strong empirical performance and adaptability. However, these methods often lack interpretability and are not supported by rigorous theoretical guarantees, limiting their reliability in applied domains such as imaging inverse problems. In this work, we shed light on learning in blind inverse problems within the simplified yet insightful framework of Linear Minimum Mean Square Estimators (LMMSEs). We provide an in-depth theoretical analysis, deriving closed-form expressions for optimal estimators and extending classical results. In particular, we establish equivalences with suitably chosen Tikhonov-regularized formulations, where the regularization depends explicitly on the distributions of the unknown signal, the noise, and the random forward operators. We also prove convergence results under appropriate source condition assumptions. Furthermore, we derive rigorous finite-sample error bounds that characterize the performance of learned estimators as a function of the noise level, problem conditioning, and number of available samples. These bounds explicitly quantify the impact of operator randomness and reveal the associated convergence rates as this randomness vanishes. Finally, we validate our theoretical findings through illustrative numerical experiments that confirm the predicted convergence behavior.
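
To make the LMMSE setting concrete, here is a minimal numerical sketch under assumptions that are not taken from the paper: a finite-dimensional model $y = Ax + e$ with zero-mean $x$ and $e$, covariances $\Sigma_x$ and $\Sigma_e$, and a random matrix $A$ independent of both. Independence gives $\mathbb{E}[xy^\top] = \Sigma_x \mathbb{E}[A]^\top$ and $\mathbb{E}[yy^\top] = \mathbb{E}[A\Sigma_x A^\top] + \Sigma_e$, so the optimal linear estimator is $W = \Sigma_x \mathbb{E}[A]^\top(\mathbb{E}[A\Sigma_x A^\top] + \Sigma_e)^{-1}$. The code compares it with the estimator learned by least squares from samples; the function names, the perturbation model $A = A_0 + \delta G$, and all parameter values are hypothetical. For a deterministic $A$ with $\Sigma_x = I$ and $\Sigma_e = \sigma^2 I$, $W$ reduces to the Tikhonov solution operator $(A^\top A + \sigma^2 I)^{-1}A^\top$, which the first check confirms.

```python
import numpy as np


def lmmse_random_operator(A_samples, sigma_x, sigma_e):
    """LMMSE matrix W = C_xy C_yy^{-1} for y = A x + e with a random operator A.

    Assumes zero-mean x and e with covariances sigma_x and sigma_e, and A, x, e
    mutually independent. Expectations over A are approximated by averaging
    over A_samples, an array of shape (K, m, n).
    """
    A_mean = A_samples.mean(axis=0)                                   # E[A]
    A_quad = (A_samples @ sigma_x @ A_samples.transpose(0, 2, 1)).mean(axis=0)  # E[A Sigma_x A^T]
    C_xy = sigma_x @ A_mean.T                                         # E[x y^T]
    C_yy = A_quad + sigma_e                                           # E[y y^T]
    # C_yy is symmetric, hence W = C_xy C_yy^{-1} = (C_yy^{-1} C_xy^T)^T
    return np.linalg.solve(C_yy, C_xy.T).T


def learned_linear_estimator(X, Y):
    """Empirical risk minimiser of sum_i ||x_i - W y_i||^2 (samples are columns)."""
    # Normal equations: W (Y Y^T) = X Y^T
    return np.linalg.solve(Y @ Y.T, Y @ X.T).T


rng = np.random.default_rng(0)
n, N, sigma, delta = 3, 200_000, 0.5, 0.5
A0 = rng.standard_normal((n, n))
sigma_x, sigma_e = np.eye(n), sigma**2 * np.eye(n)

# Deterministic operator: the LMMSE coincides with the Tikhonov solution operator
W_det = lmmse_random_operator(A0[None], sigma_x, sigma_e)
W_tik = np.linalg.solve(A0.T @ A0 + sigma**2 * np.eye(n), A0.T)
print(np.allclose(W_det, W_tik))  # True

# Random operator A = A0 + delta * G with G standard Gaussian (hypothetical model)
A_samples = A0 + delta * rng.standard_normal((N, n, n))
X = rng.standard_normal((n, N))
Y = np.einsum("kij,jk->ik", A_samples, X) + sigma * rng.standard_normal((n, N))
W_pop = lmmse_random_operator(A_samples, sigma_x, sigma_e)
W_hat = learned_linear_estimator(X, Y)
print(np.abs(W_hat - W_pop).max())  # sampling error, typically shrinking as N grows
```

In this particular perturbation model with $\Sigma_x = I$, $\mathbb{E}[AA^\top] = A_0A_0^\top + n\delta^2 I$, so the operator randomness acts like an extra Tikhonov weight $n\delta^2$ added to $\sigma^2$.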

Deep Equilibrium models for Poisson Imaging Inverse problems via Mirror Descent

Jul 15, 2025

Abstract: Deep Equilibrium Models (DEQs) are implicit neural networks whose output is defined as a fixed point. They have recently gained attention for learning image regularization functionals, particularly in settings involving Gaussian fidelities, where assumptions on the forward operator ensure contractiveness of standard (proximal) Gradient Descent operators. In this work, we extend the application of DEQs to Poisson inverse problems, where the data fidelity term is more appropriately modeled by the Kullback-Leibler divergence. To this end, we introduce a novel DEQ formulation based on Mirror Descent, defined in terms of a tailored non-Euclidean geometry that naturally adapts to the structure of the data term. This enables the learning of neural regularizers within a principled training framework. We derive sufficient conditions to guarantee the convergence of the learned reconstruction scheme and propose computational strategies that enable both efficient training and fully parameter-free inference. Numerical experiments show that our method outperforms traditional model-based approaches and performs comparably to Bregman Plug-and-Play methods, while mitigating their typical drawbacks, namely sensitivity to initialization and the need for careful hyperparameter tuning. The code is publicly available at https://github.com/christiandaniele/DEQ-MD.
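
The mirror-descent building block can be illustrated on the KL data term alone. This sketch assumes Burg's entropy $h(x) = -\sum_j \log x_j$ as mirror map (a common choice for Poisson data, not necessarily the geometry used in DEQ-MD), a nonnegative operator $A$, and no learned regularizer; the function names are hypothetical. With this $h$, the update $\nabla h(x^+) = \nabla h(x) - \tau \nabla f(x)$ has the closed form $x^+ = x / (1 + \tau\, x \odot \nabla f(x))$. The KL term is $\|y\|_1$-smooth relative to $h$, so any $\tau \le 1/\|y\|_1$ makes the objective non-increasing, and the choice $\tau = 1/(2\|y\|_1)$ also keeps every denominator at least $1/2$, hence the iterates strictly positive. In a DEQ, an analogous update including a learned regularizer would be iterated to a fixed point; the loop below only mimics that with a fixed-point stopping rule.

```python
import numpy as np


def kl_fidelity(x, A, y):
    """KL data term sum_i [(Ax)_i - y_i log (Ax)_i], up to an additive constant."""
    Ax = A @ x
    return float(np.sum(Ax - y * np.log(Ax)))


def kl_mirror_descent(A, y, x0, n_iter=500, tol=1e-10):
    """Mirror descent on the KL term with Burg entropy h(x) = -sum_j log x_j.

    The mirror step grad h(x_new) = grad h(x) - tau * grad f(x) has the closed
    form x_new = x / (1 + tau * x * grad f(x)). With tau = 0.5 / ||y||_1 the
    denominator stays >= 0.5, so iterates remain positive.
    """
    tau = 0.5 / np.sum(y)
    x = np.asarray(x0, dtype=float).copy()
    history = [kl_fidelity(x, A, y)]
    for _ in range(n_iter):
        grad = A.T @ (1.0 - y / (A @ x))        # gradient of the KL term
        x_new = x / (1.0 + tau * x * grad)      # closed-form Bregman (mirror) step
        history.append(kl_fidelity(x_new, A, y))
        # Stop once x is (numerically) a fixed point of the update map
        converged = np.linalg.norm(x_new - x) <= tol * np.linalg.norm(x)
        x = x_new
        if converged:
            break
    return x, history


rng = np.random.default_rng(0)
A = rng.uniform(0.1, 1.0, size=(30, 10))        # nonnegative forward operator
x_true = rng.uniform(1.0, 5.0, size=10)
y = rng.poisson(A @ x_true).astype(float)       # Poisson-distributed measurements
x_hat, hist = kl_mirror_descent(A, y, x0=np.ones(10))
print(hist[0], hist[-1])                        # the KL term is non-increasing
```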

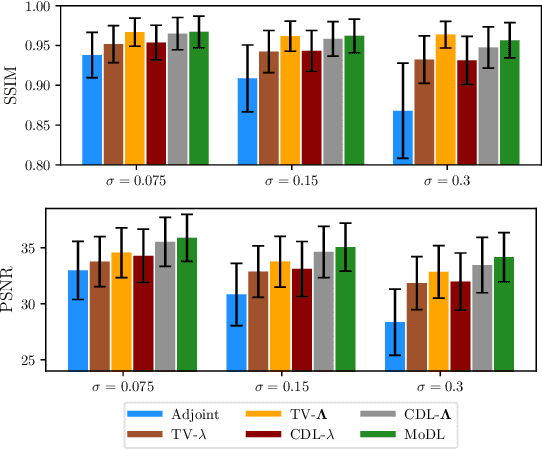

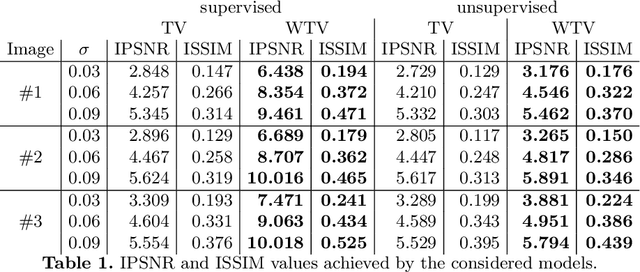

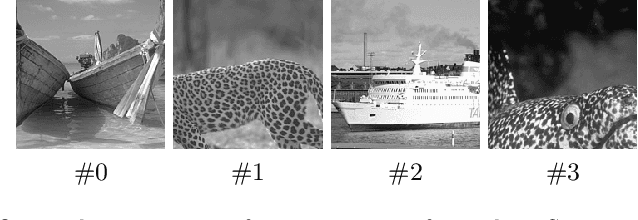

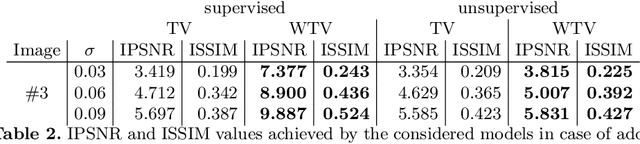

Patch-based learning of adaptive Total Variation parameter maps for blind image denoising

Mar 20, 2025

Abstract: We consider a patch-based learning approach, defined in terms of neural networks, to estimate spatially adaptive regularisation parameter maps for image denoising with weighted Total Variation, and test it in situations where the noise distribution is unknown. As an example, we consider situations where the noise is either Gaussian or Poisson and perform preliminary model selection with a standard binary classification network. Then, we define a patch-based approach where, at each image pixel, an optimal weighting between TV regularisation and the corresponding data fidelity is learned in a supervised way, in a sliding-window fashion, from reference natural image patches by optimising SSIM. Extensive numerical results are reported for both noise models, showing significant improvement over results obtained with an optimal scalar regularisation parameter.
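
A weighted TV parameter map assigns each location its own regularisation weight. The sketch below is an illustration rather than the paper's method: it solves the 1D problem $\min_x \tfrac12\|x - f\|_2^2 + \sum_i \lambda_i |x_{i+1} - x_i|$ with a Gaussian fidelity by projected gradient on the dual (a Chambolle-type scheme). The map `lam` is set by hand here; learning it from patches, the Poisson fidelity, and SSIM-based training are not reproduced, and all names are hypothetical.

```python
import numpy as np


def grad_1d(x):
    """Forward differences (D x)_i = x_{i+1} - x_i, length n - 1."""
    return np.diff(x)


def grad_1d_adjoint(p):
    """Adjoint D^T p, length len(p) + 1."""
    return np.concatenate(([-p[0]], p[:-1] - p[1:], [p[-1]]))


def weighted_tv_denoise_1d(f, lam, n_iter=10_000):
    """Solve min_x 0.5 ||x - f||^2 + sum_i lam_i |(D x)_i| through its dual.

    Dual: min_p 0.5 ||f - D^T p||^2 subject to |p_i| <= lam_i, solved by
    projected gradient with step 1/4 <= 1/||D||^2; the primal is x = f - D^T p.
    """
    p = np.zeros(len(f) - 1)
    for _ in range(n_iter):
        x = f - grad_1d_adjoint(p)
        p = np.clip(p + 0.25 * grad_1d(x), -lam, lam)   # project onto the box |p_i| <= lam_i
    return f - grad_1d_adjoint(p)


rng = np.random.default_rng(1)
clean = np.concatenate([np.zeros(20), np.ones(20)])
f = clean + 0.1 * rng.standard_normal(40)
lam_scalar = np.full(39, 0.2)
lam_map = lam_scalar.copy()
lam_map[17:22] = 0.01            # hypothetical map: weak smoothing around the jump
x_scalar = weighted_tv_denoise_1d(f, lam_scalar)
x_map = weighted_tv_denoise_1d(f, lam_map)
```

Setting $\lambda_i = 0$ leaves the data untouched, while very large weights drive the solution to the constant mean of $f$; a learned map interpolates between these regimes location by location.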

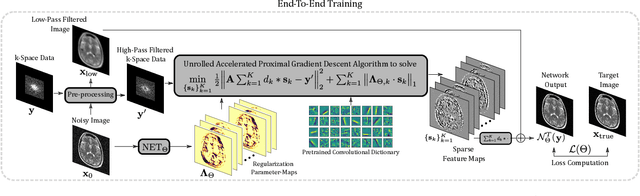

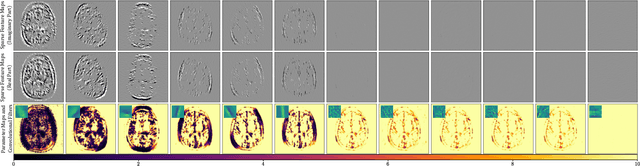

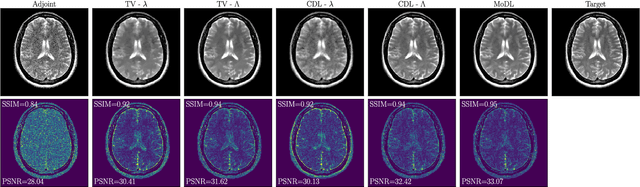

Learning Spatially Adaptive $\ell_1$-Norms Weights for Convolutional Synthesis Regularization

Mar 12, 2025

Abstract: We propose an unrolled algorithm approach for learning spatially adaptive parameter maps in the framework of convolutional synthesis-based $\ell_1$ regularization. More precisely, we consider a family of pre-trained convolutional filters and estimate deeply parametrized, spatially varying parameters applied to the sparse feature maps by unrolling a FISTA algorithm that solves the underlying sparse estimation problem. The proposed approach is evaluated for image reconstruction of low-field MRI and compared to spatially adaptive and non-adaptive analysis-type procedures relying on Total Variation regularization and to a well-established model-based deep learning approach. We show that the proposed approach produces results that are visually and quantitatively comparable to those of the latter approaches while remaining highly interpretable. In particular, the inferred parameter maps quantify the local contribution of each filter to the reconstruction, which provides valuable insight into the algorithm mechanism and could potentially be used to discard unsuited filters.
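
The core of the unrolled scheme is FISTA with a spatially varying soft-threshold. A minimal sketch, assuming a generic dense synthesis matrix `A` in place of the convolutional filter bank and a hand-set weight map `lam` in place of the network-predicted one; the names are hypothetical, and the fixed iteration count `n_iter` plays the role of the unrolled depth. When `A` has orthonormal columns the problem decouples, so the output must equal componentwise soft-thresholding of $A^\top y$, which gives a simple correctness check.

```python
import numpy as np


def soft_threshold(v, thresh):
    """Componentwise soft-thresholding: proximal map of sum_i thresh_i |v_i|."""
    return np.sign(v) * np.maximum(np.abs(v) - thresh, 0.0)


def fista_weighted_l1(A, y, lam, n_iter=100):
    """FISTA for min_z 0.5 ||A z - y||^2 + sum_i lam_i |z_i|, run for n_iter steps."""
    L = np.linalg.norm(A, 2) ** 2                 # Lipschitz constant of the gradient
    z = np.zeros(A.shape[1])
    w, t = z.copy(), 1.0
    for _ in range(n_iter):
        # Gradient step on the data term, then weighted soft-thresholding
        z_new = soft_threshold(w - A.T @ (A @ w - y) / L, lam / L)
        t_new = (1.0 + np.sqrt(1.0 + 4.0 * t * t)) / 2.0
        w = z_new + ((t - 1.0) / t_new) * (z_new - z)   # momentum extrapolation
        z, t = z_new, t_new
    return z


rng = np.random.default_rng(2)
Q, _ = np.linalg.qr(rng.standard_normal((20, 10)))   # synthesis operator with orthonormal columns
y = rng.standard_normal(20)
lam = np.full(10, 0.5)
lam[:3] = 0.05                                        # hypothetical map: weaker penalty on 3 atoms
z_hat = fista_weighted_l1(Q, y, lam)
```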

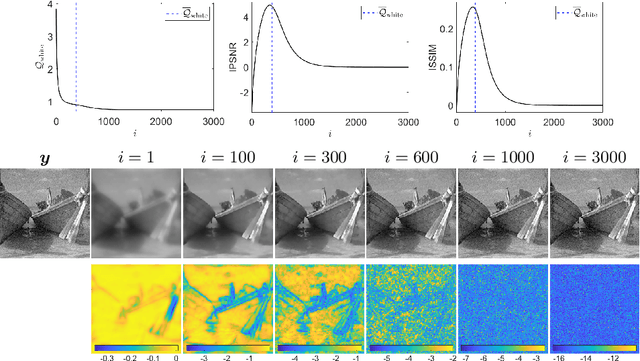

Whiteness-based bilevel estimation of weighted TV parameter maps for image denoising

Mar 10, 2025

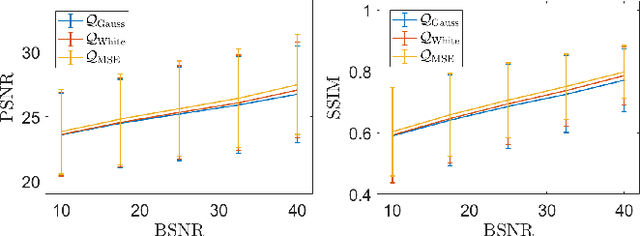

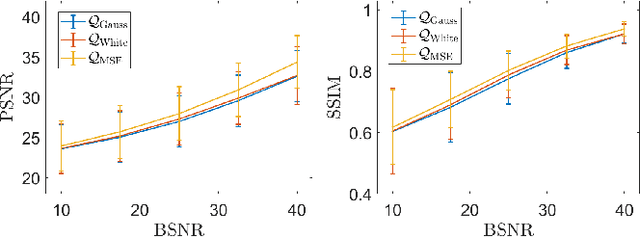

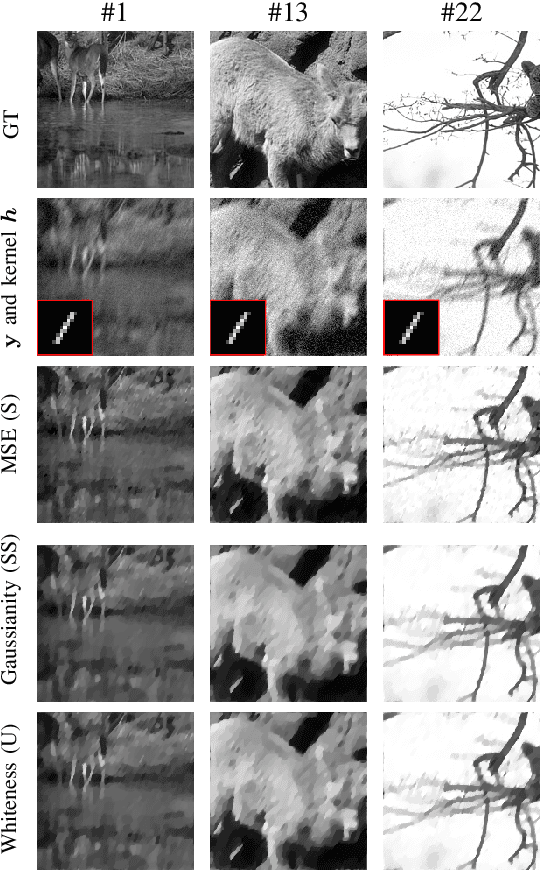

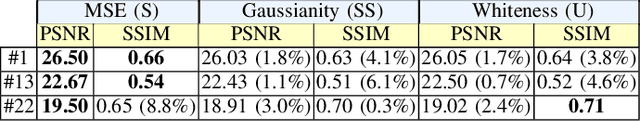

Abstract: We consider a bilevel optimisation strategy based on a normalised residual whiteness loss for estimating weighted total variation parameter maps for denoising images corrupted by additive white Gaussian noise. Compared to supervised and semi-supervised approaches relying on prior knowledge of (approximate) reference data and/or information on the noise magnitude, the proposal is fully unsupervised. To avoid overfitting the noise, an early stopping strategy is used, relying on simple statistics of optimal performances on a set of natural images. Numerical results comparing the supervised and unsupervised procedures for scalar and pixel-dependent parameter maps are shown.
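
A normalised whiteness measure of a residual $r$ with $N$ entries can be sketched as $\mathcal{W}(r) = \|r \star r\|_2^2 / \|r\|_2^4$, where $r \star r$ is the circular autocorrelation. This specific form is an assumption borrowed from the residual whiteness literature and may differ in details from the loss used in the paper; the function name is hypothetical. By Parseval's identity, $\mathcal{W}(r) = N \sum_k |\hat r_k|^4 / (\sum_k |\hat r_k|^2)^2$, so one FFT suffices. The value is 1 when every nonzero-lag autocorrelation vanishes and $N$ for a constant residual.

```python
import numpy as np


def residual_whiteness(r):
    """W(r) = ||r * r||^2 / ||r||^4 with circular autocorrelation, via the FFT.

    |FFT(r)|^2 is the FFT of the autocorrelation, and Parseval's identity turns
    its squared norm into a sum of |FFT(r)|^4. Works for 1D signals and 2D
    images alike, since np.fft.fftn transforms over all axes.
    """
    power = np.abs(np.fft.fftn(r)) ** 2
    return r.size * np.sum(power**2) / np.sum(power) ** 2


rng = np.random.default_rng(0)
white = rng.standard_normal((64, 64))
smooth = np.cumsum(np.cumsum(white, axis=0), axis=1)   # strongly correlated residual
print(residual_whiteness(white))    # roughly 2 for Gaussian white noise
print(residual_whiteness(smooth))   # much larger
```

In a bilevel scheme, $\mathcal{W}$ would be evaluated on the residual between the data and the reconstruction obtained with a candidate parameter map, and minimised over that map.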

Whiteness-based bilevel learning of regularization parameters in imaging

Mar 10, 2024

Abstract: We consider an unsupervised bilevel optimization strategy for learning regularization parameters in the context of imaging inverse problems in the presence of additive white Gaussian noise. Compared to supervised and semi-supervised metrics relying on prior knowledge of reference data and/or on (partial) knowledge of the noise statistics, the proposed approach optimizes the whiteness of the residual between the observed data and the observation model, with no need for ground-truth data. We validate the approach on standard Total Variation-regularized image deconvolution problems, showing that the proposed quality metric provides estimates close to the mean-square error oracle and to discrepancy-based principles.

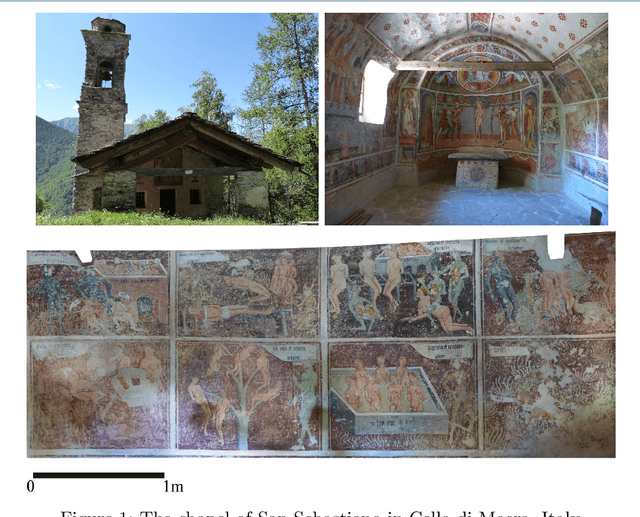

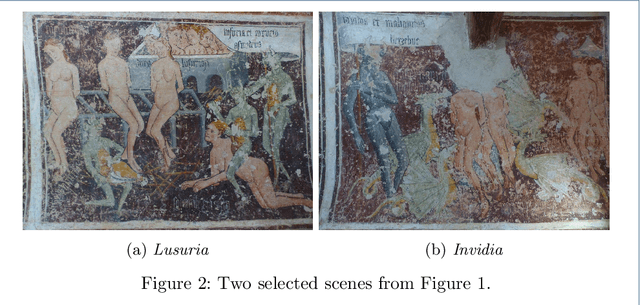

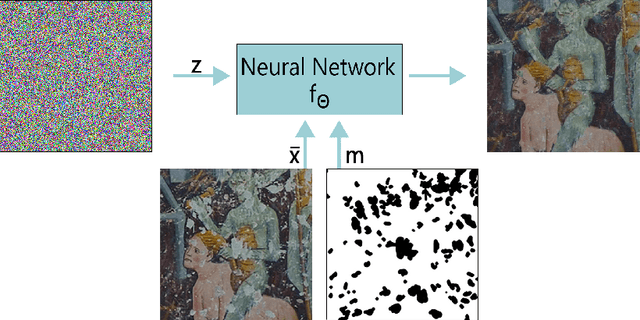

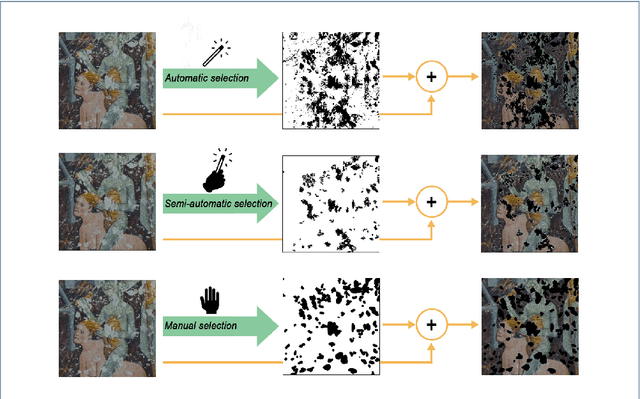

Deep image prior inpainting of ancient frescoes in the Mediterranean Alpine arc

Jun 25, 2023

Abstract: The unprecedented success of image reconstruction approaches based on deep neural networks has revolutionised both the processing and the analysis paradigms in several applied disciplines. In the field of digital humanities, the digital reconstruction of ancient frescoes is particularly challenging due to the scarcity of available training data caused by ageing, wear and tear, and retouching over time. To overcome these difficulties, we consider the Deep Image Prior (DIP) inpainting approach, which computes reconstructions by progressively updating an untrained convolutional neural network so as to match the reliable information in the image at hand while promoting regularisation elsewhere. In comparison with state-of-the-art approaches (based on variational/PDE and patch-based methods), DIP-based inpainting reduces artefacts and better adapts to contextual/non-local information, thus providing a valuable and effective tool for art historians. As a case study, we apply this approach to reconstruct missing image contents in a dataset of highly damaged digital images of medieval paintings located in several chapels in the Mediterranean Alpine Arc, and provide a detailed description of how visible and invisible (e.g., infrared) information can be integrated for identifying and reconstructing damaged image regions.

Fluctuation-based deconvolution in fluorescence microscopy using plug-and-play denoisers

Mar 20, 2023

Abstract: The spatial resolution of images of living samples obtained by fluorescence microscopes is physically limited by the diffraction of visible light, which makes the study of entities smaller than the diffraction barrier (around 200 nm in the x-y plane) very challenging. To overcome this limitation, several deconvolution and super-resolution techniques have been proposed. Within the framework of inverse problems, modern approaches in fluorescence microscopy reconstruct a super-resolved image from a temporal stack of frames by carefully designing suitable hand-crafted sparsity-promoting regularisers. Numerically, such problems are solved by proximal gradient-based iterative schemes. Aiming at a reconstruction better adapted to sample geometries (e.g. thin filaments), we adopt a plug-and-play denoising approach with convergence guarantees and replace the proximity operator associated with the explicit image regulariser with an image denoiser (i.e. a pre-trained network) which, upon appropriate training, mimics the action of an implicit prior. To account for the independence of the fluctuations between molecules, the model relies on second-order statistics. The denoiser is then trained on covariance images coming from data representing sequences of fluctuating fluorescent molecules with filament structure. The method is evaluated on both simulated and real fluorescence microscopy images, showing its ability to correctly reconstruct filament structures with high values of peak signal-to-noise ratio (PSNR).
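
Why second-order statistics help can be seen on a toy 1D model whose sizes, blur and blinking law are all hypothetical: frames $y_t = \Psi x_t + n_t$, where the emitter intensities $x_t$ fluctuate independently over time and $n_t$ is white noise of variance $s^2$. Independence makes $\mathrm{Cov}(x_t)$ diagonal, so the temporal covariance of the frames is $\Psi\,\mathrm{diag}(v)\,\Psi^\top + s^2 I$, with $v$ the emitter variances. The sketch checks this numerically; it does not include the plug-and-play denoiser.

```python
import numpy as np


def temporal_covariance(frames):
    """Empirical covariance (pixels x pixels) of a stack of shape (T, n_pixels)."""
    centred = frames - frames.mean(axis=0)
    return centred.T @ centred / (frames.shape[0] - 1)


rng = np.random.default_rng(0)
n, T, noise_sd = 32, 20_000, 0.05
grid = np.arange(n)
# Hypothetical 1D Gaussian blur matrix (PSF width 1.5 pixels)
psi = np.exp(-0.5 * ((grid[:, None] - grid[None, :]) / 1.5) ** 2)
support = np.zeros(n)
support[[5, 8, 20]] = 1.0                              # three emitters
# Independent blinking: each emitter is on with probability 0.3 in each frame
x = support * rng.binomial(1, 0.3, size=(T, n))
frames = x @ psi.T + noise_sd * rng.standard_normal((T, n))

r_y = temporal_covariance(frames)
v = support * 0.3 * 0.7                                # Bernoulli variances of the emitters
r_model = psi @ np.diag(v) @ psi.T + noise_sd**2 * np.eye(n)
print(np.abs(r_y - r_model).max())                     # small for large T
```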

COL0RME: Super-resolution microscopy based on sparse blinking fluorophore localization and intensity estimation

Aug 16, 2021

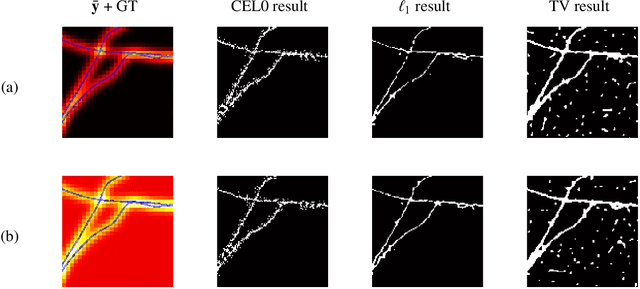

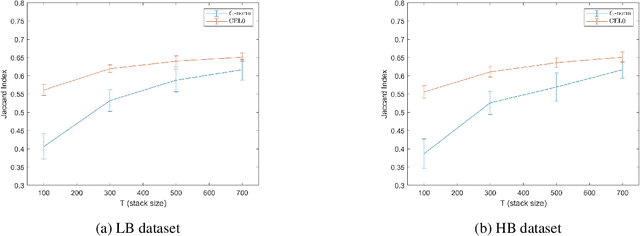

Abstract: To overcome the physical barriers caused by light diffraction, super-resolution techniques are often applied in fluorescence microscopy. State-of-the-art approaches require specific and often demanding acquisition conditions to achieve adequate levels of both spatial and temporal resolution. Analyzing the stochastic fluctuations of the fluorescent molecules provides a solution to the aforementioned limitations, as sufficiently high spatio-temporal resolution for live-cell imaging can be achieved using common microscopes and conventional fluorescent dyes. Based on this idea, we present COL0RME, a method for COvariance-based $\ell_0$ super-Resolution Microscopy with intensity Estimation, which achieves good spatio-temporal resolution by solving a sparse optimization problem in the covariance domain, and discuss automatic parameter selection strategies. The method is composed of two steps: in the first, both the emitters' independence and the sparse distribution of the fluorescent molecules are exploited to provide an accurate localization; in the second, real intensity values are estimated given the computed support. The paper presents several numerical results on both synthetic and real fluorescence microscopy images, together with comparisons with state-of-the-art approaches. Our results show that COL0RME outperforms competing methods that similarly exploit temporal fluctuations; in particular, it achieves better localization, reduces background artifacts and avoids fine parameter tuning.
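
The second step can be sketched, under simplifying assumptions, as a least-squares fit of the time-averaged frame restricted to the columns of the blur operator indexed by the estimated support. Background estimation and any regularisation used by COL0RME are omitted, and the toy blur matrix and function name are hypothetical.

```python
import numpy as np


def intensities_on_support(psi, y_mean, support_idx):
    """Least-squares intensities restricted to a known support.

    Solves min_{x_S} ||psi[:, S] x_S - y_mean||^2 and embeds the result into a
    full-length vector that is zero outside the support S.
    """
    x = np.zeros(psi.shape[1])
    coef, *_ = np.linalg.lstsq(psi[:, support_idx], y_mean, rcond=None)
    x[support_idx] = coef
    return x


n = 32
grid = np.arange(n)
psi = np.exp(-0.5 * ((grid[:, None] - grid[None, :]) / 1.5) ** 2)   # hypothetical blur
x_true = np.zeros(n)
x_true[[5, 8, 20]] = [2.0, 1.0, 3.0]
y_mean = psi @ x_true                              # noiseless mean frame
x_hat = intensities_on_support(psi, y_mean, [5, 8, 20])
print(np.round(x_hat[[5, 8, 20]], 6))              # [2. 1. 3.]
```

On noiseless data with a correct support the fit recovers the intensities exactly; restricting to a few columns turns the deconvolution into a small overdetermined system.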

A cortical-inspired sub-Riemannian model for Poggendorff-type visual illusions

Jan 29, 2021

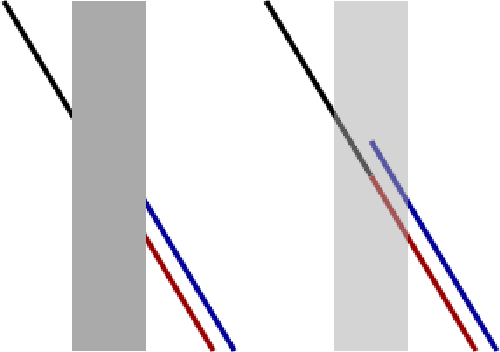

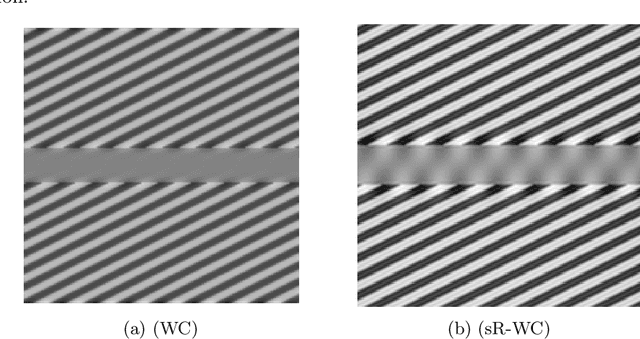

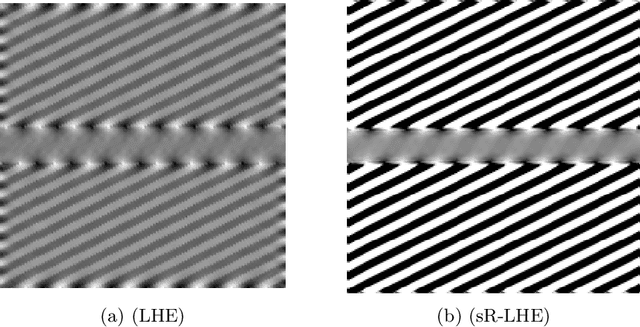

Abstract: We consider Wilson-Cowan-type models for the mathematical description of orientation-dependent Poggendorff-like illusions. Our modelling improves on two previously proposed cortical-inspired approaches by embedding the sub-Riemannian heat kernel into the neuronal interaction term, in agreement with the intrinsically anisotropic functional architecture of V1 based on both local and lateral connections. For the numerical realisation of both models, we consider standard gradient descent algorithms combined with Fourier-based approaches for the efficient computation of the sub-Laplacian evolution. Our numerical results show that the sub-Riemannian kernel reproduces visual misperceptions and inpainting-type biases numerically more strongly than the previous approaches.
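
The Fourier-based evolution can be sketched under explicit assumptions: orientations are sampled on $[0, \pi)$, the spatial grid is periodic with unit spacing, and the sub-Laplacian $X_1^2 + \beta^2 X_2^2$, with $X_1 = \cos\theta\,\partial_x + \sin\theta\,\partial_y$ and $X_2 = \partial_\theta$, is integrated by first-order Lie splitting. Each factor is exactly diagonal in Fourier space, but the splitting itself is an approximation and not necessarily the scheme used in the paper; the function name and parameters are hypothetical, and the coupling with the Wilson-Cowan dynamics is omitted.

```python
import numpy as np


def sub_laplacian_heat(u, t, beta=1.0, n_steps=10):
    """Approximate exp(t (X1^2 + beta^2 X2^2)) u by first-order Lie splitting.

    u has shape (K, H, W): K orientations theta_k = k * pi / K in [0, pi) and a
    periodic H x W spatial grid with unit spacing. X1 = cos(theta) d/dx +
    sin(theta) d/dy is diagonal in the spatial Fourier domain, with symbol
    -(xi cos(theta) + eta sin(theta))^2; X2 = d/dtheta is diagonal in the
    Fourier domain along theta, with symbol -m^2.
    """
    K, H, W = u.shape
    dt = t / n_steps
    theta = np.arange(K) * np.pi / K
    c, s = np.cos(theta)[:, None, None], np.sin(theta)[:, None, None]
    eta = 2 * np.pi * np.fft.fftfreq(H)[None, :, None]   # angular frequency along y (rows)
    xi = 2 * np.pi * np.fft.fftfreq(W)[None, None, :]    # angular frequency along x (columns)
    spatial_factor = np.exp(-dt * (xi * c + eta * s) ** 2)
    m = 2 * np.pi * np.fft.fftfreq(K, d=np.pi / K)       # angular frequencies for period pi
    angular_factor = np.exp(-dt * beta**2 * m**2)[:, None, None]
    for _ in range(n_steps):
        # Anisotropic diffusion along each orientation (one 2D FFT per theta slice);
        # np.real keeps the real part, since Nyquist bins on even grids are not
        # exactly Hermitian-symmetric under this symbol
        u = np.real(np.fft.ifft2(spatial_factor * np.fft.fft2(u)))
        # Diffusion in the orientation variable
        u = np.real(np.fft.ifft(angular_factor * np.fft.fft(u, axis=0), axis=0))
    return u


K, H, W = 16, 32, 32
u0 = np.zeros((K, H, W))
u0[0, H // 2, W // 2] = 1.0    # impulse at the grid centre with orientation theta = 0
u = sub_laplacian_heat(u0, t=2.0, beta=0.5)
print(u.sum())                 # mass is conserved: approximately 1.0
```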
