Liyuan Li

Combined CNN Transformer Encoder for Enhanced Fine-grained Human Action Recognition

Aug 03, 2022

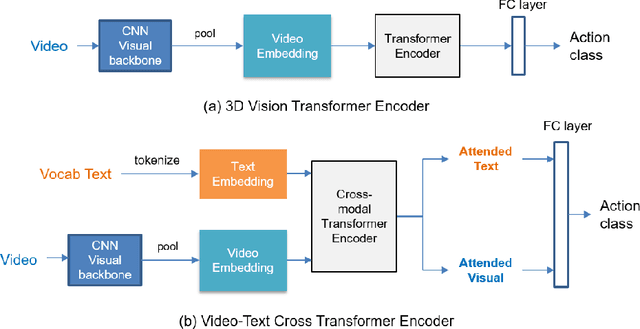

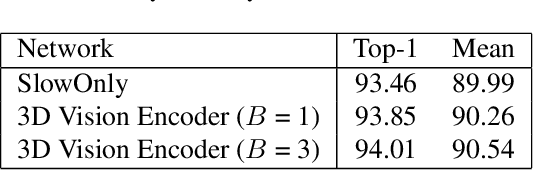

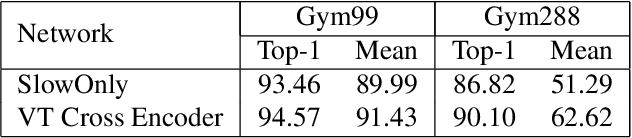

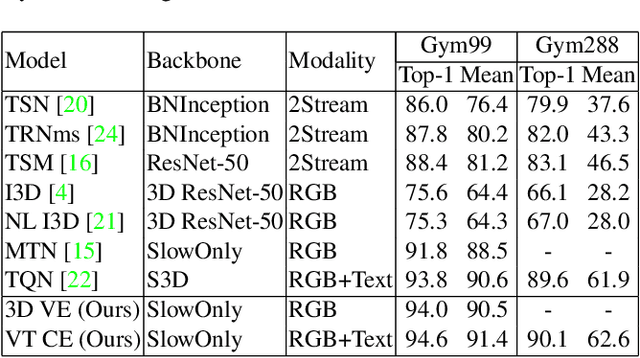

Abstract: Fine-grained action recognition is a challenging task in computer vision. Because fine-grained datasets have small inter-class variations in both space and time, a fine-grained action recognition model requires good temporal reasoning and discrimination of attribute action semantics. Leveraging the CNN's ability to capture high-level spatio-temporal feature representations and the Transformer's efficiency in modeling latent semantics and global dependencies, we investigate two frameworks that combine a CNN vision backbone with a Transformer encoder to enhance fine-grained action recognition: 1) a vision-based encoder that learns latent temporal semantics, and 2) a multi-modal video-text cross encoder that exploits additional text input and learns cross associations between visual and text semantics. Our experimental results show that both Transformer encoder frameworks effectively learn latent temporal semantics and cross-modality associations, with improved recognition performance over the CNN vision model. Both proposed architectures achieve new state-of-the-art performance on the FineGym benchmark dataset.
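To make the idea of an encoder capturing global dependencies across frames concrete, here is a minimal sketch of single-head scaled dot-product self-attention over per-frame feature vectors. It is not the paper's architecture: the feature values are hypothetical, the query, key and value projections are assumed to be identity, and a real Transformer encoder adds learned projections, multiple heads, positional encoding and feed-forward layers.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(frames):
    """Single-head scaled dot-product self-attention.

    Assumption: identity Q/K/V projections, so each output row is a
    convex combination of the input rows, weighted by similarity.
    """
    dim = len(frames[0])
    scale = math.sqrt(dim)
    attended = []
    for query in frames:
        # Similarity of this frame to every frame in the clip.
        scores = [sum(q * k for q, k in zip(query, key)) / scale for key in frames]
        weights = softmax(scores)
        # Weighted sum of all frame features (the "global" context).
        attended.append(
            [sum(w * value[d] for w, value in zip(weights, frames)) for d in range(dim)]
        )
    return attended


# Hypothetical per-frame features, standing in for CNN backbone outputs.
frame_features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
contextual = self_attention(frame_features)
```

Each row of `contextual` mixes information from every frame, with similar frames contributing more, which is the property the abstract relies on for temporal reasoning.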

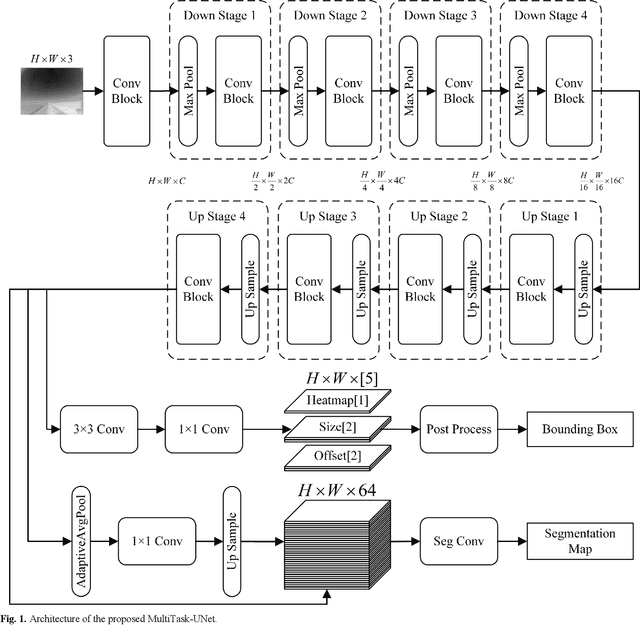

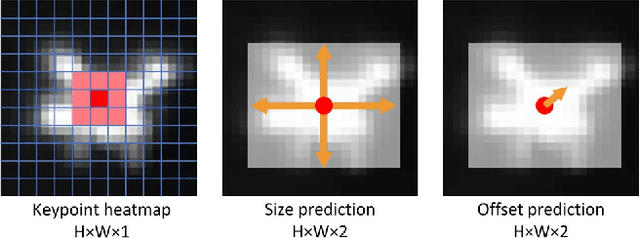

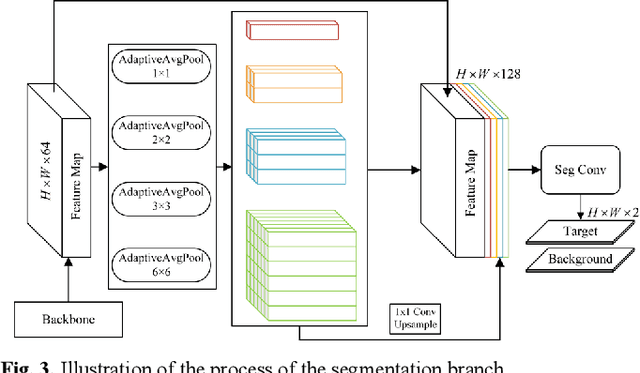

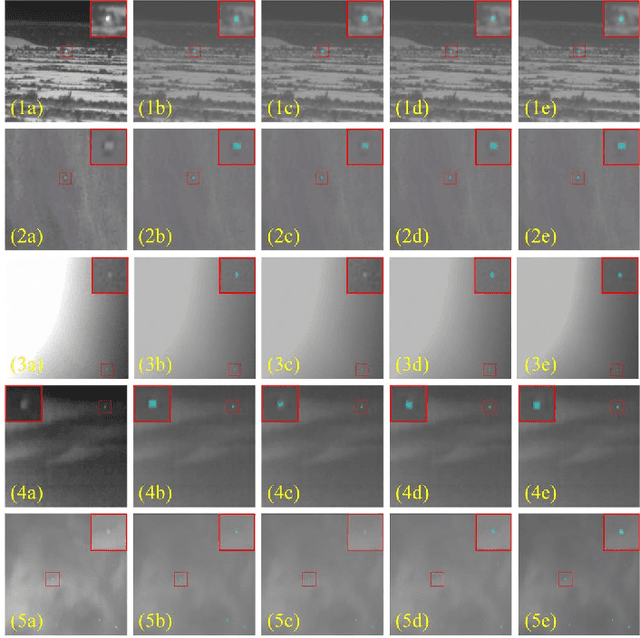

A Multi-task Framework for Infrared Small Target Detection and Segmentation

Jun 14, 2022

Abstract: Due to the complicated background and noise of infrared images, infrared small target detection is one of the most difficult problems in the field of computer vision. Most existing studies use semantic segmentation methods to achieve better results, and the centroid of each target is calculated from the segmentation map as the detection result. In contrast, we propose a novel end-to-end framework for infrared small target detection and segmentation in this paper. First, by using UNet as the backbone to maintain resolution and semantic information, our model achieves a higher detection accuracy than other state-of-the-art methods by attaching a simple anchor-free head. Then, a pyramid pooling module is used to further extract features and improve the precision of target segmentation. Next, we use semantic segmentation tasks, which pay more attention to pixel-level features, to assist the training process of object detection; this increases the average precision and allows the model to detect some targets that were previously not detectable. Furthermore, we develop a multi-task framework for infrared small target detection and segmentation. Compared to the composite single-task model, our multi-task learning model reduces complexity by nearly half and nearly doubles inference speed, while maintaining accuracy. The code and models are publicly available at https://github.com/Chenastron/MTUNet.
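The segmentation-based baseline mentioned in the abstract, where each target's centroid is computed from the segmentation map, can be sketched as follows. This is an illustrative sketch only, not code from the MTUNet repository: the function name `target_centroids`, the 4-connectivity choice and the toy mask are all assumptions.

```python
from collections import deque


def target_centroids(mask):
    """Return (row, col) centroids of 4-connected foreground blobs.

    `mask` is a binary segmentation map given as a list of lists of 0/1.
    """
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    centroids = []
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] != 1 or seen[r][c]:
                continue
            # Breadth-first flood fill to collect one connected target.
            queue = deque([(r, c)])
            seen[r][c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    inside = 0 <= ny < rows and 0 <= nx < cols
                    if inside and mask[ny][nx] == 1 and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # The centroid is the mean pixel coordinate of the blob.
            cy = sum(p[0] for p in pixels) / len(pixels)
            cx = sum(p[1] for p in pixels) / len(pixels)
            centroids.append((cy, cx))
    return centroids


# Toy segmentation map with one 2x2 target and one single-pixel target.
mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 0, 0, 0, 1],
]
centroids = target_centroids(mask)
```

Here detection quality depends entirely on the segmentation map, which is the dependency the proposed end-to-end detection head is meant to avoid.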

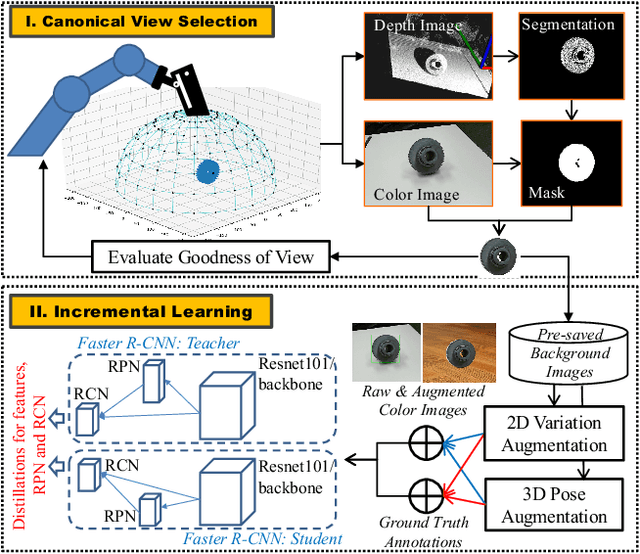

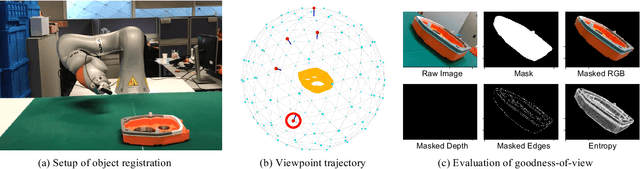

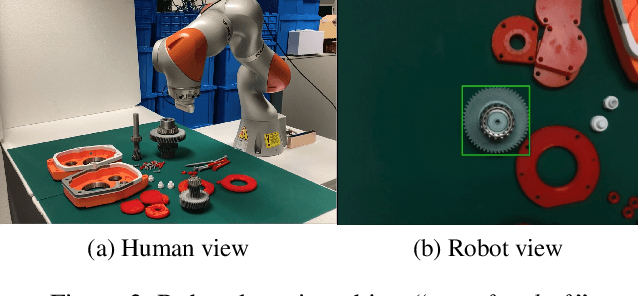

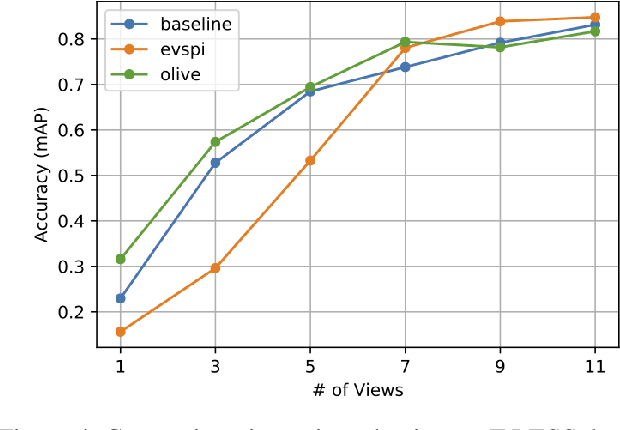

TAILOR: Teaching with Active and Incremental Learning for Object Registration

May 24, 2022

Abstract: When deploying a robot to a new task, one often has to train it to detect novel objects, which is time-consuming and labor-intensive. We present TAILOR -- a method and system for object registration with active and incremental learning. When instructed by a human teacher to register an object, TAILOR is able to automatically select viewpoints to capture informative images by actively exploring viewpoints, and employs a fast incremental learning algorithm to learn new objects without potential forgetting of previously learned objects. We demonstrate the effectiveness of our method with a KUKA robot to learn novel objects used in a real-world gearbox assembly task through natural interactions.

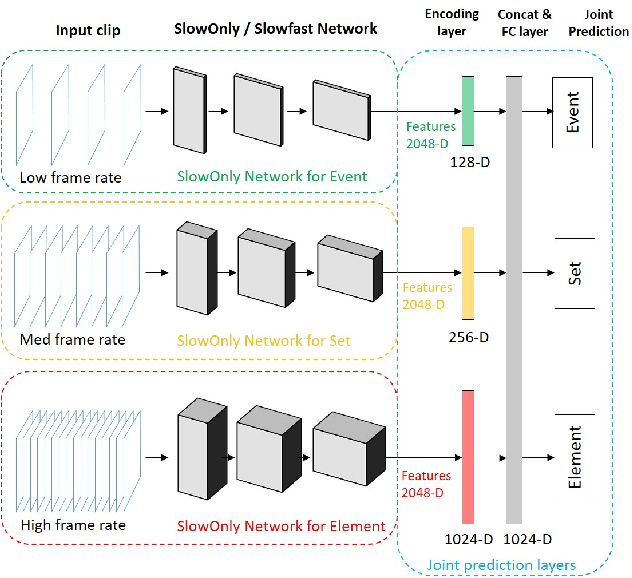

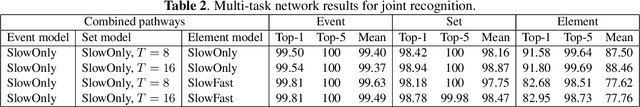

Joint Learning On The Hierarchy Representation for Fine-Grained Human Action Recognition

Oct 12, 2021

Abstract: Fine-grained human action recognition is a core research topic in computer vision. Inspired by the recently proposed hierarchy representation of fine-grained actions in FineGym and the SlowFast network for action recognition, we propose a novel multi-task network that exploits the FineGym hierarchy representation to achieve effective joint learning and prediction for fine-grained human action recognition. The multi-task network consists of three pathways of SlowOnly networks with gradually increasing frame rates for events, sets and elements of fine-grained actions, followed by our proposed integration layers for joint learning and prediction. It is a two-stage approach: it first learns a deep feature representation at each hierarchical level, and then performs feature encoding and fusion for multi-task learning. Our empirical results on the FineGym dataset achieve new state-of-the-art performance, with 91.80% Top-1 accuracy and 88.46% mean accuracy for element actions, which are 3.40% and 7.26% higher than the previous best results.

* Camera ready for IEEE ICIP 2021
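The abstract above reports both Top-1 accuracy and mean accuracy. The sketch below shows how the two can differ on an imbalanced label set, assuming that mean accuracy denotes accuracy averaged per class. The class names and predictions are hypothetical and are not taken from FineGym.

```python
from collections import defaultdict


def top1_accuracy(labels, predictions):
    """Fraction of samples whose top prediction matches the label."""
    correct = sum(1 for y, p in zip(labels, predictions) if y == p)
    return correct / len(labels)


def mean_class_accuracy(labels, predictions):
    """Average of per-class accuracies, so every class counts equally."""
    hits = defaultdict(int)
    counts = defaultdict(int)
    for y, p in zip(labels, predictions):
        counts[y] += 1
        hits[y] += int(y == p)
    return sum(hits[c] / counts[c] for c in counts) / len(counts)


# Hypothetical imbalanced example: four "vault" clips, one "beam" clip,
# and a model that always predicts "vault".
labels = ["vault", "vault", "vault", "vault", "beam"]
predictions = ["vault", "vault", "vault", "vault", "vault"]

top1 = top1_accuracy(labels, predictions)        # 4 of 5 samples correct
mean_acc = mean_class_accuracy(labels, predictions)  # (1.0 + 0.0) / 2
```

Because rare classes weigh as much as frequent ones in the per-class average, a larger gain in mean accuracy than in Top-1 accuracy is consistent with improvements on less frequent classes.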

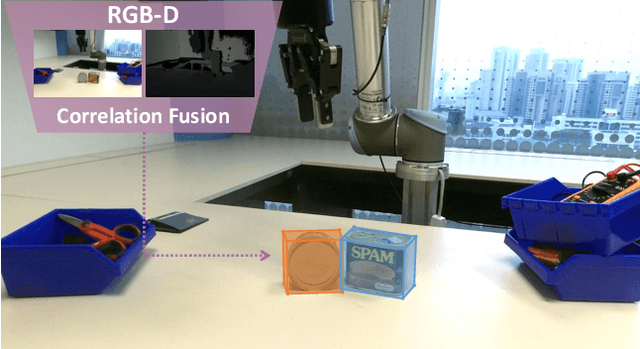

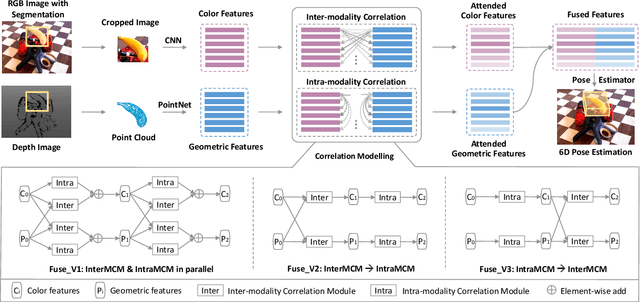

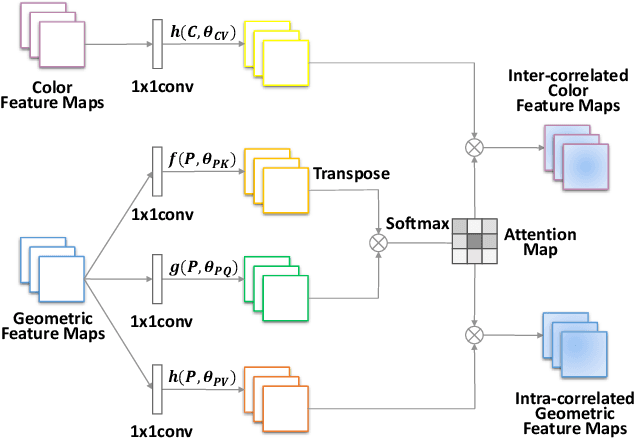

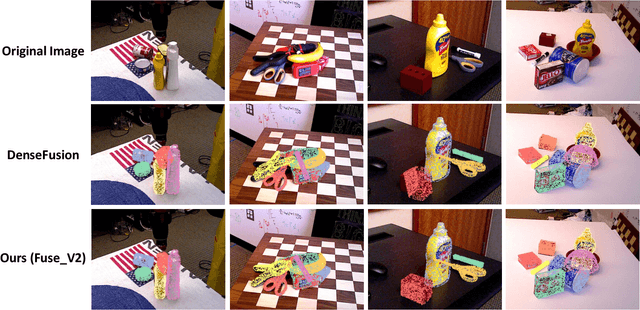

6D Pose Estimation with Correlation Fusion

Sep 24, 2019

Abstract: 6D object pose estimation is widely applied in robotic tasks such as grasping and manipulation. Prior methods using RGB-only images are vulnerable to heavy occlusion and poor illumination, so it is important to complement them with depth information. However, existing methods using RGB-D data do not adequately exploit the consistent and complementary information between the two modalities. In this paper, we present a novel method that uses an attention mechanism to model the correlation within and across the RGB and depth modalities and learn discriminative multi-modal features. Then, effective fusion strategies for the intra- and inter-correlation modules are explored to ensure efficient information flow between RGB and depth. To the best of our knowledge, this is the first work to explore effective intra- and inter-modality fusion in 6D pose estimation. Experimental results show that our method helps achieve state-of-the-art performance on the LineMOD and YCB-Video datasets and benefits a robot grasping task.
