Ling He

Wearable Device-Based Physiological Signal Monitoring: An Assessment Study of Cognitive Load Across Tasks

Jun 11, 2024

Abstract: This study uses wearable monitoring technology to assess cognitive load with high precision and high temporal resolution, drawing on EEG data from the FP1 channel and heart rate variability (HRV) data of secondary vocational students (SVS). By jointly analyzing these two physiological indicators, the research examines their value for assessing cognitive load among SVS and their utility across tasks. Two experiments were designed to validate the proposed approach. First, a random forest classification model, developed using the N-BACK task, decoded the physiological signal characteristics of SVS under different levels of cognitive load, achieving a classification accuracy of 97%. Second, this classification model was applied in a cross-task experiment involving the National Computer Rank Examination, demonstrating the method's applicability and cross-task transferability in diverse learning contexts. Because the monitoring setup is highly portable, this research has theoretical and practical significance for optimizing teaching resource allocation in secondary vocational education, as well as for cognitive load assessment and monitoring methods. The research findings are currently undergoing trial implementation in the school.

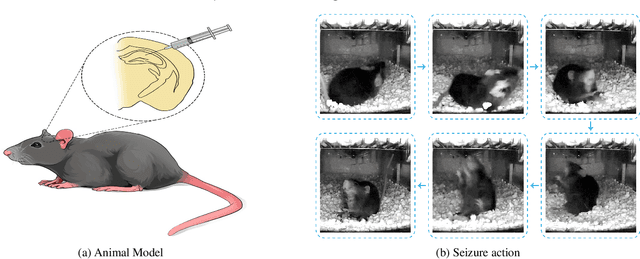

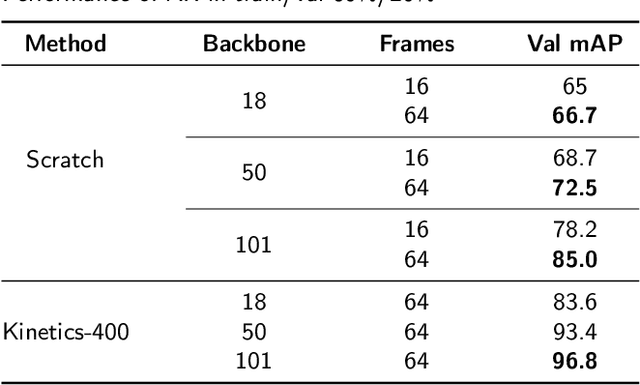

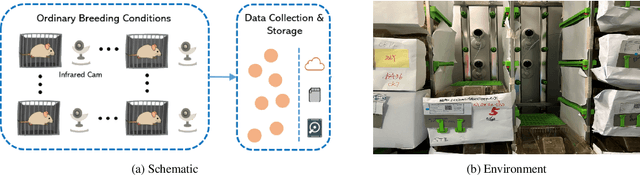

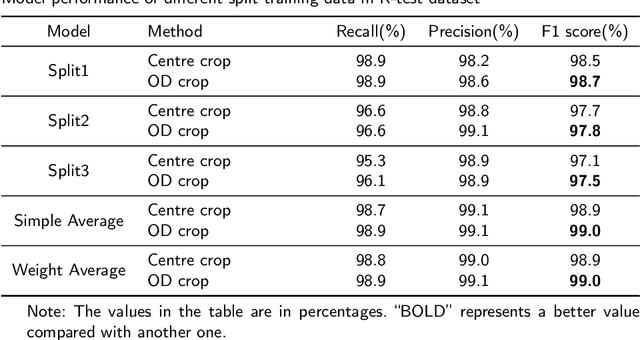

EPIDetect: Video-based convulsive seizure detection in chronic epilepsy mouse model for anti-epilepsy drug screening

May 31, 2024

Abstract: In preclinical translational studies, drug candidates with remarkable anti-epileptic efficacy demonstrate long-term suppression of spontaneous recurrent seizures (SRSs), particularly convulsive seizures (CSs), in mouse models of chronic epilepsy. However, current methods for monitoring CSs are limited by invasiveness, the need for specific laboratory settings, high cost, and complex operation, all of which hinder drug screening efforts. In this study, a camera-based system for automated detection of CSs in chronically epileptic mice is first established to screen potential anti-epilepsy drugs.

Delay Aware Secure Offloading for NOMA-Assisted Mobile Edge Computing in Internet of Vehicles

Aug 01, 2021

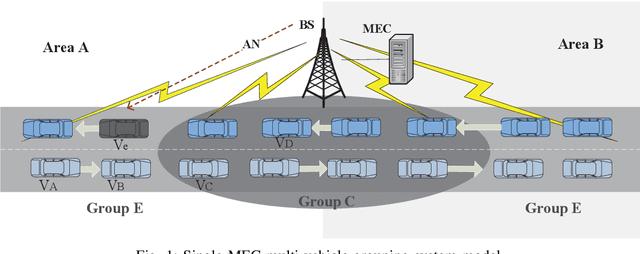

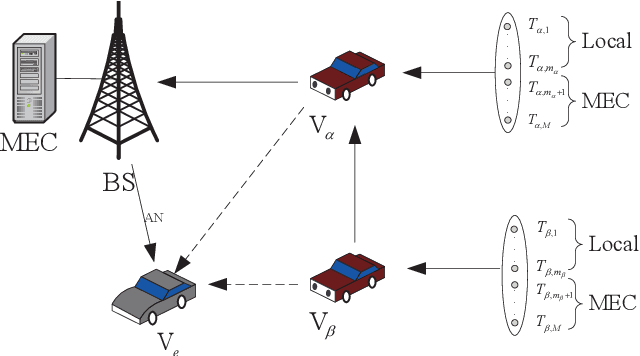

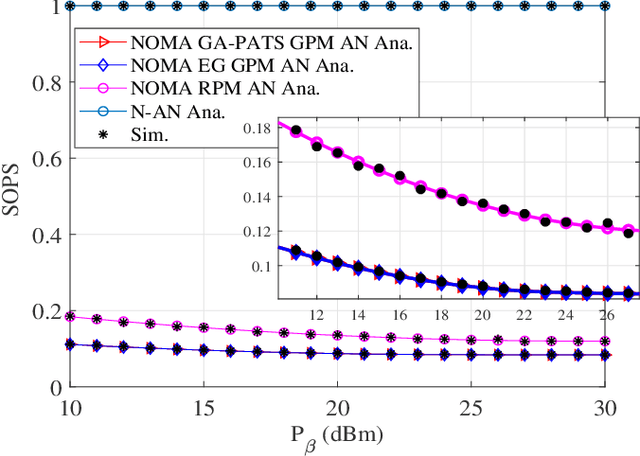

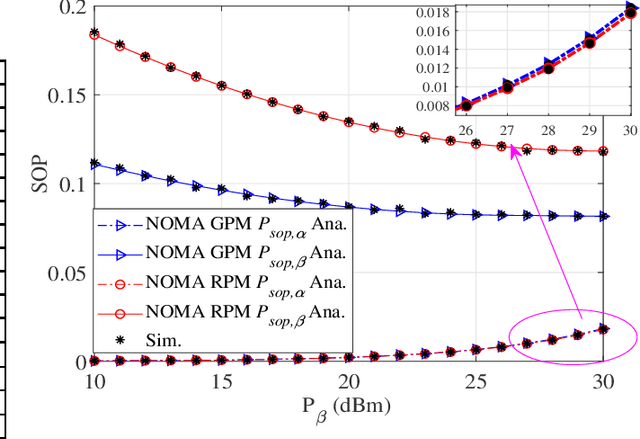

Abstract: In this paper, a multi-vehicle multi-task nonorthogonal multiple access (NOMA) assisted mobile edge computing (MEC) system with passive eavesdropping vehicles is investigated. To improve the performance of edge vehicles, we propose a vehicle grouping pairing method, which uses vehicles near the MEC as full-duplex relays to assist edge vehicles. To improve transmission security, we employ artificial noise to disrupt eavesdropping vehicles. Furthermore, we derive an approximate expression for the secrecy outage probability of the system. The joint optimization of vehicle task division, power allocation, and transmit beamforming is formulated to minimize the total task completion delay of edge vehicles. We then design a power allocation and task scheduling algorithm based on a genetic algorithm to solve the resulting mixed-integer nonlinear programming problem. Numerical results demonstrate the superiority of the proposed scheme in terms of system security and transmission delay.

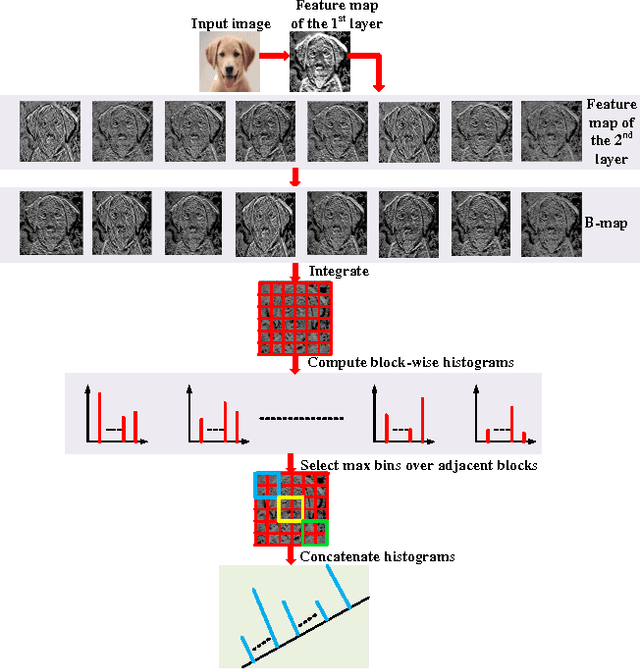

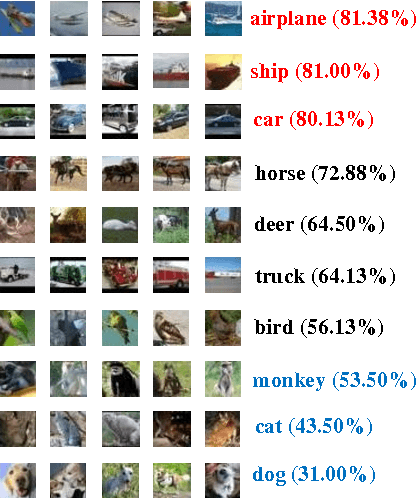

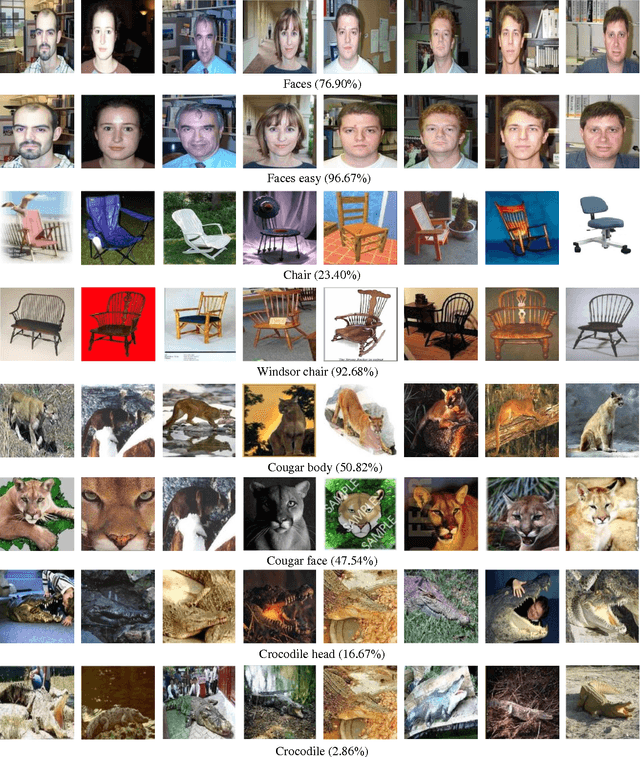

CUNet: A Compact Unsupervised Network for Image Classification

Jul 06, 2016

Abstract: In this paper, we propose a compact network called CUNet (compact unsupervised network) to address the image classification challenge. Unlike traditional convolutional neural networks, which learn filters by time-consuming stochastic gradient descent, CUNet learns the filter bank from diverse image patches with simple K-means, which largely avoids the need for scarce labeled training images, reduces training cost, and maintains high discriminative ability. In addition, we propose a new pooling method named weighted pooling, which considers the different weight values of adjacent neurons and helps to improve robustness to small image distortions. In the output layer, CUNet integrates the feature maps obtained in the last hidden layer and directly computes histograms in non-overlapping blocks. To reduce feature redundancy, we apply max-pooling to adjacent blocks to select the most competitive features. Comprehensive experiments demonstrate the state-of-the-art classification performance of CUNet on the CIFAR-10, STL-10, MNIST and Caltech101 benchmark datasets.
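The idea of learning a filter bank from image patches with K-means, rather than by gradient descent, can be illustrated with a small sketch. This is not the CUNet implementation: the patch size, the number of filters, the per-patch normalization, and the helper names (`extract_patches`, `normalize`, `kmeans_filters`) are all assumptions made for illustration, and the input is random synthetic data rather than a real dataset.

```python
import random


def extract_patches(image, size):
    """Return every size x size patch of a 2-D image as a flat list."""
    rows, cols = len(image), len(image[0])
    patches = []
    for r in range(rows - size + 1):
        for c in range(cols - size + 1):
            patches.append(
                [image[r + i][c + j] for i in range(size) for j in range(size)]
            )
    return patches


def normalize(patch):
    """Subtract the mean and scale to unit norm (assumed preprocessing)."""
    mean = sum(patch) / len(patch)
    centered = [v - mean for v in patch]
    norm = sum(v * v for v in centered) ** 0.5
    return [v / norm for v in centered] if norm > 0 else centered


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans_filters(patches, k, iterations=10, seed=0):
    """Plain Lloyd's K-means; the k centroids serve as the filter bank."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(patches, k)]
    for _ in range(iterations):
        # Assignment step: attach each patch to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in patches:
            nearest = min(range(k), key=lambda i: squared_distance(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its members.
        # An empty cluster keeps its previous centroid.
        for i, members in enumerate(clusters):
            if members:
                centroids[i] = [sum(col) / len(members) for col in zip(*members)]
    return centroids


# Synthetic stand-in data: five random 8x8 grayscale "images" (no labels needed).
data_rng = random.Random(42)
images = [[[data_rng.random() for _ in range(8)] for _ in range(8)] for _ in range(5)]

patches = [normalize(p) for img in images for p in extract_patches(img, 3)]
filters = kmeans_filters(patches, k=4)
print(len(filters), len(filters[0]))  # 4 filters, each a flattened 3x3 patch
```

Each centroid can be reshaped to 3x3 and convolved with an image like a learned filter. No labels enter the procedure at any point, which is the property the abstract highlights.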

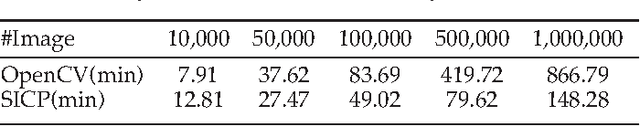

A Hierarchical Distributed Processing Framework for Big Image Data

Jul 03, 2016

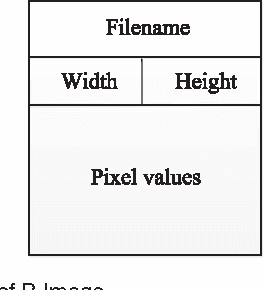

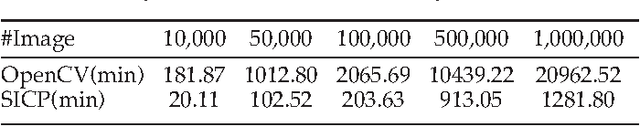

Abstract: This paper introduces an effective processing framework named ICP (Image Cloud Processing) to cope with the data explosion in the image processing field. While most previous research focuses on optimizing image processing algorithms to gain higher efficiency, our work is dedicated to providing a general framework for image processing algorithms that can be implemented in parallel, so as to improve time efficiency without compromising the quality of results as the image scale increases. The proposed ICP framework consists of two mechanisms, i.e. SICP (Static ICP) and DICP (Dynamic ICP). Specifically, SICP is aimed at processing big image data pre-stored in the distributed system, while DICP is proposed for dynamic input. To accomplish SICP, two novel data representations named P-Image and Big-Image are designed to cooperate with MapReduce to achieve a more optimized configuration and higher efficiency. DICP is implemented through a parallel processing procedure working with the traditional processing mechanism of the distributed system. Representative results of comprehensive experiments on the challenging ImageNet dataset are selected to validate the capacity of the proposed ICP framework over traditional state-of-the-art methods, both in time efficiency and quality of results.

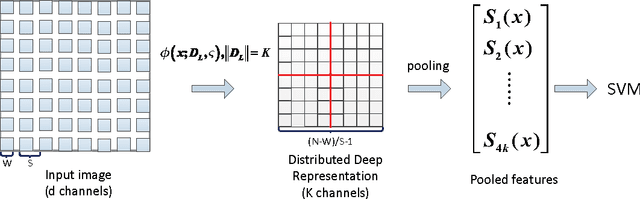

A Distributed Deep Representation Learning Model for Big Image Data Classification

Jul 02, 2016

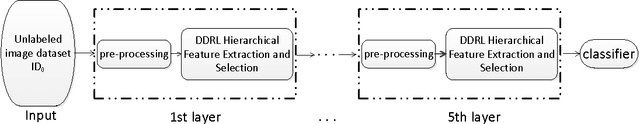

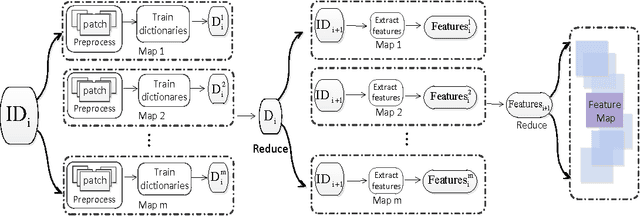

Abstract: This paper describes an effective and efficient image classification framework named the distributed deep representation learning model (DDRL). The aim is to strike a balance between computationally intensive deep learning approaches (tuned parameters), which are intended for distributed computing, and approaches that focus on designed parameters but are often limited by sequential computing and cannot scale up. In the evaluation of our approach, DDRL is shown to achieve state-of-the-art classification accuracy efficiently on both medium and large datasets. The result implies that our approach is more efficient than conventional deep learning approaches and can be applied to big data that is too complex for approaches focused on parameter design. More specifically, DDRL contains two main components, i.e., feature extraction and selection. A hierarchical distributed deep representation learning algorithm is designed to extract image statistics, and a nonlinear mapping algorithm is used to map the inherent statistics into abstract features. Both algorithms are carefully designed to avoid tuning millions of parameters. This leads to a more compact solution for image classification of big data. We note that the proposed approach is designed to be friendly to parallel computing. It is generic and easy to deploy on different distributed computing resources. In the experiments, large-scale image datasets are classified with a DDRL implementation on Hadoop MapReduce, which shows high scalability and resilience.
