Gaipeng Kong

CUNet: A Compact Unsupervised Network for Image Classification

Jul 06, 2016

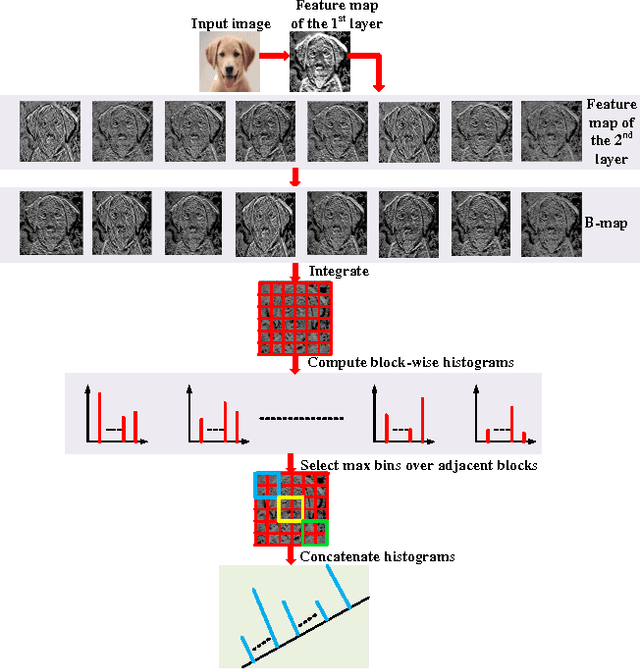

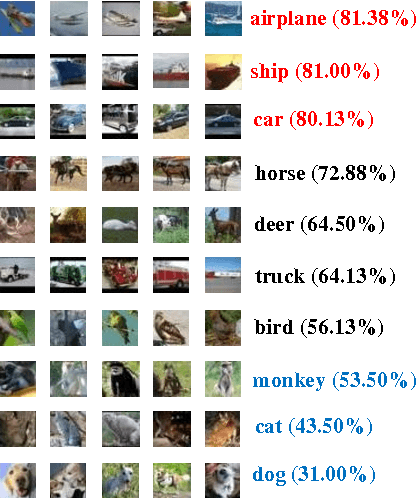

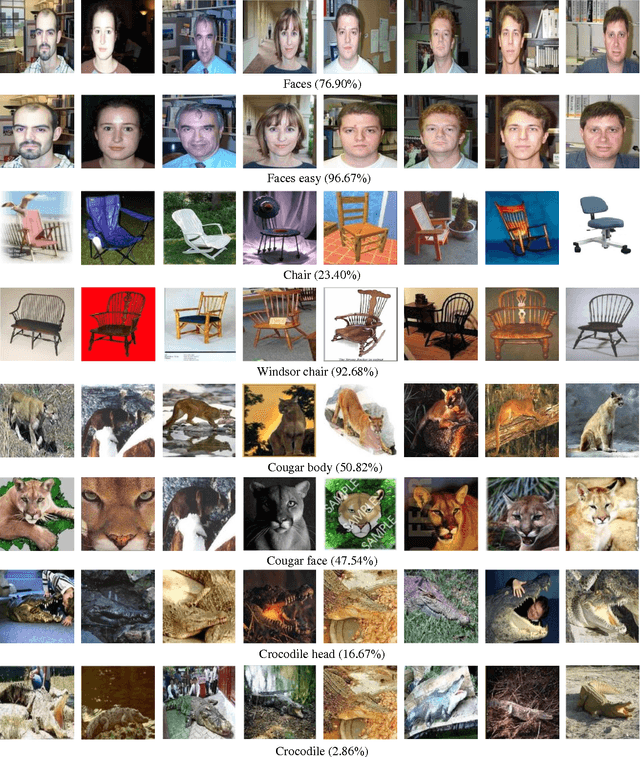

Abstract: In this paper, we propose a compact network called CUNet (compact unsupervised network) for image classification. Unlike traditional convolutional neural networks, which learn filters by time-consuming stochastic gradient descent, CUNet learns its filter bank from diverse image patches with simple K-means, which avoids the need for scarce labeled training images, reduces training cost, and maintains high discriminative ability. In addition, we propose a new pooling method, named weighted pooling, which takes into account the different weights of adjacent neurons and helps to improve robustness to small image distortions. In the output layer, CUNet integrates the feature maps obtained in the last hidden layer and directly computes histograms in non-overlapping blocks. To reduce feature redundancy, we apply max-pooling over adjacent blocks to select the most competitive features. Comprehensive experiments on the CIFAR-10, STL-10, MNIST and Caltech101 benchmark datasets demonstrate state-of-the-art classification performance with CUNet.
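The filter-learning step described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `learn_filters`, the patch size, the number of filters, the per-patch mean removal, and the plain Lloyd iterations are all assumptions chosen for illustration, and the abstract does not specify any of them.

```python
import numpy as np

def learn_filters(images, patch_size=5, num_filters=8,
                  num_patches=2000, iters=10, seed=0):
    """Learn a filter bank by running K-means on random image patches.

    images: array of shape (n, h, w), grayscale. No labels are used.
    All default values are illustrative assumptions, not values from the paper.
    """
    rng = np.random.default_rng(seed)
    n, h, w = images.shape

    # Sample random patch locations across the whole image set.
    idx = rng.integers(0, n, size=num_patches)
    ys = rng.integers(0, h - patch_size + 1, size=num_patches)
    xs = rng.integers(0, w - patch_size + 1, size=num_patches)
    patches = np.stack([
        images[i, y:y + patch_size, x:x + patch_size].ravel()
        for i, y, x in zip(idx, ys, xs)
    ]).astype(np.float64)

    # Remove the mean of each patch (an assumed preprocessing step).
    patches -= patches.mean(axis=1, keepdims=True)

    # Initialise centroids from distinct random patches.
    centroids = patches[rng.choice(num_patches, size=num_filters,
                                   replace=False)].copy()

    for _ in range(iters):
        # Squared Euclidean distance of every patch to every centroid.
        dists = ((patches[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        for k in range(num_filters):
            members = patches[labels == k]
            if len(members) > 0:  # keep the old centroid if a cluster is empty
                centroids[k] = members.mean(axis=0)

    # Each centroid is reshaped into one convolution filter.
    return centroids.reshape(num_filters, patch_size, patch_size)


if __name__ == "__main__":
    demo_images = np.random.default_rng(1).random((10, 16, 16))
    print(learn_filters(demo_images).shape)
```

The point of the sketch is only that the filters come from clustering unlabeled patches, so no gradient-based training or label information is involved.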

Coarse2Fine: Two-Layer Fusion For Image Retrieval

Jul 04, 2016

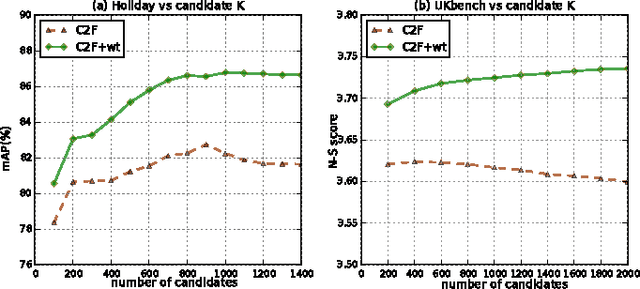

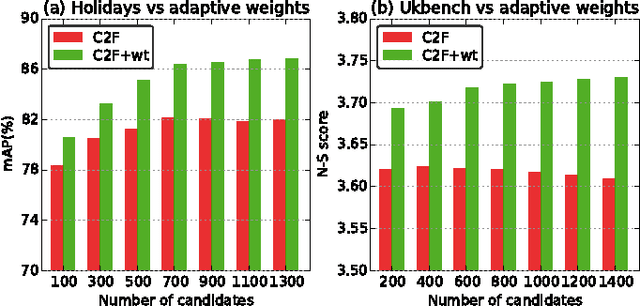

Abstract: This paper addresses the problem of large-scale image retrieval. We propose a two-layer fusion method that takes advantage of global and local cues and ranks database images from coarse to fine (C2F). Departing from previous methods that fuse multiple image descriptors simultaneously, C2F features a layered procedure composed of filtering and refining. In particular, C2F consists of three components. 1) Distractor filtering. With holistic representations, noise images are filtered out from the database, so the number of candidate images to be compared with the query can be greatly reduced. 2) Adaptive weighting. For a given query, the similarity of candidate images is estimated by holistic similarity scores that complement the local ones. 3) Candidate refining. Accurate retrieval is conducted via local features, combined with the pre-computed adaptive weights. Experiments are presented on two benchmarks, i.e., the Holidays and Ukbench datasets. We show that our method outperforms recent fusion methods in terms of storage consumption and computational complexity, and that its accuracy is competitive with the state of the art.
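The three components above can be sketched as a small ranking routine. This is a hedged illustration under stated assumptions, not the method as published: the function name `coarse_to_fine_rank`, the use of cosine similarity on global descriptors, the clipped holistic similarity as the adaptive weight, and the multiplicative combination with a precomputed local score are all hypothetical choices, since the abstract does not give the exact formulas.

```python
import numpy as np

def coarse_to_fine_rank(query_global, db_global, local_scores, keep=3):
    """Rank database images in two layers: filter coarsely, then refine.

    query_global: (d,) holistic descriptor of the query.
    db_global:    (n, d) holistic descriptors of the database images.
    local_scores: (n,) local-feature match scores against the query
                  (assumed to be computed elsewhere).
    keep:         number of candidates that survive the filtering stage.
    """
    # Holistic (global) similarity: cosine similarity is an assumption.
    q = query_global / np.linalg.norm(query_global)
    db = db_global / np.linalg.norm(db_global, axis=1, keepdims=True)
    holistic = db @ q

    # 1) Distractor filtering: keep only the most similar images.
    candidates = np.argsort(-holistic)[:keep]

    # 2) Adaptive weighting: here simply the non-negative holistic
    #    similarity of each surviving candidate (assumed form).
    weights = np.clip(holistic[candidates], 0.0, None)

    # 3) Candidate refining: weight the local scores and re-rank
    #    (multiplicative fusion is an assumption).
    refined = weights * local_scores[candidates]
    return candidates[np.argsort(-refined)]


if __name__ == "__main__":
    db = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.8, 0.2]])
    query = np.array([1.0, 0.0])
    local = np.array([1.0, 5.0, 100.0, 3.0])
    print(coarse_to_fine_rank(query, db, local, keep=3))
```

In the demo, the third database image has a very high local score but a holistic similarity of zero, so it is discarded in the filtering stage and never reaches the more expensive local comparison.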
