Leilei Cao

Enhancing Sa2VA for Referent Video Object Segmentation: 2nd Solution for 7th LSVOS RVOS Track

Sep 19, 2025

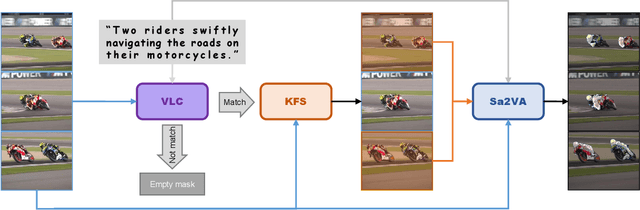

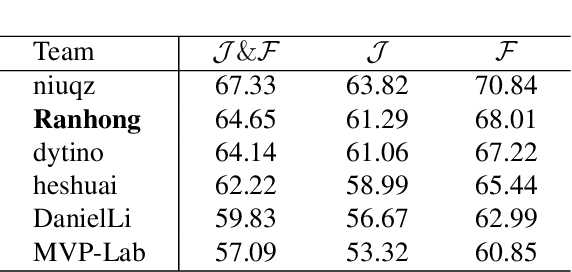

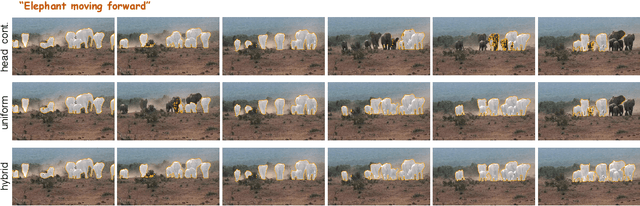

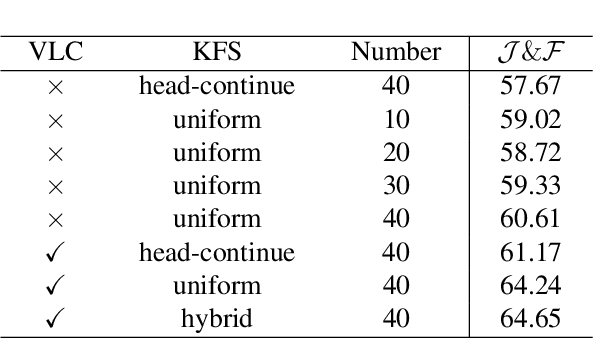

Abstract: Referential Video Object Segmentation (RVOS) aims to segment all objects in a video that match a given natural language description, bridging the gap between vision and language understanding. Recent work, such as Sa2VA, combines Large Language Models (LLMs) with SAM 2, leveraging the strong video reasoning capability of LLMs to guide video segmentation. In this work, we present a training-free framework that substantially improves Sa2VA's performance on the RVOS task. Our method introduces two key components: (1) a Video-Language Checker that explicitly verifies whether the subject and action described in the query actually appear in the video, thereby reducing false positives; and (2) a Key-Frame Sampler that adaptively selects informative frames to better capture both early object appearances and long-range temporal context. Without any additional training, our approach achieves a J&F score of 64.14% on the MeViS test set, ranking 2nd in the RVOS track of the 7th LSVOS Challenge at ICCV 2025.

Pseudo-Label Enhanced Cascaded Framework: 2nd Technical Report for LSVOS 2025 VOS Track

Sep 18, 2025

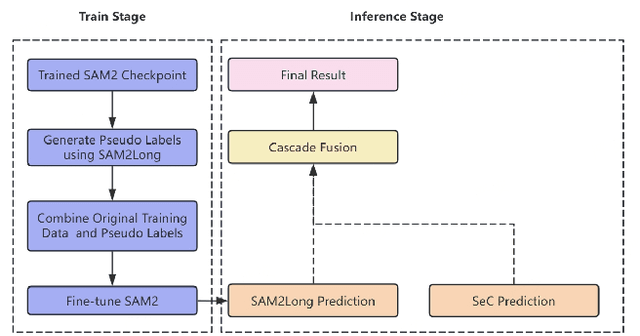

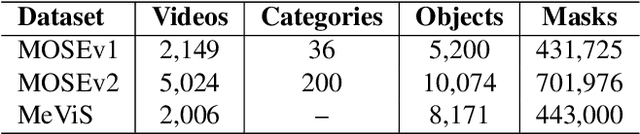

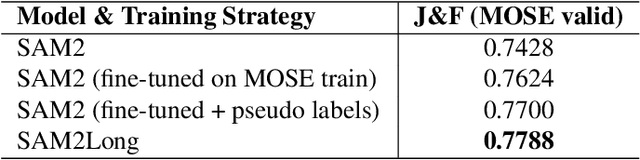

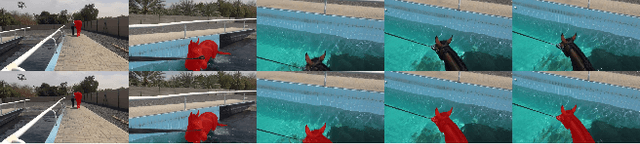

Abstract: Complex Video Object Segmentation (VOS) presents significant challenges in accurately segmenting objects across frames, especially in the presence of small and similar targets, frequent occlusions, rapid motion, and complex interactions. In this report, we present our solution for the LSVOS 2025 VOS Track based on the SAM2 framework. We adopt a pseudo-labeling strategy during training: a trained SAM2 checkpoint is deployed within the SAM2Long framework to generate pseudo labels for the MOSE test set, which are then combined with existing data for further training. For inference, the SAM2Long framework is employed to obtain our primary segmentation results, while an open-source SeC model runs in parallel to produce complementary predictions. A cascaded decision mechanism dynamically integrates outputs from both models, exploiting the temporal stability of SAM2Long and the concept-level robustness of SeC. Benefiting from pseudo-label training and cascaded multi-model inference, our approach achieves a J&F score of 0.8616 on the MOSE test set (+1.4 points over our SAM2Long baseline), securing 2nd place in the LSVOS 2025 VOS Track and demonstrating strong robustness and accuracy in long, complex video segmentation scenarios.

Inter- and intra-uncertainty based feature aggregation model for semi-supervised histopathology image segmentation

Mar 19, 2024

Abstract: Acquiring pixel-level annotations is often limited in applications such as histology studies that require domain expertise. Various semi-supervised learning approaches have been developed to work with limited ground truth annotations, such as the popular teacher-student models. However, hierarchical prediction uncertainty within the student model (intra-uncertainty) and image prediction uncertainty (inter-uncertainty) have not been fully utilized by existing methods. To address these issues, we first propose a novel inter- and intra-uncertainty regularization method to measure and constrain both inter- and intra-inconsistencies in the teacher-student architecture. We also propose a new two-stage network with pseudo-mask guided feature aggregation (PG-FANet) as the segmentation model. The two-stage structure complements the uncertainty regularization strategy, so no extra modules are needed to handle the uncertainties, and the aggregation mechanisms enable multi-scale and multi-stage feature integration. Comprehensive experimental results on the MoNuSeg and CRAG datasets show that our PG-FANet outperforms other state-of-the-art methods and that our semi-supervised learning framework yields competitive performance with a limited amount of labeled data.

Prototype as Query for Few Shot Semantic Segmentation

Nov 27, 2022

Abstract: Few-shot Semantic Segmentation (FSS) was proposed to segment unseen classes in a query image by referring to only a few annotated examples, called support images. One characteristic of FSS is the spatial inconsistency between query and support targets, e.g., in texture or appearance. This greatly challenges the generalization ability of FSS methods, which must effectively exploit the dependency between the query image and the support examples. Most existing methods abstract support features into prototype vectors and implement the interaction with query features using cosine similarity or feature concatenation. However, this simple interaction may not capture spatial details in query features. To alleviate this limitation, a few methods use all pixel-wise support information by computing pixel-wise correlations between paired query and support features with the attention mechanism of the Transformer. These approaches suffer from the heavy computation of dot-product attention between all pixels of the support and query features. In this paper, we propose a simple yet effective Transformer-based framework, termed ProtoFormer, to fully capture spatial details in query features. It treats the abstracted prototype of the target class in the support features as the Query and the query features as the Key and Value embeddings, which are input to the Transformer decoder. In this way, spatial details are better captured and the model can focus on the semantic features of the target class in the query image. The output of the Transformer-based module can be viewed as semantic-aware dynamic kernels that filter the segmentation mask out of the enriched query features. Extensive experiments on PASCAL-$5^{i}$ and COCO-$20^{i}$ show that our ProtoFormer significantly advances the state of the art.
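The prototype-based interaction that the abstract attributes to most existing methods can be illustrated with a small sketch. It is not ProtoFormer itself, and none of it comes from the paper's code: the function names, the flat list-of-pixels feature layout, and the use of masked average pooling to form the prototype are all assumptions made for illustration.

```python
import math


def masked_average_prototype(support_features, support_mask):
    """Average the support feature vectors of pixels where the mask is 1.

    support_features: list of per-pixel feature vectors (hypothetical layout).
    support_mask: list of 0/1 labels, one per pixel.
    """
    dim = len(support_features[0])
    total = [0.0] * dim
    count = 0
    for vec, m in zip(support_features, support_mask):
        if m:
            count += 1
            for i, v in enumerate(vec):
                total[i] += v
    if count == 0:
        raise ValueError("support mask selects no pixels")
    return [t / count for t in total]


def cosine_similarity(a, b, eps=1e-8):
    """Cosine similarity between two vectors, with eps to avoid division by zero."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b + eps)


def similarity_map(query_features, prototype):
    """Score every query pixel against the single class prototype."""
    return [cosine_similarity(q, prototype) for q in query_features]
```

Because every query pixel is compared with one pooled vector, the spatial layout of the support target is discarded, which is the limitation the abstract points out.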

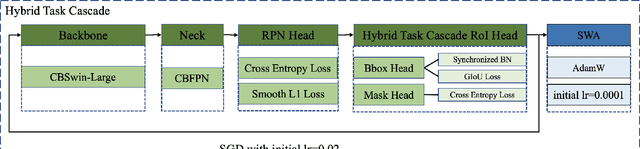

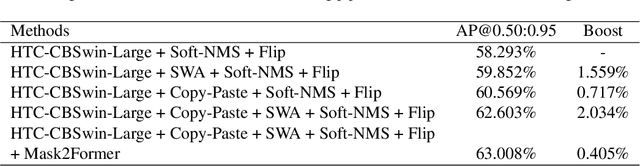

The Third Place Solution for CVPR2022 AVA Accessibility Vision and Autonomy Challenge

Jun 28, 2022

Abstract: The goal of the AVA challenge is to provide vision-based benchmarks and methods relevant to accessibility. In this paper, we introduce the technical details of our submission to the CVPR2022 AVA Challenge. First, we conducted experiments to select a suitable model and data augmentation strategy for this task. Second, an effective training strategy was applied to improve performance. Third, we integrated the results from two different segmentation frameworks to improve performance further. Experimental results demonstrate that our approach achieves a competitive result on the AVA test set: 63.008% AP@0.50:0.95 on the test set of the CVPR2022 AVA Challenge.

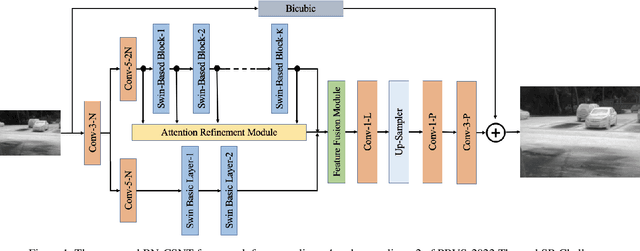

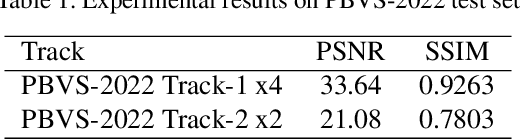

Bilateral Network with Channel Splitting Network and Transformer for Thermal Image Super-Resolution

Jun 24, 2022

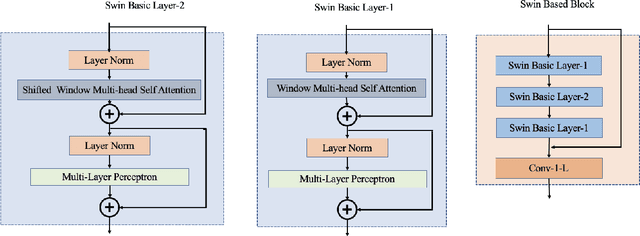

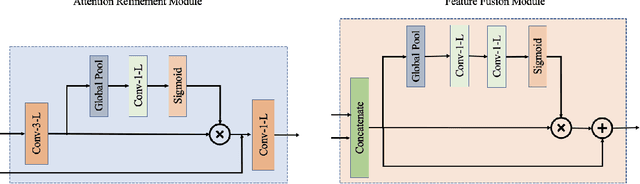

Abstract: In recent years, Thermal Image Super-Resolution (TISR) has become an attractive research topic. TISR can be used in a wide range of fields, including military, medical, agricultural, and animal ecology applications. Owing to the success of the PBVS-2020 and PBVS-2021 workshop challenges, TISR results keep improving, attracting more researchers to sign up for the PBVS-2022 challenge. In this paper, we introduce the technical details of our submission to the PBVS-2022 challenge, for which we designed a Bilateral Network with Channel Splitting Network and Transformer (BN-CSNT) to tackle the TISR problem. First, we designed a context branch based on a channel splitting network with a transformer to obtain sufficient context information. Second, we designed a spatial branch with a shallow transformer to extract low-level features that preserve spatial information. Finally, within the context branch, we proposed an attention refinement module to fuse the features from the channel splitting network and the transformer; the features from the context branch and the spatial branch are then fused by the proposed feature fusion module. The proposed method achieves PSNR=33.64, SSIM=0.9263 for x4 and PSNR=21.08, SSIM=0.7803 for x2 on the PBVS-2022 challenge test dataset.
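For readers unfamiliar with the PSNR figures quoted above, the following is a minimal sketch of the standard definition, PSNR = 10 log10(MAX^2 / MSE). The flat-list image representation and the default peak value of 255 are assumptions for illustration; the challenge's actual evaluation protocol is not described in the abstract.

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized pixel lists.

    max_value is the assumed peak intensity (255 for 8-bit images).
    """
    if not reference or len(reference) != len(estimate):
        raise ValueError("inputs must be non-empty and the same length")
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)
```

Higher values indicate a reconstruction closer to the reference.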

The Second Place Solution for The 4th Large-scale Video Object Segmentation Challenge--Track 3: Referring Video Object Segmentation

Jun 24, 2022

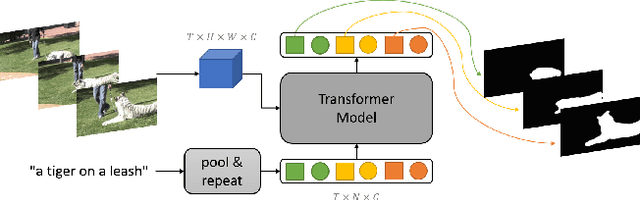

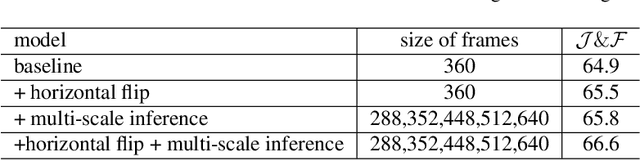

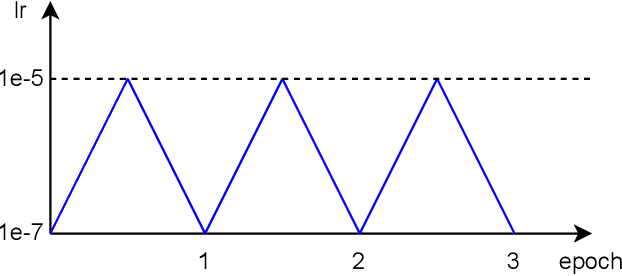

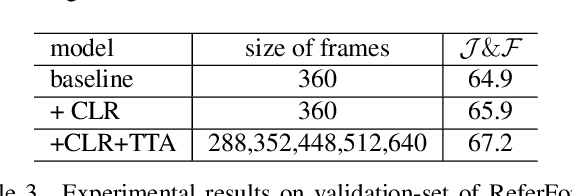

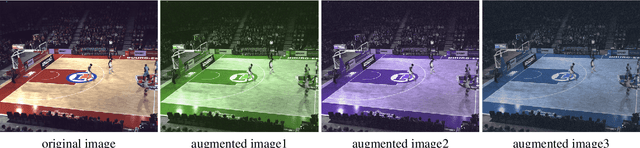

Abstract: The referring video object segmentation (RVOS) task aims to segment, in all video frames, the object instances in a given video that are referred to by a language expression. Because it requires understanding cross-modal semantics within individual instances, this task is more challenging than traditional semi-supervised video object segmentation, where the ground truth object masks in the first frame are given. With the great success of the Transformer in object detection and object segmentation, RVOS has made remarkable progress, with ReferFormer achieving state-of-the-art performance. In this work, building on the strong ReferFormer baseline, we propose several tricks to boost performance further, including cyclical learning rates, a semi-supervised approach, and test-time augmentation inference. The improved ReferFormer ranks 2nd in the CVPR2022 Referring Youtube-VOS Challenge.
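The abstract does not say which cyclical learning rate schedule was used. As one common possibility, the sketch below implements a triangular policy in which the rate rises linearly from a lower bound to an upper bound and back again; the function name, parameters, and the choice of the triangular shape are assumptions, not details from the report.

```python
def triangular_lr(step, base_lr, max_lr, half_cycle):
    """Learning rate at a given step under a triangular cyclical schedule.

    The rate climbs from base_lr to max_lr over half_cycle steps,
    then descends back to base_lr over the next half_cycle steps.
    """
    if half_cycle <= 0:
        raise ValueError("half_cycle must be positive")
    position = step % (2 * half_cycle)
    if position <= half_cycle:
        fraction = position / half_cycle        # rising half
    else:
        fraction = 2.0 - position / half_cycle  # falling half
    return base_lr + (max_lr - base_lr) * fraction
```

For example, with `half_cycle=4` the rate peaks at steps 4, 12, 20 and returns to `base_lr` at steps 0, 8, 16.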

The Second Place Solution for ICCV2021 VIPriors Instance Segmentation Challenge

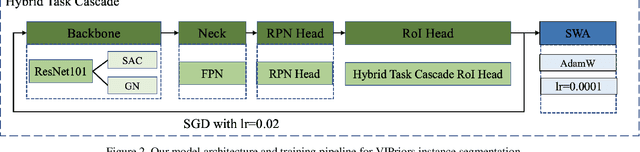

Dec 02, 2021

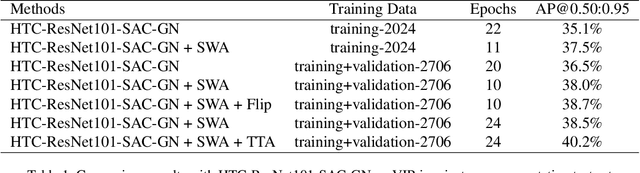

Abstract: The Visual Inductive Priors (VIPriors) for Data-Efficient Computer Vision challenges ask competitors to train models from scratch in a data-deficient setting. In this paper, we introduce the technical details of our submission to the ICCV2021 VIPriors instance segmentation challenge. First, we designed an effective data augmentation method to mitigate the data deficiency problem. Second, we conducted experiments to select a suitable model and made some improvements for this task. Third, we proposed an effective training strategy that improves performance. In accordance with the competition rules, we do not use any external image or video data or pre-trained weights. The implementation details are described in Sections 2 and 3. Experimental results demonstrate that our approach achieves a competitive result on the test set: 40.2% AP@0.50:0.95 on the test set of the ICCV2021 VIPriors instance segmentation challenge.

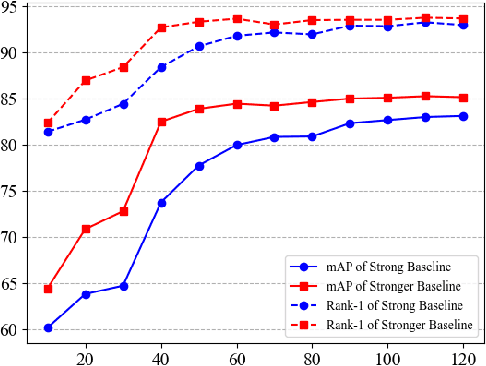

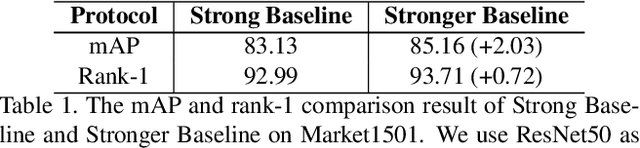

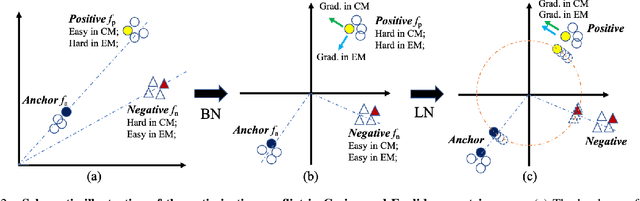

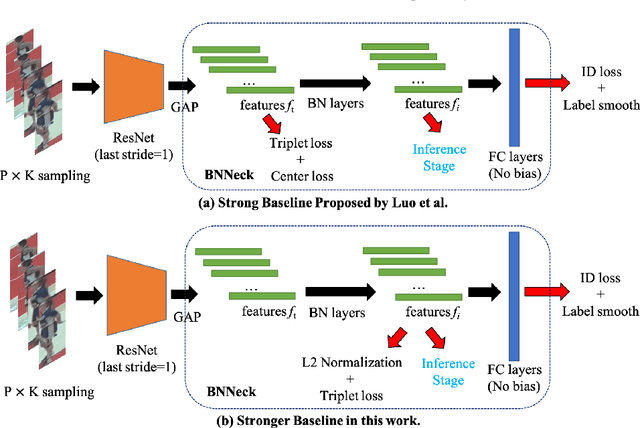

Stronger Baseline for Person Re-Identification

Dec 02, 2021

Abstract: Person re-identification (re-ID) aims to identify the same person of interest across non-overlapping cameras and plays an important role in visual surveillance applications and computer vision research. Fitting a robust appearance-based representation extractor with limited training data is crucial for person re-ID because of the high expense of annotating the identities in unlabeled data. In this work, we propose a Stronger Baseline for person re-ID, an enhanced version of the currently prevailing method, Strong Baseline, with tiny modifications but a faster convergence rate and higher recognition performance. With the aid of Stronger Baseline, we obtained third place (0.94 mAP) in the 2021 VIPriors Re-identification Challenge without ImageNet-based pre-trained parameter initialization or any extra supplemental dataset.

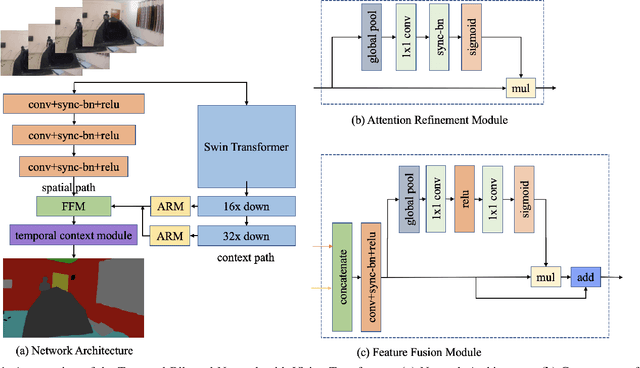

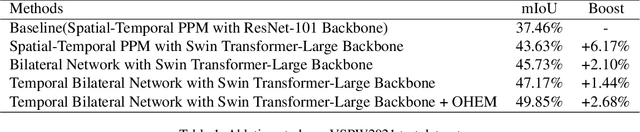

TBN-ViT: Temporal Bilateral Network with Vision Transformer for Video Scene Parsing

Dec 02, 2021

Abstract: Video scene parsing in the wild with diverse scenarios is a challenging task of great significance, especially with the rapid development of autonomous driving techniques. The Video Scene Parsing in the Wild (VSPW) dataset contains well-trimmed, long-temporal, densely annotated, high-resolution clips. Based on VSPW, we design a Temporal Bilateral Network with Vision Transformer. We first design a spatial path with convolutions to generate low-level features that preserve spatial information. Meanwhile, a context path with a vision transformer is employed to obtain sufficient context information. Furthermore, a temporal context module is designed to harness inter-frame contextual information. Finally, the proposed method achieves a mean intersection over union (mIoU) of 49.85% on the VSPW2021 Challenge test dataset.
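The mIoU metric reported above can be sketched as follows. This is an illustrative implementation of the usual definition (per-class intersection over union, averaged across classes), not the challenge's evaluation code; the flat label lists and the choice to skip classes absent from both prediction and ground truth are assumptions.

```python
def mean_iou(pred, target, num_classes):
    """Mean intersection over union for two equally sized lists of class labels."""
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    ious = []
    for c in range(num_classes):
        intersection = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union > 0:  # assumption: classes absent from both are ignored
            ious.append(intersection / union)
    if not ious:
        raise ValueError("no class is present in pred or target")
    return sum(ious) / len(ious)
```

With `pred = [0, 0, 1, 1]` and `target = [0, 1, 1, 1]`, class 0 has IoU 1/2 and class 1 has IoU 2/3, so the mean is 7/12.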
