Latifur Khan

PPSEBM: An Energy-Based Model with Progressive Parameter Selection for Continual Learning

Dec 17, 2025

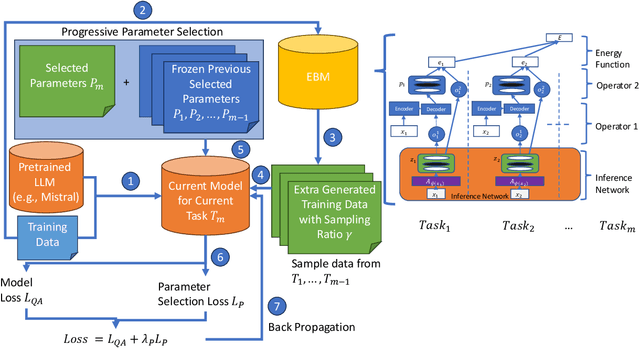

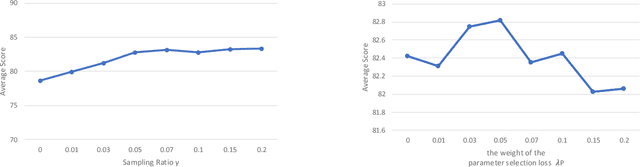

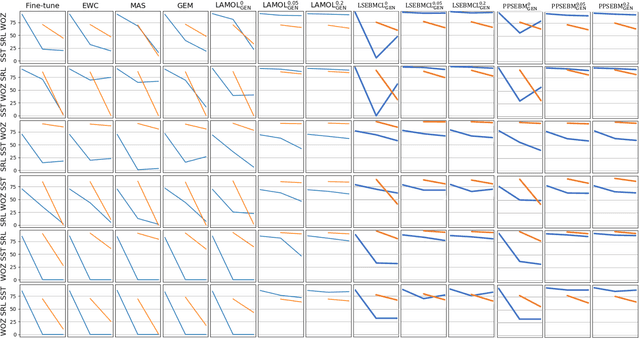

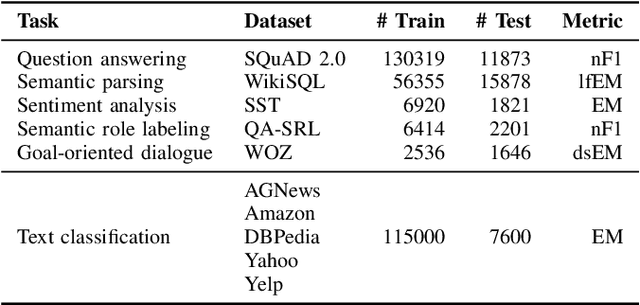

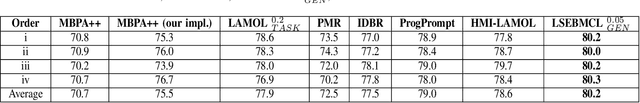

Abstract: Continual learning remains a fundamental challenge in machine learning, requiring models to learn from a stream of tasks without forgetting previously acquired knowledge. A major obstacle in this setting is catastrophic forgetting, where performance on earlier tasks degrades as new tasks are learned. In this paper, we introduce PPSEBM, a novel framework that integrates an Energy-Based Model (EBM) with Progressive Parameter Selection (PPS) to effectively address catastrophic forgetting in continual learning for natural language processing tasks. In PPSEBM, progressive parameter selection allocates distinct, task-specific parameters for each new task, while the EBM generates representative pseudo-samples from prior tasks. These generated samples actively inform and guide the parameter selection process, enhancing the model's ability to retain past knowledge while adapting to new tasks. Experimental results on diverse NLP benchmarks demonstrate that PPSEBM outperforms state-of-the-art continual learning methods, offering a promising and robust solution to mitigate catastrophic forgetting.

Retrieval Augmented Generation-based Large Language Models for Bridging Transportation Cybersecurity Legal Knowledge Gaps

May 23, 2025

Abstract: As connected and automated transportation systems evolve, there is a growing need for federal and state authorities to revise existing laws and develop new statutes to address emerging cybersecurity and data privacy challenges. This study introduces a Retrieval-Augmented Generation (RAG) based Large Language Model (LLM) framework designed to support policymakers by extracting relevant legal content and generating accurate, inquiry-specific responses. The framework focuses on reducing hallucinations in LLMs by using a curated set of domain-specific questions to guide response generation. By incorporating retrieval mechanisms, the system enhances the factual grounding and specificity of its outputs. Our analysis shows that the proposed RAG-based LLM outperforms leading commercial LLMs across four evaluation metrics: AlignScore, ParaScore, BERTScore, and ROUGE, demonstrating its effectiveness in producing reliable and context-aware legal insights. This approach offers a scalable, AI-driven method for legislative analysis, supporting efforts to update legal frameworks in line with advancements in transportation technologies.

Graph-Based Re-ranking: Emerging Techniques, Limitations, and Opportunities

Mar 19, 2025

Abstract: Knowledge graphs have emerged as promising datastore candidates for context augmentation during Retrieval Augmented Generation (RAG). As a result, techniques in graph representation learning have been explored alongside principal neural information retrieval approaches, such as two-phased retrieval, also known as re-ranking. While Graph Neural Networks (GNNs) have been proposed to demonstrate proficiency in graph learning for re-ranking, there are ongoing limitations in modeling and evaluating input graph structures for training and evaluation on passage and document ranking tasks. In this survey, we review emerging GNN-based ranking model architectures along with their corresponding graph representation construction methodologies. We conclude by providing recommendations on future research based on community-wide challenges and opportunities.

LSEBMCL: A Latent Space Energy-Based Model for Continual Learning

Jan 09, 2025

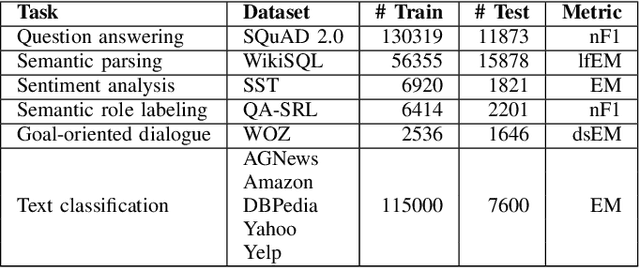

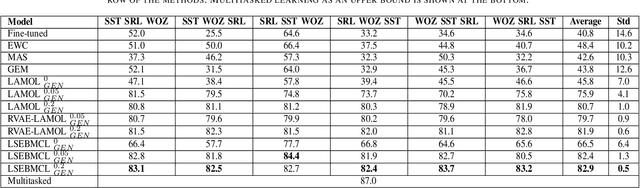

Abstract: Continual learning has become essential in many practical applications such as online news summaries and product classification. The primary challenge is known as catastrophic forgetting, a phenomenon where a model inadvertently discards previously learned knowledge when it is trained on new tasks. Existing solutions involve storing exemplars from previous classes, regularizing parameters during the fine-tuning process, or assigning different model parameters to each task. The solution proposed in this work, LSEBMCL (Latent Space Energy-Based Model for Continual Learning), uses energy-based models (EBMs) to prevent catastrophic forgetting by sampling data points from previous tasks when training on new ones. An EBM is a machine learning model that associates an energy value with each input data point. The proposed method uses an EBM layer as an outer-generator in the continual learning framework for NLP tasks. The study demonstrates the efficacy of EBMs in NLP tasks, achieving state-of-the-art results in all experiments.

ConfliBERT: A Language Model for Political Conflict

Dec 19, 2024

Abstract: Conflict scholars have used rule-based approaches to extract information about political violence from news reports and texts. Recent Natural Language Processing developments move beyond rigid rule-based approaches. We review our recent ConfliBERT language model (Hu et al. 2022) to process political and violence related texts. The model can be used to extract actor and action classifications from texts about political conflict. When fine-tuned, results show that ConfliBERT has superior performance in accuracy, precision, and recall over other large language models (LLMs) like Google's Gemma 2 (9B), Meta's Llama 3.1 (7B), and Alibaba's Qwen 2.5 (14B) within its relevant domains. It is also hundreds of times faster than these more generalist LLMs. These results are illustrated using texts from the BBC, re3d, and the Global Terrorism Dataset (GTD).

Dynamic Environment Responsive Online Meta-Learning with Fairness Awareness

Feb 19, 2024

Abstract: The fairness-aware online learning framework has emerged as a potent tool within the context of continuous lifelong learning. In this scenario, the learner's objective is to progressively acquire new tasks as they arrive over time, while also guaranteeing statistical parity among various protected sub-populations, such as race and gender, on the newly introduced tasks. A significant limitation of current approaches lies in their heavy reliance on the i.i.d. (independent and identically distributed) assumption concerning data, leading to a static regret analysis of the framework. However, achieving low static regret does not necessarily translate to strong performance in dynamic environments characterized by tasks sampled from diverse distributions. In this paper, to tackle the fairness-aware online learning challenge in evolving settings, we introduce a unique regret measure, FairSAR, by incorporating long-term fairness constraints into a strongly adapted loss regret framework. Moreover, to determine an optimal model parameter at each time step, we introduce an innovative adaptive fairness-aware online meta-learning algorithm, referred to as FairSAOML. This algorithm possesses the ability to adjust to dynamic environments by effectively managing bias control and model accuracy. The problem is framed as a bi-level convex-concave optimization, considering both the model's primal and dual parameters, which pertain to its accuracy and fairness attributes, respectively. Theoretical analysis yields sub-linear upper bounds for both loss regret and the cumulative violation of fairness constraints. Our experimental evaluation on various real-world datasets in dynamic environments demonstrates that our proposed FairSAOML algorithm consistently outperforms alternative approaches rooted in the most advanced prior online learning methods.

Fairness-Aware Domain Generalization under Covariate and Dependence Shifts

Nov 23, 2023

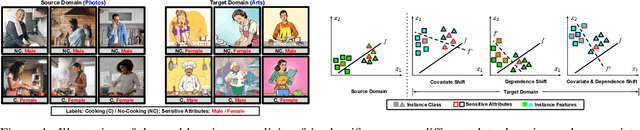

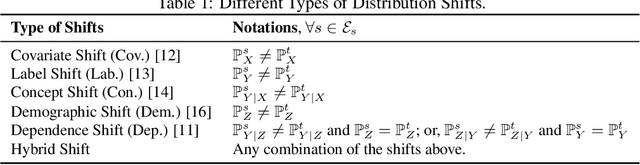

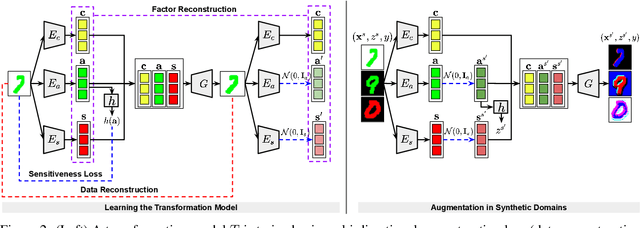

Abstract: Achieving the generalization of an invariant classifier from source domains to shifted target domains while simultaneously considering model fairness is a substantial and complex challenge in machine learning. Existing domain generalization research typically attributes domain shifts to concept shift, which relates to alterations in class labels, and covariate shift, which pertains to variations in data styles. In this paper, by introducing another form of distribution shift, known as dependence shift, which involves variations in fair dependence patterns across domains, we propose a novel domain generalization approach that addresses domain shifts by considering both covariate and dependence shifts. We assert the existence of an underlying transformation model that can transform data from one domain to another. By generating data in synthetic domains through the model, a fairness-aware invariant classifier is learned that enforces both model accuracy and fairness in unseen domains. Extensive empirical studies on four benchmark datasets demonstrate that our approach surpasses state-of-the-art methods.

Synthesizing Political Zero-Shot Relation Classification via Codebook Knowledge, NLI, and ChatGPT

Aug 15, 2023

Abstract: Recent supervised models for event coding vastly outperform pattern-matching methods. However, their reliance solely on new annotations disregards the vast knowledge within expert databases, hindering their applicability to fine-grained classification. To address these limitations, we explore zero-shot approaches for political event ontology relation classification, by leveraging knowledge from established annotation codebooks. Our study encompasses both ChatGPT and a novel natural language inference (NLI) based approach named ZSP. ZSP adopts a tree-query framework that deconstructs the task into context, modality, and class disambiguation levels. This framework improves interpretability, efficiency, and adaptability to schema changes. By conducting extensive experiments on our newly curated datasets, we pinpoint the instability issues within ChatGPT and highlight the superior performance of ZSP. ZSP achieves an impressive 40% improvement in F1 score for fine-grained Rootcode classification. ZSP demonstrates competitive performance compared to supervised BERT models, positioning it as a valuable tool for event record validation and ontology development. Our work underscores the potential of leveraging transfer learning and existing expertise to enhance the efficiency and scalability of research in the field.

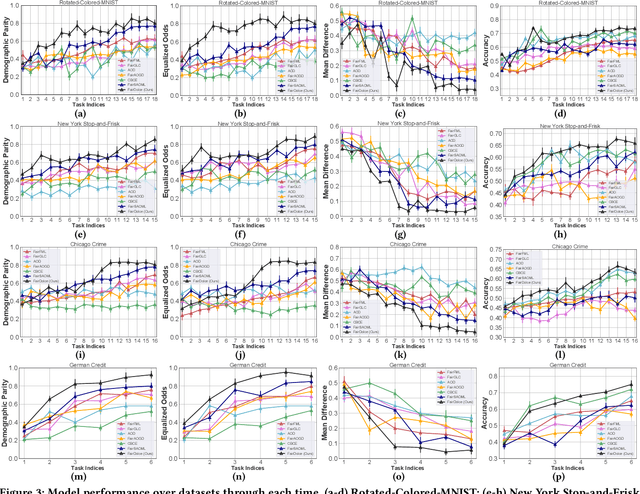

Towards Fair Disentangled Online Learning for Changing Environments

May 31, 2023

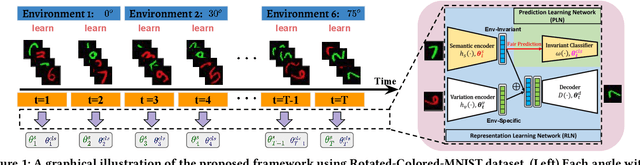

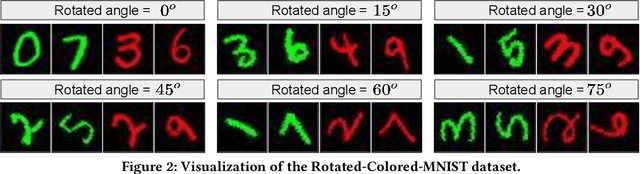

Abstract: In the problem of online learning for changing environments, data are sequentially received one after another over time, and their distribution assumptions may vary frequently. Although existing methods demonstrate the effectiveness of their learning algorithms by providing a tight bound on either dynamic regret or adaptive regret, most of them completely ignore learning with model fairness, defined as the statistical parity across different sub-populations (e.g., race and gender). Another drawback is that when adapting to a new environment, an online learner needs to update model parameters with a global change, which is costly and inefficient. Inspired by the sparse mechanism shift hypothesis, we claim that changing environments in online learning can be attributed to partial changes in learned parameters that are specific to environments, while the rest remain invariant to changing environments. To this end, in this paper, we propose a novel algorithm under the assumption that data collected at each time can be disentangled into two representations, an environment-invariant semantic factor and an environment-specific variation factor. The semantic factor is further used for fair prediction under a group fairness constraint. To evaluate the sequence of model parameters generated by the learner, a novel regret is proposed that takes a mixed form of dynamic and static regret metrics followed by a fairness-aware long-term constraint. The detailed analysis provides theoretical guarantees for loss regret and violation of cumulative fairness constraints. Empirical evaluations on real-world datasets demonstrate that our proposed method sequentially outperforms baseline methods in model accuracy and fairness.

An Automated Vulnerability Detection Framework for Smart Contracts

Jan 20, 2023

Abstract: With the increasing adoption of blockchain technology in providing decentralized solutions to various problems, smart contracts have become more popular, to the point that billions of US dollars are currently exchanged every day through such technology. Meanwhile, various vulnerabilities in smart contracts have been exploited by attackers to steal cryptocurrencies worth millions of dollars. The automatic detection of smart contract vulnerabilities is therefore an essential research problem. Existing solutions to this problem rely particularly on human experts to define features or rules to detect vulnerabilities. However, this often causes many vulnerabilities to be ignored, and such solutions are inefficient in detecting new vulnerabilities. In this study, to overcome such challenges, we propose a framework to automatically detect vulnerabilities in smart contracts on the blockchain. More specifically, first, we utilize novel feature vector generation techniques from the bytecode of smart contracts, since the source code of smart contracts is rarely publicly available. Next, the collected vectors are fed into our novel metric learning-based deep neural network (DNN) to get the detection result. We conduct comprehensive experiments on large-scale benchmarks, and the quantitative results demonstrate the effectiveness and efficiency of our approach.
